Copyright © 2015 Bert N. Langford (Images may be subject to copyright. Please send feedback)

Welcome to Our Generation USA!

Under this web page, Artificial Intelligence (AI), we cover both the (many) positive and (some) negative impacts of the many emerging technologies that AI enables.

Artificial Intelligence (AI):

Articles Covered below:

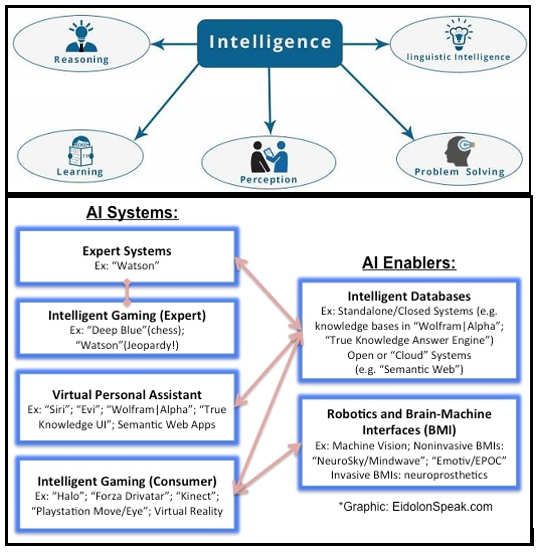

TOP: AI Systems: What is Intelligence Composed of?;

BOTTOM: AI & Artificial Cognitive Systems

- 14 Ways AI Will Benefit Or Harm Society (Forbes Technology Council March 1, 2018)

- These are the jobs most at risk of automation according to Oxford University: Is yours one of them?

- YouTube Video: What is Artificial Intelligence Exactly?

- YouTube Video: Bill Gates on the impact of AI on the job market

- YouTube Video: The Future of Artificial Intelligence (Stanford University)

14 Ways AI Will Benefit Or Harm Society (Forbes Technology Council March 1, 2018)

"Artificial intelligence (AI) is on the rise both in business and in the world in general. How beneficial is it really to your business in the long run? Sure, it can take over those time-consuming and mundane tasks that are bogging your employees down, but at what cost?

With AI spending expected to reach $46 billion by 2020, according to an IDC report, there’s no sign of the technology slowing down. Adding AI to your business may be the next step as you look for ways to advance your operations and increase your performance.

Forbes Technology Council is an invitation-only community for world-class CIOs, CTOs and technology executives. To understand how AI will impact your business going forward, 14 members of Forbes Technology Council weigh in on the concerns about artificial intelligence and provide reasons why AI is either a detriment or a benefit to society. Here is what they had to say:

1. Enhances Efficiency And Throughput

Concerns about disruptive technologies are common. A recent example is automobiles -- it took years to develop regulation around the industry to make it safe. That said, AI today is a huge benefit to society because it enhances our efficiency and throughput, while creating new opportunities for revenue generation, cost savings and job creation. - Anand Sampat, Datmo

2. Frees Up Humans To Do What They Do Best

Humans are not best served by doing tedious tasks. Machines can do that, so this is where AI can provide a true benefit. This allows us to do the more interpersonal and creative aspects of work. - Chalmers Brown, Due

3. Adds Jobs, Strengthens The Economy

We all see the headlines: Robots and AI will destroy jobs. This is fiction rather than fact. AI encourages a gradual evolution in the job market which, with the right preparation, will be positive. People will still work, but they’ll work better with the help of AI. The unparalleled combination of human and machine will become the new normal in the workforce of the future. - Matthew Lieberman, PwC

4. Leads To Loss Of Control

If machines do get smarter than humans, there could be a loss of control that can be a detriment. Whether that happens or whether certain controls can be put in place remains to be seen. - Muhammed Othman, Calendar

5. Enhances Our Lifestyle

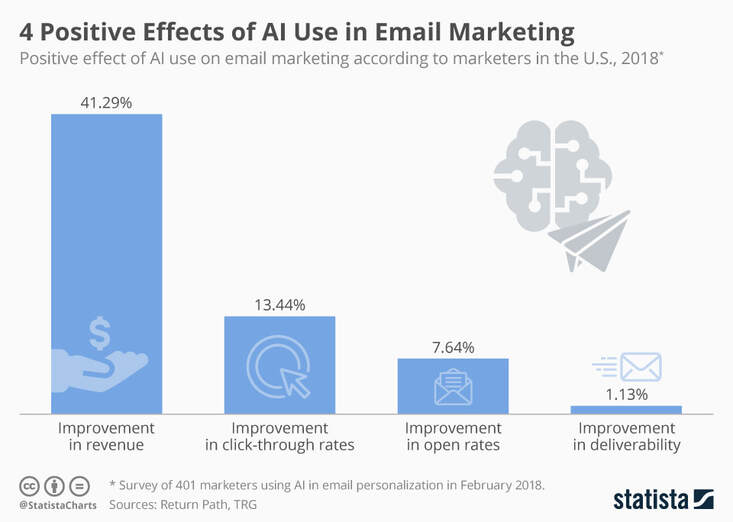

The rise of AI in our society will enhance our lifestyle and create more efficient businesses. Some of the mundane tasks like answering emails and data entry will be done by intelligent assistants. Smart homes will also reduce energy usage and provide better security, marketing will be more targeted, and we will get better health care thanks to better diagnoses. - Naresh Soni, Tsunami ARVR

6. Supervises Learning For Telemedicine

AI is a technology that can be used for both good and nefarious purposes, so there is a need to be vigilant. The latest technologies typically seem to be applied for the benefit of the wealthiest among us, but AI has the potential to extend knowledge and understanding to a broader population -- e.g. image-based AI diagnoses of medical conditions could allow for a more comprehensive deployment of telemedicine. - Harald Quintus-Bosz, Cooper Perkins, Inc.

7. Creates Unintended And Unforeseen Consequences

While fears about killer robots grab headlines, unintended and unforeseen consequences of artificial intelligence need attention today, as we're already living with them. For example, it is believed that Facebook's newsfeed algorithm influenced an election outcome that affected geopolitics. How can we better anticipate and address such possible outcomes in the future? - Simon Smith, BenchSci

8. Increases Automation

There will be economic consequences to the widespread adoption of machine learning and other AI technologies. AI is capable of performing tasks that would once have required intensive human labor or not have been possible at all. The major benefit for business will be a reduction in operational costs brought about by AI automation -- whether that’s a net positive for society remains to be seen. - Vik Patel, Nexcess

9. Elevates The Condition Of Mankind

The ability for technology to solve more problems, answer more questions and innovate with a number of inputs beyond the capacity of the human brain can certainly be used for good or ill. If history is any guide, the improvement of technology tends to elevate the condition of mankind and allow us to focus on higher order functions and an improved quality of life. - Wade Burgess, Shiftgig

10. Solves Complex Social Problems

Much of the fear with AI is due to the misunderstanding of what it is and how it should be applied. Although AI has promise for solving complex social problems, there are ethical issues and biases we must still explore. We are just beginning to understand how AI can be applied to meaningful problems. As our use of AI matures, we will find it to be a clear benefit in our lives. - Mark Benson, Exosite, LLC

11. Improves Demand Side Management

AI is a benefit to society because machines can become smarter over time and increase efficiencies. Additionally, computers are not susceptible to the same probability of errors as human beings are. From an energy standpoint, AI can be used to analyze and research historical data to determine how to most efficiently distribute energy loads from a grid perspective. - Greg Sarich, CLEAResult

12. Benefits Multiple Industries

Society has and will continue to benefit from AI based on character/facial recognition, digital content analysis and accuracy in identifying patterns, whether they are used for health sciences, academic research or technology applications. AI risks are real if we don't understand the quality of the incoming data and set AI rules which are making granular trade-off decisions at increasing computing speeds. - Mark Butler, Qualys.com

13. Absolves Humans Of All Responsibility

It is one thing to use machine learning to predict and help solve problems; it is quite another to use these systems to purposely control and act in ways that will make people unnecessary.

When machine intelligence exceeds our ability to understand it, or it becomes superior intelligence, we should take care to not blindly follow its recommendation and absolve ourselves of all responsibility. - Chris Kirby, Voices.com

14. Extends And Expands Creativity

Artificial intelligence is the biggest opportunity of our lifetime to extend and expand human creativity and ingenuity. The two main concerns that the fear-mongers raise are around AI leading to job losses in society and AI going rogue and taking control of the human race.

I believe that both these concerns raised by critics are moot or solvable. - Ganesh Padmanabhan, CognitiveScale, Inc

[End of Article #1]

___________________________________________________________________________

These are the jobs most at risk of automation according to Oxford University: Is yours one of them? (The Telegraph September 27, 2017)

In his speech at the 2017 Labour Party conference, Jeremy Corbyn outlined his desire to "urgently... face the challenge of automation", which he called a "threat in the hands of the greedy".

Whether or not Corbyn is planning a potentially controversial 'robot tax' wasn't clear from his speech, but addressing the forward march of automation is a savvy move designed to appeal to voters in low-paying, routine work.

___________________________________________________________________________

Artificial Intelligence (AI) by Wikipedia 10/29/2023:

In a Nutshell:

AI Expanded follows:

Artificial intelligence (AI) is the intelligence of machines or software, as opposed to the intelligence of humans or animals. It is also the field of study in computer science that develops and studies intelligent machines. "AI" may also refer to the machines themselves.

AI technology is widely used throughout industry, government and science. Some high-profile applications are:

Artificial intelligence was founded as an academic discipline in 1956. The field went through multiple cycles of optimism followed by disappointment and loss of funding, but after 2012, when deep learning surpassed all previous AI techniques, there was a vast increase in funding and interest.

The various sub-fields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include:

General intelligence (the ability to solve an arbitrary problem) is among the field's long-term goals. To solve these problems, AI researchers have adapted and integrated a wide range of problem-solving techniques, including:

AI also draws upon psychology, linguistics, philosophy, neuroscience and many other fields.

Goals:

The general problem of simulating (or creating) intelligence has been broken down into sub-problems. These consist of particular traits or capabilities that researchers expect an intelligent system to display. The traits described below have received the most attention and cover the scope of AI research:

Reasoning, problem-solving:

Early researchers developed algorithms that imitated step-by-step reasoning that humans use when they solve puzzles or make logical deductions. By the late 1980s and 1990s, methods were developed for dealing with uncertain or incomplete information, employing concepts from probability and economics.

Many of these algorithms are insufficient for solving large reasoning problems because they experience a "combinatorial explosion": they become exponentially slower as the problems grow larger. Even humans rarely use the step-by-step deduction that early AI research could model; they solve most of their problems using fast, intuitive judgments. Accurate and efficient reasoning is an unsolved problem.

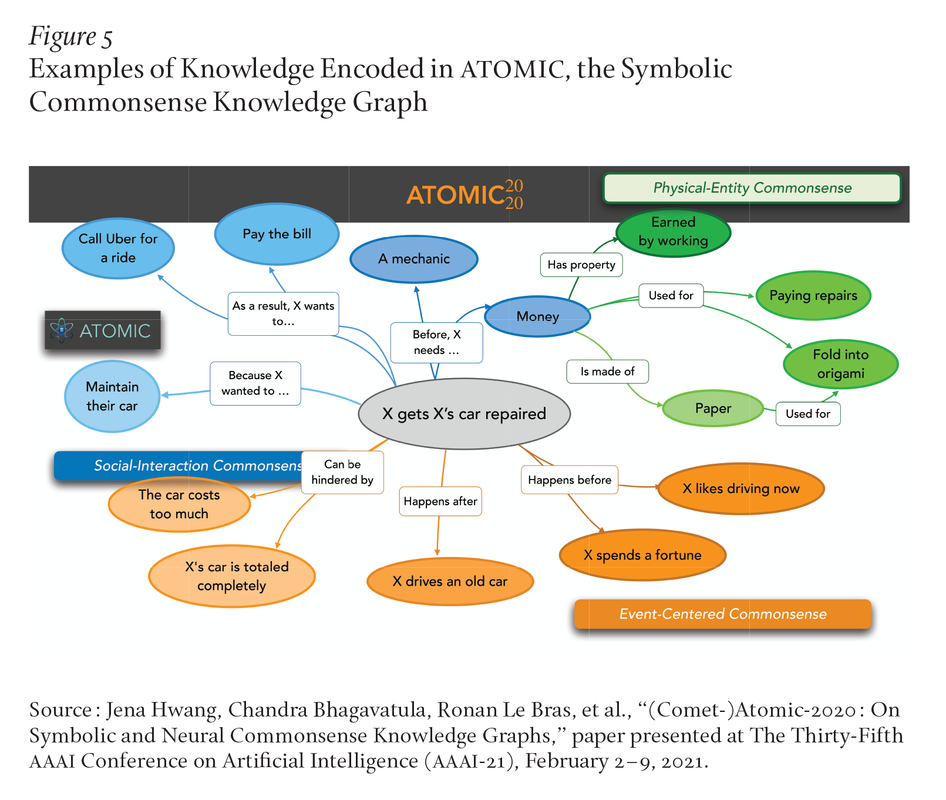

Knowledge representation:

Knowledge representation and knowledge engineering allow AI programs to answer questions intelligently and make deductions about real-world facts. Formal knowledge representations are used in:

A knowledge base is a body of knowledge represented in a form that can be used by a program. An ontology is the set of objects, relations, concepts, and properties used by a particular domain of knowledge.

Knowledge bases need to represent things such as:

Among the most difficult problems in KR are:

Knowledge acquisition is the difficult problem of obtaining knowledge for AI applications. Modern AI gathers knowledge by "scraping" the internet (including Wikipedia). The knowledge itself was collected by the volunteers and professionals who published the information (who may or may not have agreed to provide their work to AI companies).

This "crowdsourced" technique does not guarantee that the knowledge is correct or reliable. The knowledge of large language models (such as ChatGPT) is highly unreliable: they generate misinformation and falsehoods (known as "hallucinations"). Providing accurate knowledge for these modern AI applications is an unsolved problem.

Planning and decision making:

An "agent" is anything that perceives and takes actions in the world. A rational agent has goals or preferences and takes actions to make them happen.

The decision making agent assigns a number to each situation (called the "utility") that measures how much the agent prefers it. For each possible action, it can calculate the "expected utility": the utility of all possible outcomes of the action, weighted by the probability that the outcome will occur. It can then choose the action with the maximum expected utility.
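As a minimal sketch of this rule in Python, the snippet below scores each action by its expected utility and picks the best one. The two actions, their outcome probabilities and the utility values are hypothetical numbers invented for illustration.

```python
from typing import Dict, List, Tuple

# Each action maps to its possible outcomes as (probability, utility) pairs.
# All numbers are hypothetical and chosen only to illustrate the arithmetic.
Outcomes = List[Tuple[float, float]]


def expected_utility(outcomes: Outcomes) -> float:
    """Utility of every outcome, weighted by the probability that it occurs."""
    return sum(p * u for p, u in outcomes)


def best_action(actions: Dict[str, Outcomes]) -> str:
    """Choose the action with the maximum expected utility."""
    return max(actions, key=lambda a: expected_utility(actions[a]))


actions = {
    "take_umbrella": [(0.3, 70.0), (0.7, 80.0)],   # (rain, no rain)
    "leave_umbrella": [(0.3, 0.0), (0.7, 100.0)],  # (rain, no rain)
}

for name, outcomes in actions.items():
    print(f"{name}: {expected_utility(outcomes):.1f}")
print("choose:", best_action(actions))
```

With these numbers, taking the umbrella scores 0.3 × 70 + 0.7 × 80 = 77 against 70 for leaving it, so it is the action chosen.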

In classical planning, the agent knows exactly what the effect of any action will be. In most real-world problems, however, the agent may not be certain about the situation it is in (it is "unknown" or "unobservable") and it may not know for certain what will happen after each possible action (it is not "deterministic"). It must choose an action by making a probabilistic guess and then reassess the situation to see if the action worked.

In some problems, the agent's preferences may be uncertain, especially if there are other agents or humans involved. These can be learned (e.g., with inverse reinforcement learning) or the agent can seek information to improve its preferences. Information value theory can be used to weigh the value of exploratory or experimental actions. The space of possible future actions and situations is typically intractably large, so the agents must take actions and evaluate situations while being uncertain what the outcome will be.

A Markov decision process has a transition model that describes the probability that a particular action will change the state in a particular way, and a reward function that supplies the utility of each state and the cost of each action. A policy associates a decision with each possible state.

The policy could be calculated (e.g., by iteration), be heuristic, or be learned.
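Calculating a policy "by iteration" can be illustrated with value iteration, one standard method for solving a Markov decision process. The sketch below assumes a hypothetical two-state machine-maintenance problem; its states, actions, probabilities, rewards and discount factor `gamma` are all invented, and for brevity each transition carries a single reward that combines the utility of the outcome with the cost of the action.

```python
from typing import Dict, List, Tuple

# transitions[state][action] = [(probability, next_state, reward), ...]
# Each reward folds together the utility of the outcome and the cost of the action.
Transitions = Dict[str, Dict[str, List[Tuple[float, str, float]]]]


def q_value(outcomes: List[Tuple[float, str, float]], values: Dict[str, float], gamma: float) -> float:
    """Expected reward plus discounted value of the next state."""
    return sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)


def value_iteration(transitions: Transitions, gamma: float = 0.9, tol: float = 1e-8):
    """Apply Bellman updates until the values stop changing, then read off a greedy policy."""
    values = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s, acts in transitions.items():
            best = max(q_value(outs, values, gamma) for outs in acts.values())
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    policy = {
        s: max(acts, key=lambda a, acts=acts: q_value(acts[a], values, gamma))
        for s, acts in transitions.items()
    }
    return values, policy


# Hypothetical machine-maintenance problem.
transitions: Transitions = {
    "ok": {
        "run": [(0.8, "ok", 10.0), (0.2, "broken", 10.0)],
        "maintain": [(1.0, "ok", 5.0)],
    },
    "broken": {
        "repair": [(1.0, "ok", -5.0)],
    },
}

values, policy = value_iteration(transitions)
print(policy)
print({s: round(v, 2) for s, v in values.items()})
```

Under these assumptions the greedy policy runs the machine while it is "ok" and repairs it when "broken": the higher reward from running outweighs the 20% risk of a breakdown.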

Game theory describes rational behavior of multiple interacting agents, and is used in AI programs that make decisions that involve other agents.

Learning:

Machine learning is the study of programs that can improve their performance on a given task automatically. It has been a part of AI from the beginning.

There are several kinds of machine learning:

Computational learning theory can assess learners by computational complexity, by sample complexity (how much data is required), or by other notions of optimization.

Natural language processing:

Natural language processing (NLP) allows programs to read, write and communicate in human languages such as English.

Specific problems include:

Early work, based on Noam Chomsky's generative grammar and semantic networks, had difficulty with word-sense disambiguation unless restricted to small domains called "micro-worlds" (due to the common sense knowledge problem).

Modern deep learning techniques for NLP include word embedding (representing words as vectors, learned from how often one word appears near another), transformers (which find patterns in text), and others.

In 2019, generative pre-trained transformer (or "GPT") language models began to generate coherent text, and by 2023 these models were able to achieve human-level scores on the bar exam, the SAT, the GRE and many other real-world tests.

Perception:

Machine perception is the ability to use input from sensors (such as cameras and microphones) to deduce aspects of the world.

Computer vision is the ability to analyze visual input. The field includes:

Robotics uses AI.

Social intelligence:

Affective computing is an interdisciplinary umbrella that comprises systems that recognize, interpret, process or simulate human feeling, emotion and mood.

For example, some virtual assistants are programmed to speak conversationally or even to banter humorously; this makes them appear more sensitive to the emotional dynamics of human interaction, or otherwise facilitates human–computer interaction.

However, this tends to give naïve users an unrealistic conception of how intelligent existing computer agents actually are. Moderate successes related to affective computing include:

General intelligence:

A machine with artificial general intelligence should be able to solve a wide variety of problems with breadth and versatility similar to human intelligence.

Tools:

AI research uses a wide variety of tools to accomplish the goals above.

Search and optimization:

AI can solve many problems by intelligently searching through many possible solutions. There are two very different kinds of search used in AI:

State space search searches through a tree of possible states to try to find a goal state. For example, planning algorithms search through trees of goals and subgoals, attempting to find a path to a target goal, a process called means-ends analysis.

Simple exhaustive searches are rarely sufficient for most real-world problems: the search space (the number of places to search) quickly grows to astronomical numbers. The result is a search that is too slow or never completes. "Heuristics" or "rules of thumb" can help to prioritize choices that are more likely to reach a goal.
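State space search guided by a heuristic can be sketched with A* search, one widely used heuristic method (chosen here as an example, not the only option). This minimal Python version assumes a hypothetical grid world where `.` cells are free and `#` cells are walls; the Manhattan distance to the goal serves as the rule of thumb that tells the search which states to expand first.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Pos = Tuple[int, int]


def astar(grid: List[str], start: Pos, goal: Pos) -> Optional[List[Pos]]:
    """A* on a grid of '.' (free) and '#' (wall) cells, moving up/down/left/right at cost 1."""

    def h(p: Pos) -> int:
        # Manhattan distance: never overestimates the remaining number of moves.
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    frontier: List[Tuple[int, int, Pos]] = [(h(start), 0, start)]  # (g + h, g, cell)
    came_from: Dict[Pos, Optional[Pos]] = {start: None}
    best_g: Dict[Pos, int] = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path: List[Pos] = []
            node: Optional[Pos] = cell
            while node is not None:  # walk back to the start
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale entry: a cheaper route to this cell was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get(nxt, ng + 1):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable


grid = [
    "....",
    ".##.",
    "....",
]
path = astar(grid, start=(0, 0), goal=(2, 3))
print(path)
```

Because the Manhattan distance never overestimates the true number of moves on such a grid, the path A* returns is a shortest one.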

Adversarial search is used for game-playing programs, such as chess or Go. It searches through a tree of possible moves and counter-moves, looking for a winning position.
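Adversarial search can be illustrated with the minimax algorithm on a tiny hand-made game tree. In this sketch (an assumption for illustration), inner lists are positions, integers are final scores from the first player's point of view, and the two players alternate between maximizing and minimizing that score.

```python
from typing import List, Union

# A game tree: either a final score (int) or a list of child positions.
Tree = Union[int, List["Tree"]]


def minimax(node: Tree, maximizing: bool) -> int:
    """Value of a position, assuming both players play optimally from here on."""
    if isinstance(node, int):
        return node  # leaf: score from the first (maximizing) player's point of view
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


# The first player chooses a branch; the opponent then chooses a leaf within it.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
print(minimax(tree, maximizing=True))  # 3
```

The first player picks the branch whose worst case is best: the minima of the three branches are 3, 2 and 2, so the value of the game is 3.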

Local search uses mathematical optimization to find a numeric solution to a problem. It begins with some form of a guess and then refines the guess incrementally until no more refinements can be made.

These algorithms can be visualized as blind hill climbing: we begin the search at a random point on the landscape, and then, by jumps or steps, we keep moving our guess uphill until we reach the top. This process is called stochastic hill climbing.
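The uphill search described above can be sketched as stochastic hill climbing on a one-dimensional landscape. The objective function, step size, iteration count and seed below are hypothetical choices; the landscape has a single peak at x = 2, so the climber cannot get stuck on a lower hill as it could on a bumpier one.

```python
import random
from typing import Callable


def stochastic_hill_climb(f: Callable[[float], float], x0: float, step: float = 0.1,
                          iters: int = 10_000, seed: int = 0) -> float:
    """Maximize f by proposing random nearby moves and keeping only those that go uphill."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    for _ in range(iters):
        candidate = x + rng.uniform(-step, step)  # random jump to a nearby point
        fc = f(candidate)
        if fc > fx:  # accept only moves that improve the score
            x, fx = candidate, fc
    return x


# A single-peaked landscape whose top is at x = 2.
best = stochastic_hill_climb(lambda x: -(x - 2.0) ** 2, x0=-5.0)
print(round(best, 3))
```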

Evolutionary computation uses a form of optimization search. For example, an evolutionary algorithm may begin with a population of organisms (the guesses) and then allow them to mutate and recombine, selecting only the fittest to survive each generation (refining the guesses).
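A minimal genetic algorithm, one common form of evolutionary computation, is sketched below. It assumes the toy "OneMax" problem, where each organism is a list of bits and fitness is simply the number of 1s; the population size, mutation rate and number of generations are illustrative values, not recommendations.

```python
import random
from typing import List

Genome = List[int]


def fitness(genome: Genome) -> int:
    """OneMax fitness: the number of 1 bits."""
    return sum(genome)


def evolve(length: int = 20, pop_size: int = 30, generations: int = 60,
           mutation_rate: float = 0.02, seed: int = 1) -> Genome:
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]  # selection: only the fitter half survives
        children: List[Genome] = []
        while len(survivors) + len(children) < pop_size:
            mom, dad = rng.sample(survivors, 2)
            cut = rng.randrange(1, length)  # one-point crossover (recombination)
            child = mom[:cut] + dad[cut:]
            # mutation: flip each bit with a small probability
            child = [1 - bit if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = survivors + children
    return max(population, key=fitness)


best = evolve()
print(best, fitness(best))
```

Keeping the fitter half unchanged each generation means the best fitness can never go down; crossover and mutation supply the new variation.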

Distributed search processes can coordinate via swarm intelligence algorithms. Two popular swarm algorithms used in search are particle swarm optimization (inspired by bird flocking) and ant colony optimization (inspired by ant trails).

Neural networks and statistical classifiers (discussed below) also use a form of local search, where the "landscape" to be searched is formed by learning.

Logic:

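Formal logic is used for reasoning and knowledge representation. Formal logic comes in two main forms: propositional logic (which operates on statements that are true or false, joined by connectives such as "and", "or", "not" and "implies") and predicate logic (which also operates on objects, predicates and relations, and uses quantifiers such as "every X is a Y").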

Logical inference (or deduction) is the process of proving a new statement (conclusion) from other statements that are already known to be true (the premises). A logical knowledge base also handles queries and assertions as a special case of inference. An inference rule describes what is a valid step in a proof. The most general inference rule is resolution.

Inference can be reduced to performing a search to find a path that leads from premises to conclusions, where each step is the application of an inference rule. Inference performed this way is intractable except for short proofs in restricted domains. No efficient, powerful and general method has been discovered.
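The idea of inference as a search from premises to a conclusion can be sketched with forward chaining, a simpler procedure than resolution that applies only to rules of the form "if all of these facts hold, conclude that one". The facts and rules below are hypothetical.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule (premises, conclusion) reads: "if every premise is known, conclude the conclusion".
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule], query: str) -> bool:
    """Derive new facts from known ones until the query appears or nothing new follows."""
    known = set(facts)
    changed = True
    while changed and query not in known:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)  # one inference step (modus ponens)
                changed = True
    return query in known


rules: List[Rule] = [
    (frozenset({"sparrow"}), "bird"),
    (frozenset({"bird", "healthy"}), "can_fly"),
]

print(forward_chain({"sparrow", "healthy"}, rules, "can_fly"))  # True
print(forward_chain({"sparrow"}, rules, "can_fly"))             # False
```

Each pass adds any conclusion whose premises are already known; the loop stops once the query is proved or a full pass adds nothing new.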

Fuzzy logic assigns a "degree of truth" between 0 and 1, so it can handle propositions that are vague or only partially true. Non-monotonic logics are designed to handle default reasoning. Other specialized versions of logic have been developed to describe many complex domains (see knowledge representation above).
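A degree of truth can be sketched with a simple membership function. The 160 cm and 190 cm thresholds for "tall" are arbitrary assumptions, and the connectives use the common minimum/maximum definitions (other definitions exist).

```python
def tall(height_cm: float) -> float:
    """Degree of truth of "this person is tall": 0 at or below 160 cm, 1 at or above 190 cm."""
    return min(1.0, max(0.0, (height_cm - 160.0) / 30.0))  # linear in between


def fuzzy_and(a: float, b: float) -> float:
    return min(a, b)


def fuzzy_or(a: float, b: float) -> float:
    return max(a, b)


def fuzzy_not(a: float) -> float:
    return 1.0 - a


print(tall(175))                  # 0.5: "somewhat tall"
print(fuzzy_and(tall(175), 0.8))  # 0.5
print(fuzzy_not(tall(185)))
```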

Probabilistic methods for uncertain reasoning:

Many problems in AI (including in reasoning, planning, learning, perception, and robotics) require the agent to operate with incomplete or uncertain information. AI researchers have devised a number of tools to solve these problems using methods from probability theory and economics.

Bayesian networks are a very general tool that can be used for many problems, including:

Probabilistic algorithms can also be used for filtering, prediction, smoothing and finding explanations for streams of data, helping perception systems to analyze processes that occur over time (e.g., hidden Markov models or Kalman filters).
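Filtering with a hidden Markov model can be sketched with the forward algorithm, which alternates a prediction step (apply the transition model) and an update step (weight by the observation likelihood, then normalize). The weather model below, in which rain is hidden and only an umbrella is observed, uses illustrative probabilities.

```python
from typing import List, Sequence


def forward_filter(prior: List[float], transition: List[List[float]],
                   emission: List[List[float]], observations: Sequence[int]) -> List[List[float]]:
    """HMM forward algorithm: belief over hidden states after each observation.

    transition[i][j] = P(next state j | current state i)
    emission[i][o]   = P(observation o | state i)
    """
    n = len(prior)
    belief = list(prior)
    history = []
    for obs in observations:
        # Predict: push the current belief through the transition model.
        predicted = [sum(belief[i] * transition[i][j] for i in range(n)) for j in range(n)]
        # Update: weight by how likely the observation is in each state, then normalize.
        unnormalized = [predicted[j] * emission[j][obs] for j in range(n)]
        total = sum(unnormalized)
        belief = [u / total for u in unnormalized]
        history.append(belief)
    return history


# States: 0 = rain, 1 = no rain. Observations: 0 = umbrella seen, 1 = no umbrella.
prior = [0.5, 0.5]
transition = [[0.7, 0.3], [0.3, 0.7]]
emission = [[0.9, 0.1], [0.2, 0.8]]
beliefs = forward_filter(prior, transition, emission, [0, 0])
print([round(b[0], 3) for b in beliefs])
```

Seeing the umbrella on two days running raises the belief in rain from 0.5 to about 0.82 and then about 0.88.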

Precise mathematical tools have been developed that analyze how an agent can make choices and plan, using:

These tools include models such as:

Classifiers and statistical learning methods:

The simplest AI applications can be divided into two types: classifiers (e.g. "if shiny then diamond"), on one hand, and controllers (e.g. "if diamond then pick up"), on the other hand.

Classifiers are functions that use pattern matching to determine the closest match. They can be fine-tuned based on chosen examples using supervised learning. Each pattern (also called an "observation") is labeled with a certain predefined class. All the observations combined with their class labels are known as a data set. When a new observation is received, that observation is classified based on previous experience.

There are many kinds of classifiers in use. The decision tree is the simplest and most widely used symbolic machine learning algorithm.

The k-nearest neighbor algorithm was the most widely used analogical AI until the mid-1990s, when kernel methods such as the support vector machine (SVM) displaced it.
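Classification "based on previous experience" can be sketched with k-nearest neighbor: a new observation receives the label most common among the k labeled examples closest to it. The two-feature data set and the choice k = 3 below are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], str]  # (feature vector, class label)


def knn_classify(data: List[Example], query: Sequence[float], k: int = 3) -> str:
    """Label a new observation by majority vote among its k closest labeled examples."""
    nearest = sorted(data, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


data: List[Example] = [
    ((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((0.9, 1.1), "A"),
    ((5.0, 5.0), "B"), ((5.2, 4.9), "B"), ((4.8, 5.1), "B"),
]
print(knn_classify(data, (1.1, 1.0)))  # A
print(knn_classify(data, (5.1, 5.0)))  # B
```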

The naive Bayes classifier is reportedly the "most widely used learner" at Google, due in part to its scalability. Neural networks are also used as classifiers.

Artificial neural networks:

Artificial neural networks were inspired by the design of the human brain: a simple "neuron" N accepts input from other neurons, each of which, when activated (or "fired"), casts a weighted "vote" for or against whether neuron N should itself activate.
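The weighted "vote" can be sketched as a single neuron that sums its weighted inputs, adds a bias and passes the total through the logistic function. The weights and bias are hypothetical values picked by hand; a trained network would learn them.

```python
import math
from typing import Sequence


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """One artificial neuron: a weighted sum of input "votes" squashed by the logistic function."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # close to 1 means the neuron "fires"


# The first input votes for firing (positive weight), the second against (negative weight).
print(neuron([1.0, 0.0], [4.0, -4.0], bias=-2.0))  # about 0.88: fires
print(neuron([0.0, 1.0], [4.0, -4.0], bias=-2.0))  # about 0.0025: stays quiet
```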

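In practice, the input "neurons" are a list of numbers, the "weights" are a matrix, and each next layer is computed as a set of weighted sums (a matrix-vector product) passed through a nonlinear activation function, such as the logistic function.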

"The resemblance to real neural cells and structures is superficial", according to Russell and Norvig.

Learning algorithms for neural networks use local search to choose the weights that will get the right output for each input during training. The most common training technique is the backpropagation algorithm. Neural networks learn to model complex relationships between inputs and outputs and find patterns in data.
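For a single neuron, backpropagation reduces to applying the chain rule once, so the training loop can be sketched in a few lines. This hypothetical example fits one sigmoid neuron to the logical AND function by gradient descent on squared error; the learning rate and epoch count are illustrative assumptions, and a multi-layer network would pass the same kind of error signal backward through every layer.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data: List[Tuple[Tuple[float, float], float]],
                 lr: float = 0.5, epochs: int = 5000) -> Tuple[float, float, float]:
    """Fit weights w1, w2 and bias b of one sigmoid neuron by per-example gradient descent."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            out = sigmoid(w1 * x1 + w2 * x2 + b)
            # Chain rule: dE/dz for E = 0.5 * (out - target)**2 with out = sigmoid(z).
            grad = (out - target) * out * (1.0 - out)
            w1 -= lr * grad * x1
            w2 -= lr * grad * x2
            b -= lr * grad
    return w1, w2, b


AND = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]
w1, w2, b = train_neuron(AND)
for (x1, x2), target in AND:
    print((x1, x2), round(sigmoid(w1 * x1 + w2 * x2 + b), 3), "target", target)
```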

In theory, a neural network with enough hidden units can approximate any continuous function (the universal approximation theorem).

In feedforward neural networks the signal passes in only one direction. Recurrent neural networks feed the output signal back into the input, which allows short-term memories of previous input events.

Long short-term memory (LSTM) is the most successful network architecture for recurrent networks.

Perceptrons use only a single layer of neurons, whereas deep learning uses multiple layers. Convolutional neural networks strengthen the connection between neurons that are "close" to each other – this is especially important in image processing, where a local set of neurons must identify an "edge" before the network can identify an object.
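The "edge" detection mentioned above can be sketched in one dimension: sliding a two-element kernel along a row of pixel brightness values responds strongly only where neighboring pixels differ. The kernel here is hand-picked for illustration, whereas a convolutional network learns its kernels during training; like most CNN implementations, the sketch computes a cross-correlation (the kernel is not flipped).

```python
from typing import List, Sequence


def convolve1d(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Slide the kernel along the signal with no padding ("valid" positions only)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k)) for i in range(len(signal) - k + 1)]


# A row of pixel brightnesses with a dark-to-bright step (an "edge") in the middle.
row = [0, 0, 0, 0, 9, 9, 9, 9]
edges = convolve1d(row, [-1, 1])  # difference between each pixel and its right-hand neighbor
print(edges)  # [0, 0, 0, 9, 0, 0, 0]
```

The single nonzero output marks the position of the dark-to-bright step.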

Deep learning:

Deep learning uses several layers of neurons between the network's inputs and outputs.

The multiple layers can progressively extract higher-level features from the raw input. For example, in image processing, lower layers may identify edges, while higher layers may identify the concepts relevant to a human such as digits or letters or faces.

Deep learning has drastically improved the performance of programs in many important subfields of artificial intelligence, including:

The reason that deep learning performs so well in so many applications is not known as of 2023.

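The sudden success of deep learning in 2012–2015 did not occur because of some new discovery or theoretical breakthrough (deep neural networks and backpropagation had been described by many people, as far back as the 1950s) but because of two factors: a large increase in computing power (including the switch to GPUs) and the availability of vast amounts of training data, especially large curated datasets such as ImageNet.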

Specialized hardware and software:

In the late 2010s, graphics processing units (GPUs), increasingly designed with AI-specific enhancements and used with specialized software such as TensorFlow, replaced central processing units (CPUs) as the dominant means of training large-scale commercial and academic machine learning models. Historically, specialized languages such as Lisp, Prolog and others had been used.

Applications:

AI and machine learning technology is used in most of the essential applications of the 2020s, including:

There are also thousands of successful AI applications used to solve specific problems for specific industries or institutions. In a 2017 survey, one in five companies reported they had incorporated "AI" in some offerings or processes. A few examples are:

Game playing programs have been used since the 1950s to demonstrate and test AI's most advanced techniques. Deep Blue became the first computer chess-playing system to beat a reigning world chess champion, Garry Kasparov, on 11 May 1997.

In 2011, in a Jeopardy! quiz show exhibition match, IBM's question answering system, Watson, defeated the two greatest Jeopardy! champions, Brad Rutter and Ken Jennings, by a significant margin.

In March 2016, AlphaGo won 4 out of 5 games of Go in a match with Go champion Lee Sedol, becoming the first computer Go-playing system to beat a professional Go player without handicaps. In 2017 it defeated Ke Jie, who at the time had held the world No. 1 ranking continuously for two years.

Other programs handle imperfect-information games, such as poker.

DeepMind in the 2010s developed a "generalized artificial intelligence" that could learn many diverse Atari games on its own.

In the early 2020s, generative AI gained widespread prominence. ChatGPT (initially based on GPT-3.5) and other large language models were tried by 14% of American adults.

The increasing realism and ease-of-use of AI-based text-to-image generators such as Midjourney, DALL-E, and Stable Diffusion sparked a trend of viral AI-generated photos. Widespread attention was gained by:

AlphaFold 2 (2020) demonstrated the ability to approximate, in hours rather than months, the 3D structure of a protein.

Ethics:

AI, like any powerful technology, has potential benefits and potential risks. AI may be able to advance science and find solutions for serious problems: Demis Hassabis of DeepMind hopes to "solve intelligence, and then use that to solve everything else".

However, as the use of AI has become widespread, several unintended consequences and risks have been identified.

Risks and harm, e.g.:

Privacy and copyright:

Machine learning algorithms require large amounts of data. The techniques used to acquire this data have raised concerns about privacy, surveillance and copyright.

Technology companies collect a wide range of data from their users, including online activity, geolocation data, video and audio.

For example, in order to build speech recognition algorithms, Amazon and others have recorded millions of private conversations and allowed temporary workers to listen to and transcribe some of them.

Opinions about this widespread surveillance range from those who see it as a necessary evil to those for whom it is clearly unethical and a violation of the right to privacy.

AI developers argue that this is the only way to deliver valuable applications, and they have developed several techniques that attempt to preserve privacy while still obtaining the data, such as data aggregation, de-identification and differential privacy.
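Differential privacy can be illustrated with the Laplace mechanism applied to a counting query: random noise with scale 1/epsilon is added before the count is released, so a smaller epsilon gives stronger privacy at the cost of accuracy. This minimal sketch assumes that adding or removing one person changes the true count by at most 1; the count and epsilon values are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) noise, drawn as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy, assuming sensitivity 1."""
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)
released = [round(private_count(1000, epsilon=0.5, rng=rng), 1) for _ in range(5)]
print(released)  # values scattered around 1000; no single release reveals the exact count
```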

Since 2016, some privacy experts, such as Cynthia Dwork, have begun to view privacy in terms of fairness; Brian Christian wrote that experts have pivoted "from the question of 'what they know' to the question of 'what they're doing with it'."

Generative AI is often trained on unlicensed copyrighted works, including in domains such as images or computer code; the output is then used under a rationale of "fair use". Experts disagree about how well, and under what circumstances, this rationale will hold up in courts of law; relevant factors may include "the purpose and character of the use of the copyrighted work" and "the effect upon the potential market for the copyrighted work".

In 2023, leading authors (including John Grisham and Jonathan Franzen) sued AI companies for using their work to train generative AI.

Misinformation:

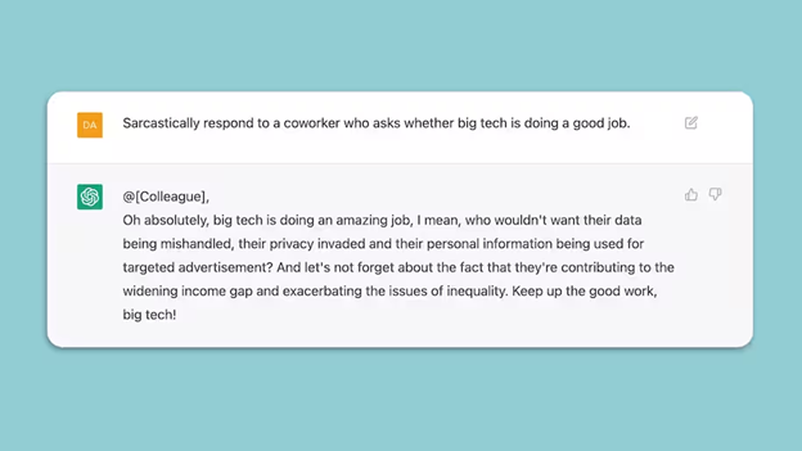

YouTube, Facebook and others use recommender systems to guide users to more content.

These AI programs were given the goal of maximizing user engagement (that is, the only goal was to keep people watching). The AI learned that users tended to choose misinformation, conspiracy theories, and extreme partisan content, and, to keep them watching, the AI recommended more of it.

Users also tended to watch more content on the same subject, so the AI led people into filter bubbles where they received multiple versions of the same misinformation. This convinced many users that the misinformation was true, and ultimately undermined trust in institutions, the media and the government.

The AI program had correctly learned to maximize its goal, but the result was harmful to society. After the U.S. election in 2016, major technology companies took steps to mitigate the problem.

In 2022, generative AI began to create images, audio, video and text that are indistinguishable from real photographs, recordings, films or human writing. It is possible for bad actors to use this technology to create massive amounts of misinformation or propaganda.

This technology has been widely distributed at minimal cost. Geoffrey Hinton (who was an instrumental developer of these tools) expressed his concerns about AI disinformation. He quit his job at Google to freely criticize the companies developing AI.

Algorithmic bias and fairness:

Machine learning applications will be biased if they learn from biased data. The developers may not be aware that the bias exists. Bias can be introduced by the way training data is selected and by the way a model is deployed.

If a biased algorithm is used to make decisions that can seriously harm people (as it can be in medicine, finance, recruitment, housing or policing), then the algorithm may cause discrimination.

Fairness in machine learning is the study of how to prevent the harm caused by algorithmic bias. It has become a serious area of academic study within AI. Researchers have discovered it is not always possible to define "fairness" in a way that satisfies all stakeholders.

On June 28, 2015, Google Photos's new image labeling feature mistakenly identified Jacky Alcine and a friend as "gorillas" because they were black. The system was trained on a dataset that contained very few images of black people, a problem called "sample size disparity".

Google "fixed" this problem by preventing the system from labelling anything as a "gorilla". Eight years later, in 2023, Google Photos still could not identify a gorilla, and neither could similar products from Apple, Facebook, Microsoft and Amazon.

COMPAS is a commercial program widely used by U.S. courts to assess the likelihood of a defendant becoming a recidivist. In 2016, Julia Angwin at ProPublica discovered that COMPAS exhibited racial bias, despite the fact that the program was not told the races of the defendants.

Although the error rate for both whites and blacks was calibrated equal at exactly 61%, the errors for each race were different: the system consistently overestimated the chance that a black person would re-offend and underestimated the chance that a white person would re-offend.

In 2017, several researchers showed that it was mathematically impossible for COMPAS to accommodate all possible measures of fairness when the base rates of re-offense were different for whites and blacks in the data.
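The impossibility result can be illustrated with invented confusion-matrix counts for two groups (these are not COMPAS data). Both groups get the same precision (the share of people flagged high-risk who actually re-offend), but because their base rates differ, their false positive and false negative rates come out very different.

```python
from typing import Dict


def rates(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Summary statistics from a confusion matrix (positive = predicted/actual re-offense)."""
    return {
        "base_rate": (tp + fn) / (tp + fp + fn + tn),  # share who actually re-offend
        "ppv": tp / (tp + fp),  # of those flagged high-risk, share who re-offend
        "fpr": fp / (fp + tn),  # non-re-offenders wrongly flagged high-risk
        "fnr": fn / (fn + tp),  # re-offenders wrongly rated low-risk
    }


# Invented counts for two groups of 100 people each (not real COMPAS data).
groups = {
    "group_x": rates(tp=48, fp=12, fn=12, tn=28),  # 60% base rate
    "group_y": rates(tp=16, fp=4, fn=14, tn=66),   # 30% base rate
}
for name, r in groups.items():
    print(name, {k: round(v, 3) for k, v in r.items()})
```

Group X, with the higher base rate, has far more non-re-offenders wrongly flagged (false positive rate 0.3 versus about 0.06), while group Y has more re-offenders rated low-risk (false negative rate about 0.47 versus 0.2).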

A program can make biased decisions even if the data does not explicitly mention a problematic feature (such as "race" or "gender"). The feature may correlate with other features (like "address", "shopping history" or "first name"), and the program can then make the same decisions based on these proxies as it would on "race" or "gender".

Moritz Hardt said “the most robust fact in this research area is that fairness through blindness doesn't work.”
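
A toy illustration of why blindness fails: the records, zip codes and group labels below are invented, and the "model" is only a majority-vote lookup by zip code standing in for a real learner. The model never sees the group label. Because zip code is correlated with group in this data, its approval rates still split sharply by group.

```python
from collections import Counter, defaultdict

# Invented historical records: (group, zip_code, past_decision), where
# past_decision is 1 for "approved" and 0 for "denied".
history = [
    ("A", "10001", 1), ("A", "10001", 1), ("A", "10002", 1),
    ("A", "10002", 0), ("A", "10002", 1), ("B", "20001", 0),
    ("B", "20001", 0), ("B", "20001", 1), ("B", "20002", 0),
    ("B", "10001", 1),
]


def fit_by_zip(records):
    """'Blind' model: learn the majority past decision for each zip code.
    The group label is ignored entirely."""
    votes = defaultdict(Counter)
    for _group, zip_code, decision in records:
        votes[zip_code][decision] += 1
    return {z: counts.most_common(1)[0][0] for z, counts in votes.items()}


def approval_rate_by_group(records, model):
    """Share of each group that the model would approve."""
    totals, approved = Counter(), Counter()
    for group, zip_code, _decision in records:
        totals[group] += 1
        approved[group] += model[zip_code]
    return {g: approved[g] / totals[g] for g in totals}


model = fit_by_zip(history)
print(approval_rate_by_group(history, model))  # {'A': 1.0, 'B': 0.2}
```

Dropping the zip code column would not settle the matter in general either, since other features (first name, shopping history) can carry the same signal.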

Criticism of COMPAS highlighted a deeper problem with the misuse of AI. Machine learning models are designed to make "predictions" that are only valid if we assume that the future will resemble the past. If they are trained on data that includes the results of racist decisions in the past, machine learning models must predict that racist decisions will be made in the future.

Unfortunately, if an application then uses these predictions as recommendations, some of these "recommendations" will likely be racist. Thus, machine learning is not well suited to help make decisions in areas where there is hope that the future will be better than the past. It is necessarily descriptive rather than prescriptive.

Bias and unfairness may go undetected because the developers are overwhelmingly white and male: among AI engineers, about 4% are black and 20% are women.

At its 2022 Conference on Fairness, Accountability, and Transparency (ACM FAccT 2022), held in Seoul, South Korea, the Association for Computing Machinery presented and published findings concluding that, until AI and robotics systems are demonstrated to be free of bias mistakes, they are unsafe, and recommending that the use of self-learning neural networks trained on vast, unregulated sources of flawed internet data be curtailed.

Lack of transparency:

Most modern AI applications cannot explain how they have reached a decision. The large number of relationships between inputs and outputs in deep neural networks, and the resulting complexity, make it difficult for even an expert to explain how a network produced its outputs; such a network is effectively a black box.

There have been many cases where a machine learning program passed rigorous tests but nevertheless learned something different from what the programmers intended. For example, Justin Ko and Roberto Novoa developed a system that could identify skin diseases better than medical professionals; however, it classified any image with a ruler as "cancerous", because pictures of malignancies typically include a ruler to show the scale.

A more dangerous example was discovered by Rich Caruana in 2015: a machine learning system that accurately predicted risk of death classified a patient who was over 65 and had asthma and difficulty breathing as "low risk". Further research showed that in high-risk cases like this, the hospital would allocate more resources and save the patient's life, so such patients rarely died in the data and the program learned to rate them as low risk.

Mistakes like these become obvious when we know how the program has reached a decision. Without an explanation, these problems may not be discovered until after they have caused harm.

A second issue is that people who have been harmed by an algorithm's decision have a right to an explanation. Doctors, for example, are required to clearly and completely explain the reasoning behind any decision they make. Early drafts of the European Union's General Data Protection Regulation in 2016 included an explicit statement that this right exists. Industry experts noted that this is an unsolved problem with no solution in sight. Regulators argued that nevertheless the harm is real: if the problem has no solution, the tools should not be used.

DARPA established the XAI ("Explainable Artificial Intelligence") program in 2014 to try to solve these problems.

There are several potential solutions to the transparency problem. Multitask learning provides a large number of outputs in addition to the target classification. These other outputs can help developers deduce what the network has learned.

Deconvolution, DeepDream and other generative methods can allow developers to see what different layers of a deep network have learned and produce output that can suggest what the network is learning.

Supersparse linear integer models use learning to identify the most important features, rather than the classification. Simple addition of these features can then make the classification (i.e. learning is used to create a scoring system classifier, which is transparent).
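
The kind of transparent classifier this produces can be sketched as a points table. The features, point values and threshold below are hypothetical and hand-picked; a real supersparse linear integer model learns them by solving an integer optimization problem, which this sketch does not attempt. It only shows why the result is easy to audit: every prediction is a short sum of visible integers.

```python
# Hypothetical scoring system of the kind a supersparse linear integer
# model might output. Features, points and threshold are invented here.
POINTS = {
    "age_over_65": 2,
    "shortness_of_breath": 3,
    "prior_admission": 1,
}
THRESHOLD = 4  # predict "high risk" when the total score reaches this value


def score(patient: dict) -> int:
    """Add up the points for every feature that is present (truthy)."""
    return sum(pts for feature, pts in POINTS.items() if patient.get(feature))


def classify(patient: dict) -> str:
    return "high risk" if score(patient) >= THRESHOLD else "low risk"


example = {"age_over_65": True, "shortness_of_breath": True}
print(score(example), classify(example))  # 5 high risk
```

Explaining a decision amounts to listing which features contributed points, which is exactly the property the black-box models discussed above lack.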

Bad actors and weaponized AI:

A lethal autonomous weapon is a machine that locates, selects and engages human targets without human supervision. By 2015, over fifty countries were reported to be researching battlefield robots. These weapons are considered especially dangerous for several reasons: if they kill an innocent person it is not clear who should be held accountable, it is unlikely they will reliably choose targets, and, if produced at scale, they are potentially weapons of mass destruction.

In 2014, 30 nations (including China) supported a ban on autonomous weapons under the United Nations' Convention on Certain Conventional Weapons; however, the United States and others disagreed.

AI provides a number of tools that are particularly useful for authoritarian governments.

Terrorists, criminals and rogue states can use weaponized AI such as advanced digital warfare and lethal autonomous weapons.

Machine-learning AI is also able to design tens of thousands of toxic molecules in a matter of hours.

Technological unemployment:

From the early days of the development of artificial intelligence there have been arguments, for example those put forward by Weizenbaum, about whether tasks that can be done by computers actually should be done by them, given the difference between computers and humans, and between quantitative calculation and qualitative, value-based judgement.

Economists have frequently highlighted the risks of redundancies from AI, and speculated about unemployment if there is no adequate social policy for full employment.

In the past, technology has tended to increase rather than reduce total employment, but economists acknowledge that "we're in uncharted territory" with AI. A survey of economists showed disagreement about whether the increasing use of robots and AI will cause a substantial increase in long-term unemployment, but they generally agree that it could be a net benefit if productivity gains are redistributed.

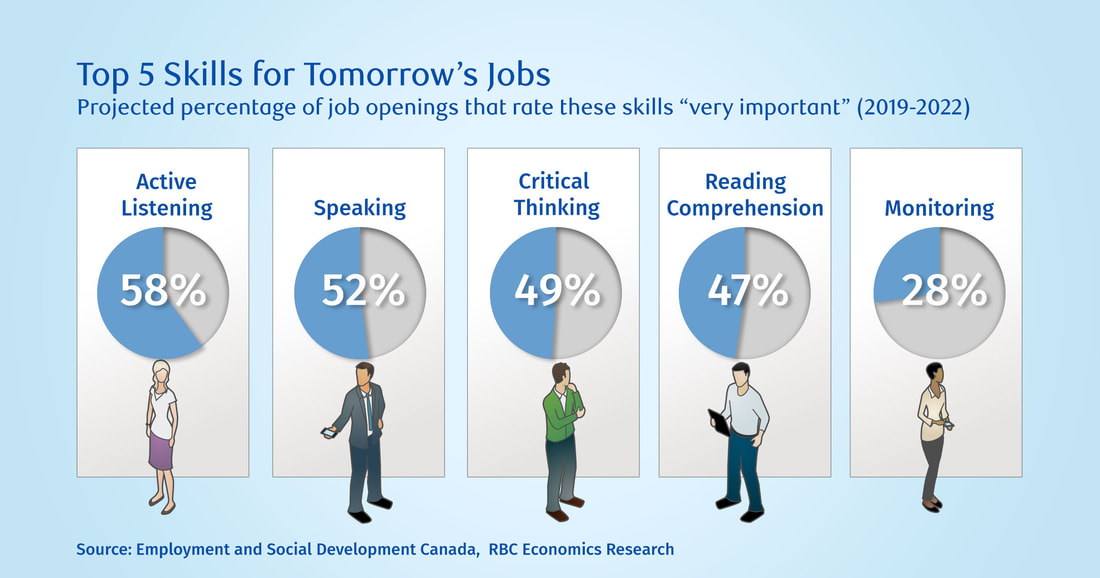

Risk estimates vary; for example, in the 2010s Michael Osborne and Carl Benedikt Frey estimated 47% of U.S. jobs are at "high risk" of potential automation, while an OECD report classified only 9% of U.S. jobs as "high risk".

The methodology of speculating about future employment levels has been criticised as lacking evidential foundation, and for implying that technology (rather than social policy) creates unemployment (as opposed to redundancies).

Unlike previous waves of automation, many middle-class jobs may be eliminated by artificial intelligence; The Economist stated in 2015 that "the worry that AI could do to white-collar jobs what steam power did to blue-collar ones during the Industrial Revolution" is "worth taking seriously".

Jobs at extreme risk range from paralegals to fast food cooks, while job demand is likely to increase for care-related professions ranging from personal healthcare to the clergy.

In April 2023, it was reported that 70% of the jobs for Chinese video game illustrators had been eliminated by generative artificial intelligence.

Existential risk:

Main article: Existential risk from artificial general intelligence

It has been argued AI will become so powerful that humanity may irreversibly lose control of it. This could, as the physicist Stephen Hawking puts it, "spell the end of the human race".

This scenario has been common in science fiction, when a computer or robot suddenly develops a human-like "self-awareness" (or "sentience" or "consciousness") and becomes a malevolent character. These sci-fi scenarios are misleading in several ways.

First, AI does not require human-like "sentience" to be an existential risk. Modern AI programs are given specific goals and use learning and intelligence to achieve them.

Philosopher Nick Bostrom argued that if one gives almost any goal to a sufficiently powerful AI, it may choose to destroy humanity to achieve it (he used the example of a paperclip factory manager). Stuart Russell gives the example of a household robot that tries to find a way to kill its owner to prevent itself from being unplugged, reasoning that "you can't fetch the coffee if you're dead."

In order to be safe for humanity, a superintelligence would have to be genuinely aligned with humanity's morality and values so that it is "fundamentally on our side".

Second, Yuval Noah Harari argues that AI does not require a robot body or physical control to pose an existential risk. The essential parts of civilization are not physical. Things like ideologies, law, government, money and the economy are made of language; they exist because there are stories that billions of people believe. The current prevalence of misinformation suggests that an AI could use language to convince people to believe anything, even to take actions that are destructive.

The opinions amongst experts and industry insiders are mixed, with sizable fractions both concerned and unconcerned by risk from eventual superintelligent AI. Personalities such as Stephen Hawking, Bill Gates and Elon Musk have expressed concern about existential risk from AI.

In the early 2010s, some experts argued that the risks are too distant in the future to warrant research or that humans will be valuable from the perspective of a superintelligent machine.

However, after 2016, the study of current and future risks and possible solutions became a serious area of research. In 2023, AI pioneers including Geoffrey Hinton, Yoshua Bengio, Demis Hassabis, and Sam Altman issued the joint statement that "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war".

Ethical machines and alignment:

Friendly AI are machines that have been designed from the beginning to minimize risks and to make choices that benefit humans. Eliezer Yudkowsky, who coined the term, argues that developing friendly AI should be a higher research priority: it may require a large investment and it must be completed before AI becomes an existential risk.

Machines with intelligence have the potential to use their intelligence to make ethical decisions. The field of machine ethics provides machines with ethical principles and procedures for resolving ethical dilemmas. The field of machine ethics is also called computational morality, and was founded at an AAAI symposium in 2005.

Other approaches include Wendell Wallach's "artificial moral agents" and Stuart J. Russell's three principles for developing provably beneficial machines.

Regulation:

The regulation of artificial intelligence is the development of public sector policies and laws for promoting and regulating artificial intelligence (AI); it is therefore related to the broader regulation of algorithms. The regulatory and policy landscape for AI is an emerging issue in jurisdictions globally.

According to the AI Index at Stanford, the annual number of AI-related laws passed in the 127 surveyed countries jumped from one in 2016 to 37 in 2022 alone. Between 2016 and 2020, more than 30 countries adopted dedicated strategies for AI.

Most EU member states had released national AI strategies, as had several other countries.

Others were in the process of elaborating their own AI strategy, including Bangladesh, Malaysia and Tunisia.

The Global Partnership on Artificial Intelligence was launched in June 2020, stating a need for AI to be developed in accordance with human rights and democratic values, to ensure public confidence and trust in the technology.

Henry Kissinger, Eric Schmidt, and Daniel Huttenlocher published a joint statement in November 2021 calling for a government commission to regulate AI.

In 2023, OpenAI leaders published recommendations for the governance of superintelligence, which they believe may happen in less than 10 years.

In a 2022 Ipsos survey, attitudes towards AI varied greatly by country; 78% of Chinese citizens, but only 35% of Americans, agreed that "products and services using AI have more benefits than drawbacks". A 2023 Reuters/Ipsos poll found that 61% of Americans agree, and 22% disagree, that AI poses risks to humanity.

In a 2023 Fox News poll, 35% of Americans thought it "very important", and an additional 41% thought it "somewhat important", for the federal government to regulate AI, versus 13% responding "not very important" and 8% responding "not at all important".

History:

The study of mechanical or "formal" reasoning began with philosophers and mathematicians in antiquity. The study of logic led directly to Alan Turing's theory of computation, which suggested that a machine, by shuffling symbols as simple as "0" and "1", could simulate both mathematical deduction and formal reasoning. This insight is known as the Church–Turing thesis.
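
To make "shuffling symbols" concrete, here is a minimal Turing machine simulator. The transition table is a hypothetical example (adding 1 to a binary number), not anything taken from Turing's paper. The machine only reads a symbol, writes a symbol, moves left or right and changes state, yet that is enough to do arithmetic.

```python
BLANK = "_"

# Hypothetical transition table for binary increment:
# (state, symbol read) -> (symbol to write, head move, next state)
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),      # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),        # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),      # ran off the left end: new leading 1
}


def run(table, tape_str, state="right", max_steps=10_000):
    """Run a Turing machine from the leftmost cell until it reaches 'halt'."""
    tape = dict(enumerate(tape_str))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = table[(state, tape.get(head, BLANK))]
        tape[head] = write
        head += move
        steps += 1
    if not tape:
        return ""
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells).strip(BLANK)


print(run(INCREMENT, "1011"))  # 1100
```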

This, along with concurrent discoveries in cybernetics and information theory, led researchers to consider the possibility of building an "electronic brain". The first paper later recognized as "AI" was McCulloch and Pitts's 1943 design for Turing-complete "artificial neurons".
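
A simplified McCulloch-Pitts unit can be sketched as a threshold gate over binary inputs. The weights and thresholds below are one hand-picked choice, and the negative weight used for NOT is a modern simplification (the original paper's treatment of inhibition differs). Wiring such units together yields logic gates, including XOR from stacked units, which is why networks of them can in principle compute what a digital circuit can.

```python
def mp_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    return mp_neuron([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    return mp_neuron([a, b], [1, 1], threshold=1)


def not_gate(a):
    # Negative weight as a simplified stand-in for an inhibitory input.
    return mp_neuron([a], [-1], threshold=0)


def xor_gate(a, b):
    # Stacked units: XOR(a, b) = AND(OR(a, b), NOT(AND(a, b))).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```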

The field of AI research was founded at a workshop at Dartmouth College in 1956. The attendees became the leaders of AI research in the 1960s. They and their students produced programs that the press described as "astonishing": computers were learning checkers strategies, solving word problems in algebra, proving logical theorems and speaking English.

By the middle of the 1960s, research in the U.S. was heavily funded by the Department of Defense and laboratories had been established around the world. Herbert Simon predicted, "machines will be capable, within twenty years, of doing any work a man can do".

Marvin Minsky agreed, writing, "within a generation ... the problem of creating 'artificial intelligence' will substantially be solved".

They had, however, underestimated the difficulty of the problem. Both the U.S. and British governments cut off exploratory research in response to the criticism of Sir James Lighthill and ongoing pressure from the US Congress to fund more productive projects.

Minsky and Papert's book Perceptrons was understood as proving that the artificial neural network approach would never be useful for solving real-world tasks, thus discrediting the approach altogether. The "AI winter", a period when obtaining funding for AI projects was difficult, followed.
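
The limitation most associated with Perceptrons is that a single-layer perceptron can only learn functions whose positive and negative cases are separable by a straight line (a hyperplane in general); XOR is the standard counterexample. The sketch below applies the classic perceptron learning rule to AND, which it learns, and to XOR, which it cannot learn however many epochs it runs. The epoch count and learning rate are arbitrary choices for illustration.

```python
def train_perceptron(samples, epochs=100, lr=1.0):
    """Classic perceptron learning rule for 2-input binary samples.

    Returns (weights, bias, converged), where converged is True if some
    epoch classified every sample correctly.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            output = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            delta = target - output  # -1, 0 or +1
            if delta:
                errors += 1
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
        if errors == 0:
            return w, b, True
    return w, b, False


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print("AND learned:", train_perceptron(AND_DATA)[2])  # True
print("XOR learned:", train_perceptron(XOR_DATA)[2])  # False
```

The AND run stops as soon as one full pass makes no mistakes, which the perceptron convergence theorem guarantees for linearly separable data. The XOR run always misclassifies at least one sample per epoch. Networks with a hidden layer do not share this limitation, which is part of why the broad conclusion drawn from the book later proved too strong.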

In the early 1980s, AI research was revived by the commercial success of expert systems, a form of AI program that simulated the knowledge and analytical skills of human experts. By 1985, the market for AI had reached over a billion dollars. At the same time, Japan's fifth generation computer project inspired the U.S. and British governments to restore funding for academic research.

However, beginning with the collapse of the Lisp Machine market in 1987, AI once again fell into disrepute, and a second, longer-lasting winter began.

Many researchers began to doubt that the current practices would be able to imitate all the processes of human cognition.

A number of researchers began to look into "sub-symbolic" approaches:

Robotics researchers, such as Rodney Brooks, rejected "representation" in general and focussed directly on engineering machines that move and survive.

Judea Pearl, Lotfi Zadeh and others developed methods that handled incomplete and uncertain information by making reasonable guesses rather than precise logic. But the most important development was the revival of "connectionism", including neural network research, by Geoffrey Hinton and others.

In 1990, Yann LeCun successfully showed that convolutional neural networks can recognize handwritten digits, the first of many successful applications of neural networks.

AI gradually restored its reputation in the late 1990s and early 21st century by exploiting formal mathematical methods and by finding specific solutions to specific problems. This "narrow" and "formal" focus allowed researchers to produce verifiable results and collaborate with other fields (such as statistics, economics and mathematics).

By 2000, solutions developed by AI researchers were being widely used, although in the 1990s they were rarely described as "artificial intelligence".

Several academic researchers became concerned that AI was no longer pursuing the original goal of creating versatile, fully intelligent machines. Beginning around 2002, they founded the subfield of artificial general intelligence (or "AGI"), which had several well-funded institutions by the 2010s.

Deep learning began to dominate industry benchmarks in 2012 and was adopted throughout the field. For many specific tasks, other methods were abandoned. Deep learning's success was based on both hardware improvements (such as faster computers and graphics processing units) and access to large amounts of data.

Deep learning's success led to an enormous increase in interest and funding in AI. The amount of machine learning research (measured by total publications) increased by 50% in the years 2015–2019, and WIPO reported that AI was the most prolific emerging technology in terms of the number of patent applications and granted patents.

According to 'AI Impacts', about $50 billion annually was invested in "AI" around 2022 in the US alone and about 20% of new US Computer Science PhD graduates have specialized in "AI"; about 800,000 "AI"-related US job openings existed in 2022.

In 2016, issues of fairness and the misuse of technology were catapulted into center stage at machine learning conferences, publications vastly increased, funding became available, and many researchers re-focussed their careers on these issues. The alignment problem became a serious field of academic study.

Philosophy:

Main article: Philosophy of artificial intelligence

Defining artificial intelligence:

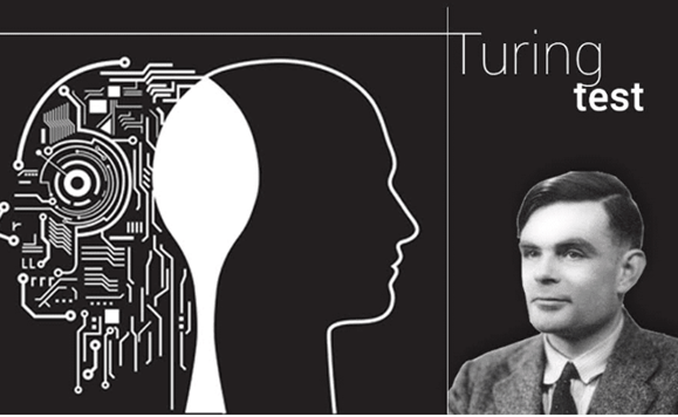

Alan Turing wrote in 1950 "I propose to consider the question 'can machines think'?" He advised changing the question from whether a machine "thinks", to "whether or not it is possible for machinery to show intelligent behaviour". He devised the Turing test, which measures the ability of a machine to simulate human conversation.

Since we can only observe the behavior of the machine, it does not matter if it is "actually" thinking or literally has a "mind". Turing notes that we cannot determine these things about other people but "it is usual to have a polite convention that everyone thinks".

Russell and Norvig agree with Turing that AI must be defined in terms of "acting" and not "thinking". However, they are critical that the test compares machines to people. "Aeronautical engineering texts," they wrote, "do not define the goal of their field as making 'machines that fly so exactly like pigeons that they can fool other pigeons.'"

AI founder John McCarthy agreed, writing that "Artificial intelligence is not, by definition, simulation of human intelligence".

McCarthy defines intelligence as "the computational part of the ability to achieve goals in the world." Another AI founder, Marvin Minsky, similarly defines it as "the ability to solve hard problems".

These definitions view intelligence in terms of well-defined problems with well-defined solutions, where both the difficulty of the problem and the performance of the program are direct measures of the "intelligence" of the machine—and no other philosophical discussion is required, or may not even be possible.

Another definition has been adopted by Google, a major practitioner in the field of AI. This definition stipulates the ability of systems to synthesize information as the manifestation of intelligence, similar to the way it is defined in biological intelligence.

Evaluating approaches to AI:

No established unifying theory or paradigm has guided AI research for most of its history. The unprecedented success of statistical machine learning in the 2010s eclipsed all other approaches (so much so that some sources, especially in the business world, use the term "artificial intelligence" to mean "machine learning with neural networks").

This approach is mostly sub-symbolic, soft and narrow. Critics argue that the questions it leaves open (symbolic versus sub-symbolic, soft versus hard, narrow versus general) may have to be revisited by future generations of AI researchers.

Symbolic AI and its limits:

Symbolic AI (or "GOFAI") simulated the high-level conscious reasoning that people use when they solve puzzles, express legal reasoning and do mathematics. It was highly successful at "intelligent" tasks such as algebra or IQ tests. In the 1960s, Newell and Simon proposed the physical symbol systems hypothesis: "A physical symbol system has the necessary and sufficient means of general intelligent action."

However, the symbolic approach failed on many tasks that humans solve easily, such as learning, recognizing an object or commonsense reasoning. Moravec's paradox is the discovery that high-level "intelligent" tasks were easy for AI, but low level "instinctive" tasks were extremely difficult.

Philosopher Hubert Dreyfus had argued since the 1960s that human expertise depends on unconscious instinct rather than conscious symbol manipulation, and on having a "feel" for the situation, rather than explicit symbolic knowledge. Although his arguments had been ridiculed and ignored when they were first presented, eventually, AI research came to agree.

The issue is not resolved: sub-symbolic reasoning can make many of the same inscrutable mistakes that human intuition does, such as algorithmic bias.

Critics such as Noam Chomsky argue continuing research into symbolic AI will still be necessary to attain general intelligence, in part because sub-symbolic AI is a move away from explainable AI: it can be difficult or impossible to understand why a modern statistical AI program made a particular decision.

The emerging field of neuro-symbolic artificial intelligence attempts to bridge the two approaches.

Neat vs. scruffy:

Main article: Neats and scruffies

"Neats" hope that intelligent behavior is described using simple, elegant principles (such as logic, optimization, or neural networks). "Scruffies" expect that it necessarily requires solving a large number of unrelated problems. Neats defend their programs with theoretical rigor, scruffies rely mainly on incremental testing to see if they work.

This issue was actively discussed in the 70s and 80s, but eventually was seen as irrelevant. Modern AI has elements of both.

Soft vs. hard computing:

Main article: Soft computing

Finding a provably correct or optimal solution is intractable for many important problems. Soft computing is a set of techniques, including genetic algorithms, fuzzy logic and neural networks, that are tolerant of imprecision, uncertainty, partial truth and approximation.
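
Fuzzy logic, one of the techniques named above, replaces true/false with degrees of truth between 0 and 1. In the minimal sketch below, the triangular sets "warm" and "hot" and their breakpoints are invented for illustration, and min and max are Zadeh's standard fuzzy AND and OR operators. A temperature of 26 degrees ends up partly warm and partly hot at the same time, which is the kind of "partial truth" that hard, two-valued logic cannot express.

```python
def triangular(x, left, peak, right):
    """Membership degree (0..1) of x in a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Hypothetical fuzzy sets for room temperature in degrees Celsius.
def warm(t):
    return triangular(t, 15, 22, 29)


def hot(t):
    return triangular(t, 24, 32, 40)


t = 26
w, h = warm(t), hot(t)
print(f"warm={w:.2f} hot={h:.2f}")        # partly warm and partly hot at once
print(f"warm AND hot = {min(w, h):.2f}")  # Zadeh's fuzzy AND: minimum
print(f"warm OR hot  = {max(w, h):.2f}")  # Zadeh's fuzzy OR: maximum
```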

Soft computing was introduced in the late 80s and most successful AI programs in the 21st century are examples of soft computing with neural networks.

Narrow vs. general AI:

Main article: Artificial general intelligence

AI researchers are divided as to whether to pursue the goals of artificial general intelligence and superintelligence directly or to solve as many specific problems as possible (narrow AI) in hopes these solutions will lead indirectly to the field's long-term goals.

General intelligence is difficult to define and difficult to measure, and modern AI has had more verifiable successes by focusing on specific problems with specific solutions. The experimental sub-field of artificial general intelligence studies this area exclusively.

Machine consciousness, sentience and mind:

The philosophy of mind does not know whether a machine can have a mind, consciousness and mental states, in the same sense that human beings do. This issue considers the internal experiences of the machine, rather than its external behavior.

Mainstream AI research considers this issue irrelevant because it does not affect the goals of the field: to build machines that can solve problems using intelligence.

Russell and Norvig add that "[t]he additional project of making a machine conscious in exactly the way humans are is not one that we are equipped to take on." However, the question has become central to the philosophy of mind. It is also typically the central question at issue in artificial intelligence in fiction.

Consciousness:

David Chalmers identified two problems in understanding the mind, which he named the "hard" and "easy" problems of consciousness. The easy problem is understanding how the brain processes signals, makes plans and controls behavior. The hard problem is explaining how this feels or why it should feel like anything at all, assuming we are right in thinking that it truly does feel like something (Dennett's consciousness illusionism says this is an illusion).

Human information processing is easy to explain; human subjective experience, however, is difficult to explain. For example, it is easy to imagine a color-blind person who has learned to identify which objects in their field of view are red, but it is not clear what would be required for the person to know what red looks like.

Computationalism and functionalism:

Computationalism is the position in the philosophy of mind that the human mind is an information processing system and that thinking is a form of computing. Computationalism argues that the relationship between mind and body is similar or identical to the relationship between software and hardware and thus may be a solution to the mind–body problem.

This philosophical position was inspired by the work of AI researchers and cognitive scientists in the 1960s and was originally proposed by philosophers Jerry Fodor and Hilary Putnam.

Philosopher John Searle characterized this position as "strong AI": "The appropriately programmed computer with the right inputs and outputs would thereby have a mind in exactly the same sense human beings have minds." Searle counters this assertion with his Chinese room argument, which attempts to show that, even if a machine perfectly simulates human behavior, there is still no reason to suppose it also has a mind.

Robot rights:

Main article: Robot rights

If a machine has a mind and subjective experience, then it may also have sentience (the ability to feel), and if so it could also suffer; it has been argued that this could entitle it to certain rights. Any hypothetical robot rights would lie on a spectrum with animal rights and human rights.

This issue has been considered in fiction for centuries, and is now being considered by, for example, California's Institute for the Future; however, critics argue that the discussion is premature.

Future:

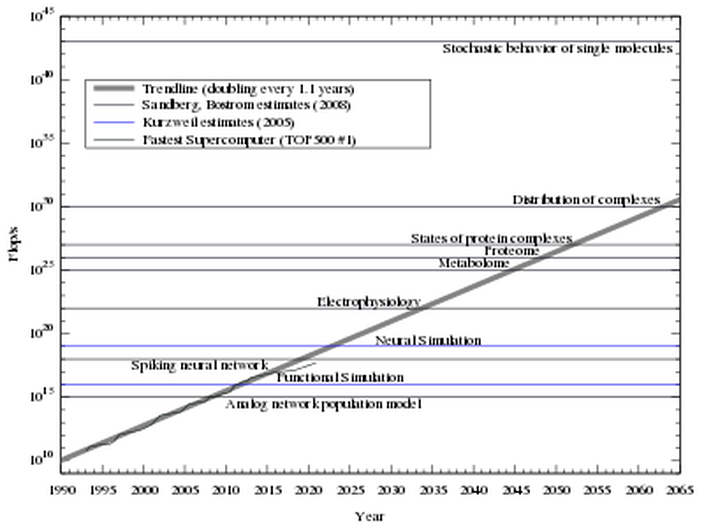

Superintelligence and the singularity:

A superintelligence is a hypothetical agent that would possess intelligence far surpassing that of the brightest and most gifted human mind.

If research into artificial general intelligence produced sufficiently intelligent software, it might be able to reprogram and improve itself. The improved software would be even better at improving itself, leading to what I. J. Good called an "intelligence explosion" and Vernor Vinge called a "singularity".

However, most technologies do not improve exponentially indefinitely, but rather follow an S-curve, slowing when they reach the physical limits of what the technology can do.

Consider, for example, transportation: speed increased exponentially from 1830 to 1970, but then the trend abruptly stopped when it reached physical limits.
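
The contrast between unbounded exponential growth and an S-curve can be sketched with the logistic function, which starts out growing almost exponentially and then levels off at a ceiling K. The growth rate r, starting value x0 and ceiling K below are arbitrary illustrative numbers, not a model of transportation or of any other real technology.

```python
import math


def exponential(t, x0=1.0, r=0.5):
    """Unbounded growth: x(t) = x0 * e^(r t)."""
    return x0 * math.exp(r * t)


def logistic(t, x0=1.0, r=0.5, K=100.0):
    """S-curve: starts at x0, grows almost exponentially, levels off at K."""
    return K / (1 + (K / x0 - 1) * math.exp(-r * t))


for t in (0, 5, 10, 20, 30):
    print(f"t={t:>2}  exponential={exponential(t):>12.1f}  logistic={logistic(t):6.1f}")
```

Early on the two curves are nearly indistinguishable, which is why a short run of exponential progress says little about whether a ceiling lies ahead.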

Transhumanism:

Robot designer Hans Moravec, cyberneticist Kevin Warwick, and inventor Ray Kurzweil have predicted that humans and machines will merge in the future into cyborgs that are more capable and powerful than either. This idea, called transhumanism, has roots in the work of Aldous Huxley and Robert Ettinger.

Edward Fredkin argues that "artificial intelligence is the next stage in evolution", an idea first proposed by Samuel Butler's "Darwin among the Machines" as far back as 1863, and expanded upon by George Dyson in his book of the same name in 1998.

In fiction:

Main article: Artificial intelligence in fiction

Thought-capable artificial beings have appeared as storytelling devices since antiquity, and have been a persistent theme in science fiction.

A common trope in these works began with Mary Shelley's Frankenstein, where a human creation becomes a threat to its masters.

This also includes such works as Arthur C. Clarke's and Stanley Kubrick's 2001: A Space Odyssey (both 1968), with HAL 9000, the murderous computer in charge of the Discovery One spaceship, as well as The Terminator (1984) and The Matrix (1999).

In contrast, the rare loyal robots such as Gort from The Day the Earth Stood Still (1951) and Bishop from Aliens (1986) are less prominent in popular culture.

Isaac Asimov introduced the Three Laws of Robotics in many books and stories, most notably the "Multivac" series about a super-intelligent computer of the same name. Asimov's laws are often brought up during lay discussions of machine ethics; while almost all artificial intelligence researchers are familiar with Asimov's laws through popular culture, they generally consider the laws useless for many reasons, one of which is their ambiguity.

Several works use AI to force us to confront the fundamental question of what makes us human, showing us artificial beings that have the ability to feel, and thus to suffer. This appears in Karel Čapek's R.U.R., the films A.I. Artificial Intelligence and Ex Machina, as well as the novel Do Androids Dream of Electric Sheep?, by Philip K. Dick. Dick considers the idea that our understanding of human subjectivity is altered by technology created with artificial intelligence.

"Artificial intelligence (AI) is on the rise both in business and in the world in general. How beneficial is it really to your business in the long run? Sure, it can take over those time-consuming and mundane tasks that are bogging your employees down, but at what cost?

With AI spending expected to reach $46 billion by 2020, according to an IDC report, there’s no sign of the technology slowing down. Adding AI to your business may be the next step as you look for ways to advance your operations and increase your performance.

To understand how AI will impact your business going forward, 14 members of Forbes Technology Council weigh in on the concerns about artificial intelligence and provide reasons why AI is either a detriment or a benefit to society. Here is what they had to say:

1. Enhances Efficiency And Throughput

Concerns about disruptive technologies are common. A recent example is automobiles -- it took years to develop regulation around the industry to make it safe. That said, AI today is a huge benefit to society because it enhances our efficiency and throughput, while creating new opportunities for revenue generation, cost savings and job creation. - Anand Sampat, Datmo

2. Frees Up Humans To Do What They Do Best

Humans are not best served by doing tedious tasks. Machines can do that, so this is where AI can provide a true benefit. This allows us to do the more interpersonal and creative aspects of work. - Chalmers Brown, Due

3. Adds Jobs, Strengthens The Economy

We all see the headlines: Robots and AI will destroy jobs. This is fiction rather than fact. AI encourages a gradual evolution in the job market which, with the right preparation, will be positive. People will still work, but they’ll work better with the help of AI. The unparalleled combination of human and machine will become the new normal in the workforce of the future. - Matthew Lieberman, PwC

4. Leads To Loss Of Control

If machines do get smarter than humans, there could be a loss of control that can be a detriment. Whether that happens or whether certain controls can be put in place remains to be seen. - Muhammed Othman, Calendar

5. Enhances Our Lifestyle

The rise of AI in our society will enhance our lifestyle and create more efficient businesses. Some of the mundane tasks like answering emails and data entry will be done by intelligent assistants. Smart homes will also reduce energy usage and provide better security, marketing will be more targeted and we will get better health care thanks to better diagnoses. - Naresh Soni, Tsunami ARVR

6. Supervises Learning For Telemedicine

AI is a technology that can be used for both good and nefarious purposes, so there is a need to be vigilant. The latest technologies typically seem to be applied toward the wealthiest among us, but AI has the potential to extend knowledge and understanding to a broader population -- e.g. image-based AI diagnoses of medical conditions could allow for a more comprehensive deployment of telemedicine. - Harald Quintus-Bosz, Cooper Perkins, Inc.

7. Creates Unintended And Unforeseen Consequences

While fears about killer robots grab headlines, unintended and unforeseen consequences of artificial intelligence need attention today, as we're already living with them. For example, it is believed that Facebook's newsfeed algorithm influenced an election outcome that affected geopolitics. How can we better anticipate and address such possible outcomes in future? - Simon Smith, BenchSci

8. Increases Automation

There will be economic consequences to the widespread adoption of machine learning and other AI technologies. AI is capable of performing tasks that would once have required intensive human labor or not have been possible at all. The major benefit for business will be a reduction in operational costs brought about by AI automation -- whether that’s a net positive for society remains to be seen. - Vik Patel, Nexcess

9. Elevates The Condition Of Mankind

The ability for technology to solve more problems, answer more questions and innovate with a number of inputs beyond the capacity of the human brain can certainly be used for good or ill. If history is any guide, the improvement of technology tends to elevate the condition of mankind and allow us to focus on higher order functions and an improved quality of life. - Wade Burgess, Shiftgig

10. Solves Complex Social Problems

Much of the fear with AI is due to the misunderstanding of what it is and how it should be applied. Although AI has promise for solving complex social problems, there are ethical issues and biases we must still explore. We are just beginning to understand how AI can be applied to meaningful problems. As our use of AI matures, we will find it to be a clear benefit in our lives. - Mark Benson, Exosite, LLC

11. Improves Demand Side Management

AI is a benefit to society because machines can become smarter over time and increase efficiencies. Additionally, computers are not susceptible to the same probability of errors as human beings are. From an energy standpoint, AI can be used to analyze and research historical data to determine how to most efficiently distribute energy loads from a grid perspective. - Greg Sarich, CLEAResult

12. Benefits Multiple Industries

Society has benefited and will continue to benefit from AI based on character/facial recognition, digital content analysis and accuracy in identifying patterns, whether they are used for health sciences, academic research or technology applications. AI risks are real if we don't understand the quality of the incoming data and set AI rules which are making granular trade-off decisions at increasing computing speeds. - Mark Butler, Qualys.com

13. Absolves Humans Of All Responsibility

It is one thing to use machine learning to predict and help solve problems; it is quite another to use these systems to purposely control and act in ways that will make people unnecessary.

When machine intelligence exceeds our ability to understand it, or it becomes superior intelligence, we should take care to not blindly follow its recommendation and absolve ourselves of all responsibility. - Chris Kirby, Voices.com

14. Extends And Expands Creativity

AI is the biggest opportunity of our lifetime to extend and expand human creativity and ingenuity. The two main concerns that the fear-mongers raise are around AI leading to job losses in society and AI going rogue and taking control of the human race.

I believe that both these concerns raised by critics are moot or solvable. - Ganesh Padmanabhan, CognitiveScale, Inc

[End of Article #1]

___________________________________________________________________________

These are the jobs most at risk of automation according to Oxford University: Is yours one of them? (The Telegraph September 27, 2017)

In his speech at the 2017 Labour Party conference, Jeremy Corbyn outlined his desire to "urgently... face the challenge of automation", which he called a "threat in the hands of the greedy".

Whether or not Corbyn is planning a potentially controversial 'robot tax' wasn't clear from his speech, but addressing the forward march of automation is a savvy move designed to appeal to voters in low-paying, routine work.


___________________________________________________________________________

Artificial Intelligence (AI) by Wikipedia 10/29/2023:

In a Nutshell:

Part of a series on Artificial intelligence

Major goals:

- Artificial general intelligence

- Planning

- Computer vision

- General game playing

- Knowledge reasoning

- Machine learning

- Natural language processing

- Robotics

- AI safety

Approaches:

- Symbolic

- Deep learning

- Bayesian networks

- Evolutionary algorithms

- Situated approach

- Hybrid intelligent systems

- Systems integration

AI Expanded follows:

Artificial intelligence (AI) is the intelligence of machines or software, as opposed to the intelligence of humans or animals. It is also the field of study in computer science that develops and studies intelligent machines. "AI" may also refer to the machines themselves.

AI technology is widely used throughout industry, government and science. Some high-profile applications are:

- advanced web search engines (e.g., Google Search),

- recommendation systems (used by YouTube, Amazon, and Netflix),

- understanding human speech (such as Siri and Alexa),

- self-driving cars (e.g., Waymo),

- generative or creative tools (ChatGPT and AI art),

- and competing at the highest level in strategic games (such as chess and Go).

Artificial intelligence was founded as an academic discipline in 1956. The field went through multiple cycles of optimism followed by disappointment and loss of funding, but after 2012, when deep learning surpassed all previous AI techniques, there was a vast increase in funding and interest.

The various sub-fields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include:

- reasoning,

- knowledge representation,

- planning,

- learning,

- natural language processing,

- perception,

- and support for robotics.

General intelligence (the ability to solve an arbitrary problem) is among the field's long-term goals. To solve these problems, AI researchers have adapted and integrated a wide range of problem-solving techniques, including:

- search and mathematical optimization,

- formal logic,

- artificial neural networks,

- and methods based on

AI also draws upon psychology, linguistics, philosophy, neuroscience and many other fields.

Goals:

The general problem of simulating (or creating) intelligence has been broken down into sub-problems. These consist of particular traits or capabilities that researchers expect an intelligent system to display. The traits described below have received the most attention and cover the scope of AI research:

Reasoning, problem-solving:

Early researchers developed algorithms that imitated step-by-step reasoning that humans use when they solve puzzles or make logical deductions. By the late 1980s and 1990s, methods were developed for dealing with uncertain or incomplete information, employing concepts from probability and economics.

Many of these algorithms are insufficient for solving large reasoning problems because they experience a "combinatorial explosion": they become exponentially slower as the problems grow larger. Even humans rarely use the step-by-step deduction that early AI research could model. They solve most of their problems using fast, intuitive judgments. Accurate and efficient reasoning is an unsolved problem.
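To make the "combinatorial explosion" concrete, here is a minimal Python sketch (an illustration chosen for this page, not taken from the source) of naive step-by-step reasoning: deciding whether a set of propositional-logic clauses can be satisfied by trying every true/false assignment in turn. The function name `brute_force_sat` and the clause encoding (positive and negative integers for true and false literals) are assumptions made for the example.

```python
from itertools import product

def brute_force_sat(clauses, n_vars):
    """Try every truth assignment in turn.

    clauses: list of clauses, each a list of non-zero ints; k means
    "variable k is true" and -k means "variable k is false" (variables are 1-based).
    Returns (satisfying assignment or None, number of assignments checked).
    """
    checked = 0
    for bits in product([False, True], repeat=n_vars):
        checked += 1
        # Every clause needs at least one true literal.
        if all(any(bits[abs(lit) - 1] == (lit > 0) for lit in clause)
               for clause in clauses):
            return bits, checked
    return None, checked

# An unsatisfiable formula forces a full scan: 2 ** n_vars assignments.
for n in (8, 12, 16):
    _, checked = brute_force_sat([[1], [-1]], n)
    print(n, "variables:", checked, "assignments checked")
```

Each extra variable doubles the number of assignments, so 30 variables already mean over a billion candidates in the worst case; practical solvers rely on pruning and heuristics rather than exhaustive enumeration.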

Knowledge representation:

Knowledge representation and knowledge engineering allow AI programs to answer questions intelligently and make deductions about real-world facts. Formal knowledge representations are used in:

- content-based indexing and retrieval,

- scene interpretation,

- clinical decision support,

- knowledge discovery (mining "interesting" and actionable inferences from large databases),

- and other areas.

A knowledge base is a body of knowledge represented in a form that can be used by a program. An ontology is the set of objects, relations, concepts, and properties used by a particular domain of knowledge.

Knowledge bases need to represent things such as:

- objects,

- properties,

- categories and relations between objects,

- situations,

- events,

- states and time;

- causes and effects;

- knowledge about knowledge (what we know about what other people know);

- default reasoning (things that humans assume are true until they are told differently and will remain true even when other facts are changing);

- and many other aspects and domains of knowledge.
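As a toy illustration of how objects, categories, properties and default reasoning might fit together in a program, here is a minimal Python sketch, assuming a simple is-a hierarchy in which the most specific fact wins. The entities (`tweety`, `pingu`), the dictionaries `IS_A` and `PROPERTIES`, and the functions `ancestors` and `lookup` are all hypothetical; real knowledge representation systems use far richer formalisms (ontology languages, description logics, non-monotonic logics).

```python
# Hypothetical toy knowledge base: each object or category points to its parent category.
IS_A = {
    "tweety": "canary",
    "pingu": "penguin",
    "canary": "bird",
    "penguin": "bird",
    "bird": "animal",
}

# Properties stored on objects or categories. Entries on more specific categories
# override defaults inherited from more general ones.
PROPERTIES = {
    "animal": {"alive": True},
    "bird": {"can_fly": True, "has_feathers": True},  # default: birds fly
    "penguin": {"can_fly": False},                    # exception to the default
    "canary": {"color": "yellow"},
}

def ancestors(entity):
    """Return the entity followed by its categories, most specific first."""
    chain = [entity]
    while chain[-1] in IS_A:
        chain.append(IS_A[chain[-1]])
    return chain

def lookup(entity, prop):
    """Return the most specific value of prop for entity, or None if unknown."""
    for node in ancestors(entity):
        if prop in PROPERTIES.get(node, {}):
            return PROPERTIES[node][prop]
    return None

print(lookup("tweety", "can_fly"), lookup("pingu", "can_fly"))
```

`lookup("pingu", "can_fly")` returns `False` because the penguin exception overrides the bird default, while `lookup("tweety", "can_fly")` falls back to the inherited default `True`.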

Among the most difficult problems in knowledge representation (KR) are:

- the breadth of commonsense knowledge (the set of atomic facts that the average person knows is enormous);

- and the sub-symbolic form of most commonsense knowledge (much of what people know is not represented as "facts" or "statements" that they could express verbally).

Knowledge acquisition is the difficult problem of obtaining knowledge for AI applications. Modern AI gathers knowledge by "scraping" the internet (including Wikipedia). The knowledge itself was collected by the volunteers and professionals who published the information (who may or may not have agreed to provide their work to AI companies).

This "crowdsourced" technique does not guarantee that the knowledge is correct or reliable. The knowledge of large language models (such as ChatGPT) is highly unreliable: these models generate misinformation and falsehoods (known as "hallucinations"). Providing accurate knowledge for these modern AI applications is an unsolved problem.

Planning and decision making:

An "agent" is anything that perceives and takes actions in the world. A rational agent has goals or preferences and takes actions to make them happen:

- In automated planning, the agent has a specific goal.

- In automated decision making, the agent has preferences – there are some situations it would prefer to be in, and some situations it is trying to avoid.

The decision making agent assigns a number to each situation (called the "utility") that measures how much the agent prefers it. For each possible action, it can calculate the "expected utility": the utility of all possible outcomes of the action, weighted by the probability that the outcome will occur. It can then choose the action with the maximum expected utility.
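The expected-utility rule described above can be sketched in a few lines of Python. The umbrella scenario, its probabilities and utilities, and the names `expected_utility` and `best_action` are hypothetical, chosen only to show the arithmetic.

```python
def expected_utility(outcomes):
    """outcomes: list of (probability, utility) pairs for one action."""
    return sum(p * u for p, u in outcomes)

def best_action(actions):
    """Pick the action whose outcomes have the maximum expected utility."""
    return max(actions, key=lambda a: expected_utility(actions[a]))

# Hypothetical decision: 30% chance of rain; outcomes listed as (rain, no rain).
actions = {
    "take_umbrella": [(0.3, 70), (0.7, 80)],   # dry either way, but carrying it is a nuisance
    "no_umbrella":   [(0.3, 0), (0.7, 100)],   # soaked if it rains, best if it doesn't
}

print(best_action(actions))  # take_umbrella
```

Here taking the umbrella has expected utility 0.3 × 70 + 0.7 × 80 = 77, versus 0.3 × 0 + 0.7 × 100 = 70 without it, so the agent takes the umbrella.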

In classical planning, the agent knows exactly what the effect of any action will be. In most real-world problems, however, the agent may not be certain about the situation it is in (it is "unknown" or "unobservable") and it may not know for certain what will happen after each possible action (it is not "deterministic"). It must choose an action by making a probabilistic guess and then reassess the situation to see if the action worked.
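Because classical planning assumes deterministic actions, a plan can be found by plain search over states. Below is a minimal breadth-first planner in Python, assuming a hypothetical water-jug puzzle (a 4-liter jug A and a 3-liter jug B, goal: exactly 2 liters in A). The names `bfs_plan` and `jug_successors` are illustrative, and real planners typically use heuristic search over richer action representations.

```python
from collections import deque

def bfs_plan(start, is_goal, successors):
    """Breadth-first search for a shortest action sequence from start to a goal state.

    successors(state) yields (action, next_state) pairs; in classical planning each
    action has exactly one, known outcome.
    """
    frontier = deque([start])
    parent = {start: None}              # state -> (previous_state, action)
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            plan = []
            while parent[state] is not None:   # walk back to the start
                state, action = parent[state]
                plan.append(action)
            return plan[::-1]
        for action, nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = (state, action)
                frontier.append(nxt)
    return None                          # no plan reaches the goal

def jug_successors(state, caps=(4, 3)):
    """Hypothetical domain: state = (liters in jug A, liters in jug B)."""
    a, b = state
    cap_a, cap_b = caps
    pour_ab = min(a, cap_b - b)          # how much A can pour into B
    pour_ba = min(b, cap_a - a)          # how much B can pour into A
    yield "fill A", (cap_a, b)
    yield "fill B", (a, cap_b)
    yield "empty A", (0, b)
    yield "empty B", (a, 0)
    yield "pour A->B", (a - pour_ab, b + pour_ab)
    yield "pour B->A", (a + pour_ba, b - pour_ba)

plan = bfs_plan((0, 0), lambda s: s[0] == 2, jug_successors)
print(len(plan), plan)
```

Breadth-first search returns a shortest plan measured in number of actions; for this puzzle that is six actions.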

In some problems, the agent's preferences may be uncertain, especially if there are other agents or humans involved. These can be learned (e.g., with inverse reinforcement learning) or the agent can seek information to improve its preferences. Information value theory can be used to weigh the value of exploratory or experimental actions. The space of possible future actions and situations is typically intractably large, so the agents must take actions and evaluate situations while being uncertain what the outcome will be.
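Information value theory can be illustrated with the expected value of perfect information: how much better an agent expects to do if it could observe the uncertain state before acting. A minimal Python sketch, using a hypothetical weather scenario; the probabilities, utilities and function names are assumptions for illustration.

```python
# Hypothetical scenario: probability of each weather state and UTILITY[action][state].
P_STATE = {"rain": 0.3, "dry": 0.7}
UTILITY = {
    "take_umbrella": {"rain": 70, "dry": 80},
    "no_umbrella":   {"rain": 0, "dry": 100},
}

def expected_utility(action):
    return sum(p * UTILITY[action][s] for s, p in P_STATE.items())

def value_without_info():
    """Commit to a single action before observing the weather."""
    return max(expected_utility(a) for a in UTILITY)

def value_with_perfect_info():
    """Observe the weather first, then choose the best action for that state."""
    return sum(p * max(UTILITY[a][s] for a in UTILITY) for s, p in P_STATE.items())

# Expected value of perfect information: the most it is worth paying to observe first.
evpi = value_with_perfect_info() - value_without_info()
print(round(evpi, 6))  # 14.0
```

Acting immediately, the best expected utility is 77; observing the weather first raises it to 0.3 × 70 + 0.7 × 100 = 91, so perfect information is worth 14 utility units, an upper bound on what an exploratory action such as checking a forecast is worth in this scenario.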

A Markov decision process has a transition model that describes the probability that a particular action will change the state in a particular way, and a reward function that supplies the utility of each state and the cost of each action. A policy associates a decision with each possible state.

The policy could be calculated (e.g., by iteration), be heuristic, or be learned.
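One way a policy can be calculated by iteration is value iteration, which repeatedly applies the Bellman update until the state values settle and then picks the best action in each state. The sketch below assumes a hypothetical four-state corridor (moves succeed with probability 0.8, each move costs 1, reaching state 3 pays 10, discount factor 0.9); none of these numbers come from the source, and the function names are illustrative.

```python
# Hypothetical corridor MDP: states 0..3, state 3 is the absorbing goal.
GAMMA = 0.9                     # discount factor (assumed)
STATES = [0, 1, 2, 3]
MDP_ACTIONS = ["left", "right"]
GOAL = 3

def transitions(s, a):
    """Transition model: list of (probability, next_state, reward) triples."""
    if s == GOAL:
        return [(1.0, GOAL, 0.0)]          # absorbing: no further reward
    target = min(s + 1, GOAL) if a == "right" else max(s - 1, 0)
    outcomes = []
    for p, nxt in ((0.8, target), (0.2, s)):   # a move fails 20% of the time
        reward = -1.0 + (10.0 if nxt == GOAL else 0.0)  # move cost plus goal reward
        outcomes.append((p, nxt, reward))
    return outcomes

def q_value(V, s, a):
    """Expected discounted return of taking action a in state s, then following V."""
    return sum(p * (r + GAMMA * V[nxt]) for p, nxt, r in transitions(s, a))

def value_iteration(tol=1e-8):
    V = {s: 0.0 for s in STATES}
    while True:
        delta = 0.0
        for s in STATES:                    # in-place (Gauss-Seidel style) sweep
            best = max(q_value(V, s, a) for a in MDP_ACTIONS)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    # Greedy policy: the action with the highest Q-value in each non-goal state.
    policy = {s: max(MDP_ACTIONS, key=lambda a: q_value(V, s, a))
              for s in STATES if s != GOAL}
    return V, policy

V, policy = value_iteration()
print(policy)  # {0: 'right', 1: 'right', 2: 'right'}
```

This variant updates values in place during each sweep, which still converges when the discount factor is below 1; the resulting policy moves right in every non-goal state.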

Game theory describes rational behavior of multiple interacting agents, and is used in AI programs that make decisions that involve other agents.
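A small example of the game-theoretic reasoning mentioned above: a Python sketch that finds the pure-strategy Nash equilibria of a two-player game by checking that neither player can gain by changing only its own action. The payoffs are the textbook prisoner's dilemma values; the names `PD_ACTIONS`, `PAYOFF` and `pure_nash_equilibria` are hypothetical.

```python
from itertools import product

# PAYOFF[(row_action, col_action)] = (row_payoff, col_payoff)
PD_ACTIONS = ["cooperate", "defect"]
PAYOFF = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"):    (0, 5),
    ("defect",    "cooperate"): (5, 0),
    ("defect",    "defect"):    (1, 1),
}

def pure_nash_equilibria(actions, payoff):
    """Return action pairs where each player's action is a best response to the other's."""
    equilibria = []
    for r, c in product(actions, repeat=2):
        row_ok = all(payoff[(r, c)][0] >= payoff[(r2, c)][0] for r2 in actions)
        col_ok = all(payoff[(r, c)][1] >= payoff[(r, c2)][1] for c2 in actions)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria

print(pure_nash_equilibria(PD_ACTIONS, PAYOFF))  # [('defect', 'defect')]
```

Mutual defection is the only equilibrium even though mutual cooperation would pay both players more, a standard illustration of why agents that interact need to model one another.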

Learning:

Machine learning is the study of programs that can improve their performance on a given task automatically. It has been a part of AI from the beginning.

There are several kinds of machine learning:

- Unsupervised learning analyzes a stream of data and finds patterns and makes predictions without any other guidance.

- Supervised learning requires a human to label the input data first, and comes in two main varieties: