Copyright © 2015 Bert N. Langford (Images may be subject to copyright. Please send feedback)

Welcome to Our Generation USA!

On this Page We Cover

The Best of The Internet.

Note that, however,

For Social Networking, click here

For Web-based Television, click here

For Internet Security, click here

Google: Search Engine and Multinational Technology Company.

YouTube Video: Introducing Google Gnome Game

YouTube Video: (Google) Waymo's fully self-driving cars are here

YouTube Video: Made by Google 2017 | Event highlights

Google LLC is an American multinational technology company that specializes in Internet-related services and products. These include the following:

Google was founded in 1998 by Larry Page and Sergey Brin while they were Ph.D. students at Stanford University, in California. Together, they own about 14 percent of its shares, and control 56 percent of the stockholder voting power through supervoting stock. They incorporated Google as a privately held company on September 4, 1998.

An initial public offering (IPO) took place on August 19, 2004, and Google moved to its new headquarters in Mountain View, California, nicknamed the Googleplex.

In August 2015, Google announced plans to reorganize its various interests as a conglomerate called Alphabet Inc. Google, Alphabet's leading subsidiary, will continue to be the umbrella company for Alphabet's Internet interests. Upon completion of the restructure, Sundar Pichai was appointed CEO of Google; he replaced Larry Page, who became CEO of Alphabet.

The company's rapid growth since incorporation has triggered a chain of products, acquisitions, and partnerships beyond Google's core search engine (Google Search).

It offers services designed for

The company leads the development of the Android mobile operating system, the Google Chrome web browser, and Chrome OS, a lightweight operating system based on the Chrome browser.

Google has moved increasingly into hardware; from 2010 to 2015, it partnered with major electronics manufacturers in the production of its Nexus devices, and in October 2016, it released multiple hardware products, including the following:

The new hardware chief, Rick Osterloh, stated: "a lot of the innovation that we want to do now ends up requiring controlling the end-to-end user experience". Google has also experimented with becoming an Internet carrier.

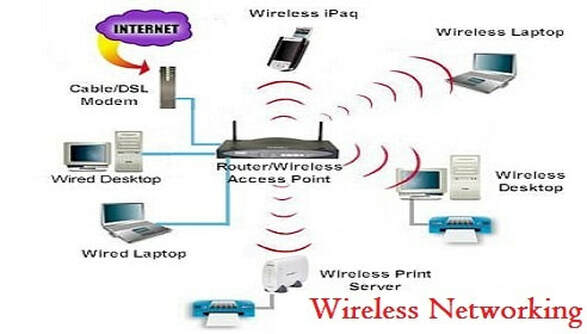

In February 2010, it announced Google Fiber, a fiber-optic infrastructure that was installed in Kansas City; in April 2015, it launched Project Fi in the United States, combining Wi-Fi and cellular networks from different providers; and in 2016, it announced the Google Station initiative to make public Wi-Fi available around the world, with initial deployment in India.

Alexa, a company that monitors commercial web traffic, lists Google.com as the most visited website in the world. Several other Google services also figure in the top 100 most visited websites, including YouTube and Blogger. Google is the most valuable brand in the world as of 2017, but has received significant criticism involving issues such as privacy concerns, tax avoidance, antitrust, censorship, and search neutrality.

Google's mission statement, from the outset, was "to organize the world's information and make it universally accessible and useful", and its unofficial slogan was "Don't be evil". In October 2015, the motto was replaced in the Alphabet corporate code of conduct by the phrase "Do the right thing".

Click on any of the following blue hyperlinks for more about the company "Google"

- online advertising technologies,

- search,

- cloud computing,

- software,

- and hardware.

Google was founded in 1998 by Larry Page and Sergey Brin while they were Ph.D. students at Stanford University, in California. Together, they own about 14 percent of its shares, and control 56 percent of the stockholder voting power through supervoting stock. They incorporated Google as a privately held company on September 4, 1998.

An initial public offering (IPO) took place on August 19, 2004, and Google moved to its new headquarters in Mountain View, California, nicknamed the Googleplex.

In August 2015, Google announced plans to reorganize its various interests as a conglomerate called Alphabet Inc. Google, Alphabet's leading subsidiary, will continue to be the umbrella company for Alphabet's Internet interests. Upon completion of the restructure, Sundar Pichai was appointed CEO of Google; he replaced Larry Page, who became CEO of Alphabet.

The company's rapid growth since incorporation has triggered a chain of products, acquisitions, and partnerships beyond Google's core search engine (Google Search).

It offers services designed for

- work and productivity:

- email (Gmail/Inbox),

- scheduling and time management (Google Calendar),

- cloud storage (Google Drive),

- social networking (Google+),

- instant messaging and video chat (Google Allo/Duo/Hangouts),

- language translation (Google Translate),

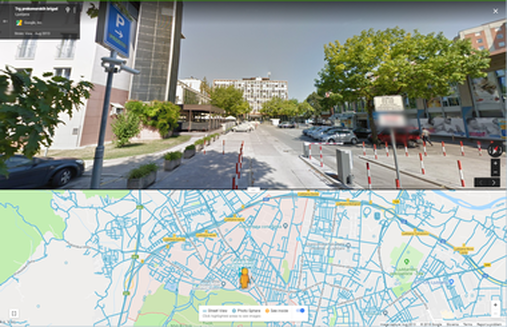

- mapping and turn-by-turn navigation (Google Maps/Waze/Earth/Street View),

- video sharing (YouTube),

- notetaking (Google Keep),

- and photo organizing and editing (Google Photos).

The company leads the development of the Android mobile operating system, the Google Chrome web browser, and Chrome OS, a lightweight operating system based on the Chrome browser.

Google has moved increasingly into hardware; from 2010 to 2015, it partnered with major electronics manufacturers in the production of its Nexus devices, and in October 2016, it released multiple hardware products, including the following:

- Google Pixel smartphone,

- Home smart speaker,

- Wifi mesh wireless router,

- and Daydream View virtual reality headset.

The new hardware chief, Rick Osterloh, stated: "a lot of the innovation that we want to do now ends up requiring controlling the end-to-end user experience". Google has also experimented with becoming an Internet carrier.

In February 2010, it announced Google Fiber, a fiber-optic infrastructure that was installed in Kansas City; in April 2015, it launched Project Fi in the United States, combining Wi-Fi and cellular networks from different providers; and in 2016, it announced the Google Station initiative to make public Wi-Fi available around the world, with initial deployment in India.

Alexa, a company that monitors commercial web traffic, lists Google.com as the most visited website in the world. Several other Google services also figure in the top 100 most visited websites, including YouTube and Blogger. Google is the most valuable brand in the world as of 2017, but has received significant criticism involving issues such as privacy concerns, tax avoidance, antitrust, censorship, and search neutrality.

Google's mission statement, from the outset, was "to organize the world's information and make it universally accessible and useful", and its unofficial slogan was "Don't be evil". In October 2015, the motto was replaced in the Alphabet corporate code of conduct by the phrase "Do the right thing".

Click on any of the following blue hyperlinks for more about the company "Google"

- History

- Products and services

- Corporate affairs and culture

- Criticism and controversy

- See also:

- AngularJS

- Comparison of web search engines

- Don't Be Evil

- Google (verb)

- Google Balloon Internet

- Google Catalogs

- Google China

- Google bomb

- Google Chrome Experiments

- Google Get Your Business Online

- Google logo

- Google Maps

- Google platform

- Google Street View

- Google tax

- Google Ventures – venture capital fund

- Google X

- Life sciences division of Google X

- Googlebot – web crawler

- Googlization

- List of Google apps for Android

- List of mergers and acquisitions by Alphabet

- Apple, Inc.

- Outline of Google

- Reunion

- Ungoogleable

- Surveillance capitalism

- Calico

- Official website

- Google website at the Wayback Machine (archived November 11, 1998)

- Google at CrunchBase

- Google companies grouped at OpenCorporates

- Business data for Google, Inc.:

YouTube Including a List of the most watched YouTube Videos

YouTube Video: HOW TO MAKE VIDEOS AND START A YOUTUBE CHANNEL

Video Sharing Website Ranked #2 by Alexa (#3 by SimilarWeb)

Click here for a List of the Most Watched YouTube Videos.

YouTube is an American video-sharing website headquartered in San Bruno, California. The service was created by three former PayPal employees — Chad Hurley, Steve Chen, and Jawed Karim — in February 2005. Google bought the site in November 2006 for US$1.65 billion; YouTube now operates as one of Google's subsidiaries.

YouTube allows users to upload, view, rate, share, add to favorites, report, comment on videos, and subscribe to other users. It uses WebM, H.264/MPEG-4 AVC, and Adobe Flash Video technology to display a wide variety of user-generated and corporate media videos.

Available content includes the following:

Most of the content on YouTube has been uploaded by individuals, but media corporations including CBS, the BBC, Vevo, and Hulu offer some of their material via YouTube as part of the YouTube partnership program.

Unregistered users can only watch videos on the site, while registered users are permitted to upload an unlimited number of videos and add comments to videos. Videos deemed potentially offensive are available only to registered users affirming themselves to be at least 18 years old.

YouTube earns advertising revenue from Google AdSense, a program which targets ads according to site content and audience. The vast majority of its videos are free to view, but there are exceptions, including subscription-based premium channels, film rentals, as well as YouTube Red, a subscription service offering ad-free access to the website and access to exclusive content made in partnership with existing users.

As of February 2017, there are more than 400 hours of content uploaded to YouTube each minute, and one billion hours of content are watched on YouTube every day. As of April 2017, the website is ranked as the second most popular site in the world by Alexa Internet, a web traffic analysis company.

Click on any of the following blue hyperlinks for more about YouTube Videos:

YouTube is an American video-sharing website headquartered in San Bruno, California. The service was created by three former PayPal employees — Chad Hurley, Steve Chen, and Jawed Karim — in February 2005. Google bought the site in November 2006 for US$1.65 billion; YouTube now operates as one of Google's subsidiaries.

YouTube allows users to upload, view, rate, share, add to favorites, report, comment on videos, and subscribe to other users. It uses WebM, H.264/MPEG-4 AVC, and Adobe Flash Video technology to display a wide variety of user-generated and corporate media videos.

Available content includes the following:

- video clips,

- TV show clips,

- music videos,

- short and documentary films,

- audio recordings,

- movie trailers,

- video blogging,

- short original videos,

- and educational videos.

Most of the content on YouTube has been uploaded by individuals, but media corporations including CBS, the BBC, Vevo, and Hulu offer some of their material via YouTube as part of the YouTube partnership program.

Unregistered users can only watch videos on the site, while registered users are permitted to upload an unlimited number of videos and add comments to videos. Videos deemed potentially offensive are available only to registered users affirming themselves to be at least 18 years old.

YouTube earns advertising revenue from Google AdSense, a program which targets ads according to site content and audience. The vast majority of its videos are free to view, but there are exceptions, including subscription-based premium channels, film rentals, as well as YouTube Red, a subscription service offering ad-free access to the website and access to exclusive content made in partnership with existing users.

As of February 2017, there are more than 400 hours of content uploaded to YouTube each minute, and one billion hours of content are watched on YouTube every day. As of April 2017, the website is ranked as the second most popular site in the world by Alexa Internet, a web traffic analysis company.

Click on any of the following blue hyperlinks for more about YouTube Videos:

- Company history

- Features

- Video technology

- Playback

- Uploading

Quality and formats

3D videos

360° videos

- User features

- Content accessibility

- Localization

- YouTube Red

- YouTube TV

- Video technology

- Social impact

- Revenue

- Community policy

- Censorship and filtering

- Music Key licensing

- NSA Prism program

- April Fools

- CNN-YouTube presidential debates

- List of YouTubers

- BookTube

- Ouellette v. Viacom International Inc.

- Reply Girls

- YouTube Awards

- YouTube Instant

- YouTube Live

- YouTube Multi Channel Network

- YouTube Symphony Orchestra

- Viacom International Inc. v. YouTube, Inc.

- Alternative media

- Comparison of video hosting services

- List of Internet phenomena

- List of video hosting services

- YouTube on Blogger

- Press room – YouTube

- YouTube – Google Developers

- Haran, Brady; Hamilton, Ted. "Why do YouTube views freeze at 301?". Numberphile. Brady Haran.

- Dickey, Megan Rose (February 15, 2013). "The 22 Key Turning Points in the History of YouTube". Business Insider. Axel Springer SE. Retrieved March 25, 2017.

- Are Youtubers Revolutionizing Entertainment? (June 6, 2013), video produced for PBS by Off Book.

- First Youtube video ever

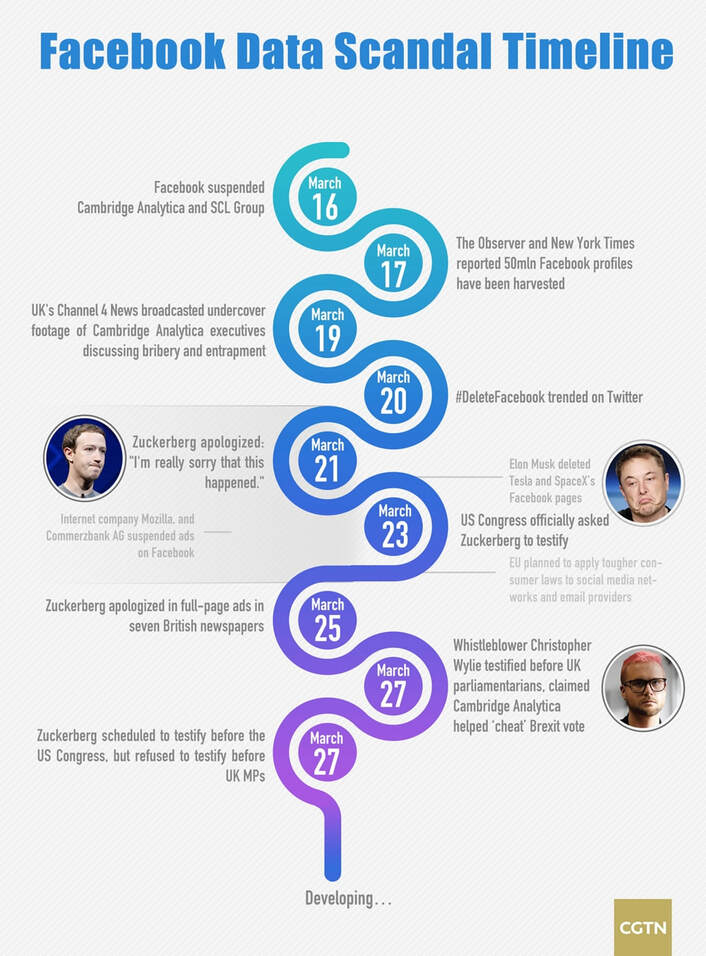

Facebook and its owner Meta Platforms Pictured below: Infographic: Timeline of Facebook data scandal

Facebook is an online social media and social networking service owned by American company Meta Platforms (see below).

Founded in 2004 by Mark Zuckerberg with fellow Harvard College students and roommates Eduardo Saverin, Andrew McCollum, Dustin Moskovitz, and Chris Hughes, its name comes from the face book directories often given to American university students.

Membership was initially limited to Harvard students, gradually expanding to other North American universities and, since 2006, anyone over 13 years old.

As of July 2022, Facebook claimed 2.93 billion monthly active users, and ranked third worldwide among the most visited websites as of July 2022. It was the most downloaded mobile app of the 2010s.

Facebook can be accessed from devices with Internet connectivity, such as personal computers, tablets and smartphones. After registering, users can create a profile revealing information about themselves. They can post text, photos and multimedia which are shared with any other users who have agreed to be their "friend" or, with different privacy settings, publicly.

Users can also communicate directly with each other with Facebook Messenger, join common-interest groups, and receive notifications on the activities of their Facebook friends and the pages they follow.

The subject of numerous controversies, Facebook has often been criticized over issues such as user privacy (as with the Cambridge Analytica data scandal), political manipulation (as with the 2016 U.S. elections) and mass surveillance.

Posts originating from the Facebook page of Breitbart News, a media organization previously affiliated with Cambridge Analytica, are currently among the most widely shared political content on Facebook.

Facebook has also been subject to criticism over psychological effects such as addiction and low self-esteem, and various controversies over content such as fake news, conspiracy theories, copyright infringement, and hate speech.

Commentators have accused Facebook of willingly facilitating the spread of such content, as well as exaggerating its number of users to appeal to advertisers

Click on any of the following blue hyperlinks for more about Facebook:

Meta Platforms, Inc., doing business as Meta and formerly named Facebook, Inc., and TheFacebook, Inc., is an American multinational technology conglomerate based in Menlo Park, California.

The company owns Facebook, Instagram, and WhatsApp, among other products and services. Meta was once one of the world's most valuable companies, but as of 2022 is not one of the top twenty biggest companies in the United States.

Meta is considered one of the Big Five American information technology companies, alongside Alphabet (Google), Amazon, Apple, and Microsoft.

As of 2022, it is the least profitable of the five.

Meta's products and services include Facebook, Messenger, Facebook Watch, and Meta Portal. It has also acquired Oculus, Giphy, Mapillary, Kustomer, Presize and has a 9.99% stake in Jio Platforms. In 2021, the company generated 97.5% of its revenue from the sale of advertising.

In October 2021, the parent company of Facebook changed its name from Facebook, Inc., to Meta Platforms, Inc., to "reflect its focus on building the metaverse". According to Meta, the "metaverse" refers to the integrated environment that links all of the company's products and services.

Click on any of the following blue hyperlinks for more about Meta Platforms, Inc.:

Founded in 2004 by Mark Zuckerberg with fellow Harvard College students and roommates Eduardo Saverin, Andrew McCollum, Dustin Moskovitz, and Chris Hughes, its name comes from the face book directories often given to American university students.

Membership was initially limited to Harvard students, gradually expanding to other North American universities and, since 2006, anyone over 13 years old.

As of July 2022, Facebook claimed 2.93 billion monthly active users, and ranked third worldwide among the most visited websites as of July 2022. It was the most downloaded mobile app of the 2010s.

Facebook can be accessed from devices with Internet connectivity, such as personal computers, tablets and smartphones. After registering, users can create a profile revealing information about themselves. They can post text, photos and multimedia which are shared with any other users who have agreed to be their "friend" or, with different privacy settings, publicly.

Users can also communicate directly with each other with Facebook Messenger, join common-interest groups, and receive notifications on the activities of their Facebook friends and the pages they follow.

The subject of numerous controversies, Facebook has often been criticized over issues such as user privacy (as with the Cambridge Analytica data scandal), political manipulation (as with the 2016 U.S. elections) and mass surveillance.

Posts originating from the Facebook page of Breitbart News, a media organization previously affiliated with Cambridge Analytica, are currently among the most widely shared political content on Facebook.

Facebook has also been subject to criticism over psychological effects such as addiction and low self-esteem, and various controversies over content such as fake news, conspiracy theories, copyright infringement, and hate speech.

Commentators have accused Facebook of willingly facilitating the spread of such content, as well as exaggerating its number of users to appeal to advertisers

Click on any of the following blue hyperlinks for more about Facebook:

- History

- 2003–2006: Thefacebook, Thiel investment, and name change

- 2006–2012: Public access, Microsoft alliance, and rapid growth

- 2012–2013: IPO, lawsuits, and one billion active users

- 2013–2014: Site developments, A4AI, and 10th anniversary

- 2015–2020: Algorithm revision; fake news

- 2020–present: FTC lawsuit, corporate re-branding, shut down of facial recognition technology, ease of policy

- Website

- Reception

- Criticisms and controversies

- Impact

- See also:

Meta Platforms, Inc., doing business as Meta and formerly named Facebook, Inc., and TheFacebook, Inc., is an American multinational technology conglomerate based in Menlo Park, California.

The company owns Facebook, Instagram, and WhatsApp, among other products and services. Meta was once one of the world's most valuable companies, but as of 2022 is not one of the top twenty biggest companies in the United States.

Meta is considered one of the Big Five American information technology companies, alongside Alphabet (Google), Amazon, Apple, and Microsoft.

As of 2022, it is the least profitable of the five.

Meta's products and services include Facebook, Messenger, Facebook Watch, and Meta Portal. It has also acquired Oculus, Giphy, Mapillary, Kustomer, Presize and has a 9.99% stake in Jio Platforms. In 2021, the company generated 97.5% of its revenue from the sale of advertising.

In October 2021, the parent company of Facebook changed its name from Facebook, Inc., to Meta Platforms, Inc., to "reflect its focus on building the metaverse". According to Meta, the "metaverse" refers to the integrated environment that links all of the company's products and services.

Click on any of the following blue hyperlinks for more about Meta Platforms, Inc.:

- History

- Mergers and acquisitions

- Lobbying

- Lawsuits

- Structure

- Revenue

- Facilities

- Reception

- See also:

- Big Tech

- Criticism of Facebook

- Facebook–Cambridge Analytica data scandal

- 2021 Facebook leak

- Meta AI

- The Social Network

- Official website

- Meta Platforms companies grouped at OpenCorporates

- Business data for Meta Platforms, Inc.:

Yahoo!

Portal and media Website Ranked #5 by both Alexa and SimilarWeb.

YouTube Video Yahoo!: What It's Really Like To Buy A Tesla

Yahoo Inc. (styled as Yahoo!) is an American multinational technology company headquartered in Sunnyvale, California.

It is globally known for its Web portal, search engine Yahoo! Search, and related services, including:

- Yahoo! Directory,

- Yahoo! Mail,

- Yahoo! News,

- Yahoo! Finance,

- Yahoo! Groups,

- Yahoo! Answers,

- advertising,

- online mapping,

- video sharing,

- fantasy sports

- and its social media website.

It is one of the most popular sites in the United States. According to third-party web analytics providers, Alexa and SimilarWeb, Yahoo! is the highest-read news and media website, with over 7 billion readers per month, being the fourth most visited website globally, as of June 2015.

According to news sources, roughly 700 million people visit Yahoo websites every month. Yahoo itself claims it attracts "more than half a billion consumers every month in more than 30 languages."

Yahoo was founded by Jerry Yang and David Filo in January 1994 and was incorporated on March 2, 1995. Marissa Mayer, a former Google executive, serves as CEO and President of the company.

In January 2015, the company announced it planned to spin-off its stake in Alibaba Group in a separately listed company. In December 2015 it reversed this decision, opting instead to spin-off its internet business as a separate company.

Amazon including its founder, Jeff Bezos

YouTube by Billionaire Jeff Bezos in Starting Amazon

Amazon.com, Inc., doing business as Amazon, is an American electronic commerce and cloud computing company based in Seattle, Washington, that was founded by Jeff Bezos [see next topic below] on July 5, 1994.

The tech giant is the largest Internet retailer in the world as measured by revenue and market capitalization, and second largest after Alibaba Group in terms of total sales.

The Amazon.com website started as an online bookstore and later diversified to sell the following:

The company also owns/produces the following:

Amazon is the world's largest provider of cloud infrastructure services (IaaS and PaaS) through its AWS subsidiary. Amazon also sells certain low-end products under its in-house brand AmazonBasics.

Amazon has separate retail websites for the United States, the United Kingdom and Ireland, France, Canada, Germany, Italy, Spain, Netherlands, Australia, Brazil, Japan, China, India, Mexico, Singapore, and Turkey. In 2016, Dutch, Polish, and Turkish language versions of the German Amazon website were also launched. Amazon also offers international shipping of some of its products to certain other countries.

In 2015, Amazon surpassed Walmart as the most valuable retailer in the United States by market capitalization.

Amazon is:

In 2017, Amazon acquired Whole Foods Market for $13.4 billion, which vastly increased Amazon's presence as a brick-and-mortar retailer. The acquisition was interpreted by some as a direct attempt to challenge Walmart's traditional retail stores.

In 2018, for the first time, Jeff Bezos released in Amazon's shareholder letter the number of Amazon Prime subscribers, which is 100 million worldwide.

In 2018, Amazon.com contributed US$1 million to the Wikimedia Endowment.

In November 2018, Amazon announced it would be splitting its second headquarters project between two cities. They are currently in the finalization stage of the process.

Click on any of the following blue hyperlinks for more about Amazon, Inc.:

Jeffrey Preston Bezos (né Jorgensen; born January 12, 1964) is an American technology and retail entrepreneur, investor, electrical engineer, computer scientist, and philanthropist, best known as the founder, chairman, and chief executive officer of Amazon.com, the world's largest online shopping retailer.

The company began as an Internet merchant of books and expanded to a wide variety of products and services, most recently video and audio streaming. Amazon.com is currently the world's largest Internet sales company on the World Wide Web, as well as the world's largest provider of cloud infrastructure services, which is available through its Amazon Web Services arm.

Bezos' other diversified business interests include aerospace and newspapers. He is the founder and manufacturer of Blue Origin (founded in 2000) with test flights to space which started in 2015, and plans for commercial suborbital human spaceflight beginning in 2018.

In 2013, Bezos purchased The Washington Post newspaper. A number of other business investments are managed through Bezos Expeditions.

When the financial markets opened on July 27, 2017, Bezos briefly surpassed Bill Gates on the Forbes list of billionaires to become the world's richest person, with an estimated net worth of just over $90 billion. He lost the title later in the day when Amazon's stock dropped, returning him to second place with a net worth just below $90 billion.

On October 27, 2017, Bezos again surpassed Gates on the Forbes list as the richest person in the world. Bezos's net worth surpassed $100 billion for the first time on November 24, 2017 after Amazon's share price increased by more than 2.5%.

Click on any of the following blue hyperlinks for more about Jeff Bezos:

The tech giant is the largest Internet retailer in the world as measured by revenue and market capitalization, and second largest after Alibaba Group in terms of total sales.

The Amazon.com website started as an online bookstore and later diversified to sell the following:

- video downloads/streaming,

- MP3 downloads/streaming,

- audiobook downloads/streaming,

- software,

- video games,

- electronics,

- apparel,

- furniture,

- food,

- toys,

- and jewelry.

The company also owns/produces the following:

- a publishing arm, Amazon Publishing,

- a film and television studio, Amazon Studios,

- produces consumer electronics lines including;

Amazon is the world's largest provider of cloud infrastructure services (IaaS and PaaS) through its AWS subsidiary. Amazon also sells certain low-end products under its in-house brand AmazonBasics.

Amazon has separate retail websites for the United States, the United Kingdom and Ireland, France, Canada, Germany, Italy, Spain, Netherlands, Australia, Brazil, Japan, China, India, Mexico, Singapore, and Turkey. In 2016, Dutch, Polish, and Turkish language versions of the German Amazon website were also launched. Amazon also offers international shipping of some of its products to certain other countries.

In 2015, Amazon surpassed Walmart as the most valuable retailer in the United States by market capitalization.

Amazon is:

- the third most valuable public company in the United States (behind Apple and Microsoft),

- the largest Internet company by revenue in the world,

- and after Walmart, the second largest employer in the United States.

In 2017, Amazon acquired Whole Foods Market for $13.4 billion, which vastly increased Amazon's presence as a brick-and-mortar retailer. The acquisition was interpreted by some as a direct attempt to challenge Walmart's traditional retail stores.

In 2018, for the first time, Jeff Bezos released in Amazon's shareholder letter the number of Amazon Prime subscribers, which is 100 million worldwide.

In 2018, Amazon.com contributed US$1 million to the Wikimedia Endowment.

In November 2018, Amazon announced it would be splitting its second headquarters project between two cities. They are currently in the finalization stage of the process.

Click on any of the following blue hyperlinks for more about Amazon, Inc.:

- History

- Choosing a name

Online bookstore and IPO

2000's

2010 to present

Amazon Go

Amazon 4-Star

Mergers and acquisitions

- Choosing a name

- Board of directors

- Merchant partnerships

- Products and services

- Subsidiaries

- Website at www.amazon.com

- Amazon sales rank

- Technology

- Multi-level sales strategy

- Finances

- October 2018 wage increase

- Controversies

- Notable businesses founded by former employees

- See also:

- Amazon Breakthrough Novel Award

- Amazon Flexible Payments Service

- Amazon Marketplace

- Amazon Standard Identification Number (ASIN)

- List of book distributors

- Statistically improbable phrases – Amazon.com's phrase extraction technique for indexing books

- Amazon (company) companies grouped at OpenCorporates

- Business data for Amazon.com, Inc.: Google Finance

Jeffrey Preston Bezos (né Jorgensen; born January 12, 1964) is an American technology and retail entrepreneur, investor, electrical engineer, computer scientist, and philanthropist, best known as the founder, chairman, and chief executive officer of Amazon.com, the world's largest online shopping retailer.

The company began as an Internet merchant of books and expanded to a wide variety of products and services, most recently video and audio streaming. Amazon.com is currently the world's largest Internet sales company on the World Wide Web, as well as the world's largest provider of cloud infrastructure services, which is available through its Amazon Web Services arm.

Bezos' other diversified business interests include aerospace and newspapers. He is the founder and manufacturer of Blue Origin (founded in 2000) with test flights to space which started in 2015, and plans for commercial suborbital human spaceflight beginning in 2018.

In 2013, Bezos purchased The Washington Post newspaper. A number of other business investments are managed through Bezos Expeditions.

When the financial markets opened on July 27, 2017, Bezos briefly surpassed Bill Gates on the Forbes list of billionaires to become the world's richest person, with an estimated net worth of just over $90 billion. He lost the title later in the day when Amazon's stock dropped, returning him to second place with a net worth just below $90 billion.

On October 27, 2017, Bezos again surpassed Gates on the Forbes list as the richest person in the world. Bezos's net worth surpassed $100 billion for the first time on November 24, 2017 after Amazon's share price increased by more than 2.5%.

Click on any of the following blue hyperlinks for more about Jeff Bezos:

- Early life and education

- Business career

- Philanthropy

- Recognition

- Criticism

- Personal life

- Politics

- See also:

Twitter

Ranked #10 by Alexa and #11 by SimilarWeb

YouTube Video: How to Use Twitter

Twitter is an online social networking service that enables users to send and read short 280-character messages called "tweets".

Registered users can read and post tweets, but those who are unregistered can only read them. Users access Twitter through the website interface, SMS or mobile device app.

Twitter Inc. is based in San Francisco and has more than 25 offices around the world. Twitter was created in March 2006 by Jack Dorsey, Evan Williams, Biz Stone, and Noah Glass and launched in July 2006.

The service rapidly gained worldwide popularity, with more than 100 million users posting 340 million tweets a day in 2012. The service also handled 1.6 billion search queries per day.

In 2013, Twitter was one of the ten most-visited websites and has been described as "the SMS of the Internet". As of May 2015, Twitter has more than 500 million users, out of which more than 332 million are active.

Registered users can read and post tweets, but those who are unregistered can only read them. Users access Twitter through the website interface, SMS or mobile device app.

Twitter Inc. is based in San Francisco and has more than 25 offices around the world. Twitter was created in March 2006 by Jack Dorsey, Evan Williams, Biz Stone, and Noah Glass and launched in July 2006.

The service rapidly gained worldwide popularity, with more than 100 million users posting 340 million tweets a day in 2012. The service also handled 1.6 billion search queries per day.

In 2013, Twitter was one of the ten most-visited websites and has been described as "the SMS of the Internet". As of May 2015, Twitter has more than 500 million users, out of which more than 332 million are active.

List of Most Subscribed Users onYouTube

YouTube Music Video by Taylor Swift performing 22

Pictured: LEFT: PewDiePie (43 Million Followers) and RIGHT: Rihanna (20 Million Followers)

This list of the most subscribed users on YouTube contains representations of the channels with the most subscribers on the video platform YouTube.

The ability to "subscribe" to a user's videos was added to YouTube by late October 2005, The "most subscribed" list on YouTube began being listed by a chart on the site by May 2006, at which time Smosh was #1 with fewer than 3,000 subscribers. As of April 5, 2016, the most subscribed user is PewDiePie, with over 42 million subscribers. The PewDiePie channel has held the peak position since December 22, 2013 (2 years, 3 months and 14 days), when it surpassed YouTube's Spotlight channel.

This list depicts the 25 most subscribed channels on YouTube as of March 10, 2016. This lists omits "channels", and instead only includes "users". A "user" is defined as a channel that has released videos. "Channels" that have released zero videos, such as #Music, #Gaming, or #Sports, are not included on this list, even if they have more subscribers than the users on this list. Additionally, these subscriber counts are approximations.

Reactions

In late 2006 when Peter Oakley aka Geriatric1927 became most subscribed, a number of TV channels wanted to interview him on his rise to fame. The Daily Mail and TechBlog did an article about him and his success. In 2009, the FRED channel was the first channel to have over one million subscribers.

Following the third time that the user Smosh became most subscribed, Ray William Johnson collaborated with the duo.

A flurry of top YouTubers including Ryan Higa, Shane Dawson, Felix Kjellberg, Michael Buckley, Kassem Gharaibeh, The Fine Brothers, and Johnson himself, congratulated the duo shortly after they surpassed Johnson as the most subscribed channel.

Following Felix Kjellberg's positioning at the top of YouTube, Variety heavily criticized the Swede's videos.

See also

The ability to "subscribe" to a user's videos was added to YouTube by late October 2005, The "most subscribed" list on YouTube began being listed by a chart on the site by May 2006, at which time Smosh was #1 with fewer than 3,000 subscribers. As of April 5, 2016, the most subscribed user is PewDiePie, with over 42 million subscribers. The PewDiePie channel has held the peak position since December 22, 2013 (2 years, 3 months and 14 days), when it surpassed YouTube's Spotlight channel.

This list depicts the 25 most subscribed channels on YouTube as of March 10, 2016. This lists omits "channels", and instead only includes "users". A "user" is defined as a channel that has released videos. "Channels" that have released zero videos, such as #Music, #Gaming, or #Sports, are not included on this list, even if they have more subscribers than the users on this list. Additionally, these subscriber counts are approximations.

Reactions

In late 2006 when Peter Oakley aka Geriatric1927 became most subscribed, a number of TV channels wanted to interview him on his rise to fame. The Daily Mail and TechBlog did an article about him and his success. In 2009, the FRED channel was the first channel to have over one million subscribers.

Following the third time that the user Smosh became most subscribed, Ray William Johnson collaborated with the duo.

A flurry of top YouTubers including Ryan Higa, Shane Dawson, Felix Kjellberg, Michael Buckley, Kassem Gharaibeh, The Fine Brothers, and Johnson himself, congratulated the duo shortly after they surpassed Johnson as the most subscribed channel.

Following Felix Kjellberg's positioning at the top of YouTube, Variety heavily criticized the Swede's videos.

See also

Vevo

YouTube Video: Lenny Kravitz - Lenny Kravitz Talks ‘Raise Vibration,’ And Why Love Still Rules

Vevo is a multinational video hosting service owned and operated by a joint venture of Universal Music Group (UMG), Google, Sony Music Entertainment (SME), and Abu Dhabi Media, and based in New York City.

Launched on December 8, 2009, Vevo hosts videos syndicated across the web, with Google and Vevo sharing the advertising revenue.

Vevo offers music videos from two of the "big three" major record labels, UMG and SME. EMI also licensed its library for Vevo shortly before launch; it was acquired by UMG in 2012.

Warner Music Group was initially reported to be considering hosting its content on the service, but formed an alliance with rival MTV Networks (now Viacom Media Networks). In August 2015, Vevo expressed interest in licensing music from Warner Music Group.

The concept for Vevo was described as being a streaming service for music videos (similar to the streaming service Hulu, a streaming service for movies and TV shows after they air), with the goal being to attract more high-end advertisers.

The site's other revenue sources include a merchandise store and referral links to purchase viewed songs on Amazon Music and iTunes.

UMG acquired the domain name vevo.com on November 20, 2008. SME reached a deal to add its content to the site in June 2009.

The site went live on December 8, 2009, and that same month became the number one most visited music site in the United States, overtaking MySpace Music.

In June 2012, Vevo launched its Certified awards, which honors artists with at least 100 million views on Vevo and its partners (including YouTube) through special features on the Vevo website.

Vevo TV:On March 12, 2013, Vevo launched Vevo TV, an advertising-supported internet television channel running 24 hours a day, featuring blocks of music videos and specials. The channel is only available to viewers in North America and Germany, with geographical IP address blocking being used to enforce the restriction. Vevo has planned launches in other countries.

After revamping its website, Vevo TV later branched off into three separate networks: Hits, Flow (hip hop and R&B), and Nashville (country music).

Availability:

Vevo is available in Belgium, Brazil, Canada, Chile, France, Germany, Ireland, Italy, Mexico, the Netherlands, New Zealand, Poland, Spain, the United Kingdom, and the United States. The website was scheduled to go worldwide in 2010, but as of January 1, 2016, it was still not available outside these countries.

Vevo's official blog cited licensing issues for the delay in the worldwide rollout. Most of Vevo's videos on YouTube are viewable by users in other countries, while others will produce the message "The uploader has not made this video available in your country."

The Vevo service in the United Kingdom and Ireland was launched on April 26, 2011.

On April 16, 2012, Vevo was launched in Australia and New Zealand by MCM Entertainment. On August 14, 2012, Brazil became the first Latin American country to have the service. It was expected to be launched in six more European and Latin American countries in 2012. Vevo launched in Spain, Italy, and France on November 15, 2012. Vevo launched in the Netherlands on April 3, 2013, and on May 17, 2013, also in Poland.

In September 29, 2013, Vevo updated its iOS application that now includes launching in Germany. On April 30, 2014, Vevo was launched in Mexico.

Vevo is also available for a range of platforms including Android, iOS, Windows Phone, Windows 8, Fire OS, Google TV, Apple TV, Boxee, Roku, Xbox 360, PlayStation 3, and PlayStation 4.

Edited content Versions of videos on Vevo with explicit content such as profanity may be edited, according to a company spokesperson, "to keep everything clean for broadcast, 'the MTV version.'" This allows Vevo to make their network more friendly to advertising partners such as McDonald's.

Vevo has stated that it does not have specific policies or a list of words that are forbidden. Some explicit videos are provided with uncut versions in addition to the edited version.

There is no formal rating system in place, aside from classifying videos as explicit or non-explicit, but discussions are taking place to create a rating system that allows users and advertisers to choose the level of profanity they are willing to accept.

24-Hour Vevo Record:

The 24-Hour Vevo Record, commonly referred to as the Vevo Record, is the record for the most views a music video associated with Vevo has received within 24 hours of its release.

The video that currently holds this record is "Hello" by Adele with 27.7 million views.

In 2012, Nicki Minaj's "Stupid Hoe" became one of the first Vevo music videos to receive a significant amount of media attention upon its release day, during which it accumulated 4.8 million views. The record has consistently been kept track of by Vevo ever since.

Total views of a video are counted from across all of Vevo's platforms, including YouTube, Yahoo! and other syndication partners.

On 14 April 2013, Psy's "Gentleman" unofficially broke the record by reaching 38.4 million views in its first 24 hours. However, this record is not acknowledged by Vevo because it was only associated with them four days after its release.

Minaj has broken the Vevo Record more than any other artist with three separate videos: "Stupid Hoe", "Beauty and a Beat" and "Anaconda". She has held the record for an accumulated 622 days.

Justin Bieber, One Direction and Miley Cyrus have all broken the record twice.

Launched on December 8, 2009, Vevo hosts videos syndicated across the web, with Google and Vevo sharing the advertising revenue.

Vevo offers music videos from two of the "big three" major record labels, UMG and SME. EMI also licensed its library for Vevo shortly before launch; it was acquired by UMG in 2012.

Warner Music Group was initially reported to be considering hosting its content on the service, but formed an alliance with rival MTV Networks (now Viacom Media Networks). In August 2015, Vevo expressed interest in licensing music from Warner Music Group.

The concept for Vevo was described as being a streaming service for music videos (similar to the streaming service Hulu, a streaming service for movies and TV shows after they air), with the goal being to attract more high-end advertisers.

The site's other revenue sources include a merchandise store and referral links to purchase viewed songs on Amazon Music and iTunes.

UMG acquired the domain name vevo.com on November 20, 2008. SME reached a deal to add its content to the site in June 2009.

The site went live on December 8, 2009, and that same month became the number one most visited music site in the United States, overtaking MySpace Music.

In June 2012, Vevo launched its Certified awards, which honors artists with at least 100 million views on Vevo and its partners (including YouTube) through special features on the Vevo website.

Vevo TV:On March 12, 2013, Vevo launched Vevo TV, an advertising-supported internet television channel running 24 hours a day, featuring blocks of music videos and specials. The channel is only available to viewers in North America and Germany, with geographical IP address blocking being used to enforce the restriction. Vevo has planned launches in other countries.

After revamping its website, Vevo TV later branched off into three separate networks: Hits, Flow (hip hop and R&B), and Nashville (country music).

Availability:

Vevo is available in Belgium, Brazil, Canada, Chile, France, Germany, Ireland, Italy, Mexico, the Netherlands, New Zealand, Poland, Spain, the United Kingdom, and the United States. The website was scheduled to go worldwide in 2010, but as of January 1, 2016, it was still not available outside these countries.

Vevo's official blog cited licensing issues for the delay in the worldwide rollout. Most of Vevo's videos on YouTube are viewable by users in other countries, while others will produce the message "The uploader has not made this video available in your country."

The Vevo service in the United Kingdom and Ireland was launched on April 26, 2011.

On April 16, 2012, Vevo was launched in Australia and New Zealand by MCM Entertainment. On August 14, 2012, Brazil became the first Latin American country to have the service. It was expected to be launched in six more European and Latin American countries in 2012. Vevo launched in Spain, Italy, and France on November 15, 2012. Vevo launched in the Netherlands on April 3, 2013, and on May 17, 2013, also in Poland.

In September 29, 2013, Vevo updated its iOS application that now includes launching in Germany. On April 30, 2014, Vevo was launched in Mexico.

Vevo is also available for a range of platforms including Android, iOS, Windows Phone, Windows 8, Fire OS, Google TV, Apple TV, Boxee, Roku, Xbox 360, PlayStation 3, and PlayStation 4.

Edited content Versions of videos on Vevo with explicit content such as profanity may be edited, according to a company spokesperson, "to keep everything clean for broadcast, 'the MTV version.'" This allows Vevo to make their network more friendly to advertising partners such as McDonald's.

Vevo has stated that it does not have specific policies or a list of words that are forbidden. Some explicit videos are provided with uncut versions in addition to the edited version.

There is no formal rating system in place, aside from classifying videos as explicit or non-explicit, but discussions are taking place to create a rating system that allows users and advertisers to choose the level of profanity they are willing to accept.

24-Hour Vevo Record:

The 24-Hour Vevo Record, commonly referred to as the Vevo Record, is the record for the most views a music video associated with Vevo has received within 24 hours of its release.

The video that currently holds this record is "Hello" by Adele with 27.7 million views.

In 2012, Nicki Minaj's "Stupid Hoe" became one of the first Vevo music videos to receive a significant amount of media attention upon its release day, during which it accumulated 4.8 million views. The record has consistently been kept track of by Vevo ever since.

Total views of a video are counted from across all of Vevo's platforms, including YouTube, Yahoo! and other syndication partners.

On 14 April 2013, Psy's "Gentleman" unofficially broke the record by reaching 38.4 million views in its first 24 hours. However, this record is not acknowledged by Vevo because it was only associated with them four days after its release.

Minaj has broken the Vevo Record more than any other artist with three separate videos: "Stupid Hoe", "Beauty and a Beat" and "Anaconda". She has held the record for an accumulated 622 days.

Justin Bieber, One Direction and Miley Cyrus have all broken the record twice.

Alexa Internet

YouTube Video: The Secret to Becoming the Top Website in Any Popular Niche 2017 - Better Alexa Rank

Alexa Internet, Inc. is a California-based company that provides commercial web traffic data and analytics. It is a wholly owned subsidiary of Amazon.com.

Founded as an independent company in 1996, Alexa was acquired by Amazon in 1999. Its toolbar collects data on browsing behavior and transmits them to the Alexa website, where they are stored and analyzed, forming the basis for the company's web traffic reporting. According to its website, Alexa provides traffic data, global rankings and other information on 30 million websites, and as of 2015 its website is visited by over 6.5 million people monthly.

Alexa Internet was founded in April 1996 by American web entrepreneurs Brewster Kahle and Bruce Gilliat. The company's name was chosen in homage to the Library of Alexandria of Ptolemaic Egypt, drawing a parallel between the largest repository of knowledge in the ancient world and the potential of the Internet to become a similar store of knowledge.

Alexa initially offered a toolbar that gave Internet users suggestions on where to go next, based on the traffic patterns of its user community. The company also offered context for each site visited: to whom it was registered, how many pages it had, how many other sites pointed to it, and how frequently it was updated.

Alexa's operations grew to include archiving of web pages as they are crawled. This database served as the basis for the creation of the Internet Archive accessible through the Wayback Machine. In 1998, the company donated a copy of the archive, two terabytes in size, to the Library of Congress. Alexa continues to supply the Internet Archive with Web crawls.

In 1999, as the company moved away from its original vision of providing an "intelligent" search engine, Alexa was acquired by Amazon.com for approximately US$250 million in Amazon stock.

Alexa began a partnership with Google in early 2002, and with the web directory DMOZ in January 2003. In May 2006, Amazon replaced Google with Bing (at the time known as Windows Live Search) as a provider of search results.

In December 2006, Amazon released Alexa Image Search. Built in-house, it was the first major application built on the company's Web platform.

In December 2005, Alexa opened its extensive search index and Web-crawling facilities to third party programs through a comprehensive set of Web services and APIs. These could be used, for instance, to construct vertical search engines that could run on Alexa's own servers or elsewhere. In May 2007, Alexa changed their API to limit comparisons to three websites, reduce the size of embedded graphs in Flash, and add mandatory embedded BritePic advertisements.

In April 2007, the lawsuit Alexa v. Hornbaker was filed to stop trademark infringement by the Statsaholic service. In the lawsuit, Alexa alleged that Hornbaker was stealing traffic graphs for profit, and that the primary purpose of his site was to display graphs that were generated by Alexa's servers. Hornbaker removed the term Alexa from his service name on March 19, 2007.

On November 27, 2008, Amazon announced that Alexa Web Search was no longer accepting new customers, and that the service would be deprecated or discontinued for existing customers on January 26, 2009. Thereafter, Alexa became a purely analytics-focused company.

On March 31, 2009, Alexa launched a major website redesign. The redesigned site provided new web traffic metrics—including average page views per individual user, bounce rate, and user time on site. In the following weeks, Alexa added more features, including visitor demographics, clickstream and search traffic statistics. Alexa introduced these new features to compete with other web analytics services.

Tracking:

Toolbar: Alexa ranks sites based primarily on tracking a sample set of internet traffic—users of its toolbar for the Internet Explorer, Firefox and Google Chrome web browsers.

The Alexa Toolbar includes a popup blocker, a search box, links to Amazon.com and the Alexa homepage, and the Alexa ranking of the site that the user is visiting. It also allows the user to rate the site and view links to external, relevant sites.

In early 2005, Alexa stated that there had been 10 million downloads of the toolbar, though the company did not provide statistics about active usage. Originally, web pages were only ranked amongst users who had the Alexa Toolbar installed, and could be biased if a specific audience subgroup was reluctant to take part in the rankings. This caused some controversy over how representative Alexa's user base was of typical Internet behavior, especially for less-visited sites.

In 2007, Michael Arrington provided examples of Alexa rankings known to contradict data from the comScore web analytics service, including ranking YouTube ahead of Google.

Until 2007, a third-party-supplied plugin for the Firefox browser served as the only option for Firefox users after Amazon abandoned its A9 toolbar. On July 16, 2007, Alexa released an official toolbar for Firefox called Sparky.

On 16 April 2008, many users reported dramatic shifts in their Alexa rankings. Alexa confirmed this later in the day with an announcement that they had released an updated ranking system, claiming that they would now take into account more sources of data "beyond Alexa Toolbar users".

Certified statistics:

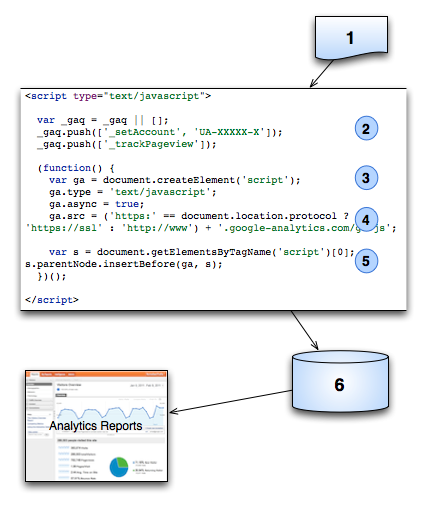

Using the Alexa Pro service, website owners can sign up for "certified statistics," which allows Alexa more access to a site's traffic data. Site owners input Javascript code on each page of their website that, if permitted by the user's security and privacy settings, runs and sends traffic data to Alexa, allowing Alexa to display—or not display, depending on the owner's preference—more accurate statistics such as total pageviews and unique pageviews.

Privacy and malware assessments:

A number of antivirus companies have assessed Alexa's toolbar. The toolbar for Internet Explorer 7 was at one point flagged as malware by Microsoft Defender.

Symantec classifies the toolbar as "trackware", while McAfee classifies it as adware, deeming it a "potentially unwanted program." McAfee Site Advisor rates the Alexa site as "green", finding "no significant problems" but warning of a "small fraction of downloads ... that some people consider adware or other potentially unwanted programs."

Though it is possible to delete a paid subscription within an Alexa account, it is not possible to delete an account that is created at Alexa through any web interface, though any user may contact the company via its support webpage.

Founded as an independent company in 1996, Alexa was acquired by Amazon in 1999. Its toolbar collects data on browsing behavior and transmits them to the Alexa website, where they are stored and analyzed, forming the basis for the company's web traffic reporting. According to its website, Alexa provides traffic data, global rankings and other information on 30 million websites, and as of 2015 its website is visited by over 6.5 million people monthly.

Alexa Internet was founded in April 1996 by American web entrepreneurs Brewster Kahle and Bruce Gilliat. The company's name was chosen in homage to the Library of Alexandria of Ptolemaic Egypt, drawing a parallel between the largest repository of knowledge in the ancient world and the potential of the Internet to become a similar store of knowledge.

Alexa initially offered a toolbar that gave Internet users suggestions on where to go next, based on the traffic patterns of its user community. The company also offered context for each site visited: to whom it was registered, how many pages it had, how many other sites pointed to it, and how frequently it was updated.

Alexa's operations grew to include archiving of web pages as they are crawled. This database served as the basis for the creation of the Internet Archive accessible through the Wayback Machine. In 1998, the company donated a copy of the archive, two terabytes in size, to the Library of Congress. Alexa continues to supply the Internet Archive with Web crawls.

In 1999, as the company moved away from its original vision of providing an "intelligent" search engine, Alexa was acquired by Amazon.com for approximately US$250 million in Amazon stock.

Alexa began a partnership with Google in early 2002, and with the web directory DMOZ in January 2003. In May 2006, Amazon replaced Google with Bing (at the time known as Windows Live Search) as a provider of search results.

In December 2006, Amazon released Alexa Image Search. Built in-house, it was the first major application built on the company's Web platform.

In December 2005, Alexa opened its extensive search index and Web-crawling facilities to third party programs through a comprehensive set of Web services and APIs. These could be used, for instance, to construct vertical search engines that could run on Alexa's own servers or elsewhere. In May 2007, Alexa changed their API to limit comparisons to three websites, reduce the size of embedded graphs in Flash, and add mandatory embedded BritePic advertisements.

In April 2007, the lawsuit Alexa v. Hornbaker was filed to stop trademark infringement by the Statsaholic service. In the lawsuit, Alexa alleged that Hornbaker was stealing traffic graphs for profit, and that the primary purpose of his site was to display graphs that were generated by Alexa's servers. Hornbaker removed the term Alexa from his service name on March 19, 2007.

On November 27, 2008, Amazon announced that Alexa Web Search was no longer accepting new customers, and that the service would be deprecated or discontinued for existing customers on January 26, 2009. Thereafter, Alexa became a purely analytics-focused company.

On March 31, 2009, Alexa launched a major website redesign. The redesigned site provided new web traffic metrics—including average page views per individual user, bounce rate, and user time on site. In the following weeks, Alexa added more features, including visitor demographics, clickstream and search traffic statistics. Alexa introduced these new features to compete with other web analytics services.

Tracking:

Toolbar: Alexa ranks sites based primarily on tracking a sample set of internet traffic—users of its toolbar for the Internet Explorer, Firefox and Google Chrome web browsers.

The Alexa Toolbar includes a popup blocker, a search box, links to Amazon.com and the Alexa homepage, and the Alexa ranking of the site that the user is visiting. It also allows the user to rate the site and view links to external, relevant sites.

In early 2005, Alexa stated that there had been 10 million downloads of the toolbar, though the company did not provide statistics about active usage. Originally, web pages were only ranked amongst users who had the Alexa Toolbar installed, and could be biased if a specific audience subgroup was reluctant to take part in the rankings. This caused some controversy over how representative Alexa's user base was of typical Internet behavior, especially for less-visited sites.

In 2007, Michael Arrington provided examples of Alexa rankings known to contradict data from the comScore web analytics service, including ranking YouTube ahead of Google.

Until 2007, a third-party-supplied plugin for the Firefox browser served as the only option for Firefox users after Amazon abandoned its A9 toolbar. On July 16, 2007, Alexa released an official toolbar for Firefox called Sparky.

On 16 April 2008, many users reported dramatic shifts in their Alexa rankings. Alexa confirmed this later in the day with an announcement that they had released an updated ranking system, claiming that they would now take into account more sources of data "beyond Alexa Toolbar users".

Certified statistics:

Using the Alexa Pro service, website owners can sign up for "certified statistics," which allows Alexa more access to a site's traffic data. Site owners input Javascript code on each page of their website that, if permitted by the user's security and privacy settings, runs and sends traffic data to Alexa, allowing Alexa to display—or not display, depending on the owner's preference—more accurate statistics such as total pageviews and unique pageviews.

Privacy and malware assessments:

A number of antivirus companies have assessed Alexa's toolbar. The toolbar for Internet Explorer 7 was at one point flagged as malware by Microsoft Defender.

Symantec classifies the toolbar as "trackware", while McAfee classifies it as adware, deeming it a "potentially unwanted program." McAfee Site Advisor rates the Alexa site as "green", finding "no significant problems" but warning of a "small fraction of downloads ... that some people consider adware or other potentially unwanted programs."

Though it is possible to delete a paid subscription within an Alexa account, it is not possible to delete an account that is created at Alexa through any web interface, though any user may contact the company via its support webpage.

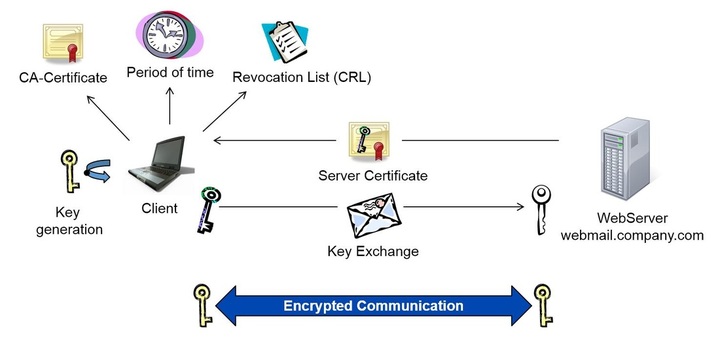

Public Key Certificate or Digital Certificate

YouTube Video: Understanding Digital Certificates

Pictured: Public Key Infrastructure: Basics about digital certificates (HTTPS, SSL)

In cryptography, a public key certificate (also known as a digital certificate or identity certificate) is an electronic document used to prove ownership of a public key.

The certificate includes information about the key, information about its owner's identity, and the digital signature of an entity that has verified the certificate's contents are correct. If the signature is valid, and the person examining the certificate trusts the signer, then they know they can use that key to communicate with its owner.

In a typical public-key infrastructure (PKI) scheme, the signer is a certificate authority (CA), usually a company which charges customers to issue certificates for them.

In a web of trust scheme, the signer is either the key's owner (a self-signed certificate) or other users ("endorsements") whom the person examining the certificate might know and trust.

Certificates are an important component of Transport Layer Security (TLS, sometimes called by its older name SSL, Secure Sockets Layer), where they prevent an attacker from impersonating a secure website or other server. They are also used in other important applications, such as email encryption and code signing.

For the Rest about this Topic, Click Here

The certificate includes information about the key, information about its owner's identity, and the digital signature of an entity that has verified the certificate's contents are correct. If the signature is valid, and the person examining the certificate trusts the signer, then they know they can use that key to communicate with its owner.

In a typical public-key infrastructure (PKI) scheme, the signer is a certificate authority (CA), usually a company which charges customers to issue certificates for them.

In a web of trust scheme, the signer is either the key's owner (a self-signed certificate) or other users ("endorsements") whom the person examining the certificate might know and trust.

Certificates are an important component of Transport Layer Security (TLS, sometimes called by its older name SSL, Secure Sockets Layer), where they prevent an attacker from impersonating a secure website or other server. They are also used in other important applications, such as email encryption and code signing.

For the Rest about this Topic, Click Here

How to Protect Your Child from Accessing Inappropriate Content on the Internet

YouTube Video: How to Block Websites with Parental Controls on Your iPhone, Android, and Computer

Pictured: CIPA Logo

The Children's Internet Protection Act (CIPA) requires that K-12 schools and libraries in the United States use Internet filters and implement other measures to protect children from harmful online content as a condition for federal funding. It was signed into law on December 21, 2000, and was found to be constitutional by the United States Supreme Court on June 23, 2003.

Background:

CIPA is one of a number of bills that the United States Congress proposed to limit children's exposure to pornography and explicit content online. Both of Congress's earlier attempts at restricting indecent Internet content, the Communications Decency Act and the Child Online Protection Act, were held to be unconstitutional by the U.S. Supreme Court on First Amendment grounds.

CIPA represented a change in strategy by Congress. While the federal government had no means of directly controlling local school and library boards, many schools and libraries took advantage of Universal Service Fund (USF) discounts derived from universal service fees paid by users in order to purchase eligible telecom services and Internet access.

In passing CIPA, Congress required libraries and K-12 schools using these E-Rate discounts on Internet access and internal connections to purchase and use a "technology protection measure" on every computer connected to the Internet.

These conditions also applied to a small subset of grants authorized through the Library Services and Technology Act (LSTA). CIPA did not provide additional funds for the purchase of the "technology protection measure".

Stipulations:

CIPA requires K-12 schools and libraries using E-Rate discounts to operate "a technology protection measure with respect to any of its computers with Internet access that protects against access through such computers to visual depictions that are obscene, child pornography, or harmful to minors".

Such a technology protection measure must be employed "during any use of such computers by minors". The law also provides that the school or library "may disable the technology protection measure concerned, during use by an adult, to enable access for bona fide research or other lawful purpose".

Schools and libraries that do not receive E-Rate discounts or only receive discounts for telecommunication services and not for Internet access or internal connections, do not have an obligation to filter under CIPA. As of 2007, approximately one-third of libraries had chosen to forego federal E-Rate and certain types of LSTA funds so they would not be required to institute filtering.

This act has several requirements for institutions to meet before they can receive government funds. Libraries and schools must "provide reasonable public notice and hold at least one public hearing or meeting to address the proposed Internet safety policy" (47 U.S.C. § 254(1)(B)) as added by CIPA sec. 1732).

The policy proposed at this meeting must address:

CIPA does not, however, require that Internet use be tracked. All Internet access, even by adults, must be filtered, though filtering requirements can be less restrictive for adults.

Content to be filtered:

The following content must be filtered or blocked:

Some of the terms mentioned in this act, such as “inappropriate matter” and what is “harmful to minors”, are explained in the law. Under the Neighborhood Act (47 U.S.C. § 254(l)(2) as added by CIPA sec. 1732), the definition of “inappropriate matter” is locally determined:

Local Determination of Content – a determination regarding what matter is inappropriate for minors shall be made by the school board, local educational agency, library, or other United States authority responsible for making the determination.

No agency or instrumentality of the Government may – (a) establish criteria for making such determination; (b) review agency determination made by the certifying school, school board, local educational agency, library, or other authority; or (c) consider the criteria employed by the certifying school, school board, educational agency, library, or other authority in the administration of subsection 47 U.S.C. § 254(h)(1)(B).

The CIPA defines “harmful to minors” as:

Any picture, image, graphic image file, or other visual depiction that –

(i) taken as a whole and with respect to minors, appeals to a prurient interest in nudity, sex, or excretion;

(ii) depicts, describes, or represents, in a patently offensive way with respect to what is suitable for minors, an actual or simulated sexual act or sexual contact, actual or simulated normal or perverted sexual acts, or a lewd exhibition of the genitals;

and (iii) taken as a whole, lacks serious literary, artistic, political, or scientific value as to minors” (Secs. 1703(b)(2), 20 U.S.C. sec 3601(a)(5)(F) as added by CIPA sec 1711, 20 U.S.C. sec 9134(b)(f )(7)(B) as added by CIPA sec 1712(a), and 147 U.S.C. sec. 254(h)(c)(G) as added by CIPA sec. 1721(a)).

As mentioned above, there is an exception for Bona Fide Research. An institution can disable filters for adults in the pursuit of bona fide research or another type of lawful purpose. However, the law provides no definition for “bona fide research”.

However, in a later ruling the U.S. Supreme Court said that libraries would be required to adopt an Internet use policy providing for unblocking the Internet for adult users, without a requirement that the library inquire into the user's reasons for disabling the filter.

Justice Rehnquist stated "[a]ssuming that such erroneous blocking presents constitutional difficulties, any such concerns are dispelled by the ease with which patrons may have the filtering software disabled. When a patron encounters a blocked site, he need only ask a librarian to unblock it or (at least in the case of adults) disable the filter". This effectively puts the decision of what constitutes "bona fide research" in the hands of the adult asking to have the filter disabled.

The U.S. Federal Communications Commission (FCC) subsequently instructed libraries complying with CIPA to implement a procedure for unblocking the filter upon request by an adult.

Other filtered content includes sites that contain "inappropriate language", "blogs", or are deemed "tasteless".

This can be somewhat limiting in research for some students, as a resource they wish to use may be disallowed by the filter's vague explanations of why a page is banned. For example, if someone tries to access the page "March 4", and, ironically "Internet Censorship" on Wikipedia, the filter will immediately turn them away, claiming the page contains "Extreme language".

Suits challenging CIPA’s Constitutionality:

On January 17, 2001, the American Library Association (ALA) voted to challenge CIPA, on the grounds that the law required libraries to unconstitutionally block access to constitutionally protected information on the Internet. It charged first that, because CIPA's enforcement mechanism involved removing federal funds intended to assist disadvantaged facilities, "CIPA runs counter to these federal efforts to close the digital divide for all Americans". Second, it argued that "no filtering software successfully differentiates constitutionally protected speech from illegal speech on the Internet".

Working with the American Civil Liberties Union (ACLU), the ALA successfully challenged the law before a three-judge panel of the U.S. District Court for the Eastern District of Pennsylvania.

In a 200-page decision, the judges wrote that "in view of the severe limitations of filtering technology and the existence of these less restrictive alternatives [including making filtering software optional or supervising users directly], we conclude that it is not possible for a public library to comply with CIPA without blocking a very substantial amount of constitutionally protected speech, in violation of the First Amendment". 201 F.Supp.2d 401, 490 (2002).

Upon appeal to the U.S. Supreme Court, however, the law was upheld as constitutional as a condition imposed on institutions in exchange for government funding. In upholding the law, the Supreme Court, adopting the interpretation urged by the U.S. Solicitor General at oral argument, made it clear that the constitutionality of CIPA would be upheld only "if, as the Government represents, a librarian will unblock filtered material or disable the Internet software filter without significant delay on an adult user's request".

In the ruling Chief Justice William Rehnquist, joined by Justice Sandra Day O'Connor, Justice Antonin Scalia, and Justice Clarence Thomas, concluded two points. First, “Because public libraries' use of Internet filtering software does not violate their patrons' First Amendment rights, CIPA does not induce libraries to violate the Constitution, and is a valid exercise of Congress' spending power”.

The argument goes that, because of the immense amount of information available online and how quickly it changes, libraries cannot separate items individually to exclude, and blocking entire websites can often lead to an exclusion of valuable information. Therefore, it is reasonable for public libraries to restrict access to certain categories of content.

Secondly, “CIPA does not impose an unconstitutional condition on libraries that receive E-Rate and LSTA subsidies by requiring them, as a condition on that receipt, to surrender their First Amendment right to provide the public with access to constitutionally protected speech”. The argument here is that, the government can offer public funds to help institutions fulfill their roles, as in the case of libraries providing access to information.

The Justices cited Rust v. Sullivan (1991) as precedent to show how the Court has approved using government funds with certain limitations to facilitate a program. Furthermore, since public libraries traditionally do not include pornographic material in their book collections, the court can reasonably uphold a law that imposes a similar limitation for online texts.

As noted above, the text of the law authorized institutions to disable the filter on request "for bona fide research or other lawful purpose", implying that the adult would be expected to provide justification with his request. But under the interpretation urged by the Solicitor General and adopted by the Supreme Court, libraries would be required to adopt an Internet use policy providing for unblocking the Internet for adult users, without a requirement that the library inquire into the user's reasons for disabling the filter.

Legislation after CIPA:

An attempt to expand CIPA to include "social networking" web sites was considered by the U.S. Congress in 2006. See Deleting Online Predators Act. More attempts have been made recently by the International Society for Technology in Education (ISTE) and the Consortium for School Networking (CoSN) urging Congress to update CIPA terms in hopes of regulating, not abolishing, students' access to social networking and chat rooms.

Neither ISTE nor CoSN wish to ban these online communication outlets entirely however, as they believe the "Internet contains valuable content, collaboration and communication opportunities that can and do materially contribute to a student's academic growth and preparation for the workforce".

See Also:

Background:

CIPA is one of a number of bills that the United States Congress proposed to limit children's exposure to pornography and explicit content online. Both of Congress's earlier attempts at restricting indecent Internet content, the Communications Decency Act and the Child Online Protection Act, were held to be unconstitutional by the U.S. Supreme Court on First Amendment grounds.

CIPA represented a change in strategy by Congress. While the federal government had no means of directly controlling local school and library boards, many schools and libraries took advantage of Universal Service Fund (USF) discounts derived from universal service fees paid by users in order to purchase eligible telecom services and Internet access.

In passing CIPA, Congress required libraries and K-12 schools using these E-Rate discounts on Internet access and internal connections to purchase and use a "technology protection measure" on every computer connected to the Internet.

These conditions also applied to a small subset of grants authorized through the Library Services and Technology Act (LSTA). CIPA did not provide additional funds for the purchase of the "technology protection measure".

Stipulations:

CIPA requires K-12 schools and libraries using E-Rate discounts to operate "a technology protection measure with respect to any of its computers with Internet access that protects against access through such computers to visual depictions that are obscene, child pornography, or harmful to minors".

Such a technology protection measure must be employed "during any use of such computers by minors". The law also provides that the school or library "may disable the technology protection measure concerned, during use by an adult, to enable access for bona fide research or other lawful purpose".