Copyright © 2015 Bert N. Langford (Images may be subject to copyright. Please send feedback)

Welcome to Our Generation USA!

Innovations (& Their Innovators)

found in smart electronics, communications, military, transportation, science, engineering, and other fields and disciplines.

Innovations and Inventions

YouTube Video: Top 10 Inventions of the 20th Century by WatchMojo

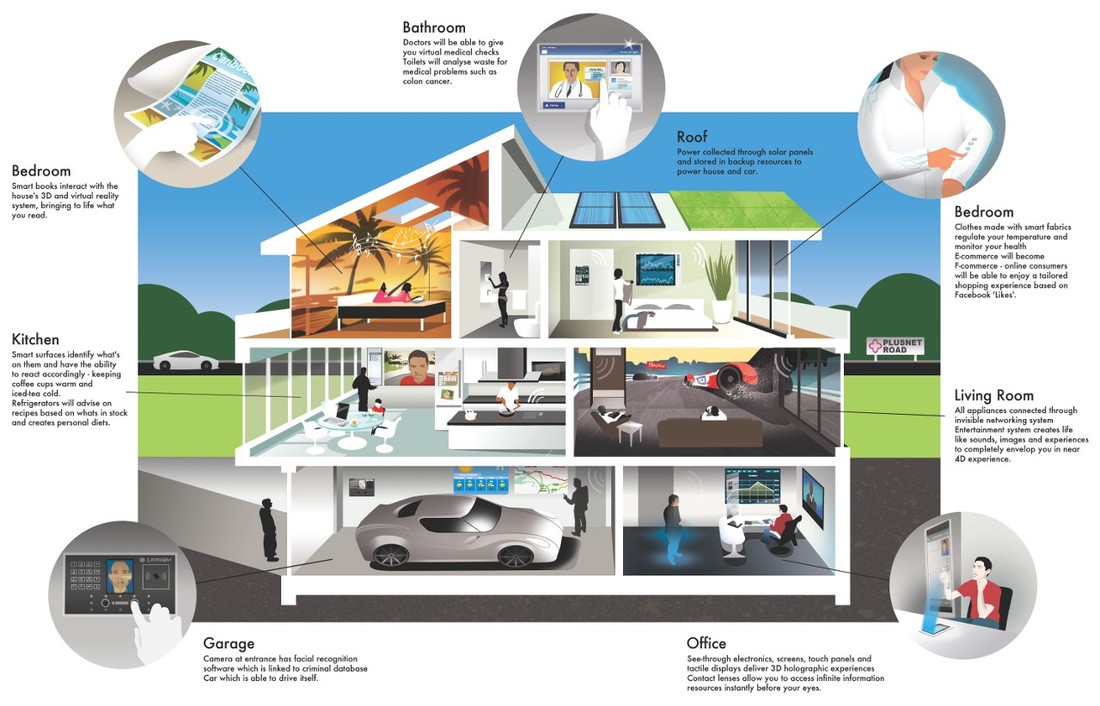

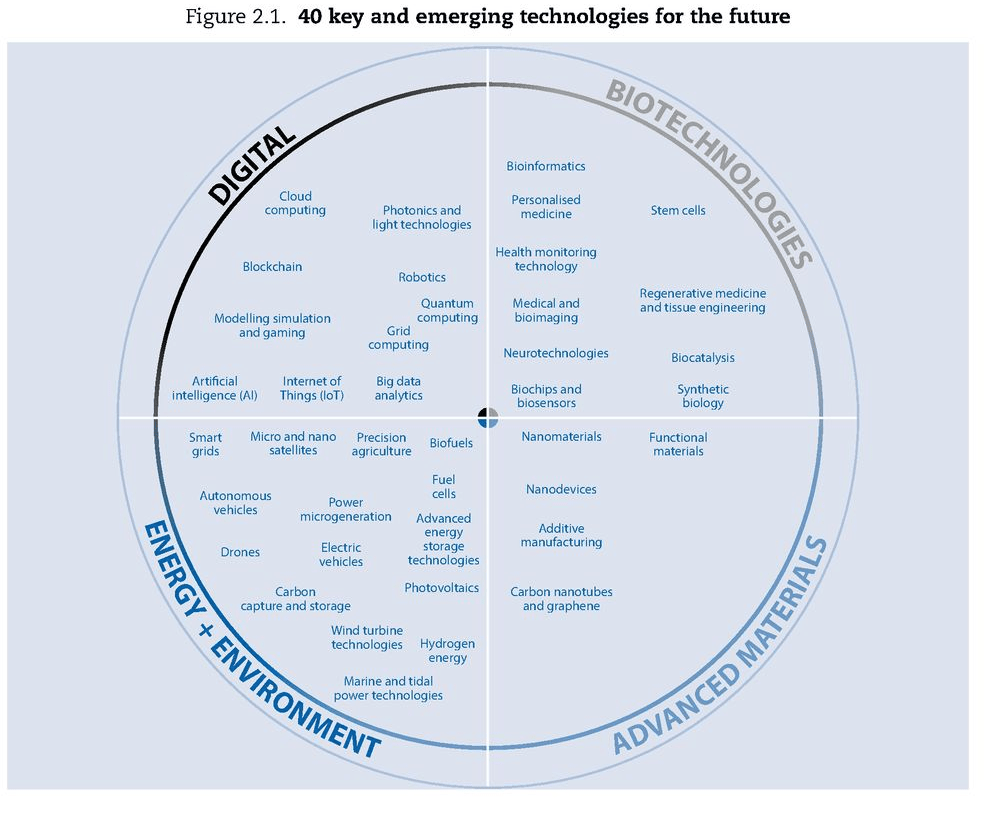

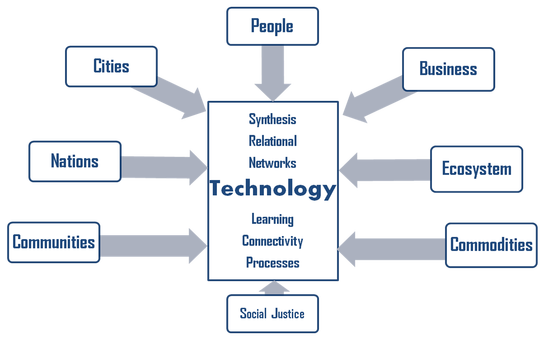

Pictured below: Innovations in Technology that Humanity Will Reach by the Year 2030

Innovation can be defined simply as a "new idea, device or method". However, innovation is often also viewed as the application of better solutions that meet new requirements, unarticulated needs, or existing market needs.

Such innovation takes place through the provision of more-effective products, processes, services, technologies, or business models that are made available to markets, governments and society. The term "innovation" can be defined as something original and more effective and, as a consequence, new, that "breaks into" the market or society.

Innovation is related to, but not the same as, invention (see next topic below), as innovation is more apt to involve the practical implementation of an invention (i.e. new/improved ability) to make a meaningful impact in the market or society, and not all innovations require an invention. Innovation often manifests itself via the engineering process, when the problem being solved is of a technical or scientific nature. The opposite of innovation is exnovation.

While a novel device is often described as an innovation, in economics, management science, and other fields of practice and analysis, innovation is generally considered to be the result of a process that brings together various novel ideas in such a way that they affect society.

In industrial economics, innovations are created and found empirically from services to meet growing consumer demand.

A 2014 survey of literature on innovation found over 40 definitions. In an industrial survey of how the software industry defined innovation, the definition given by Crossan and Apaydin, which builds on the Organisation for Economic Co-operation and Development (OECD) manual's definition, was considered the most complete:

Innovation is:

- production or adoption, assimilation, and exploitation of a value-added novelty in economic and social spheres;

- renewal and enlargement of products, services, and markets;

- development of new methods of production;

- and establishment of new management systems. It is both a process and an outcome.

Kanter holds that innovation includes original invention and creative use, and defines innovation as the generation, admission and realization of new ideas, products, services and processes.

Two main dimensions of innovation are degree of novelty (patent) (i.e. whether an innovation is new to the firm, new to the market, new to the industry, or new to the world) and type of innovation (i.e. whether it is process or product-service system innovation). In recent organizational scholarship, researchers of workplaces have also distinguished innovation from creativity by providing an updated definition of these two related but distinct constructs:

Workplace creativity concerns the cognitive and behavioral processes applied when attempting to generate novel ideas. Workplace innovation concerns the processes applied when attempting to implement new ideas.

Specifically, innovation involves some combination of problem/opportunity identification, the introduction, adoption or modification of new ideas germane to organizational needs, the promotion of these ideas, and the practical implementation of these ideas.

Click on any of the following blue hyperlinks for more about Innovation:

- Inter-disciplinary views

- Diffusion

- Measures

- Government policies

- See also:

- Communities of innovation

- Creative competitive intelligence

- Creative problem solving

- Creativity

- Diffusion of innovations

- Deployment

- Disruptive innovation

- Diffusion (anthropology)

- Ecoinnovation

- Global Innovation Index (Boston Consulting Group)

- Global Innovation Index (INSEAD)

- Greatness

- Hype cycle

- Individual capital

- Induced innovation

- Information revolution

- Ingenuity

- Innovation leadership

- Innovation management

- Innovation system

- Knowledge economy

- List of countries by research and development spending

- List of emerging technologies

- List of Russian inventors

- Multiple discovery

- Obsolescence

- Open Innovation

- Open Innovations (Forum and Technology Show)

- Outcome-Driven Innovation

- Paradigm shift

- Participatory design

- Pro-innovation bias

- Public domain

- Research

- State of art

- Sustainable Development Goals (Agenda 9)

- Technology Life Cycle

- Technological innovation system

- Theories of technology

- Timeline of historic inventions

- Toolkits for User Innovation

- UNDP Innovation Facility

- Value network

- Virtual product development

An invention is a unique or novel device, method, composition or process. The invention process is a process within an overall engineering and product development process. It may be an improvement upon a machine or product or a new process for creating an object or a result.

An invention that achieves a completely unique function or result may be a radical breakthrough. Such works are novel and not obvious to others skilled in the same field. An inventor may be taking a big step toward either success or failure.

Some inventions can be patented. A patent legally protects the intellectual property rights of the inventor and legally recognizes that a claimed invention is actually an invention. The rules and requirements for patenting an invention vary from country to country and the process of obtaining a patent is often expensive.

Another meaning of invention is cultural invention, which is an innovative set of useful social behaviors adopted by people and passed on to others. The Institute for Social Inventions collected many such ideas in magazines and books. Invention is also an important component of artistic and design creativity.

Inventions often extend the boundaries of human knowledge, experience or capability.

Inventions are of three kinds:

- scientific-technological (including medicine),

- sociopolitical (including economics and law),

- and humanistic, or cultural.

Scientific-technological inventions include:

- railroads,

- aviation,

- vaccination,

- hybridization,

- antibiotics,

- astronautics,

- holography,

- the atomic bomb,

- computing,

- the Internet,

- and the smartphone.

Sociopolitical inventions comprise new laws, institutions, and procedures that change modes of social behavior and establish new forms of human interaction and organization. Examples include:

- the British Parliament,

- the US Constitution,

- the Manchester (UK) General Union of Trades,

- the Boy Scouts,

- the Red Cross,

- the Olympic Games,

- the United Nations,

- the European Union,

- and the Universal Declaration of Human Rights,

- as well as movements such as:

- socialism,

- Zionism,

- suffragism,

- feminism,

- and animal-rights veganism.

Humanistic inventions encompass culture in its entirety and are as transformative and important as any in the sciences, although people tend to take them for granted. In the domain of linguistics, for example, many alphabets have been inventions, as are all neologisms (Shakespeare invented about 1,700 words).

Literary inventions include:

- the epic,

- tragedy,

- comedy,

- the novel,

- the sonnet,

- the Renaissance,

- neoclassicism,

- Romanticism,

- Symbolism,

- Aestheticism,

- Socialist Realism,

- Surrealism,

- postmodernism,

- and (according to Freud) psychoanalysis.

Among the inventions of artists and musicians are:

- oil painting,

- printmaking,

- photography,

- cinema,

- musical tonality,

- atonality,

- jazz,

- rock,

- opera,

- and the symphony orchestra.

Philosophers have invented:

- logic (several times),

- dialectics,

- idealism,

- materialism,

- utopia,

- anarchism,

- semiotics,

- phenomenology,

- behaviorism,

- positivism,

- pragmatism,

- and deconstruction.

Religious thinkers are responsible for such inventions as:

- monotheism,

- pantheism,

- Methodism,

- Mormonism,

- iconoclasm,

- puritanism,

- deism,

- secularism,

- ecumenism,

- and Baha’i.

Some of these disciplines, genres, and trends may seem to have existed eternally or to have emerged spontaneously of their own accord, but most of them have had inventors.

For more about Inventions, click on any of the following blue hyperlinks:

- Process of invention

- Invention vs. innovation

- Purposes of invention

- Invention as defined by patent law

- Invention in the arts

- See also:

- Bayh-Dole Act

- Chindōgu

- Creativity techniques

- Directive on the legal protection of biotechnological inventions

- Discovery (observation)

- Edisonian approach

- Heroic theory of invention and scientific development

- Independent inventor

- Ingenuity

- INPEX (invention show)

- International Innovation Index

- Invention promotion firm

- Inventors' Day

- Kranzberg's laws of technology

- Lemelson-MIT Prize

- Lists of inventions or discoveries

- List of inventions named after people

- List of inventors

- List of prolific inventors

- Multiple discovery

- National Inventors Hall of Fame

- Patent model

- Proof of concept

- Proposed directive on the patentability of computer-implemented inventions (rejected)

- Scientific priority

- Technological revolution

- The Illustrated Science and Invention Encyclopedia

- Timeline of historic inventions

- Science and invention in Birmingham - The first cotton spinning mill to plastics and steam power.

- Invention Ideas

- List of PCT (Patent Cooperation Treaty) Notable Inventions at WIPO

- Hottelet, Ulrich (October 2007). "Invented in Germany - made in Asia". The Asia Pacific Times. Archived from the original on 2012-05-01

Smart Home Technology

YouTube Video: Example of Smart Home Technology in Action

Pictured: Illustration courtesy of http://www.futureforall.org/home/homeofthefuture.htm

Home automation or smart home is the residential extension of building automation and involves the control and automation of lighting, heating (such as smart thermostats), ventilation, air conditioning (HVAC), and security, as well as home appliances such as washer/dryers, ovens or refrigerators/freezers that use WiFi for remote monitoring.

Modern systems generally consist of switches and sensors connected to a central hub, sometimes called a "gateway", from which the system is controlled. The user interface is accessed through a wall-mounted terminal, mobile phone software, a tablet computer, or a web interface, often but not always via internet cloud services.
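The hub arrangement described above can be pictured with a minimal sketch. It is only an illustration under stated assumptions: the `Hub` class, its method names, and the device names `hall_light` and `hall_motion` are hypothetical and do not correspond to any vendor's product or API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Hub:
    """Hypothetical central hub ("gateway") that routes sensor events to switches."""

    # Switch name -> current state (True means on).
    switches: Dict[str, bool] = field(default_factory=dict)
    # Sensor name -> actions to run when that sensor reports an event.
    rules: Dict[str, List[Callable[["Hub"], None]]] = field(default_factory=dict)

    def add_switch(self, name: str) -> None:
        """Register a switch; it starts in the off state."""
        self.switches[name] = False

    def on_event(self, sensor: str, action: Callable[["Hub"], None]) -> None:
        """Attach an action to a sensor."""
        self.rules.setdefault(sensor, []).append(action)

    def set_switch(self, name: str, state: bool) -> None:
        """Change a switch state, as a user interface or a rule would."""
        if name not in self.switches:
            raise KeyError(f"unknown switch: {name}")
        self.switches[name] = state

    def report(self, sensor: str) -> None:
        """Called when a sensor fires; runs every action attached to it."""
        for action in self.rules.get(sensor, []):
            action(self)


hub = Hub()
hub.add_switch("hall_light")
hub.on_event("hall_motion", lambda h: h.set_switch("hall_light", True))
hub.report("hall_motion")
print(hub.switches)
```

In this sketch a wall terminal, phone app, or web page would all call the same `set_switch` method, which is one way to read the statement that the system is controlled from the hub.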

While there are many competing vendors, there are very few industry standards accepted worldwide, and the smart home space is heavily fragmented.

Popular communications protocols for products include the following:

- X10,

- Ethernet,

- RS-485,

- 6LoWPAN,

- Bluetooth LE (BLE),

- ZigBee,

- and Z-Wave,

- or other proprietary protocols all of which are incompatible with each other.

Manufacturers often prevent independent implementations by withholding documentation and by litigation.

The home automation market was worth US$5.77 billion in 2015 and was predicted to reach a market value of over US$10 billion by 2020.
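As a rough worked example, the two market figures above imply a compound annual growth rate that can be computed directly. The function name `implied_cagr` is an illustrative choice, and because the forecast is stated as "over" US$10 billion, the result should be read as a lower bound rather than a precise figure.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value in `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures from the text: US$5.77 billion in 2015, over US$10 billion by 2020.
rate = implied_cagr(5.77, 10.0, 2020 - 2015)
print(f"Implied annual growth of at least {rate:.1%}")
```

Under these assumptions the implied growth works out to between 11 and 12 percent per year.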

According to Li et al. (2016), there are three generations of home automation:

- First generation: wireless technology with proxy server, e.g. Zigbee automation;

- Second generation: artificial intelligence controls electrical devices, e.g. Amazon Echo;

- Third generation: robot buddy "who" interacts with humans, e.g. Robot Rovio, Roomba.

Applications and technologies:

- Heating, ventilation and air conditioning (HVAC): it is possible to have remote control of all home energy monitors over the internet incorporating a simple and friendly user interface.

- Lighting control system

- Appliance control and integration with the smart grid and a smart meter, taking advantage, for instance, of high solar panel output in the middle of the day to run washing machines.

- Security: a household security system integrated with a home automation system can provide additional services such as remote surveillance of security cameras over the Internet, or central locking of all perimeter doors and windows.

- Leak detection, smoke and CO detectors

- Indoor positioning systems

- Home automation for the elderly and disabled

Implementations:

In a review of home automation devices, Consumer Reports found two main concerns for consumers:

- A WiFi network connected to the internet can be vulnerable to hacking.

- Technology is still in its infancy, and consumers could invest in a system that becomes abandonware. In 2014, Google bought the company selling the Revolv Hub home automation system, integrated it with Nest and in 2016 shut down the servers Revolv Hub depended on, rendering the hardware useless.

Microsoft Research found in 2011 that home automation could involve a high cost of ownership, inflexibility of interconnected devices, and poor manageability.

Historically, systems have been sold as complete packages in which the consumer relies on one vendor for the entire system, including the hardware, the communications protocol, the central hub, and the user interface. However, there are now open source software systems that can be used with proprietary hardware.

Protocols:

There are a wide variety of technology platforms, or protocols, on which a smart home can be built. Each one is, essentially, its own language. Each language speaks to the various connected devices and instructs them to perform a function.
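The idea that each protocol is its own language can be sketched as a set of adapters that turn the same command into different frames. The two encodings below are made up for illustration; they are not the real wire formats of X10, ZigBee, Z-Wave, or any other protocol, and the class names are hypothetical.

```python
from abc import ABC, abstractmethod


class ProtocolAdapter(ABC):
    """Translates a generic command into one protocol's own 'language'."""

    @abstractmethod
    def encode(self, device_id: int, command: str) -> bytes:
        ...


class TextAdapter(ProtocolAdapter):
    """Made-up text protocol: 'DEV <id> <COMMAND>' followed by a newline."""

    def encode(self, device_id: int, command: str) -> bytes:
        return f"DEV {device_id} {command.upper()}\n".encode("ascii")


class BinaryAdapter(ProtocolAdapter):
    """Made-up binary protocol: one byte for the device id, one byte for the opcode."""

    OPCODES = {"off": 0x00, "on": 0x01}

    def encode(self, device_id: int, command: str) -> bytes:
        return bytes([device_id, self.OPCODES[command]])


def send(adapter: ProtocolAdapter, device_id: int, command: str) -> bytes:
    """Return the frame that would be put on the wire (no real I/O in this sketch)."""
    return adapter.encode(device_id, command)


# The same instruction, expressed in two incompatible "languages".
text_frame = send(TextAdapter(), 7, "on")
binary_frame = send(BinaryAdapter(), 7, "on")
print(text_frame, binary_frame)
```

A device that understands only one of these frames cannot act on the other, which is a small-scale picture of why products built on different protocols do not interoperate without a translating hub.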

The automation protocol transport has involved direct-wire connectivity, powerline (UPB), wireless hybrid, and wireless.

Most of the protocols below are not open. All have an API.

Click here for a chart listing available protocols.

Criticism and controversies:

Home automation suffers from platform fragmentation and a lack of technical standards: the variety of home automation devices, in both hardware and the software running on them, makes it hard to develop applications that work consistently across inconsistent technology ecosystems.

Customers may be hesitant to bet their IoT future on proprietary software or hardware devices that use proprietary protocols that may fade or become difficult to customize and interconnect.

Home automation devices' amorphous computing nature is also a problem for security, since patches to bugs found in the core operating system often do not reach users of older and lower-price devices.

One set of researchers says that the failure of vendors to support older devices with patches and updates leaves more than 87% of active devices vulnerable.

See Also:

- Home automation for the elderly and disabled

- Internet of Things

- List of home automation software and hardware

- List of home automation topics

- List of network buses

- Smart device

- Web of Things

Sergey Brin (co-founder of Google)

YouTube Video: Sergey Brin talks about Google Glass at TED 2013

Sergey Mikhaylovich Brin (born August 21, 1973) is a Soviet-born American computer scientist, internet entrepreneur, and philanthropist. Together with Larry Page, he co-founded Google.

Brin is the President of Google's parent company Alphabet Inc. In October 2016 (the most recent period for which figures are available), Brin was the 12th richest person in the world, with an estimated net worth of US$39.2 billion.

Brin immigrated to the United States with his family from the Soviet Union at the age of 6. He earned his bachelor's degree at the University of Maryland, following in his father's and grandfather's footsteps by studying mathematics, as well as computer science.

After graduation, he moved to Stanford University to acquire a PhD in computer science. There he met Page, with whom he later became friends. They crammed their dormitory room with inexpensive computers and applied Brin's data mining system to build a web search engine. The program became popular at Stanford, and they suspended their PhD studies to start up Google in a rented garage.

The Economist referred to Brin as an "Enlightenment Man", and as someone who believes that "knowledge is always good, and certainly always better than ignorance", a philosophy that is summed up by Google's mission statement, "Organize the world's information and make it universally accessible and useful," and unofficial, sometimes controversial motto, "Don't be evil".

Click on any of the following blue hyperlinks to learn more about Sergey Brin:

- Early life and education

- Search engine development

- Other interests

- Censorship of Google in China

- Personal life

- Awards and accolades

- Filmography

Larry Page (co-founder of Google)

YouTube Video: Where's Google going next? | Larry Page

Lawrence "Larry" Page (born March 26, 1973) is an American computer scientist and an Internet entrepreneur who co-founded Google Inc. with Sergey Brin in 1998.

Page is the chief executive officer (CEO) of Google's parent company, Alphabet Inc. After stepping aside as Google CEO in August 2001 in favor of Eric Schmidt, he re-assumed the role in April 2011. He announced his intention to step aside a second time in July 2015 to become CEO of Alphabet, under which Google's assets would be reorganized.

Under Page, Alphabet is seeking to deliver major advancements in a variety of industries.

As of November 2016, he was the 12th richest person in the world, with an estimated net worth of US$36.9 billion.

Page is the inventor of PageRank, Google's best-known search ranking algorithm. Page received the Marconi Prize in 2004.

For more about Larry Page, click on any of the following blue hyperlinks:

- Early life and education

- PhD studies and research

- Alphabet

- Other interests

- Personal life

- Awards and accolades

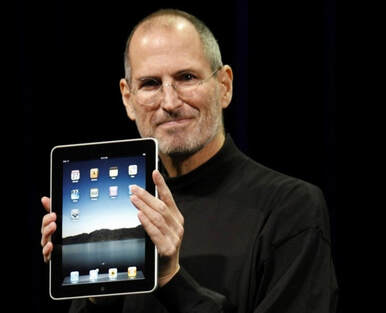

Steve Jobs

YouTube Video: Steve Jobs - Courage*

* -- This is a clip from the D8 Conference, recorded in 2010. Steve Jobs talks about the courage it takes to remove certain pieces of technology from Apple products. The discussion followed the introduction of the iPad without support for Flash, just as the iPhone had lacked it in 2007. The clip adds some perspective to the debate over Apple's new AirPods and the decision to remove the traditional analog audio connector from the iPhone 7. This kind of decision is not new to Apple.

Steven Paul "Steve" Jobs (February 24, 1955 – October 5, 2011) was an American businessman, inventor, and industrial designer. He was the co-founder, chairman, and chief executive officer (CEO) of Apple Inc.; CEO and majority shareholder of Pixar; a member of The Walt Disney Company's board of directors following its acquisition of Pixar; and founder, chairman, and CEO of NeXT. Jobs is widely recognized as a pioneer of the microcomputer revolution of the 1970s and 1980s, along with Apple co-founder Steve Wozniak.

Jobs was adopted at birth in San Francisco, and raised in the San Francisco Bay Area during the 1960s. Jobs briefly attended Reed College in 1972 before dropping out. He then decided to travel through India in 1974 seeking enlightenment and studying Zen Buddhism.

Jobs's declassified FBI report says an acquaintance knew that Jobs used illegal drugs in college including marijuana and LSD. Jobs told a reporter once that taking LSD was "one of the two or three most important things" he did in his life.

Jobs co-founded Apple in 1976 to sell Wozniak's Apple I personal computer. The duo gained fame and wealth a year later for the Apple II, one of the first highly successful mass-produced personal computers. In 1979, after a tour of PARC, Jobs saw the commercial potential of the Xerox Alto, which was mouse-driven and had a graphical user interface (GUI).

This led to development of the unsuccessful Apple Lisa in 1983, followed by the breakthrough Macintosh in 1984.

In addition to being the first mass-produced computer with a GUI, the Macintosh instigated the sudden rise of the desktop publishing industry in 1985 with the addition of the Apple LaserWriter, the first laser printer to feature vector graphics. Following a long power struggle, Jobs was forced out of Apple in 1985.

After leaving Apple, Jobs took a few of its members with him to found NeXT, a computer platform development company specializing in state-of-the-art computers for higher-education and business markets.

In addition, Jobs helped to initiate the development of the visual effects industry when he funded the spinout of the computer graphics division of George Lucas's Lucasfilm in 1986. The new company, Pixar, would eventually produce the first fully computer-animated film, Toy Story—an event made possible in part because of Jobs's financial support.

In 1997, Apple acquired NeXT, allowing Jobs to become CEO once again and to revive a company that was on the verge of bankruptcy. Beginning in 1997 with the "Think different" advertising campaign, Jobs worked closely with designer Jonathan Ive to develop a line of products that would have larger cultural ramifications:

- the iMac,

- iTunes and iTunes Store,

- Apple Store,

- iPod,

- iPhone,

- App Store,

- and the iPad (see picture above)

Mac OS was also revamped into OS X (renamed “macOS” in 2016), based on NeXT's NeXTSTEP platform.

Jobs was diagnosed with a pancreatic neuroendocrine tumor in 2003 and died of respiratory arrest related to the tumor on October 5, 2011.

Click on any of the following blue hyperlinks for more about Steve Jobs:

- Background

- Childhood

- Homestead High

- Reed College

- 1972–1985

- 1985–1997

- 1997–2011

- Portrayals and coverage in books, film, and theater

- Innovations and designs

- Honors and awards

- See also:

Laser Technology and its Applications

YouTube Video: Laser Assisted Cataract Surgery

YouTube Video of an Amazing Laser Show

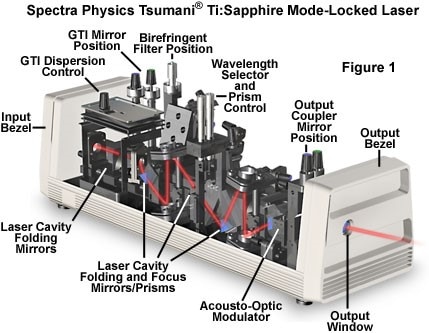

Pictured: The lasers commonly employed in laser scanning confocal microscopy are high-intensity monochromatic light sources, which are useful as tools for a variety of techniques including optical trapping, lifetime imaging studies, photobleaching recovery, and total internal reflection fluorescence. In addition, lasers are also the most common light source for scanning confocal fluorescence microscopy, and have been utilized, although less frequently, in conventional widefield fluorescence investigations

A laser is a device that emits light through a process of optical amplification based on the stimulated emission of electromagnetic radiation. The term "laser" originated as an acronym for "light amplification by stimulated emission of radiation".

The first laser was built in 1960 by Theodore H. Maiman at Hughes Research Laboratories, based on theoretical work by Charles Hard Townes and Arthur Leonard Schawlow.

A laser differs from other sources of light in that it emits light coherently. Spatial coherence allows a laser to be focused to a tight spot, enabling applications such as laser cutting and lithography.

Spatial coherence also allows a laser beam to stay narrow over great distances (collimation), enabling applications such as laser pointers. Lasers can also have high temporal coherence, which allows them to emit light with a very narrow spectrum, i.e., they can emit a single color of light. Temporal coherence can be used to produce pulses of light as short as a femtosecond.

Among their many applications, lasers are used in:

- optical disk drives;

- laser printers;

- barcode scanners;

- DNA sequencing instruments;

- fiber-optic and free-space optical communication;

- laser surgery and skin treatments;

- cutting and welding materials;

- military and law enforcement devices for marking targets and measuring range and speed;

- and laser lighting displays in entertainment.

Click here for more about Laser Technology

Click on any of the following blue hyperlinks for additional Laser Applications:

- Scientific

- Military

- Medical

- Industrial and commercial

- Entertainment and recreation

- Surveying and ranging

- Bird deterrent

- Images

- See also:

Technologies for Clothing and Textiles, including their Timelines

YouTube Video: 100 Years of Fashion: Women

YouTube Video: 100 Years of Fashion: Men

Pictured Below:

TOP: Increases in capital investment from 2009-2014: 2016 State Of The U.S. Textile Industry

BOTTOM: Images of how female fashion has changed from (LEFT) 1950; to (RIGHT) Today: (L-R) Vanessa Hudgens, Miranda Kerr and Ashley Tisdale.

Click here for the Timeline of clothing and textiles technology

Clothing technology involves the manufacturing, materials, and design innovations that have been developed and used.

The timeline of clothing and textiles technology includes major changes in the manufacture and distribution of clothing.

From the ancient world to modernity, technology has dramatically influenced clothing and fashion. Industrialization brought changes in the manufacture of goods. In many nations, homemade goods crafted by hand have largely been replaced by factory-produced goods made on assembly lines and purchased in a consumer culture.

Innovations include man-made materials such as polyester, nylon, and vinyl as well as features like zippers and velcro. The advent of advanced electronics has resulted in wearable technology being developed and popularized since the 1980s.

Design is an important part of the industry beyond utilitarian concerns and the fashion and glamour industries have developed in relation to clothing marketing and retail.

Environmental and human rights issues have also become considerations for clothing and spurred the promotion and use of some natural materials such as bamboo that are considered environmentally friendly.

Click on any of the following blue hyperlinks for more information about clothing technology:

- Production

- Sports

- Education

- See also

Textile manufacturing is a major industry. It is based on the conversion of fibre into yarn and of yarn into fabric. The fabric is then dyed or printed and fabricated into clothes.

Different types of fibre are used to produce yarn, and cotton remains the most important natural fibre. Many variable processes are available at the spinning and fabric-forming stages; coupled with the complexities of the finishing and coloration processes, they yield a wide range of products. There remains a large industry that uses hand techniques to achieve the same results.

Click here for more about Textile Manufacturing.

Advancements in Technologies for Agriculture and Food, Including their Timeline

YouTube Video: Latest Technology Machines, New Modern Agriculture Machines

Pictured: LEFT: Six Ways Drones are Revolutionizing Agriculture; RIGHT: A corn farmer sprays weed killer across his corn field in Auburn, Ill.

Click here for a Timeline of Agriculture Technology Advancements.

By the United States Department of Agriculture (USDA), National Institute of Food and Agriculture:

Modern farms and agricultural operations work far differently than those a few decades ago, primarily because of advancements in technology, including sensors, devices, machines, and information technology.

Today’s agriculture routinely uses sophisticated technologies such as robots, temperature and moisture sensors, aerial images, and GPS technology. These advanced devices, together with precision agriculture and robotic systems, allow businesses to be more profitable, more efficient, safer, and more environmentally friendly.

IMPORTANCE OF AGRICULTURAL TECHNOLOGY:

Farmers no longer have to apply water, fertilizers, and pesticides uniformly across entire fields. Instead, they can use the minimum quantities required and target very specific areas, or even treat individual plants differently. Benefits include:

- Higher crop productivity

- Decreased use of water, fertilizer, and pesticides, which in turn keeps food prices down

- Reduced impact on natural ecosystems

- Less runoff of chemicals into rivers and groundwater

- Increased worker safety
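The idea of treating specific areas of a field differently, rather than applying inputs uniformly, can be illustrated with a small sketch. Everything in it is an assumption made for illustration: the zone names, the moisture readings, the target moisture, and the conversion constant are hypothetical and do not come from the USDA text. It compares the water used when each zone receives only what its own sensor reading calls for against the water used when the whole field is treated like its driest zone.

```python
from dataclasses import dataclass

# Hypothetical figures, chosen only to make the arithmetic easy to follow.
TARGET_MOISTURE_PCT = 30          # desired soil moisture, in percent
LITRES_PER_HA_PER_POINT = 10_000  # water per hectare to raise moisture by one point


@dataclass(frozen=True)
class Zone:
    name: str
    area_ha: float
    moisture_pct: int  # reading from a (hypothetical) soil-moisture sensor


def rate_l_per_ha(zone: Zone) -> int:
    """Litres per hectare this zone needs to reach the target moisture."""
    deficit = max(0, TARGET_MOISTURE_PCT - zone.moisture_pct)
    return deficit * LITRES_PER_HA_PER_POINT


def variable_rate_total(zones) -> float:
    """Total litres when every zone gets only its own required rate."""
    return sum(rate_l_per_ha(z) * z.area_ha for z in zones)


def uniform_total(zones) -> float:
    """Total litres when the driest zone's rate is applied to the whole field."""
    highest = max(rate_l_per_ha(z) for z in zones)
    return highest * sum(z.area_ha for z in zones)


# Hypothetical 20-hectare field split into three management zones.
FIELD = [
    Zone("north", 10.0, 30),
    Zone("east", 5.0, 20),
    Zone("south", 5.0, 25),
]

if __name__ == "__main__":
    print("variable-rate litres:", variable_rate_total(FIELD))
    print("uniform litres:      ", uniform_total(FIELD))
```

With these made-up numbers the targeted approach uses 750,000 litres against 2,000,000 litres for uniform application. Real savings depend on the field, the crop, and the equipment.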

In addition, robotic technologies enable more reliable monitoring and management of natural resources, such as air and water quality. They also give producers greater control over plant and animal production, processing, distribution, and storage, which results in:

- Greater efficiencies and lower prices

- Safer growing conditions and safer foods

- Reduced environmental and ecological impact

NIFA’S IMPACT:

NIFA advances agricultural technology and ensures that the nation’s agricultural industries are able to utilize it by supporting:

- Basic research and development in physical sciences, engineering, and computer sciences

- Development of agricultural devices, sensors, and systems

- Applied research that assesses how to employ technologies economically and with minimal disruption to existing practices

- Assistance and instruction to farmers on how to use new technologies

Forbes Magazine, July 5, 2016 Issue:

Agriculture technology is no longer a niche that no one's heard about. Agriculture has confirmed its place as an industry of interest for the venture capital community after investment in agtech broke records for the past three years in a row, reaching $4.6 billion in 2015.

For a long time, it wasn’t a target sector for venture capitalists or entrepreneurs. Only a handful of funds served the market, largely focused on biotech opportunities. And until recently, entrepreneurs were also too focused on what Steve Case calls the “Second Wave” of innovation (web services, social media, and mobile technology) to look at agriculture, the least digitized industry in the world, according to McKinsey & Co.

Michael Macrie, chief information officer at agriculture cooperative Land O’Lakes, recently told Forbes that he counted only 20 agtech companies as recently as 2010.

But now, the opportunity to bring agriculture, a $7.8 trillion industry representing 10% of global GDP, into the modern age has caught the attention of a growing number of investors globally. In our 2015 annual report, we recorded 503 individual companies raising funding.

This increasing interest in the sector coincides with a more general “Third Wave” in technological innovation, where all companies are internet-powered tech companies, and startups are challenging the biggest incumbent industries like hospitality, transport, and now agriculture.

There is huge potential, and need, to help the ag industry find efficiencies, conserve valuable resources, meet global demands for protein, and ensure consumers have access to clean, safe, healthy food. In all this, technological innovation is inevitable.

It’s a complex and diverse industry, however, with many subsectors for farmers, investors, and industry stakeholders to navigate. Entrepreneurs are innovating across agricultural disciplines, aiming to disrupt the beef, dairy, row crop, permanent crop, aquaculture, forestry, and fisheries sectors. Each discipline has a specific set of needs that will differ from the others.

___________________________________________________________________________

Advancements in Food Technology:

Food technology is a branch of food science that deals with the production processes that make foods.

Early scientific research into food technology concentrated on food preservation. Nicolas Appert’s development in 1810 of the canning process was a decisive event. The process wasn’t called canning then and Appert did not really know the principle on which his process worked, but canning has had a major impact on food preservation techniques.

Louis Pasteur's research on the spoilage of wine and his description of how to avoid spoilage in 1864 was an early attempt to apply scientific knowledge to food handling.

Besides research into wine spoilage, Pasteur researched the production of alcohol, vinegar, wines and beer, and the souring of milk. He developed pasteurization—the process of heating milk and milk products to destroy food spoilage and disease-producing organisms.

Through his research into food technology, Pasteur became a pioneer of bacteriology and of modern preventive medicine.

Developments in food technology have contributed greatly to the food supply and have changed our world. Some of these developments are:

- Instantized Milk Powder - D.D. Peebles (U.S. patent 2,835,586) developed the first instant milk powder, which has become the basis for a variety of new products that are rehydratable. This process increases the surface area of the powdered product by partially rehydrating spray-dried milk powder.

- Freeze-drying - The first application of freeze drying was most likely in the pharmaceutical industry; however, a successful large-scale industrial application of the process was the development of continuous freeze drying of coffee.

- High-Temperature Short Time Processing - These processes for the most part are characterized by rapid heating and cooling, holding for a short time at a relatively high temperature and filling aseptically into sterile containers.

- Decaffeination of Coffee and Tea - Decaffeinated coffee and tea were first developed on a commercial basis in Europe around 1900. The process is described in U.S. patent 897,763. Green coffee beans are treated with water, heat and solvents to remove the caffeine from the beans.

- Process optimization - Food technology now allows foods to be produced more efficiently, and oil-saving technologies are now available in different forms. Production methods and methodology have also become increasingly sophisticated.

Consumer Acceptance:

In the past, consumer attitudes towards food technologies were not widely discussed and played little role in food development. Nowadays the food chain is long and complicated, and foods and food technologies are diverse; consequently, consumers are uncertain about food quality and safety and find it difficult to orient themselves in the subject.

That is why consumer acceptance of food technologies is an important question. Acceptance of food products now very often depends on the potential benefits and risks associated with the food, including the technology used to process it.

Attributes like “uncertain”, “unknown” or “unfamiliar” are associated with consumers’ risk perception, and consumers will very likely reject products linked to these attributes. Innovative food processing technologies in particular are connected to these characteristics and are perceived as risky by consumers.

Acceptance varies widely between food technologies. Whereas pasteurization is well recognized, high pressure treatment or even microwaves are very often perceived as risky. Studies done within the HighTech Europe project found that traditional technologies were well accepted, in contrast to innovative technologies.

Consumers form their attitudes towards innovative food technologies through three main mechanisms: first, knowledge or beliefs about the risks and benefits associated with the technology; second, their own experience; and third, higher-order values and beliefs.

Acceptance of innovative technologies can be improved by providing non-emotional and concise information about these new technological processes. According to a study by the HighTech Europe project, written information also seems to have a higher impact than audio-visual information on consumers’ sensory acceptance of products processed with innovative food technologies.

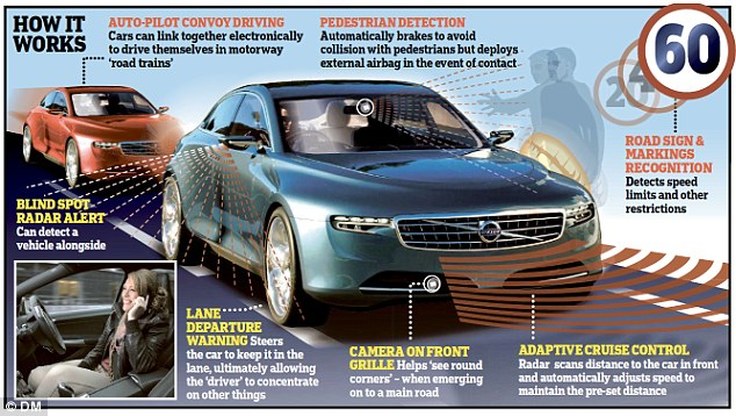

"Next-gen car technology just got another big upgrade" reported by the Washington Post (7/13/17)

Video: Chris Urmson: How a driverless car sees the road by TED Talk

Pictured: Driverless Robo-car guided by radar lasers.

By Brian Fung July 13

Federal regulators have approved a big swath of new airwaves for vehicle radar devices, opening the door to cheaper, more precise sensors that may accelerate the arrival of high-tech, next-generation cars.

Many consumer vehicles already use radar for collision avoidance, automatic lane-keeping and other purposes. But right now, vehicle radar is divided into a couple of different chunks of the radio spectrum. On Thursday, the Federal Communications Commission voted to consolidate these chunks — and added a little more, essentially giving extra bandwidth to vehicle radar.

“While we enthusiastically harness new technology that will ultimately propel us to a driverless future, we must maintain our focus on safety — and radar applications play an important role,” said Mignon Clyburn, a Democratic FCC commissioner.

Radar is a key component not only in today’s computer-assisted cars, but also in the fully self-driving cars of the future. There, the technology is even more important because it helps the computer make sound driving decisions.

Thursday’s decision by the FCC lets vehicle radar take advantage of all airwaves ranging from frequencies of 76 GHz to 81 GHz — reflecting an addition of four extra gigahertz — and ends support for the technology in the 24 GHz range.

Expanding the amount of airwaves devoted to vehicle radar could also make air travel safer, said FCC Chairman Ajit Pai, by allowing for the installation of radar devices on the wingtips of airplanes.

“Wingtip collisions account for 25 percent of all aircraft ground incidents,” said Pai. “Wingtip radars on aircraft may help with collision avoidance on the tarmac, among other areas.”

Although many analysts say fully self-driving cars are still years away from going mainstream, steps like these could help bring that future just a bit more within reach.
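The article's link between extra bandwidth and "more precise sensors" can be made concrete with the standard radar relation for range resolution, ΔR = c / (2B), where c is the speed of light and B is the signal bandwidth. The sketch below is an illustration under stated assumptions, not a description of any particular product: it assumes a radar could use the entire 76 GHz to 81 GHz allocation (5 GHz) as its bandwidth, and compares that with a 1 GHz bandwidth, the figure implied by the article's "addition of four extra gigahertz".

```python
# Speed of light in metres per second (exact, by definition of the metre).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_resolution_m(bandwidth_hz: float) -> float:
    """Theoretical range resolution c / (2 * B), in metres."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    # Bandwidths are assumptions for illustration (see the paragraph above).
    for label, bandwidth_ghz in (("1 GHz", 1.0), ("5 GHz", 5.0)):
        resolution_cm = range_resolution_m(bandwidth_ghz * 1e9) * 100.0
        print(f"{label} bandwidth: about {resolution_cm:.1f} cm")
```

Under these assumptions, the smallest separation at which two objects can be told apart in range shrinks from roughly 15 cm to roughly 3 cm. Practical sensors may use less than the full band and are limited by other factors as well.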

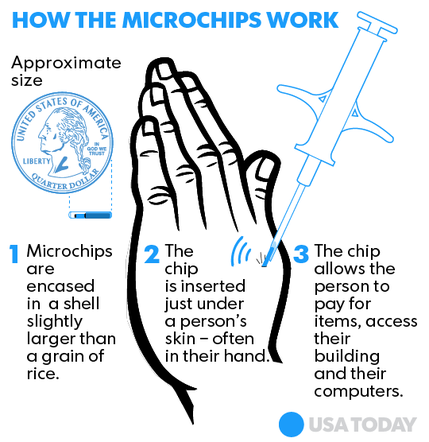

Radio Frequency Identification (RFID) including a case of RFID implant in Humans (ABC July 24, 2017)

Click Here then Click on arrow to the embedded Video "WATCH: Company offers to implant microchips in employees"

Tech company workers agree to have microchips implanted in their hands

By ENJOLI FRANCIS REBECCA JARVIS Jul 24, 2017, 6:48 PM ET ABC News

"Some workers at a company in Wisconsin will soon be getting microchips in order to enter the office, log into computers and even buy a snack or two with just a swipe of a hand.

Todd Westby, the CEO of tech company Three Square Market, told ABC News today that of the 80 employees at the company's River Falls headquarters, more than 50 agreed to get implants. He said that participation was not required.

The microchip uses radio frequency identification (RFID) technology and was approved by the Food and Drug Administration in 2004. The chip is the size of a grain of rice and will be placed between a thumb and forefinger.

Westby said that when his team was approached with the idea, there was some reluctance mixed with excitement.

But after further conversations and the sharing of more details, the majority of managers were on board, and the company opted to partner with BioHax International to get the microchips.

Westby said the chip is not GPS enabled, does not allow for tracking workers and does not require passwords.

"There's really nothing to hack in it, because it is encrypted just like credit cards are ... The chances of hacking into it are almost nonexistent because it's not connected to the internet," he said. "The only way for somebody to get connectivity to it is to basically chop off your hand."

Three Square Market is footing the bill for the microchips, which cost $300 each, and licensed piercers will be handling the implantations on Aug. 1. Westby said that if workers change their minds, the microchips can be removed, as if taking out a splinter.

He said his wife, young adult children and others will also be getting the microchips next week.

Critics warned that there could be dangers in how the company planned to store, use and protect workers' information.

Adam Levin, the chairman and founder of CyberScout, which provides identity protection and data risk services, said he would not put a microchip in his body.

"Many things start off with the best of intentions, but sometimes intentions turn," he said. "We've survived thousands of years as a species without being microchipped. Is there any particular need to do it now? ... Everyone has a decision to make. That is, how much privacy and security are they willing to trade for convenience?"

Jowan Osterlund of BioHax said implanting people was the next step for electronics.

"I'm certain that this will be the natural way to add another dimension to our everyday life," he told The Associated Press...."

Click here for rest of Article.

___________________________________________________________________________

Radio-frequency identification (RFID) uses electromagnetic fields to automatically identify and track tags attached to objects. The tags contain electronically stored information. Passive tags collect energy from a nearby RFID reader's interrogating radio waves.

Active tags have a local power source such as a battery and may operate at hundreds of meters from the RFID reader. Unlike a barcode, the tag need not be within the line of sight of the reader, so it may be embedded in the tracked object. RFID is one method for Automatic Identification and Data Capture (AIDC).

RFID tags are used in many industries. For example, an RFID tag attached to an automobile during production can be used to track its progress through the assembly line; RFID-tagged pharmaceuticals can be tracked through warehouses; and implanting RFID microchips in livestock and pets allows for positive identification of animals.

Since RFID tags can be attached to cash, clothing, and possessions, or implanted in animals and people, the possibility of reading personally-linked information without consent has raised serious privacy concerns. These concerns led to the development of standard specifications addressing privacy and security issues.

ISO/IEC 18000 and ISO/IEC 29167 use on-chip cryptography methods for untraceability, tag and reader authentication, and over-the-air privacy. ISO/IEC 20248 specifies a digital signature data structure for RFID and barcodes providing data, source and read method authenticity.

This work is done within ISO/IEC JTC 1/SC 31, Automatic identification and data capture techniques.

In 2014, the world RFID market was worth US$8.89 billion, up from US$7.77 billion in 2013 and US$6.96 billion in 2012. This includes tags, readers, and software/services for RFID cards, labels, fobs, and all other form factors. The market value is expected to rise to US$18.68 billion by 2026.
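The growth implied by these market figures can be worked out with a short calculation. The following is a minimal sketch in Python; the function name `growth_rate` and the dictionary `market` are illustrative names chosen here, and the only data used are the four figures quoted above.

```python
def growth_rate(start_value, end_value, years=1):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# World RFID market size in billions of US dollars, as quoted in the text.
market = {2012: 6.96, 2013: 7.77, 2014: 8.89, 2026: 18.68}

# Year-over-year growth for the two historical steps.
growth_2013 = growth_rate(market[2012], market[2013])
growth_2014 = growth_rate(market[2013], market[2014])

# Average annual growth implied by the 2026 projection.
implied_cagr = growth_rate(market[2014], market[2026], years=2026 - 2014)

print(f"2012 to 2013: {growth_2013:.1%}")
print(f"2013 to 2014: {growth_2014:.1%}")
print(f"2014 to 2026 (implied average per year): {implied_cagr:.1%}")
```

Under these figures, the projection to 2026 implies a lower average annual growth rate than either of the two year-over-year increases reported for 2013 and 2014.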

Click on any of the following blue hyperlinks for more about Radio-Frequency Identification (RFID):

- History

- Design

  - Tags

  - Readers

  - Frequencies

  - Signaling

  - Miniaturization

- Uses

  - Commerce

    - Access control

    - Advertising

    - Promotion tracking

  - Transportation and logistics

    - Intelligent transportation systems

    - Hose stations and conveyance of fluids

    - Track & Trace test vehicles and prototype parts

  - Infrastructure management and protection

  - Passports

  - Transportation payments

  - Animal identification

  - Human implantation

  - Institutions

    - Hospitals and healthcare

    - Libraries

    - Museums

    - Schools and universities

  - Sports

  - Complement to barcode

  - Waste Management

  - Telemetry

- Optical RFID

- Regulation and standardization

- Problems and concerns

  - Data flooding

  - Global standardization

  - Security concerns

  - Health

  - Exploitation

  - Passports

  - Shielding

- Controversies

  - Privacy

  - Government control

  - Deliberate destruction in clothing and other items

- See also:

- AS5678

- Balise

- Bin bug

- Chipless RFID

- Internet of Things

- Mass surveillance

- Near Field Communication

- PositiveID

- Privacy by design

- Proximity card

- Resonant inductive coupling

- RFID on metal

- RSA blocker tag

- Smart label

- Speedpass

- TecTile

- Tracking system

- RFID in schools

- UHF regulations overview by GS1

- How RFID Works at HowStuffWorks

- Privacy concerns and proposed privacy legislation

- RFID at DMOZ

- What is RFID? - Animated Explanation

- IEEE Council on RFID

- RFID tracking system

The History of Technology

YouTube Video: Ellen Discusses Technology's Detailed History

(The Ellen Show)

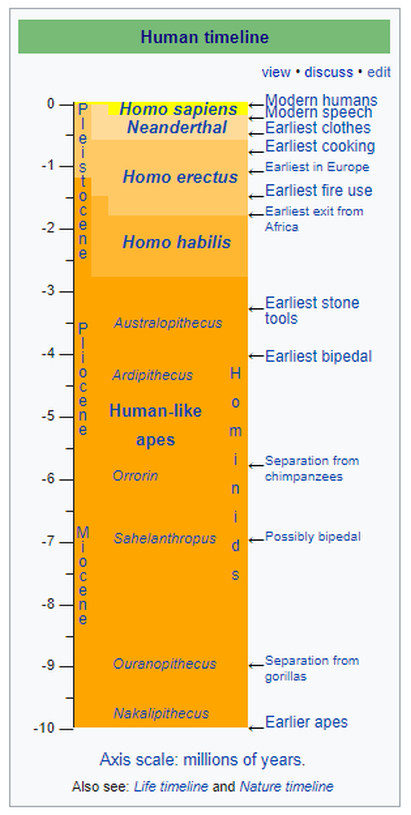

The history of technology is the history of the invention of tools and techniques, and it parallels other aspects of the history of humanity. Technology can refer to methods ranging from as simple as language and stone tools to the complex genetic engineering and information technology that has emerged since the 1980s.

New knowledge has enabled people to create new things, and conversely, many scientific endeavors are made possible by technologies which assist humans in travelling to places they could not previously reach, and by scientific instruments by which we study nature in more detail than our natural senses allow.

Since much of technology is applied science, technical history is connected to the history of science. Since technology uses resources, technical history is tightly connected to economic history. From those resources, technology produces other resources, including technological artifacts used in everyday life.

Technological change affects, and is affected by, a society's cultural traditions. It is a force for economic growth and a means to develop and project economic, political and military power.

Measuring technological progress:

Many sociologists and anthropologists have created social theories dealing with social and cultural evolution. Some, like Lewis H. Morgan, Leslie White, and Gerhard Lenski, have declared technological progress to be the primary factor driving the development of human civilization.

Morgan's concept of three major stages of social evolution (savagery, barbarism, and civilization) can be divided by technological milestones, such as fire. White argued the measure by which to judge the evolution of culture was energy.

For White, "the primary function of culture" is to "harness and control energy." White differentiates between five stages of human development.

White introduced a formula, P = E*T, where E is a measure of energy consumed, and T is the measure of efficiency of technical factors utilizing the energy. In his own words, "culture evolves as the amount of energy harnessed per capita per year is increased, or as the efficiency of the instrumental means of putting the energy to work is increased". Nikolai Kardashev extrapolated White's theory, creating the Kardashev scale, which categorizes the energy use of advanced civilizations.
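White's formula can be illustrated with a small worked example. The sketch below, in Python, simply multiplies the two factors; the function name `white_p` and all numeric values are hypothetical and chosen only to show how the product responds when either factor changes. The text does not give units for E or T, so none are assumed here.

```python
def white_p(energy_per_capita, technical_efficiency):
    """White's product P = E * T.

    E: energy harnessed per capita per year (units are hypothetical here).
    T: efficiency of the technical means that put the energy to work.
    """
    return energy_per_capita * technical_efficiency


# Hypothetical baseline society.
baseline = white_p(energy_per_capita=1.0, technical_efficiency=1.0)

# Doubling the energy harnessed, with the same tools.
more_energy = white_p(energy_per_capita=2.0, technical_efficiency=1.0)

# Same energy, but tools that use it twice as efficiently.
better_tools = white_p(energy_per_capita=1.0, technical_efficiency=2.0)

print(baseline, more_energy, better_tools)
```

Either route raises P by the same factor, which matches White's statement that culture evolves as the energy harnessed increases "or" as the efficiency of the means of using it increases.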

Lenski's approach focuses on information. The more information and knowledge (especially allowing the shaping of natural environment) a given society has, the more advanced it is. He identifies four stages of human development, based on advances in the history of communication.

Lenski also differentiates societies based on their level of technology, communication and economy.

In economics, productivity is a measure of technological progress. Productivity increases when fewer inputs (labor, energy, materials or land) are used in the production of a unit of output.
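As a rough illustration of this definition, productivity can be computed as output divided by input. The Python sketch below uses a single input (labor hours) for simplicity; the function name `productivity` and the figures are hypothetical and not taken from any real data.

```python
def productivity(output_units, input_units):
    """Units of output produced per unit of input."""
    if input_units <= 0:
        raise ValueError("input_units must be positive")
    return output_units / input_units


# Hypothetical factory producing 100 units of output.
before = productivity(output_units=100, input_units=50)  # 50 labor hours
after = productivity(output_units=100, input_units=40)   # 40 labor hours

print(f"before: {before} units per hour")
print(f"after:  {after} units per hour")
```

The same output made with fewer labor hours gives a higher ratio, which is the sense in which productivity increases when fewer inputs are used per unit of output.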