Copyright © 2015 Bert N. Langford (Images may be subject to copyright. Please send feedback)

Welcome to Our Generation USA!

Below, we cover

Modern Medicine

including the Medical Profession as well as ailments and cures, whether physical or mental in cause and treatment.

See also: "Health & Fitness"

The Human Genome Project

YouTube Video: Cracking the Code Of Life | PBS Nova | 2001

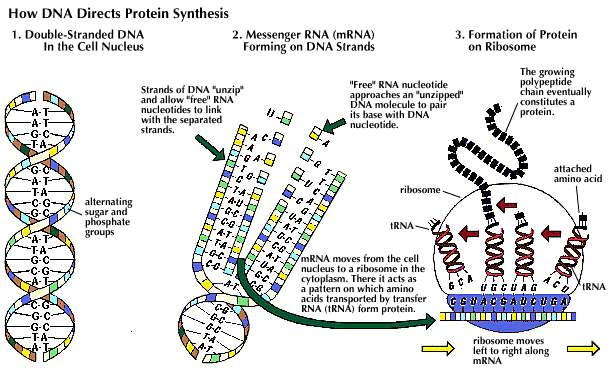

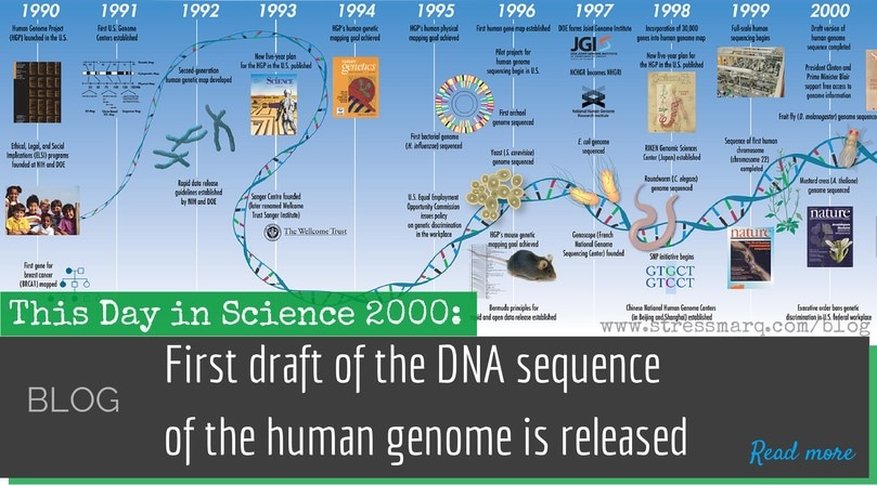

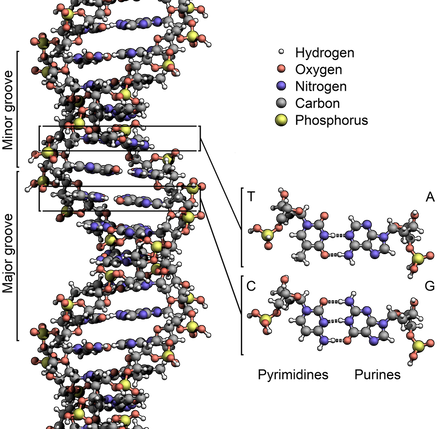

The Human Genome Project (HGP) is an international scientific research project with the goal of determining the sequence of chemical base pairs which make up human DNA, and of identifying and mapping all of the genes of the human genome from both a physical and functional standpoint.

It remains the world's largest collaborative biological project. Planning began after the US government picked up the idea in 1984; the project was formally launched in 1990 and declared complete in 2003.

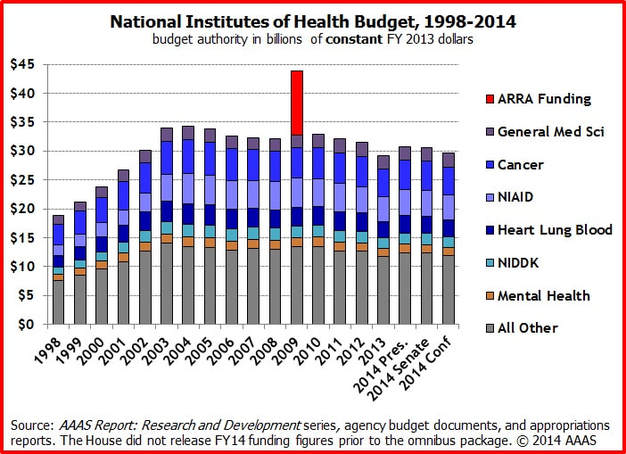

Funding came from the US government through the National Institutes of Health as well as numerous other groups from around the world. A parallel project was conducted outside of government by the Celera Corporation, or Celera Genomics, which was formally launched in 1998. Most of the government-sponsored sequencing was performed in twenty universities and research centers in the United States, the United Kingdom, Japan, France, Germany, and China.

The Human Genome Project originally aimed to map the nucleotides contained in a human haploid reference genome (more than three billion of them). The "genome" of any given individual is unique; mapping "the human genome" therefore involves sequencing multiple variations of each gene.

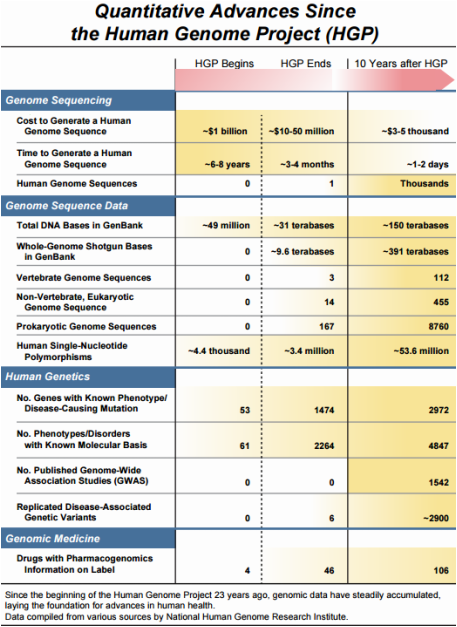

A Decade of Advancements Since the Human Genome Project

YouTube Video: Sequencing of the Human Genome to Treat Cancer - Mayo Clinic

A View by Susan Young Rojahn of MIT Technology Review.

April 12, 2013: "This Sunday, the National Institutes of Health will celebrate the 10th anniversary of the completion of the Human Genome Project.

Since the end of the 13-year and $3-billion effort to determine the sequence of a human genome (a mosaic of genomes from several people in this case), there have been some impressive advances in technology and biological understanding and the dawn of a new branch of medicine: medical genomics.

Today, sequencing a human genome can cost less than $5,000 and take only a day or two. This means genome analysis is now in the cost range of a sophisticated medical test, said Eric Green, director of the National Human Genome Research Institute, in a teleconference on Friday. Doctors can now use DNA analysis to diagnose challenging cases, such as mysterious neurodevelopmental disorders, mitochondrial disease, or other diseases of unknown origin in children (see “Making Genome Sequencing Part of Clinical Care”). In such cases, genomic analysis can identify disease-causing mutations 19 percent to 33 percent of the time, according to a recent analysis.

Genomics is possibly making its biggest strides in cancer medicine. Doctors can now sequence a patient’s tumor to identify the best treatments. Specific drug targets may be found in as many as 70 percent of tumors (see “Foundation Medicine: Personalizing Cancer Drugs” and “Cancer Genomics”).

The dropping price of DNA sequencing is also changing prenatal care. A pregnant woman now has the option to forgo amniocentesis or other invasive methods of checking for chromosome aberrations in her fetus. Instead, she can get a simple blood draw (see “A Brave New World of Prenatal DNA Sequencing”).

Before the Human Genome Project, researchers knew the genetic basis of about 60 disorders. Today, they know the basis of nearly 5,000 conditions. Prescriptions are also changing because of genomics. More than 100 different FDA-approved drugs are now packaged with genomic information that tells doctors to test their patients for genetic variants linked to efficacy, dosages or risky side-effects.

But the work for human genome scientists is hardly over. There are still regions of the human genome yet to be sequenced. Most of these still unyielding regions are in parts of chromosomes that are full of complex, repetitive sequence, said Green. The much larger challenge will be to decipher what all the A’s, T’s, G’s and C’s in the human genome mean. Only a small proportion of the genome encodes for proteins and there is ongoing debate as to how much of the remainder is functional or just junk or redundant sequences.

And many scientists agree that the advances in medical genomics are just the tip of the iceberg— much more work lies ahead to fully harness genomic information to improve patient health.

You can see more of the numbers behind the advances in genome science since the Human Genome Project in this chart published by the NHGRI on Friday." (See chart above.)

(For rest of article, click on title above).

10 Top Medical Breakthroughs

Exciting advances to health care are coming next year. By Candy Sagon, AARP Bulletin, December 2015

YouTube Video: Top 10 Medical Breakthroughs according to WatchMojo

Pictured: A mind-controlled robotic limb may help those who suffer from paralysis in the future. — Zackary Canepari/ The New York Times/Redux

Game-changing new clot retriever for stroke victims. A way to restore movement by linking the brain to paralyzed limbs. New vaccines for our deadliest cancers. These will be the biggest medical advancements for 2016, according to three nationally known experts: Francis Collins, M.D., director of the National Institutes of Health; Michael Roizen, M.D., director of the Cleveland Clinic's Wellness Institute; and pathologist Michael Misialek, M.D., of Tufts University School of Medicine and Newton-Wellesley Hospital. Here are their top predictions.

1. Personalized treatment

What if you could get medical care tailored just for you, based on genetic information? The Precision Medicine Initiative, set to launch in 2016, would revolutionize how we treat disease, taking it from a one-size-fits-all approach to one fitted to each patient's unique differences. The project, Collins says, hopes to answer such important questions as: Why does a medical treatment work for some people with the same disease but not for others? One million volunteers will be asked to share their genomic and other molecular information with researchers working to understand factors that influence health.

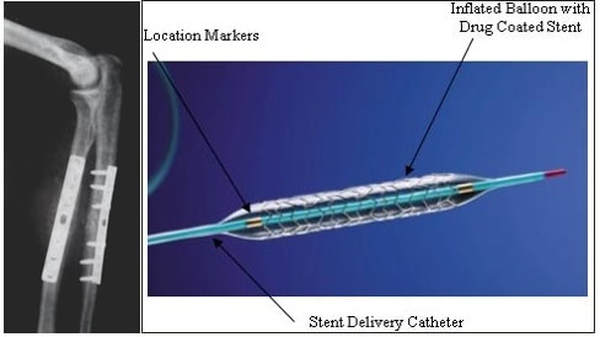

2. Busting brain clots

A tiny new tool to get rid of brain clots will be a "game changer for stroke treatment," Roizen says. Although clot-dissolving drugs are the first line of treatment for the most common type of stroke, when they don't do the trick, physicians can now use a stent retriever to remove the small mass. A wire with a mesh-like tip is guided through the artery into the brain to grab the clot. When the wire is removed, the clot goes with it. The American Heart Association has given the device its strongest recommendation, after studies found it improves the odds that certain patients will survive and function normally again.

3. Brain-limb connection

The goal of linking the brain to a robotic limb to help paralyzed people and amputees move naturally is closer than ever. Previously, brain implants to help move these artificial limbs have had limited success because the person had to think about each individual action (open hand, close hand) in order to move the limb. But now researchers are putting implants in the area of the brain that controls the intention to move, not just the physical movement itself. This allows patients to fluidly guide a robotic limb. "We think this will get FDA approval in the next year and will have a major clinical impact on paralyzed patients," Roizen says.

4. Fine-tuning genes

Next year could see major strides toward the goal of cutting harmful genetic mistakes from a person's DNA. A gene-editing tool is a powerful technology that allows scientists to easily correct or edit DNA. "It basically gives you a scissors to cut out pieces of genes," Roizen explains. The technology was recently used to eradicate leukemia in a British child by giving her gene-edited immune cells to fight off the disease. This could represent a huge step toward treating other diseases, including correcting gene mutations that cause inherited diseases, but ethicists and scientists worry the technology could also be used to alter traits for nonmedical reasons.

5. New cancer vaccines

Your body's immune system fights off germs that cause infections — could it be taught to fight off cancer cells? That's the idea behind new immunotherapy cancer vaccines, which train the immune system to use its antiviral fighting response to destroy cancer cells without harming healthy cells. The Food and Drug Administration (FDA) already has approved such vaccines for the treatment of advanced prostate cancer and melanoma. Current research is focused on pairing new and old vaccines, including the tetanus vaccine with a newer cancer vaccine to treat glioblastoma, a type of brain cancer. Those who received the dual vaccine lived three to seven more years after treatment than those who received an injection without the tetanus portion. Among the most eagerly anticipated vaccines in 2016, Misialek says, is a lung cancer vaccine. Work on such a vaccine, first developed in Cuba, is already underway here.

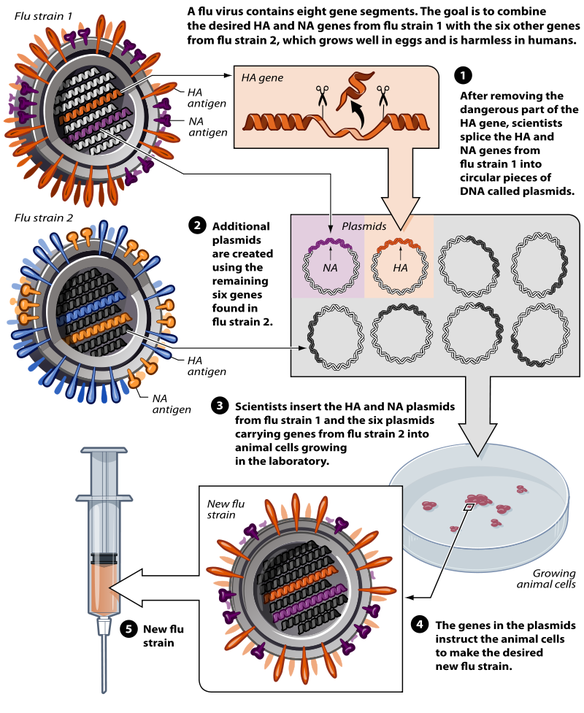

6. Faster public health vaccines

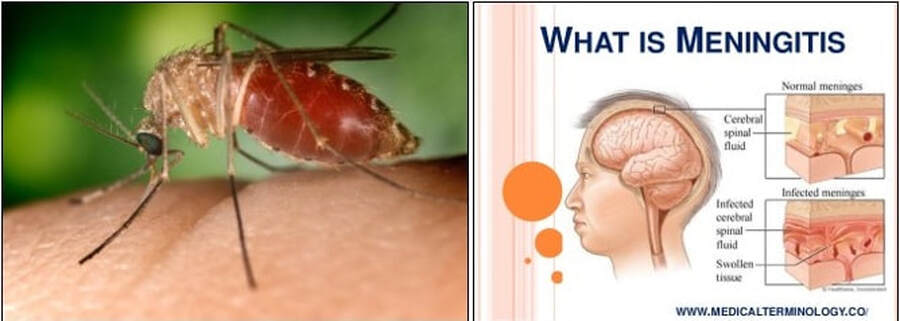

In the wake of the Ebola outbreak last year, the process for producing vaccines to protect the public against a possible epidemic has gone into warp drive — a feat that makes it the Cleveland Clinic's top medical innovation for 2016. The fear that, with international travel, a single person could potentially infect huge numbers of people "has led to a system to be able to immunize a large number of people really fast," Roizen explains. Where previously it took decades to develop a vaccine, the Ebola vaccine was ready in six months. A similarly accelerated process was behind the approval of a meningitis B vaccine following outbreaks at two universities.

7. Targeting cancer

Researchers are closing in on the day when a single drug will treat many different cancers. While traditional clinical trials focus on testing a drug for a particular type of cancer based on its location — breast or lung, for example — new studies are testing therapies that target a specific genetic mutation found in a tumor, regardless of where the cancer originated. "We're throwing all cancers with the same mutation in one basket," pathologist Misialek says, and then testing a drug that targets that mutation. Results of an international basket trial found that a drug focused on a single genetic mutation can be effective across multiple cancer types, including a common form of lung cancer and a rare form of bone cancer.

8. Wearable sensors

Wearable health sensors could soon change the way people with chronic diseases, such as diabetes, heart disease and asthma, control and monitor their condition. Drug companies and researchers are looking into using wearable technology to monitor patients more accurately in clinical trials, and hospitals and outpatient clinics could use it to monitor patients after discharge. Devices, from stick-on sensors to wristbands and special clothing, can already be used to monitor respiratory and heart rates, including EKG readings, as well as body temperature and glucose level. Stress levels and inflammation readings may be next, Roizen says.

9. Fighting superbugs

Decoding the DNA of bacteria is transforming the way we identify infectious diseases in the microbiology lab. That could help prevent the spread of dangerous, drug-resistant infections in hospitals and nursing homes, says Misialek. "Instead of identifying bugs in the traditional way and waiting days or weeks for the results, we can analyze genes and get exact identification sooner," he adds. That could help protect vulnerable patients against the spread of superbugs like MRSA, C. diff and Streptococcus pneumoniae, which currently resist treatment.

10. Better cancer screening

Some of the deadliest, most difficult-to-find cancers may be detected by analyzing blood for abnormal proteins. Cancer produces these abnormal protein structures, but until now blood tests weren't sensitive enough to identify them. A new type of analysis allows researchers to find the proteins earlier, which means cancer treatment can be started at an earlier stage. Cleveland Clinic experts predict this will lead to more effective tests for pancreatic cancer, considered the deadliest type, with only about 7 percent of patients surviving five years, as well as for prostate and ovarian cancer. In a trial, a new test using protein analysis identified twice as many early-stage cases of ovarian cancer as current tests do.

At the bottom of the AARP article linked above, you can click on a video about a new breakthrough in the treatment of Parkinson's disease.

Immunology and Immunotherapy:

The list of cancers being taken down by immunotherapy keeps growing (as reported by the Washington Post on April 19, 2016)

YouTube Video: "Immunotherapy Fights Cancer"

Pictured: From the Article: “In immunotherapy, T-cells from a cancer patient can be genetically engineered to be specific to a tumor. Dendritic cells (lower left) are special cells that help the immune system recognize the cancer cells and initiate a response. Drugs called "checkpoint inhibitors" also can spur the immune system. (Science Source)”

Washington Post Article By Laurie McGinley April 19 at 4:00 PM

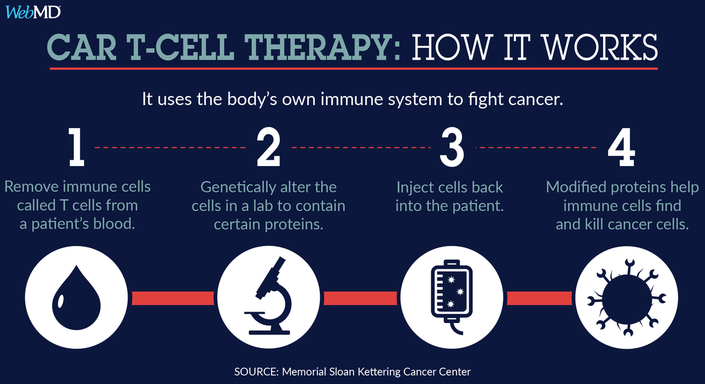

"NEW ORLEANS — New immunotherapy drugs are showing significant and extended effectiveness against a broadening range of cancers, including rare and intractable tumors often caused by viruses. Researchers say these advances suggest the treatment approach is poised to become a critical part of the nation’s anti-cancer strategy.

Scientists reported Tuesday on two new studies showing that the medications, which marshal the body’s own immune defenses, are now proving effective against recurrent, difficult-to-treat head and neck cancer and an extremely lethal skin cancer called Merkel cell carcinoma. The diseases can be caused by viruses as well as DNA mutations, and the data show that the drugs help the immune system to recognize and attack cancers resulting from either cause.

The new studies appear to be the first to find that "virus-driven cancers can be amenable to treatment by immunotherapy," said Paul Nghiem, an investigator with the Fred Hutchinson Cancer Research Center in Seattle who led the Merkel cell study. Since viruses and other pathogens are responsible for more than 20 percent of all cancers, “these results have implications that go far beyond" Merkel cell carcinoma, which affects about 2,000 Americans a year, he said.

The new data, plus research released Sunday that showed sharply higher survival rates among advanced-melanoma patients who received immunotherapy, is prompting growing albeit guarded optimism among researchers attending the American Association for Cancer Research annual meeting here. In addition to melanoma, the infusion drugs already have been approved for use against lung and kidney cancers...."

(Click Here for Rest of Washington Post Article)

___________________________________________________________________________

Below, we cover the underlying Medical Sciences of Immunology and Immunotherapy:

Excerpted from Stanford University: "Immunology & Immunotherapy of Cancer Program":

The Program's two major goals are:

- To understand the nature of the immune system and its response to malignancies.

- To explore auto- and allo-immune responses to cancer with the goal of enabling the discovery and development of more effective anti-tumor immunotherapy.

These goals will be achieved by fostering collaborative research, advancing the latest technologies to probe immunological mechanisms, and by enhancing the infrastructure for clinical translation.

Research by program members has resulted in exciting new developments in both understanding immune function and developing novel therapies. Advances include the development and application of CyTOF and high-throughput sequencing for evaluating cellular function and responses and the translation of important concepts to the clinic in promising early phase clinical trials.

___________________________________________________________________________

Immunology is a branch of biology that covers the study of immune systems in all organisms. The Russian biologist Ilya Ilyich Mechnikov advanced studies on immunology and received the Nobel Prize for his work in 1908. He pinned small thorns into starfish larvae and noticed unusual cells surrounding the thorns. This was the active response of the body trying to maintain its integrity. It was Mechnikov who first observed the phenomenon of phagocytosis, in which the body defends itself against a foreign body, and coined the term.

Immunology charts, measures, and contextualizes:

- physiological functioning of the immune system in states of both health and disease;

- malfunctions of the immune system in immunological disorders (such as autoimmune diseases, hypersensitivities, immune deficiency, and transplant rejection);

- and the physical, chemical and physiological characteristics of the components of the immune system in vitro, in situ, and in vivo.

Immunology has applications in numerous disciplines of medicine, particularly in the fields of organ transplantation, oncology, virology, bacteriology, parasitology, psychiatry, and dermatology.

Even before the term immunity was derived from the Latin root immunis ("exempt"), early physicians had characterized organs that would later be shown to be essential components of the immune system.

The important lymphoid organs of the immune system are the thymus and bone marrow, along with chief lymphatic tissues such as the spleen, tonsils, lymph vessels, lymph nodes, adenoids, and liver.

When a patient's condition becomes an emergency, portions of immune system organs, including the thymus, spleen, bone marrow, lymph nodes, and other lymphatic tissues, can be surgically excised for examination while the patient is still alive.

Many components of the immune system are cellular in nature and not associated with any specific organ; rather, they are embedded in or circulate through various tissues throughout the body.

Click on any of the following blue hyperlinks for more about Immunology:

- Classical immunology

- Clinical immunology

- Developmental immunology

- Ecoimmunology and behavioural immunity

- Immunotherapy: see next topic

- Diagnostic immunology

- Cancer immunology

- Reproductive immunology

- Theoretical immunology

- Immunologist

- Career in immunology

- See also:

- History of immunology

- Immunomics

- International Reviews of Immunology

- List of immunologists

- Osteoimmunology

- Outline of immunology

- American Association of Immunologists

- British Society for Immunology

- Annual Review of Immunology journal

- BMC: Immunology at BioMed Central, an open-access journal publishing original peer-reviewed research articles.

- Nature reviews: Immunology

- The Immunology Database and Analysis Portal, a NIAID-funded database resource.

- Federation of Clinical Immunology Societies

Immunotherapy is the "treatment of disease by inducing, enhancing, or suppressing an immune response".

Immunotherapies designed to elicit or amplify an immune response are classified as activation immunotherapies, while those that reduce or suppress an immune response are classified as suppression immunotherapies. In recent years, immunotherapy has attracted great interest from researchers, clinicians, and pharmaceutical companies, particularly for its promise in treating various forms of cancer.

Immunomodulatory regimens often have fewer side effects than existing drugs, including less potential for creating resistance when treating microbial disease.

Cell-based immunotherapies are effective for some cancers. Immune effector cells such as lymphocytes, macrophages, dendritic cells, natural killer cells (NK Cell), cytotoxic T lymphocytes (CTL), etc., work together to defend the body against cancer by targeting abnormal antigens expressed on the surface of tumor cells.

Click on any of the following blue hyperlinks for more about Immunotherapy:

- Immunomodulators

- Activation immunotherapies

- Suppression immunotherapies

- Helminthic therapies

- See also:

- Biological response modifiers

- Interleukin-2 immunotherapy

- Microtransplantation

- Langreth, Robert (12 February 2009). "Cancer Miracles". Forbes.

- International Society for Biological Therapy of Cancer

Medical Ultrasound Technology

YouTube Video Diagnostic Ultrasonography Procedure

Pictured: Portable Ultrasound Machine

Medical ultrasound (also known as diagnostic sonography or ultrasonography) is a diagnostic imaging technique based on the application of ultrasound.

It is used to see internal body structures such as tendons, muscles, joints, vessels, and internal organs. Its aim is often to find the source of a disease or to exclude pathology. Examining pregnant women with ultrasound, called obstetric ultrasound, is widespread, but ultrasound is also used for non-invasive examination of organs such as the liver.

Ultrasound consists of sound waves with frequencies higher than those audible to humans (above 20,000 Hz). Ultrasonic images, also known as sonograms, are made by sending pulses of ultrasound into tissue using a probe.

The sound echoes off the tissue, with different tissues reflecting different amounts of sound. These echoes are recorded and displayed as an image to the operator.
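The echo principle above is what lets a scanner place each reflector at a particular depth. A minimal sketch, assuming the conventional average speed of sound in soft tissue of 1540 m/s (real tissues vary somewhat): the pulse travels to the reflector and back, so the depth is half of speed times round-trip time. The function names are illustrative only, not taken from any scanner's software.

```python
# Minimal pulse-echo ranging sketch (illustrative; not any scanner's actual code).
# ASSUMPTION: one constant speed of sound for soft tissue, 1540 m/s, the
# conventional calibration value. Real tissues differ slightly.
SOFT_TISSUE_SPEED_M_PER_S = 1540.0


def echo_depth_cm(round_trip_s: float,
                  speed_m_per_s: float = SOFT_TISSUE_SPEED_M_PER_S) -> float:
    """Depth in cm of a reflector, given the round-trip time of its echo in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    one_way_m = speed_m_per_s * round_trip_s / 2  # the pulse goes out and comes back
    return one_way_m * 100.0  # metres -> centimetres


def round_trip_us(depth_cm: float,
                  speed_m_per_s: float = SOFT_TISSUE_SPEED_M_PER_S) -> float:
    """Inverse: microseconds for an echo to return from a reflector depth_cm deep."""
    if depth_cm < 0:
        raise ValueError("depth cannot be negative")
    return 2 * (depth_cm / 100.0) / speed_m_per_s * 1e6


if __name__ == "__main__":
    for t_us in (13, 65, 130):
        depth = echo_depth_cm(t_us * 1e-6)
        print(f"echo after {t_us} us -> reflector at about {depth:.1f} cm")
```

Under this assumption each centimetre of depth adds roughly 13 microseconds of round-trip time, so imaging deeper structures means waiting longer for echoes before the next pulse can be sent.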

Many different types of images can be formed using sonographic instruments. The best-known type is the B-mode image, which displays the acoustic impedance of a two-dimensional cross-section of tissue. Other types of images can display blood flow, motion of tissue over time, the location of blood, the presence of specific molecules, the stiffness of tissue, or the anatomy of a three-dimensional region.

Compared to other prominent methods of medical imaging, ultrasound has several advantages. It provides images in real-time, it is portable and can be brought to the bedside, it is substantially lower in cost, and it does not use harmful ionizing radiation.

Drawbacks of ultrasonography include various limits on its field of view including patient cooperation and physique, difficulty imaging structures behind bone and air, and its dependence on a skilled operator.
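The difficulty with bone and air follows from how sound reflects at a boundary between two media. A minimal sketch of the standard normal-incidence intensity reflection coefficient, R = ((Z2 - Z1) / (Z2 + Z1))^2, where Z is acoustic impedance. The impedance values in the demo are hypothetical, in arbitrary but consistent units, chosen only to show the trend: when impedances are close, little sound is reflected and most continues deeper; at a soft-tissue/air interface nearly all of it is reflected, and bone also reflects a substantial share, leaving little to image what lies behind.

```python
def intensity_reflection(z1: float, z2: float) -> float:
    """Fraction of incident intensity reflected at a flat boundary between
    media with acoustic impedances z1 and z2, at normal incidence."""
    if z1 <= 0 or z2 <= 0:
        raise ValueError("acoustic impedances must be positive")
    return ((z2 - z1) / (z2 + z1)) ** 2


def transmitted_fraction(z1: float, z2: float) -> float:
    """Fraction of incident intensity that continues into the second medium
    (ignoring absorption, transmission is 1 - reflection)."""
    return 1.0 - intensity_reflection(z1, z2)


if __name__ == "__main__":
    # HYPOTHETICAL impedances in arbitrary, consistent units (illustration only).
    boundaries = {
        "similar tissues": (1.0, 1.1),
        "large mismatch": (1.0, 4.0),
        "air-like boundary": (1.0, 0.0003),
    }
    for name, (z1, z2) in boundaries.items():
        r = intensity_reflection(z1, z2)
        t = transmitted_fraction(z1, z2)
        print(f"{name}: reflected {r:.1%}, transmitted {t:.1%}")
```

The same relation is why a coupling gel is used between the probe and the skin: it removes the thin layer of air that would otherwise reflect almost all of the sound.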

Brain-Computer Interface

YouTube Video EEG mind controlled wheelchair - UC Berkeley ME102B/ME135 (demo)

Pictured: LEFT: The groundbreaking mind-controlled BIONIC ARM that plugs into the body and has given wearers back their sense of touch; RIGHT: The First Ever Bionic Leg is powered by man’s brain.

A brain–computer interface (BCI), sometimes called a mind-machine interface (MMI), direct neural interface (DNI), or brain–machine interface (BMI), is a direct communication pathway between an enhanced or wired brain and an external device.

BCIs are often directed at researching, mapping, assisting, augmenting, or repairing human cognitive or sensory-motor functions.

Research on BCIs began in the 1970s at the University of California, Los Angeles (UCLA) under a grant from the National Science Foundation, followed by a contract from DARPA. The papers published after this research also mark the first appearance of the expression brain–computer interface in scientific literature.

The field of BCI research and development has since focused primarily on neuroprosthetics applications that aim at restoring damaged hearing, sight and movement. Thanks to the remarkable cortical plasticity of the brain, signals from implanted prostheses can, after adaptation, be handled by the brain like natural sensor or effector channels.

Following years of animal experimentation, the first neuroprosthetic devices implanted in humans appeared in the mid-1990s.

For further amplification about this topic, click here.

Advancements in Neuro-imaging Technology

YouTube Video: New center advances biomedical and brain imaging

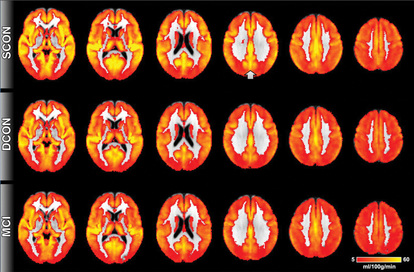

Pictured: Click for an article about the use of neuroimaging: From the National Institute of Mental Health: Neuroimaging and Mental Illness: A Window Into the Brain

Neuroimaging or brain imaging is the use of various techniques to directly or indirectly image the structure, function, or pharmacology of the nervous system.

It is a relatively new discipline within medicine and neuroscience/psychology.

Physicians who specialize in the performance and interpretation of neuroimaging in the clinical setting are neuroradiologists.

Neuroimaging falls into two broad categories:

- Structural imaging, which deals with the structure of the nervous system and the diagnosis of gross (large scale) intracranial disease (such as tumor), and injury, and

- Functional imaging, which is used to diagnose metabolic diseases and lesions on a finer scale (such as Alzheimer's disease) and also for neurological and cognitive psychology research and building brain-computer interfaces.

Functional imaging enables, for example, the processing of information by centers in the brain to be visualized directly. Such processing causes the involved area of the brain to increase metabolism and "light up" on the scan. One of the more controversial uses of neuroimaging has been research into "thought identification" or mind-reading.

For further amplification, click on any of the following:

- 1 History

- 2 Indications

- 3 Brain imaging techniques

- 3.1 Computed axial tomography

- 3.2 Diffuse optical imaging

- 3.3 Event-related optical signal

- 3.4 Magnetic resonance imaging

- 3.5 Functional magnetic resonance imaging

- 3.6 Magnetoencephalography

- 3.7 Positron emission tomography

- 3.8 Single-photon emission computed tomography

- 3.9 Cranial Ultrasound

- 3.10 Comparison of imaging types

- 4 See also

- 5 References

- 6 External links

Advancements in Radiology

YouTube Video MRI Brain Sequences - radiology video tutorial

Pictured: Los Angeles Times May 12, 2016 issue: Radiologists use MRIs to find biomarker for Alzheimer's disease

Radiology is a medical specialty that uses imaging to diagnose and treat diseases seen within the body.

A variety of imaging techniques are used to diagnose or treat diseases, including:

- X-ray radiography

- ultrasound

- computed tomography (CT)

- nuclear medicine, including positron emission tomography (PET)

- magnetic resonance imaging (MRI)

Interventional radiology is the performance of (usually minimally invasive) medical procedures with the guidance of imaging technologies.

The acquisition of medical imaging is usually carried out by the radiographer, often known as a radiologic technologist.

Depending on location, the diagnostic radiologist, or reporting radiographer, then interprets or "reads" the images and produces a report of their findings and impression or diagnosis. This report is then transmitted to the clinician who requested the imaging, either routinely or emergently.

Imaging exams are stored digitally in the picture archiving and communication system (PACS) where they can be viewed by all members of the healthcare team within the same health system and compared later on with future imaging exams.

Click on any of the following hyperlinks for amplification:

- 1 Diagnostic imaging modalities

- 2 Interventional radiology

- 3 Teleradiology

- 4 Professional training

- 5 See also

- 6 References

- 7 External links

Advancements in Organ Transplants

YouTube Video: Donation and Transplantation: How does it work?

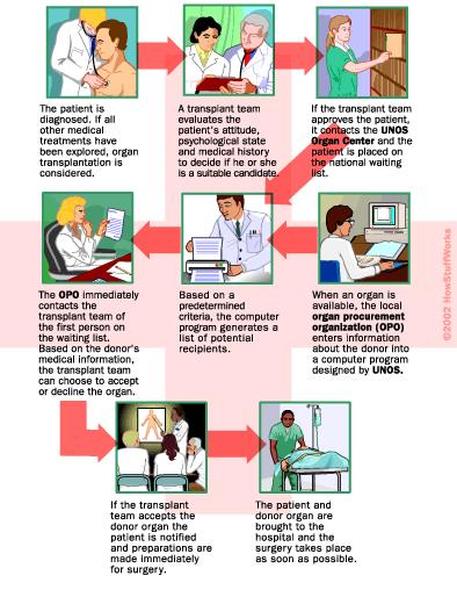

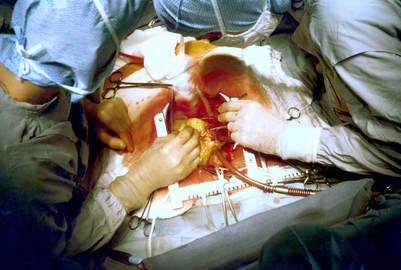

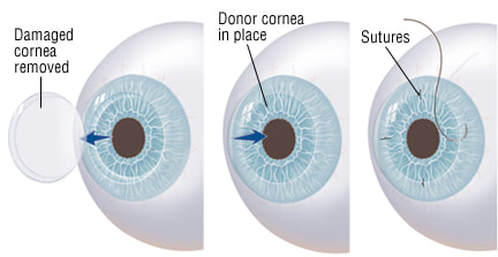

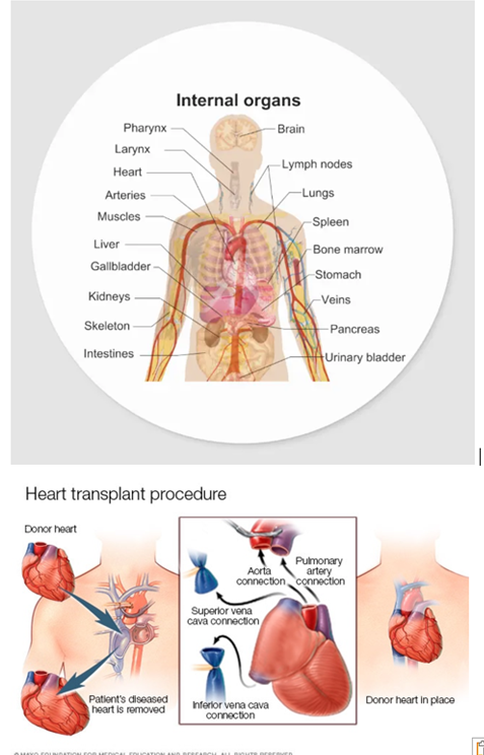

Pictured: How Organ Transplants Work

Organ transplantation is the moving of an organ from one body to another or from a donor site to another location on the person's own body, to replace the recipient's damaged or absent organ.

Organs and/or tissues that are transplanted within the same person's body are called autografts. Transplants performed between two subjects of the same species are called allografts.

Allografts can either be from a living or cadaveric source.

Organs that can be transplanted are the heart, kidneys, liver, lungs, pancreas, intestine, and thymus. Tissues include bones, tendons (both referred to as musculoskeletal grafts), cornea, skin, heart valves, nerves and veins.

Worldwide, the kidneys are the most commonly transplanted organs, followed by the liver and then the heart. Cornea and musculoskeletal grafts are the most commonly transplanted tissues; these outnumber organ transplants by more than tenfold.

Organ donors may be living, brain dead, or dead via circulatory death. Tissue may be recovered from donors who die of either circulatory death or brain death, up to 24 hours after the heart stops beating.

Unlike organs, most tissues (with the exception of corneas) can be preserved and stored for up to five years, meaning they can be "banked". Transplantation raises a number of bioethical issues, including the definition of death, when and how consent should be given for an organ to be transplanted, and payment for organs for transplantation.

Other ethical issues include transplantation tourism and more broadly the socio-economic context in which organ procurement or transplantation may occur. A particular problem is organ trafficking.

Some organs, such as the brain, cannot be transplanted.

Transplantation medicine is one of the most challenging and complex areas of modern medicine. A key problem in medical management is transplant rejection, in which the body mounts an immune response against the transplanted organ, possibly leading to transplant failure and the need to remove the organ from the recipient immediately.

When possible, transplant rejection can be reduced through serotyping to determine the most appropriate donor-recipient match and through the use of immunosuppressant drugs.
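In kidney transplantation in particular, this typing usually concerns the human leukocyte antigens (HLA), and a donor-recipient pairing is often summarized by counting mismatched antigens at the HLA-A, HLA-B, and HLA-DR loci, giving a score from 0 to 6. The sketch below is a deliberately simplified illustration of that counting only, not a clinical or allocation algorithm: the antigen labels and donor IDs are hypothetical, and real matching also weighs blood group compatibility, antibody and crossmatch results, and many other factors.

```python
from typing import Dict, List, Set

# A person's (simplified, hypothetical) HLA type: locus name -> set of up to two antigens.
HlaType = Dict[str, Set[str]]

# Loci conventionally used for the 0-6 antigen-mismatch summary.
LOCI = ("A", "B", "DR")


def antigen_mismatches(donor: HlaType, recipient: HlaType) -> int:
    """Count donor antigens that the recipient lacks at HLA-A, -B and -DR.

    Each locus contributes 0-2 mismatches, so the total runs from 0
    (a "zero-mismatch" pairing) to 6.
    """
    total = 0
    for locus in LOCI:
        donor_antigens = donor.get(locus, set())
        recipient_antigens = recipient.get(locus, set())
        total += len(donor_antigens - recipient_antigens)
    return total


def rank_donors(recipient: HlaType, donors: Dict[str, HlaType]) -> List[str]:
    """Order hypothetical donor IDs from fewest to most antigen mismatches."""
    return sorted(donors, key=lambda donor_id: antigen_mismatches(donors[donor_id], recipient))


if __name__ == "__main__":
    # HYPOTHETICAL typings for illustration only.
    recipient = {"A": {"A1", "A2"}, "B": {"B8", "B44"}, "DR": {"DR3", "DR4"}}
    donors = {
        "donor-1": {"A": {"A1", "A3"}, "B": {"B8", "B7"}, "DR": {"DR3", "DR15"}},
        "donor-2": {"A": {"A1", "A2"}, "B": {"B8", "B44"}, "DR": {"DR3", "DR4"}},
        "donor-3": {"A": {"A24"}, "B": {"B35", "B51"}, "DR": {"DR1", "DR7"}},
    }
    for donor_id in rank_donors(recipient, donors):
        print(donor_id, antigen_mismatches(donors[donor_id], recipient))
```

Counting only the donor antigens the recipient lacks reflects the idea that rejection is driven by foreign antigens on the graft; immunosuppressant drugs are still needed even for well-matched pairs.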

For further information, click on any of the following:

- 1 Types of transplant

- 2 Organs and tissues transplanted

- 3 Types of donor

- 4 Allocation of organs

- 5 Reasons for donation and ethical issues

- 6 Usage

- 7 History

- 8 Society and culture

- 9 Research

- 10 See also

- 11 References

- 12 Further reading

- 13 External links

Advancements in Neurosurgery

YouTube Video A day in the life of a neurosurgeon

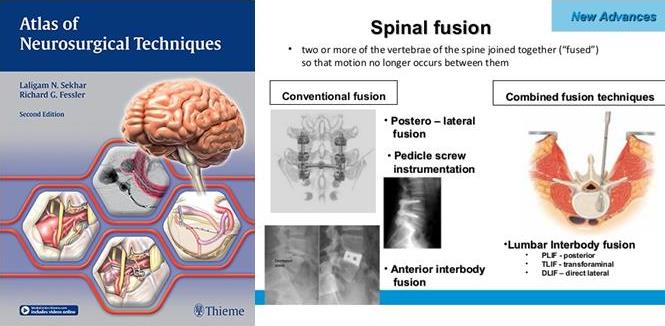

Pictured: LEFT: Atlas of Neurosurgical Techniques; RIGHT: Illustration of Spinal Fusion Surgery

Neurosurgery (or neurological surgery) is the medical specialty concerned with the prevention, diagnosis, treatment, and rehabilitation of disorders which affect any portion of the nervous system including the brain, spinal cord, peripheral nerves, and extra-cranial cerebrovascular system.

Neurosurgery methods

Neuroradiology plays a key role not only in diagnosis but also in the operative phase of neurosurgery.

Neuroradiology methods are used in both diagnosis and treatment in modern neurosurgery.

Some neurosurgery procedures involve the use of intra-operative MRI and functional MRI.

In conventional open surgery the neurosurgeon opens the skull, creating a large opening to access the brain. Techniques involving smaller openings with the aid of microscopes and endoscopes are now being used as well.

Methods that utilize small craniotomies in conjunction with high-clarity microscopic visualization of neural tissue offer excellent results. However, the open methods are still traditionally used in trauma or emergency situations.

Microsurgery is utilized in many aspects of neurological surgery. Microvascular techniques are used in EC-IC bypass surgery and in restoration carotid endarterectomy. Aneurysm clipping is performed under microscopic vision. Minimally invasive spine surgery uses microscopes or endoscopes. Procedures such as microdiscectomy, laminectomy, and artificial disc replacement rely on microsurgery.

Using stereotaxy, neurosurgeons can approach a minute target in the brain through a minimal opening. This is used in functional neurosurgery, where electrodes are implanted or gene therapy is delivered with a high level of accuracy, as in the case of Parkinson's disease or Alzheimer's disease. Using a combination of open and stereotactic surgery, intraventricular hemorrhages can potentially be evacuated successfully.

Conventional surgery using image guidance technologies is also becoming common and is referred to as surgical navigation, computer-assisted surgery, navigated surgery, or stereotactic navigation. Similar to a car or mobile Global Positioning System (GPS), image-guided surgery systems such as Curve Image Guided Surgery and StealthStation use cameras or electromagnetic fields to capture the patient’s anatomy and the surgeon’s precise movements relative to the patient, and relay them to computer monitors in the operating room.

These sophisticated computerized systems are used before and during surgery to help orient the surgeon with three-dimensional images of the patient’s anatomy including the tumor.

Minimally invasive endoscopic surgery is commonly utilized by neurosurgeons when appropriate. Techniques such as endoscopic endonasal surgery are used in pituitary tumors, craniopharyngiomas, chordomas, and the repair of cerebrospinal fluid leaks.

Ventricular endoscopy is used in the treatment of intraventricular bleeds, hydrocephalus, colloid cyst and neurocysticercosis. Endonasal endoscopy is at times carried out with neurosurgeons and ENT surgeons working together as a team.

Repair of craniofacial disorders and disturbance of cerebrospinal fluid circulation is done by neurosurgeons who also occasionally team up with maxillofacial and plastic surgeons. Cranioplasty for craniosynostosis is performed by pediatric neurosurgeons with or without plastic surgeons.

Neurosurgeons are involved in stereotactic radiosurgery along with radiation oncologists in tumor and AVM treatment. Radiosurgical methods such as Gamma knife, Cyberknife and Novalis Radiosurgery are used as well.

Endovascular neurosurgery uses endovascular image-guided procedures to treat aneurysms, AVMs, carotid stenosis, strokes, spinal malformations, and vasospasms. Techniques such as angioplasty, stenting, clot retrieval, embolization, and diagnostic angiography are endovascular procedures.

A common procedure performed in neurosurgery is the placement of Ventriculo-Peritoneal Shunt (VP Shunt). In pediatric practice this is often implemented in cases of congenital hydrocephalus. The most common indication for this procedure in adults is Normal Pressure Hydrocephalus (NPH).

Neurosurgery of the spine covers the cervical, thoracic and lumbar spine. Some indications for spine surgery include spinal cord compression resulting from trauma, arthritis of the spinal discs, or spondylosis. In cervical cord compression, patients may have difficulty with gait, balance issues, and/or numbness and tingling in the hands or feet.

Spondylosis is the condition of spinal disc degeneration and arthritis that may compress the spinal canal. It often results in bone spurs and disc herniation. Power drills and special instruments are often used to correct compression of the spinal canal. Herniated portions of spinal discs are removed with special rongeurs, a procedure known as discectomy.

Generally, once a disc is removed it is replaced by an implant that creates a bony fusion between the vertebral bodies above and below. Alternatively, a mobile disc can be implanted into the disc space to preserve mobility; this is commonly done in cervical disc surgery. In some cases, laser discectomy can be used instead of disc removal to decompress a nerve root; this method is mainly used for lumbar discs. Laminectomy is the removal of the lamina portion of a vertebra to make room for compressed nerve tissue.

Radiology assisted spine surgery uses minimally-invasive procedures. They include the techniques of vertebroplasty and kyphoplasty in which certain types of spinal fractures are managed.

Potentially unstable spines will need spine fusions. At present these procedures include complex instrumentation. Spine fusions could be performed as open surgery or as minimally invasive surgery. Anterior cervical diskectomy and fusion is a common surgery that is performed for disc disease of cervical spine.

However, not every method described above works for all patients, so careful selection of patients for each procedure is important. If there is prior permanent damage to neural tissue, spinal surgery may not relieve the symptoms.

Surgery for chronic pain is a sub branch of functional neurosurgery. Some of the techniques include implantation of deep brain stimulators, spinal cord stimulators, peripheral stimulators and pain pumps.

Surgery of the peripheral nervous system is also possible, and includes the very common procedures of carpal tunnel decompression and peripheral nerve transposition. Numerous other types of nerve entrapment conditions and other problems with the peripheral nervous system are treated as well.

In the United States, a neurosurgeon must generally complete four years of undergraduate education, four years of medical school, and seven years of residency.

Most, but not all, residency programs have some component of basic science or clinical research. Neurosurgeons may pursue additional training in the form of a fellowship, after residency or in some cases, as a senior resident.

These fellowships include pediatric neurosurgery, trauma/neurocritical care, functional and stereotactic surgery, surgical neuro-oncology, radiosurgery, neurovascular surgery, skull-base surgery, peripheral nerve and spine surgery.

In the U.S., neurosurgery is considered a highly competitive specialty; neurosurgeons make up 0.6% of all practicing physicians.

Main divisions of neurosurgery:

General neurosurgery involves most neurosurgical conditions including neuro-trauma and other neuro-emergencies such as intracranial hemorrhage. Most level 1 hospitals have this kind of practice.

Specialized branches have developed to cater to special and difficult conditions. These specialized branches co-exist with general neurosurgery in more sophisticated hospitals. To practice advanced specialization within neurosurgery, additional higher fellowship training of one to two years is expected from the neurosurgeon.

Some of these divisions of neurosurgery are:

- stereotactic neurosurgery, functional neurosurgery, and epilepsy surgery (epilepsy surgery includes partial or total corpus callosotomy, which severs part or all of the corpus callosum to stop or lessen the spread of seizures, and the surgical removal of operable functional, physiological, and/or anatomical regions of the brain that cause seizures, called epileptic foci; it also includes the more radical and very rare partial or total lobectomy, the removal of part or all of one of the brain's lobes, or even hemispherectomy, the removal of one of the cerebral hemispheres; when possible, these two procedures are also very rarely used in oncological neurosurgery or to treat very severe neurological trauma, such as stab or gunshot wounds to the brain)

- oncological neurosurgery also called neurosurgical oncology; includes pediatric oncological neurosurgery; treatment of benign and malignant central and peripheral nervous system cancers and pre-cancerous lesions in adults and children (including, among others, glioblastoma multiforme and other gliomas, brain stem cancer, astrocytoma, pontine glioma, medulloblastoma, spinal cancer, tumors of the meninges and intracranial spaces, secondary metastases to the brain, spine, and nerves, and peripheral nervous system tumors)

- peripheral nerve surgery

- pediatric neurosurgery (for cancer, seizures, bleeding, stroke, cognitive disorders or congenital neurological disorders)

- neuropsychiatric surgery (neurosurgery for the treatment of adult or pediatric mental illnesses)

- geriatric neurosurgery (for the treatment of neurological disorders, dementias, and mental impairments due to age rather than to a stroke, seizure, tumor, concussion, or neurovascular cause, namely Parkinsonism, Alzheimer's disease, multiple sclerosis, and similar disorders)

Neuroanesthesia is a field of anesthesiology that focuses on neurosurgery. In "awake" brain surgery, the patient is sedated at the beginning and end of the procedure but is conscious, without anesthesia, during the middle portion. This approach is used when a tumor does not have clear boundaries and the surgeon needs to know whether the resection is encroaching on critical regions of the brain that govern functions such as speech, cognition, vision, and hearing. It is also used in procedures intended to control epileptic seizures.

Neurosurgery methods

Neuroradiology plays a key role not only in diagnosis but also in the operative phase of neurosurgery.

Neuroradiology methods are used in modern neurosurgery diagnosis and treatment. They include:

- computer-assisted imaging such as computed tomography (CT),

- magnetic resonance imaging (MRI),

- positron emission tomography (PET),

- magnetoencephalography (MEG),

- and stereotactic radiosurgery.

Some neurosurgery procedures involve the use of intra-operative MRI and functional MRI.

In conventional open surgery the neurosurgeon opens the skull, creating a large opening to access the brain. Techniques involving smaller openings with the aid of microscopes and endoscopes are now being used as well.

Methods that utilize small craniotomies in conjunction with high-clarity microscopic visualization of neural tissue offer excellent results. However, the open methods are still traditionally used in trauma or emergency situations.

Microsurgery is used in many aspects of neurological surgery. Microvascular techniques are used in extracranial-intracranial (EC-IC) bypass surgery and in carotid endarterectomy. Aneurysm clipping is performed under microscopic vision. Minimally invasive spine surgery uses microscopes or endoscopes, and procedures such as microdiscectomy, laminectomy, and artificial disc replacement rely on microsurgery.

Using stereotaxy, neurosurgeons can approach a minute target in the brain through a minimal opening. This is used in functional neurosurgery, where electrodes are implanted or gene therapy is delivered with a high level of accuracy, as in the case of Parkinson's disease or Alzheimer's disease. By combining open and stereotactic surgery, intraventricular hemorrhages can potentially be evacuated successfully.

Conventional surgery using image guidance technologies is also becoming common and is referred to as surgical navigation, computer-assisted surgery, navigated surgery, or stereotactic navigation. Much like a car or mobile Global Positioning System (GPS) device, image-guided surgery systems such as Curve Image Guided Surgery and StealthStation use cameras or electromagnetic fields to capture the patient’s anatomy and the surgeon’s precise movements relative to the patient, and relay them to computer monitors in the operating room.

These sophisticated computerized systems are used before and during surgery to help orient the surgeon with three-dimensional images of the patient’s anatomy including the tumor.

Minimally invasive endoscopic surgery is commonly utilized by neurosurgeons when appropriate. Techniques such as endoscopic endonasal surgery are used in pituitary tumors, craniopharyngiomas, chordomas, and the repair of cerebrospinal fluid leaks.

Ventricular endoscopy is used in the treatment of intraventricular bleeds, hydrocephalus, colloid cysts, and neurocysticercosis. Endonasal endoscopy is at times carried out by neurosurgeons and ENT surgeons working together as a team.

Craniofacial disorders and disturbances of cerebrospinal fluid circulation are repaired by neurosurgeons, who occasionally team up with maxillofacial and plastic surgeons. Cranioplasty for craniosynostosis is performed by pediatric neurosurgeons, with or without plastic surgeons.

Neurosurgeons are involved in stereotactic radiosurgery, along with radiation oncologists, in the treatment of tumors and arteriovenous malformations (AVMs). Radiosurgical systems such as Gamma Knife, CyberKnife, and Novalis Radiosurgery are used as well.

Endovascular neurosurgery uses image-guided endovascular procedures to treat aneurysms, AVMs, carotid stenosis, strokes, spinal malformations, and vasospasm. Angioplasty, stenting, clot retrieval, embolization, and diagnostic angiography are all endovascular procedures.

A common procedure in neurosurgery is the placement of a ventriculoperitoneal shunt (VP shunt). In pediatric practice, this is often performed for congenital hydrocephalus. In adults, the most common indication for this procedure is normal pressure hydrocephalus (NPH).

Neurosurgery of the spine covers the cervical, thoracic, and lumbar spine. Indications for spine surgery include spinal cord compression resulting from trauma, arthritis of the spinal discs, or spondylosis. In cervical cord compression, patients may have difficulty walking, balance problems, and/or numbness and tingling in the hands or feet.

Spondylosis is spinal disc degeneration and arthritis that may compress the spinal canal. It often results in bone spurs and disc herniation. Power drills and special instruments are often used to relieve compression of the spinal canal. Herniated disc material is removed with special rongeurs, a procedure known as discectomy.

Generally, once a disc is removed, it is replaced by an implant that creates a bony fusion between the vertebral bodies above and below. Alternatively, a mobile disc can be implanted into the disc space to preserve mobility; this is common in cervical disc surgery. At times, instead of disc removal, laser discectomy can be used to decompress a nerve root; this method is used mainly for lumbar discs. Laminectomy is the removal of the lamina portion of a vertebra to make room for compressed nerve tissue.

Radiology-assisted spine surgery uses minimally invasive procedures, including vertebroplasty and kyphoplasty, which are used to manage certain types of spinal fractures.

Potentially unstable spines require spinal fusion, which today typically involves complex instrumentation. Spinal fusion can be performed as open surgery or as minimally invasive surgery. Anterior cervical discectomy and fusion is a common surgery for disc disease of the cervical spine.

However, none of the methods described above works in every patient, so careful patient selection for each procedure is important. Note that if there is prior permanent neural tissue damage, spinal surgery may not relieve the symptoms.

Surgery for chronic pain is a sub-branch of functional neurosurgery. Techniques include implantation of deep brain stimulators, spinal cord stimulators, peripheral nerve stimulators, and pain pumps.

Surgery of the peripheral nervous system is also possible, and includes the very common procedures of carpal tunnel decompression and peripheral nerve transposition. Numerous other types of nerve entrapment conditions and other problems with the peripheral nervous system are treated as well.

Other conditions treated by neurosurgeons include:

- Meningitis and other central nervous system infections including abscesses

- Spinal disc herniation

- Cervical spinal stenosis and lumbar spinal stenosis

- Hydrocephalus

- Head trauma (brain hemorrhages, skull fractures, etc.)

- Spinal cord trauma

- Traumatic injuries of peripheral nerves

- Tumors of the spine, spinal cord and peripheral nerves

- Intracranial hemorrhages, such as subarachnoid, intraventricular, and intracerebral hemorrhages

- Some forms of drug-resistant epilepsy

- Some movement disorders (advanced Parkinson's disease, chorea), treated with specially developed minimally invasive stereotactic techniques (functional, stereotactic neurosurgery) such as ablative surgery and deep brain stimulation surgery

- Intractable pain of cancer or trauma patients and cranial/peripheral nerve pain

- Some forms of intractable psychiatric disorders

- Vascular malformations (e.g., arteriovenous malformations, venous angiomas, cavernous angiomas, capillary telangiectasias) of the brain and spinal cord

- Moyamoya disease.

Advancements in Heart and Lung Surgery

YouTube Video by the American College of Surgeons (ACS) Education for a Better Recovery Program, 'Your Lung Operation.'

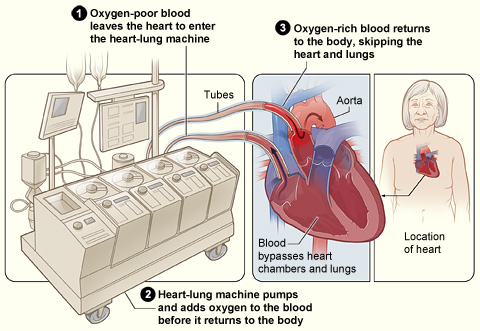

Pictured: How a heart-lung bypass machine works during surgery (courtesy of the National Heart, Lung, and Blood Institute, part of the U.S. National Institutes of Health)

Cardiothoracic surgery is the field of medicine involved in surgical treatment of organs inside the thorax (the chest)—generally treatment of conditions of the heart (heart disease) and lungs (lung disease).

A cardiac surgery residency typically comprises anywhere from 9 to 14 years (or longer) of training to become a fully qualified surgeon.

Cardiac surgery training may be combined with thoracic surgery and/or vascular surgery, in which case it is called cardiovascular (CV), cardiothoracic (CT), or cardiovascular thoracic (CVT) surgery.

Cardiac surgeons may enter a cardiac surgery residency directly from medical school, or first complete a general surgery residency followed by a fellowship.

Cardiac surgeons may further sub-specialize by completing a fellowship in areas such as pediatric cardiac surgery, cardiac transplantation, adult acquired heart disease, heart failure, and many other cardiac problems.

Cardiac surgery training in the United States is combined with thoracic surgery and called cardiothoracic surgery. A cardiothoracic surgeon in the U.S. is a physician (D.O. or M.D.) who first completes a general surgery residency (typically 5–7 years), followed by a cardiothoracic surgery fellowship (typically 2–3 years).

The cardiothoracic surgery fellowship typically spans two or three years, but certification is based on the number of operations performed as the operating surgeon, rather than the time spent in the program, as well as on passing rigorous board certification examinations. Recently, however, several programs have established an integrated 6-year cardiothoracic residency in place of the general surgery residency plus cardiothoracic fellowship.

Applicants match into these integrated six-year (I-6) programs directly out of medical school, and competition for these positions has been intense: in 2010 there were approximately 160 applicants for 10 spots in the U.S. As of May 2013, there were 20 approved programs.
