Copyright © 2015 Bert N. Langford (Images may be subject to copyright. Please send feedback)

Welcome to Our Generation USA!

Culture

Culture is the way of life of a specific group of people, including their behavior, race, ethnicity, beliefs, values, and symbols, which they generally accept without thinking about them and which can be passed along by communication and imitation from one generation to the next.

Culture

YouTube Video about Vietnamese Culture

Pictured: LEFT: Sri Mariamman Temple, Singapore (by AngMoKio, own work, CC BY-SA 3.0); RIGHT: Celebrations, rituals, and patterns of consumption are important aspects of folk culture.

Culture is, in the words of E.B. Tylor, "that complex whole which includes knowledge, belief, art, morals, law, custom and any other capabilities and habits acquired by man as a member of society."

The Cambridge English Dictionary states that culture is "the way of life, especially the general customs and beliefs, of a particular group of people at a particular time." Terror Management Theory posits that culture is a series of activities and worldviews that give humans the illusion of being individuals of value in a world of meaning, raising themselves above the merely physical aspects of existence in order to deny the animal insignificance and death that Homo sapiens became aware of when it acquired a larger brain.

As a defining aspect of what it means to be human, culture is a central concept in anthropology, encompassing the range of phenomena that are transmitted through social learning in human societies.

In a general sense, the word refers to the evolved ability to categorize and represent experiences with symbols and to act imaginatively and creatively. This ability arose with the evolution of behavioral modernity in humans around 50,000 years ago. It is often thought to be unique to humans, although some other species have demonstrated similar, though much less complex, abilities for social learning.

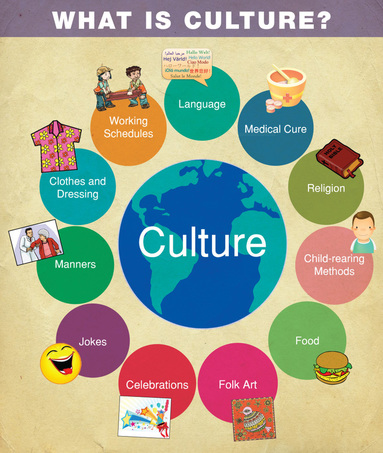

It is also used to denote the complex networks of practices, accumulated knowledge, and ideas that are transmitted through social interaction and exist in specific human groups; in the plural, these groups are themselves called "cultures." Some aspects of human behavior are said to be cultural universals, found in all human societies: language; social practices such as kinship, gender, and marriage; expressive forms such as art, music, dance, ritual, and religion; and technologies such as cooking, shelter, and clothing.

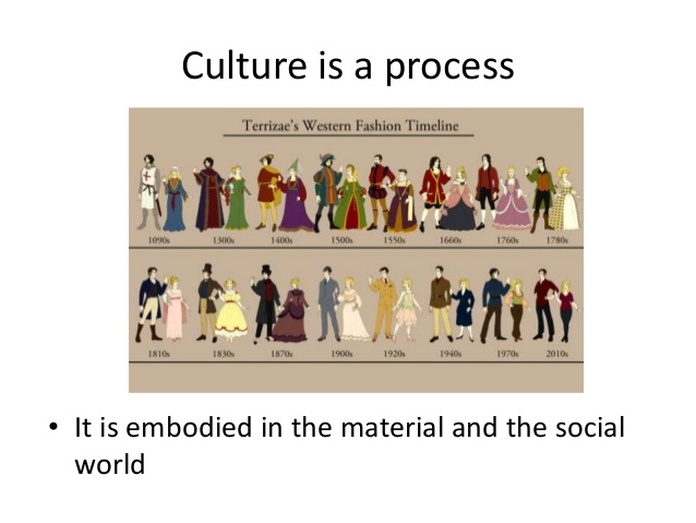

The concept of material culture covers the physical expressions of culture, such as technology, architecture, and art, whereas the immaterial aspects of culture, such as principles of social organization (including practices of political organization and social institutions), mythology, philosophy, literature (both written and oral), and science, make up the intangible cultural heritage of a society.

In the humanities, one sense of culture, as an attribute of the individual, has been the degree to which a person has cultivated a particular level of sophistication in the arts, sciences, education, or manners. The level of cultural sophistication has also sometimes been used to distinguish civilizations from less complex societies.

Such hierarchical perspectives on culture are also found in class-based distinctions between the high culture of the social elite and the low culture, popular culture, or folk culture of the lower classes, distinguished by stratified access to cultural capital.

In common parlance, culture is often used to refer specifically to the symbolic markers that ethnic groups use to distinguish themselves visibly from each other, such as body modification, clothing, or jewelry.

Mass culture refers to the mass-produced and mass-mediated forms of consumer culture that emerged in the 20th century. Some schools of philosophy, such as Marxism and critical theory, have argued that culture is often used politically as a tool of the elites to manipulate the lower classes and create a false consciousness; such perspectives are common in the discipline of cultural studies.

In the wider social sciences, the theoretical perspective of cultural materialism holds that human symbolic culture arises from the material conditions of human life, as humans create the conditions for physical survival, and that the basis of culture is found in evolved biological dispositions.

When used as a count noun, "a culture" is the set of customs, traditions, and values of a society or community, such as an ethnic group or nation. In this sense, multiculturalism is a concept that values peaceful coexistence and mutual respect between different cultures inhabiting the same territory.

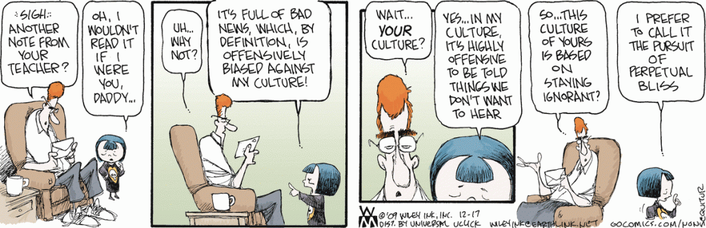

Sometimes "culture" is also used to describe specific practices within a subgroup of a society, a subculture (e.g., "bro culture"), or a counterculture. Within cultural anthropology, the ideology and analytical stance of cultural relativism holds that cultures cannot easily be objectively ranked or evaluated because any evaluation is necessarily situated within the value system of a given culture.

Click on any of the following blue hyperlinks for amplification:

- Etymology

- Change

- Early modern discourses

- German Romanticism

- English Romanticism

- Anthropology

- Sociology

- Cultural studies

- Cultural dynamics

- See also:

Cities with Significant Ethnic Culture

YouTube Video of Chinatown, San Francisco, CA

Pictured: LEFT: Chinatown in San Francisco, CA; RIGHT: Hispanic community in Chicago, IL

The above link provides a list of United States cities with large ethnic minority populations. (There are many cities in the US with no ethnic majority.)

Every large society contains ethnic and linguistic minorities. Their way of life, language, culture, and origin can differ from those of the majority. Minority status is determined not only by numerical relations but also by questions of political power.

In some places, subordinate ethnic groups may constitute a numerical majority, such as Black people in South Africa under apartheid. In addition to "traditional" (long-resident) minorities, there may be migrant, indigenous, or landless nomadic communities.

There is no legal definition of national (ethnic) minorities in international law. Only in Europe is such a definition (arguably) provided, by the European Charter for Regional or Minority Languages and the Framework Convention for the Protection of National Minorities.

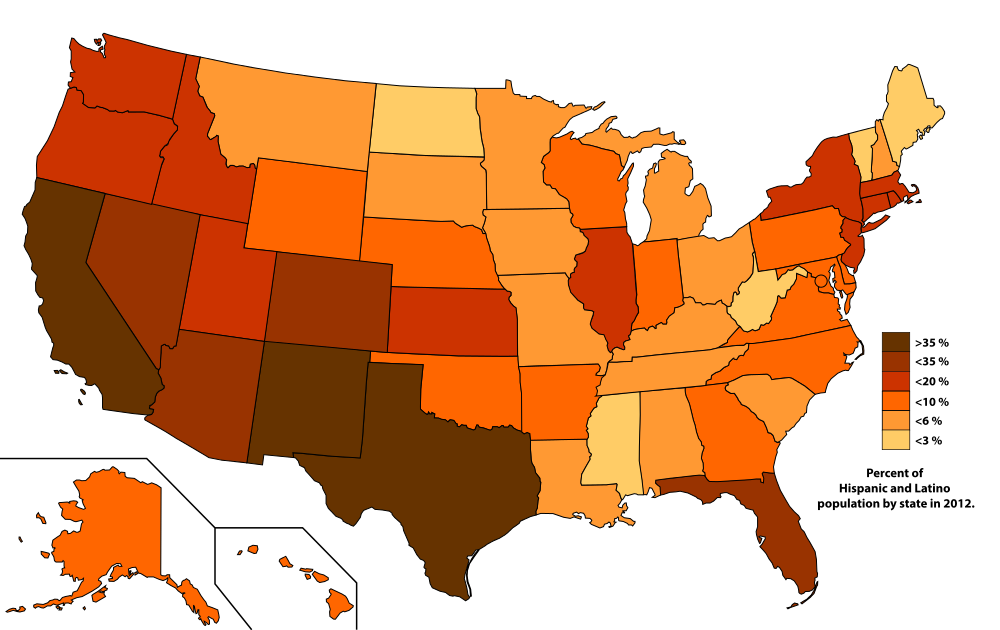

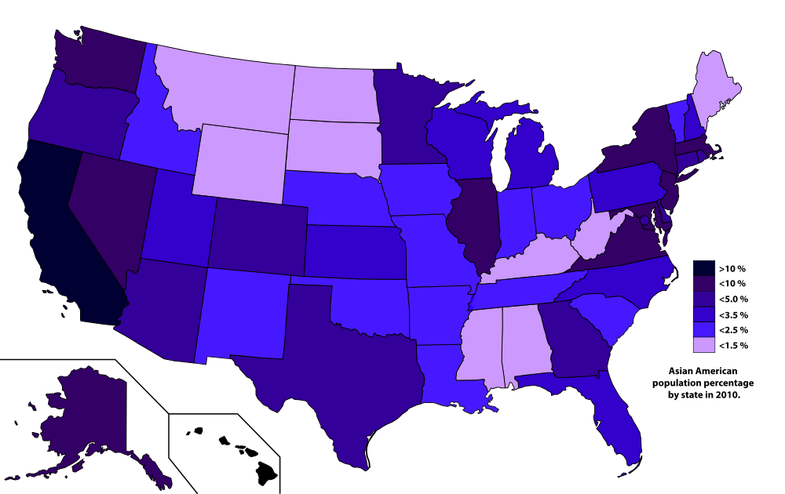

In the United States, for example, white Americans constitute the majority (72.4%) and all other racial groups (African Americans, Asian Americans, Native Americans, and Native Hawaiians) are classified as "minorities".

If the non-Hispanic white population falls below 50%, the group will be only a plurality, not a majority. In theory (though not in law), a national minority can be defined as a group of people within a given nation-state:

- which is numerically smaller than the rest of population of the state or a part of the state

- which is not in a dominant position

- which has culture, language, religion, race etc. distinct from that of the majority of the population

- whose members have a will to preserve their specificity

- whose members are citizens of the state where they have the status of a minority

- which has a long-term presence on the territory where it lives.

International criminal law can protect the rights of racial or ethnic minorities in a number of ways. The right to self-determination is a key issue. The formal level of protection of national (ethnic) minorities is highest in European countries.

Civil Rights Act of 1960

YouTube Video about the History of the Civil Rights Movement

Pictured: LEFT: Civil Rights March, 1960; RIGHT: President Dwight D. Eisenhower signs the Civil Rights Act of 1960 into law.

The Civil Rights Act of 1960 (Pub.L. 86–449, 74 Stat. 89, enacted May 6, 1960) was a United States federal law that established federal inspection of local voter registration polls and introduced penalties for anyone who obstructed someone's attempt to register to vote.

It was designed to deal with discriminatory laws and practices in the segregated South, by which Black Americans and Mexican Texans had been effectively disfranchised since the late 19th and early 20th centuries. It extended the life of the Civil Rights Commission, previously limited to two years, to oversee registration and voting practices. The act was signed into law by President Dwight D. Eisenhower and served to eliminate certain loopholes left by the Civil Rights Act of 1957.

Toward the end of his presidency, President Eisenhower supported civil rights legislation. In his 1959 message to Congress, he proposed seven recommendations for the protection of civil rights:

- Strengthen the laws that would root out threats to obstruct court orders in school desegregation cases

- Give the Federal Bureau of Investigation more investigative authority in crimes involving the destruction of schools or churches

- Grant the Attorney General power to investigate federal election records

- Provide a temporary program of aid to agencies to assist with the changes necessary for school desegregation decisions

- Authorize the provision of education for children of members of the armed forces

- Consider establishing a statutory Commission on Equal Job Opportunity Under Government Contracts (later mandated in the Civil Rights Act of 1964 to create the Equal Employment Opportunity Commission)

- Extend the Civil Rights Commission for an additional two years

Subsequent history:

After the more sweeping acts of 1964 and 1965, the Civil Rights Acts of 1957 and 1960 were deemed ineffective for firmly establishing civil rights. The later legislation provided firmer ground for enforcing and protecting a variety of civil rights, whereas the acts of 1957 and 1960 were largely limited to voting rights. The Civil Rights Act of 1960 dealt with race and color but did not cover discrimination based on national origin, although Eisenhower had called for such coverage in his message to Congress.

The Civil Rights Act of 1964 and the Voting Rights Act of 1965 worked to fulfill the seven goals suggested by President Eisenhower in 1959. Together, the two laws satisfied proponents of the civil rights movement seeking to end racial discrimination and protect legal equality in the United States.

Civil Rights Act of 1964

YouTube Video: President Lyndon B. Johnson Signs Civil Rights Act, Gives Pen to Dr. Martin Luther King Jr.

Pictured: President Lyndon B. Johnson signing Civil Rights Act of 1964 into law

The Civil Rights Act of 1964 (enacted July 2, 1964) is a landmark piece of civil rights legislation in the United States that outlawed discrimination based on race, color, religion, sex, or national origin.

It ended unequal application of voter registration requirements and racial segregation in schools, in the workplace, and by facilities that served the general public (known as "public accommodations").

Powers given to enforce the act were initially weak, but were supplemented during later years. Congress asserted its authority to legislate under several different parts of the United States Constitution, principally its power to regulate interstate commerce under Article One (section 8), its duty to guarantee all citizens equal protection of the laws under the Fourteenth Amendment and its duty to protect voting rights under the Fifteenth Amendment.

The Act was signed into law by President Lyndon B. Johnson on July 2, 1964, at the White House.

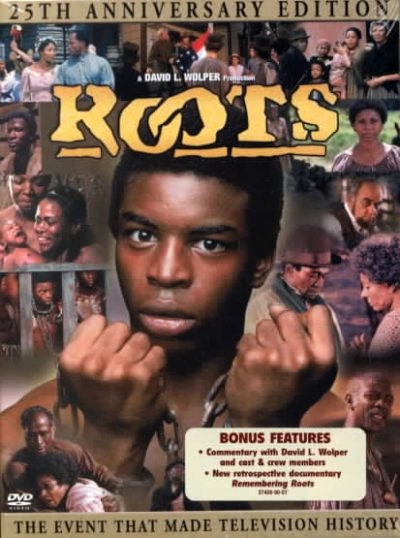

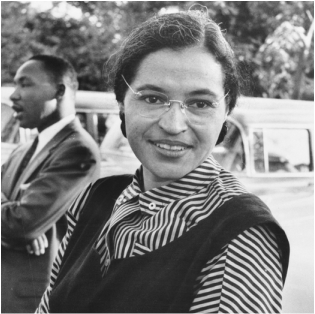

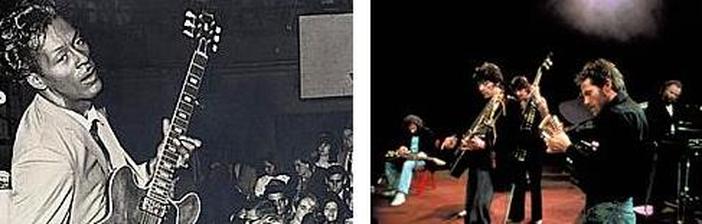

Contributors to African-American Culture

YouTube Video: Evolution of African American Music

Pictured: Vogue Magazine Covers of LEFT: Beyoncé and RIGHT: Rihanna

African-American culture, also known as Black American culture, refers to the cultural contributions of African Americans to the culture of the United States, either as part of or distinct from American culture. The distinct identity of African-American culture is rooted in the historical experience of the African-American people, including the Middle Passage. The culture is both distinct and enormously influential on American culture as a whole.

African-American culture is rooted in West and Central Africa. Within the culture of the United States, it is, in the anthropological sense, conscious of its origins as largely a blend of West and Central African cultures. Although slavery greatly restricted the ability of African Americans to practice their original cultural traditions, many practices, values, and beliefs survived and over time have been modified by, or blended with, European cultures and other cultures such as those of Native Americans.

African-American identity was established during the slavery period, producing a dynamic culture that has had and continues to have a profound impact on American culture as a whole, as well as that of the broader world.

Elaborate rituals and ceremonies were a significant part of African Americans' ancestral culture. Many West African societies traditionally believed that spirits dwelled in the surrounding natural world, and so they treated their environment with mindful care. They also generally believed that a spiritual life source existed after death, and that ancestors in this spiritual realm could mediate between the supreme creator and the living. Honor and prayer were offered to these "ancient ones," the spirits of those who had passed. West Africans also believed in spirit possession.

At the beginning of the eighteenth century, Christianity began to spread across North Africa; this religious shift began displacing traditional African spiritual practices. Enslaved Africans brought this complex religious dynamic with them to America. This fusion of traditional African beliefs with Christianity provided common ground for those practicing religion in Africa and America.

After emancipation, unique African-American traditions continued to flourish, as distinctive traditions or radical innovations in music, art, literature, religion, cuisine, and other fields. 20th-century sociologists, such as Gunnar Myrdal, believed that African Americans had lost most cultural ties with Africa.

However, anthropological field research by Melville Herskovits and others demonstrated a continuum of African traditions among Africans of the diaspora. The greatest influence of African cultural practices on European culture is found below the Mason-Dixon line, in the American South.

For many years, African-American culture developed separately from European-American culture, both because of slavery and the persistence of racial discrimination in America and because the descendants of enslaved African Americans wanted to create and maintain their own traditions. Today, African-American culture has become a significant part of American culture while remaining a distinct cultural body.

Pop Culture in the United States

YouTube Video from "The Many Loves of Dobie Gillis" (3/9) An Honest and Decent Man (1959)

Pictured: LEFT: Young hippies near the Woodstock festival in August 1969; RIGHT: a picture of Jack Kerouac, who is considered a literary iconoclast and, alongside William S. Burroughs and Allen Ginsberg, is a pioneer of the Beat Generation.

Popular culture or pop culture is the entirety of ideas, perspectives, attitudes, images, and other phenomena that are within the mainstream of a given culture, especially Western culture of the early-to-mid 20th century and the emerging global mainstream of the late 20th and early 21st centuries.

Heavily influenced by mass media, this collection of ideas permeates the everyday lives of a society. The most common pop culture categories are entertainment (movies, music, TV), sports, news (as in people and places in the news), politics, fashion and clothes, technology, and slang.

Popular culture is often viewed as being trivial and "dumbed down" in order to find consensual acceptance throughout the mainstream. As a result, it comes under heavy criticism from various non-mainstream sources (most notably religious groups and countercultural groups) which deem it superficial, consumerist, sensationalist, and/or corrupt.

From the end of World War II, following major cultural and social changes brought by mass media innovations, the meaning of popular culture began to overlap with those of mass culture, media culture, image culture, consumer culture, and culture for mass consumption.

The United States pioneered these social and cultural changes ahead of other Western countries.

The abbreviated form "pop" for "popular," as in pop music, dates from the late 1950s. Although the terms "pop" and "popular" are in some cases used interchangeably, and their meanings partially overlap, "pop" is narrower: it denotes something with qualities of mass appeal, while "popular" refers to whatever has gained popularity, regardless of its style.

According to John Storey, there are six definitions of popular culture. The first, quantitative definition (culture that is widely favored or well liked by many people) has the problem that much "high culture" (e.g., television dramatizations of Jane Austen) is also "popular."

A second definition treats pop culture as the culture that is "left over" once we have decided what high culture is. However, many works straddle the boundaries, e.g., Shakespeare and Charles Dickens.

A third definition equates pop culture with "mass culture": a commercial culture, mass-produced for mass consumption by mass media. From a Western European perspective, this may be compared to American culture. Alternatively, "pop culture" can be defined as an "authentic" culture of the people, but this is problematic because there are many ways of defining "the people."

Storey argued that there is a political dimension to popular culture; neo-Gramscian hegemony theory "... sees popular culture as a site of struggle between the 'resistance' of subordinate groups in society and the forces of 'incorporation' operating in the interests of dominant groups in society." A postmodernist approach to popular culture would "no longer recognize the distinction between high and popular culture."

Storey claims that popular culture emerges from the urbanization of the Industrial Revolution. Studies of Shakespeare (by Weimann, Barber or Bristol, for example) locate much of the characteristic vitality of his drama in its participation in Renaissance popular culture, while contemporary practitioners like Dario Fo and John McGrath use popular culture in its Gramscian sense that includes ancient folk traditions (the commedia dell'arte for example).

Popular culture changes constantly and occurs uniquely in place and time. It forms currents and eddies, and represents a complex of mutually interdependent perspectives and values that influence society and its institutions in various ways.

For example, certain currents of pop culture may originate from (or diverge into) a subculture, representing perspectives with which mainstream popular culture has only limited familiarity.

Items of popular culture most typically appeal to a broad spectrum of the public. Important contemporary contributions to understanding what popular culture means have been made by the German researcher Ronald Daus, who studies the impact of extra-European cultures in North America, Asia, and especially Latin America.


Heavily influenced by mass media, this collection of ideas permeates the everyday lives of the society. The most common pop culture categories are: entertainment (movies, music, TV), sports, news (as in people/places in news), politics, fashion/clothes, technology, and slang.

Popular culture is often viewed as being trivial and "dumbed down" in order to find consensual acceptance throughout the mainstream. As a result, it comes under heavy criticism from various non-mainstream sources (most notably religious groups and countercultural groups) which deem it superficial, consumerist, sensationalist, and/or corrupt.

From the end of World War II, following major cultural and social changes brought by mass media innovations, the meaning of popular culture began to overlap with those of mass culture, media culture, image culture, consumer culture, and culture for mass consumption.

Social and cultural changes in the United States were a pioneer in this with respect to other western countries.

The abbreviated form "pop" for popular. as in pop music, dates from the late 1950s. Although terms "pop" and "popular" are in some cases used interchangeably, and their meaning partially overlap, the term "pop" is narrower. Pop is specific of something containing qualities of mass appeal, while "popular" refers to what has gained popularity, regardless of its style.

According to John Storey, there are six definitions of popular culture. The first, a quantitative definition, has the problem that much "high culture" (e.g., television dramatizations of Jane Austen) is also "popular."

A second definition treats pop culture as the culture that is "left over" once we have decided what high culture is. However, many works straddle the boundary, such as those of Shakespeare and Charles Dickens.

A third definition equates pop culture with "mass culture" and ideas. This is seen as a commercial culture, mass-produced for mass consumption by mass media; from a Western European perspective, it may be compared to American culture. A fourth definition treats "pop culture" as an "authentic" culture of the people, but this can be problematic because there are many ways of defining "the people."

Storey argued that there is a political dimension to popular culture; neo-Gramscian hegemony theory "... sees popular culture as a site of struggle between the 'resistance' of subordinate groups in society and the forces of 'incorporation' operating in the interests of dominant groups in society." A postmodernist approach to popular culture would "no longer recognize the distinction between high and popular culture."

Storey claims that popular culture emerges from the urbanization of the Industrial Revolution. Studies of Shakespeare (by Weimann, Barber or Bristol, for example) locate much of the characteristic vitality of his drama in its participation in Renaissance popular culture, while contemporary practitioners like Dario Fo and John McGrath use popular culture in its Gramscian sense that includes ancient folk traditions (the commedia dell'arte for example).

Popular culture changes constantly and occurs uniquely in place and time. It forms currents and eddies, and represents a complex of mutually interdependent perspectives and values that influence society and its institutions in various ways.

Cultural History of the United States

YouTube Video: the Rolling Stones performing "Miss You" (Live in St. Louis -- 1997)

Pictured: LEFT: Thomas Jefferson designed his Georgian style Monticello estate in Virginia, the only World Heritage Site home in the United States; RIGHT: Apple pie is one of a number of American cultural icons.

The cultural history of the United States covers the nation's cultural development since its founding in the late 18th century.

Various immigrant groups have shaped the nation's culture. While different ethnic groups may display their own insular cultural aspects, over time a broad American culture has developed that encompasses the entire country. Developments in the culture of the United States in modern history have often been followed by similar changes in the rest of the world (American cultural imperialism).

This includes the knowledge, customs, and arts of Americans, and events in the social, cultural, and political history of the United States.

See Also:

- Architecture of the United States

- Christianity in the United States

- Counterculture of the 1960s

- Cuisine of the United States

- History of education in the United States

- History of women in the United States

- United States religious history

- Entertainment:

- Fine arts:

Cultural Events: 1969 Woodstock Festival

- YouTube Video of the Woodstock Festival: Woodstock 1969: The Music

- YouTube Video: Janis Joplin singing Ball & Chain Live At Woodstock 1969

- YouTube Video of Jimi Hendrix performing Purple Haze Live at Woodstock 1969

The Woodstock Music & Art Fair (informally, the Woodstock Festival or simply Woodstock) was a music festival attracting an audience of 400,000 people. Scheduled for three days, from August 15 to 17, 1969, on a dairy farm in New York state, it ran over into a fourth day, ending on August 18, 1969.

Billed as "An Aquarian Exposition: 3 Days of Peace & Music", it was held at Max Yasgur's 600-acre (240 ha; 0.94 sq mi) dairy farm in the Catskills near the hamlet of White Lake in the town of Bethel. Bethel, in Sullivan County, is 43 miles (69 km) southwest of the town of Woodstock, New York, in adjoining Ulster County.

During the sometimes rainy weekend, 32 acts performed outdoors before an audience of 400,000 people. It is widely regarded as a pivotal moment in popular music history, as well as the definitive nexus for the larger counterculture generation.

Rolling Stone listed it as one of the 50 Moments That Changed the History of Rock and Roll.

The event was captured in the Academy Award-winning 1970 documentary film Woodstock, an accompanying soundtrack album, and Joni Mitchell's song "Woodstock," which commemorated the event and became a major hit for both Crosby, Stills, Nash & Young and Matthews Southern Comfort.

Click Here to Read More.

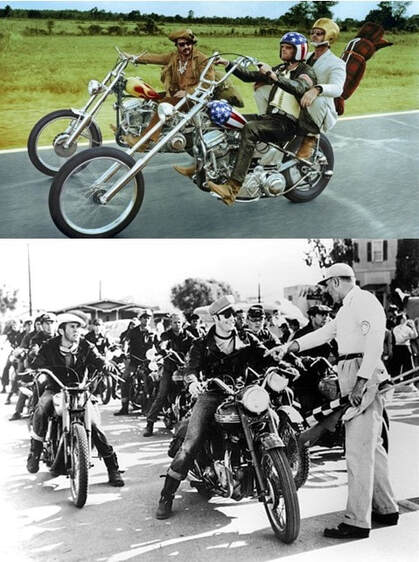

Counterculture of the 1960s

YouTube Video: John Lennon - "Give Peace A Chance" (1969)

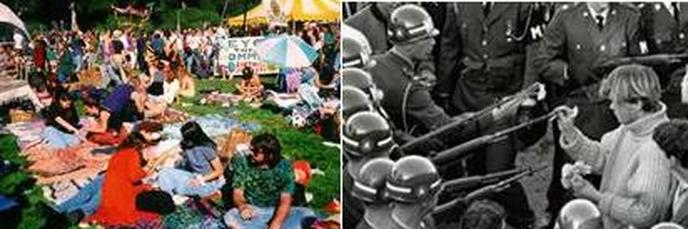

Pictured: LEFT: “The Summer of Love” 1967 Festival in San Francisco; RIGHT: Scene from the 1967 March on the Pentagon anti-Vietnam War Protest.

The counterculture of the 1960s refers to an anti-establishment cultural phenomenon that developed first in the United States and the United Kingdom, and then spread throughout much of the Western world between the early 1960s and the mid-1970s, with London, New York City, and San Francisco being hotbeds of early counter-cultural activity.

The aggregate movement gained momentum as the American Civil Rights Movement continued to grow, and became revolutionary with the expansion of the US government's extensive military intervention in Vietnam.

As the 1960s progressed, widespread social tensions also developed concerning other issues, and tended to flow along generational lines regarding human sexuality, women's rights, traditional modes of authority, experimentation with psychoactive drugs, and differing interpretations of the American Dream.

As the era unfolded, new cultural forms emerged, along with a dynamic subculture that celebrated experimentation, modern incarnations of Bohemianism, and the rise of the hippie and other alternative lifestyles.

This embrace of creativity is particularly evident in the work of British Invasion bands such as the Beatles, and of filmmakers whose work became far less restricted by censorship. In addition to the trendsetting Beatles, many other artists, authors, and thinkers, within and across many disciplines, helped define the counterculture movement.

Several factors distinguished the counterculture of the 1960s from the anti-authoritarian movements of previous eras. The post-World War II "baby boom" generated an unprecedented number of potentially disaffected young people as prospective participants in a rethinking of the direction of American and other democratic societies.

Post-war affluence allowed many of the counterculture generation to move beyond a focus on the provision of the material necessities of life that had preoccupied their Depression-era parents.

The era was also notable in that many of the behaviors and "causes" within the larger movement were quickly assimilated into mainstream society, particularly in the US, even though counterculture participants were a clear minority within their respective national populations.

The counterculture era began in earnest with the assassination of John F. Kennedy in November 1963. It was absorbed into popular culture with the end of U.S. combat involvement in Southeast Asia and of the draft in 1973, and ultimately with the resignation of President Richard M. Nixon in August 1974.

Many key movements were born of, or advanced within, the counterculture of the 1960s. Each movement is relevant to the larger era, and the most important ones also stand on their own, independent of the larger counterculture.

In the broadest sense, 1960s counterculture grew from a confluence of people, ideas, events, issues, circumstances, and technological developments which served as intellectual and social catalysts for exceptionally rapid change during the era.

Click Here to Read More.

Culture of the United States

YouTube Video of the Star Spangled Banner by Lady Gaga - Live at Super Bowl 50

Pictured: United States National Symbols include LEFT: The Official Seal; RIGHT: the Official Bird: The Bald Eagle

The culture of the United States of America is primarily Western, but is influenced by African, Native American, Asian, Polynesian, and Latin American cultures.

A strand of what may be described as American culture started its formation over 10,000 years ago with the migration of Paleo-Indians from Asia, Oceania, and Europe, into the region that is today the continental United States.

The United States of America has its own unique social and cultural characteristics such as dialect, music, arts, social habits, cuisine, and folklore.

The United States of America is an ethnically and racially diverse country as a result of large-scale migration from many ethnically and racially different countries throughout its history. Differing birth and death rates among natives, settlers, and immigrants are also a factor.

Its chief early European influences came from English settlers of colonial America during British rule. Colonial ties with Britain, which spread the English language, the British legal system, and other cultural inheritances, gave British culture a formative influence. Other important influences came from other parts of Europe, especially Germany.

Original elements also play a strong role, such as Jeffersonian democracy. Thomas Jefferson's Notes on the State of Virginia was perhaps the first influential domestic cultural critique by an American, written in reaction to the prevailing European consensus that America's domestic originality was degenerate.

American culture includes both conservative and liberal elements, scientific and religious competitiveness, political structures, risk-taking and free expression, and materialist and moral elements. Despite certain consistent ideological principles (e.g., individualism, egalitarianism, and faith in freedom and democracy), American culture has a variety of expressions due to its geographical scale and demographic diversity. The flexibility of U.S. culture and its highly symbolic nature lead some researchers to categorize American culture as a mythic identity; others see it as American exceptionalism.

It also includes elements that evolved from Indigenous Americans and other ethnic cultures, most prominently the culture of African Americans, cultures from Latin America, and Asian American cultures. Many American cultural elements, especially from popular culture, have spread across the globe through modern mass media.

The United States has traditionally been thought of as a melting pot. Since the 1960s, however, the country has trended toward cultural diversity, pluralism, and the image of a salad bowl instead.

Given the breadth of American culture, there are many integrated but distinct social subcultures within the United States. An individual's cultural affiliations commonly depend on social class, political orientation, and a multitude of demographic characteristics such as religious background, occupation, and ethnic group membership.

Click here for further amplification.

Cultural Diversity

YouTube Video: Cultural Diversity Examples: Avoid Stereotypes while communicating [sic]

Pictured: Images from many cultures

Cultural diversity is the quality of diverse or different cultures, as opposed to monoculture, as in the global monoculture, or a homogenization of cultures, akin to cultural decay. The phrase cultural diversity can also refer to having different cultures respect each other's differences. The phrase "cultural diversity" is also sometimes used to mean the variety of human societies or cultures in a specific region, or in the world as a whole. The culturally destructive action of globalization is often said to have a negative effect on the world's cultural diversity.

Overview:

The many separate societies that emerged around the globe differed markedly from each other, and many of these differences persist to this day. As well as the more obvious cultural differences that exist between people, such as language, dress and traditions, there are also significant variations in the way societies organize themselves, in their shared conception of morality, and in the ways they interact with their environment. Cultural diversity can be seen as analogous to biodiversity.

Opposition and support:

By analogy with biodiversity, which is thought to be essential to the long-term survival of life on earth, it can be argued that cultural diversity may be vital for the long-term survival of humanity; and that the conservation of indigenous cultures may be as important to humankind as the conservation of species and ecosystems is to life in general.

The General Conference of UNESCO took this position in 2001, asserting in Article 1 of the Universal Declaration on Cultural Diversity that "...cultural diversity is as necessary for humankind as biodiversity is for nature."

This position is rejected by some people, on several grounds. Firstly, like most evolutionary accounts of human nature, the importance of cultural diversity for survival may be an un-testable hypothesis, which can neither be proved nor disproved. Secondly, it can be argued that it is unethical deliberately to conserve "less developed" societies, because this will deny people within those societies the benefits of technological and medical advances enjoyed by those in the "developed" world.

In the same manner that the promotion of poverty in underdeveloped nations as "cultural diversity" is unethical, it is similarly unethical to promote all religious practices simply because they are seen to contribute to cultural diversity. Particular religious practices are recognized by the WHO and UN as unethical, including female genital mutilation (FGM), polygamy, child brides, and human sacrifice.

With the onset of globalization, traditional nation-states have been placed under enormous pressure. Today, with the development of technology, information and capital are transcending geographical boundaries and reshaping the relationships between the marketplace, states, and citizens. In particular, the growth of the mass media industry has had a large impact on individuals and societies across the globe.

Although beneficial in some ways, this increased accessibility has the capacity to negatively affect a society's individuality. With information being so easily distributed throughout the world, cultural meanings, values and tastes run the risk of becoming homogenized. As a result, the strength of identity of individuals and societies may begin to weaken.

Some individuals, particularly those with strong religious beliefs, maintain that it is in the best interests of individuals and of humanity as a whole that all people adhere to a specific model for society or specific aspects of such a model.

Today, communication between countries is increasingly frequent, and growing numbers of students choose to study overseas to experience cultural diversity. Their goal is to broaden their horizons and develop themselves by learning abroad.

For example, in their paper "Academic Freedom in the People's Republic of China and the United States of America," Chen Fengling, Du Yanjun, and Yu Ma point out that in Chinese education, "traditionally, teaching has consisted of spoon feeding, and learning has been largely by rote. China's traditional system of education has sought to make students accept fixed and ossified content." They add that "In the classroom, Chinese professors are the laws and authorities; Students in China show great respect to their teachers in general."

In American education, by contrast, "American students treat college professors as equals." The authors also note that "American students' are encouraged to debate topics. The free open discussion on various topics is due to the academic freedom which most American colleges and universities enjoy." This discussion gives an overall idea of the differences between education in China and the United States. However, we cannot simply judge which one is better, because each culture has its own advantages and features.

These differences make up cultural diversity and make our world more colorful. For students who go abroad for education, combining positive elements from two different cultures in their self-development can be a competitive advantage throughout their careers. Especially amid economic globalization, people with different cultural perspectives stand in a more competitive position in today's world.

Quantification:

Cultural diversity is tricky to quantify, but a good indication is thought to be the number of languages spoken in a region or in the world as a whole. By this measure, we may be going through a period of precipitous decline in the world's cultural diversity. Research carried out in the 1990s by David Crystal (Honorary Professor of Linguistics at the University of Wales, Bangor) suggested that at that time, on average, one language was falling into disuse every two weeks. He calculated that if that rate of language death were to continue, then by the year 2100 more than 90% of the languages currently spoken in the world would have gone extinct.

Overpopulation, immigration and imperialism (of both the militaristic and cultural kind) are reasons that have been suggested to explain any such decline. However, it could also be argued that with the advent of globalism, a decline in cultural diversity is inevitable because information sharing often promotes homogeneity.

Cultural heritage:

The Universal Declaration on Cultural Diversity adopted by UNESCO in 2001 is a legal instrument that recognizes cultural diversity as the "common heritage of humanity" and considers its safeguarding to be a concrete and ethical imperative inseparable from respect for human dignity.

Beyond the Declaration of Principles adopted in 2003 at the Geneva Phase of the World Summit on the Information Society (WSIS), the UNESCO Convention on the Protection and Promotion of the Diversity of Cultural Expressions, adopted in October 2005, is also regarded as a legally binding instrument that recognizes,

It was adopted in response to "growing pressure exerted on countries to waive their right to enforce cultural policies and to put all aspects of the cultural sector on the table when negotiating international trade agreements." To date, 116 member states as well as the European Union have ratified the Convention; the US, Australia, and Israel have not.

It is instead a clear recognition of the specificity of cultural goods and services, as well as of state sovereignty and public services in this area. With respect to world trade, this soft-law instrument (whose strength lies in its not being binding) became a crucial reference in defining the European policy choice. In 2009, the European Court of Justice favoured a broad view of culture, beyond the cultural values it had previously recognized through the protection of film or the objective of promoting linguistic diversity.

In addition, under this Convention, the EU and China have committed to fostering more balanced cultural exchanges and to strengthening international cooperation and solidarity, with business and trade opportunities in cultural and creative industries. The main factor motivating Beijing's willingness to partner at the business level may well be access to creative talent and skills from foreign markets.

There is also the Convention for the Safeguarding of the Intangible Cultural Heritage, ratified on June 20, 2007 by 78 states, which declares that the intangible cultural heritage, transmitted from generation to generation, is constantly recreated by communities and groups in response to their environment, their interaction with nature, and their history, and gives them a sense of identity and continuity, thus promoting respect for cultural diversity and human creativity.

Cultural diversity was also promoted by the Montreal Declaration of 2007, and by the European Union. The idea of a global multicultural heritage covers several ideas, which are not exclusive (see multiculturalism). In addition to language, diversity can also include religious or traditional practice.

On a local scale, Agenda 21 for culture, the first document of world scope that establishes the foundations for a commitment by cities and local governments to cultural development, supports local authorities committed to cultural diversity.

Ocean Model of One Human Civilization:

Philosopher Nayef Al-Rodhan argues that previous concepts of civilizations, such as Samuel P. Huntington's arguments supporting a coming "clash of civilizations," are misconstrued. Human civilization should not be thought of as consisting of numerous separate and competing civilizations, but rather it should be thought of collectively as only one human civilization.

Within this civilization are many geo-cultural domains that comprise sub-cultures. This concept presents human history as one fluid story and encourages a philosophy of history that encompasses the entire span of human time as opposed to thinking about civilization in terms of single time periods.

Al-Rodhan envisions human civilization as an ocean into which the different geo-cultural domains flow like rivers. According to him, at points where geo-cultural domains first enter the ocean of human civilization, there is likely to be a concentration or dominance of that culture.

However, over time, all the rivers of geo-cultural domains become one. Therefore, an equal mix of all cultures will exist at the middle of the ocean, although the mix might be weighted towards the dominant culture of the day. Al-Rodhan maintains that there is fluidity at the ocean's center and that cultures will have the opportunity to borrow from one another, especially when one culture's domain or "river" is in geographical proximity to another's. However, Al-Rodhan warns that geographical proximity can also lead to friction and conflict.

Al-Rodhan maintains that sustainable civilisational triumph will occur when all components of the geo-cultural domains can flourish, even if they flourish in different degrees. Human civilization should indeed be considered as an ocean, where the various geo-cultural domains add depth whenever the conditions for the most advanced forms of human enterprise to thrive are met. This means it is necessary to focus on boundary marking practices and concrete situations. Moreover, civilisational triumph requires some degree of socio-economic equality as well as multilateral institutions that are premised on rules and practices perceived to be fair. Finally, Al-Rodhan notes that it demands conditions under which innovation and learning can thrive. He argues that there needs to be an emphasis on expanding the boundaries of geo-cultural identities and on encouraging greater acceptance of overlapping identities.

Cultural Vigor:

"Cultural Vigor" is a concept proposed by philosopher Nayef Al-Rodhan. He defines cultural vigor as cultural resilience and strength that results from mixing and exchanges between various cultures and sub-cultures around the world.

In his general theory of human nature, which he calls "emotional amoral egoism," Al-Rodhan argues that all humans are motivated, among other things, by arrogance, injustice, exceptionalism, and exclusion. According to him, these particular motivating factors are unfounded, misguided, and hinder humankind's potential for synergistic progress and prosperity. In order to combat these tendencies, Al-Rodhan argues that cultural vigor and ethnic and cultural diversity must be actively promoted by governments and civil society.

Al-Rodhan compares cultural vigor to the natural phenomenon of "hybrid vigor", arguing that in nature, molecular and genetic diversity produce stronger and more resilient organisms that are less susceptible to disease and mutational challenges.

Similar resilience can be produced through fostering cultural and ethnic diversity. Ultimately, Al-Rodhan maintains that cultural vigor will ensure humanity's future and will improve humans' ability to survive and thrive.

Defense:

The defense of cultural diversity can have several meanings:

Cultural uniformity:

Cultural diversity is presented as the antithesis of cultural uniformity.

Some, including UNESCO, fear a trend towards cultural uniformity. To support this argument, they emphasize several different aspects.

There are several international organizations that work towards protecting threatened societies and cultures, including Survival International and UNESCO. The UNESCO Universal Declaration on Cultural Diversity, adopted by 185 Member States in 2001, represents the first international standard-setting instrument aimed at preserving and promoting cultural diversity and intercultural dialogue.

Indeed, the notion of "cultural diversity" has been echoed by more neutral organizations, particularly within UNESCO. Beyond the Declaration of Principles adopted in 2003 at the Geneva Phase of the World Summit on the Information Society (WSIS), the UNESCO Convention on the Protection and Promotion of the Diversity of Cultural Expressions was adopted on 20 October 2005, but it was not ratified by the US, Australia, or Israel.

The Convention is a clear recognition of the specificity of cultural goods and services, as well as of state sovereignty and public services in this area. Conceived with world trade in mind, this soft-law instrument (whose strength lies in its non-binding nature) became a crucial reference point in defining European policy choices.

In 2009, the European Court of Justice favored a broad view of culture, extending beyond cultural values, through the protection of film and the previously recognized objective of promoting linguistic diversity. In addition, under this Convention, the EU and China have committed to fostering more balanced cultural exchanges and to strengthening international cooperation and solidarity, including business and trade opportunities in the cultural and creative industries.

The European Commission-funded Network of Excellence on "Sustainable Development in a Diverse World" (known as "SUS.DIV") builds upon the UNESCO Declaration to investigate the relationship between cultural diversity and sustainable development.

Overview:

The many separate societies that emerged around the globe differed markedly from each other, and many of these differences persist to this day. As well as the more obvious cultural differences that exist between people, such as language, dress and traditions, there are also significant variations in the way societies organize themselves, in their shared conception of morality, and in the ways they interact with their environment. Cultural diversity can be seen as analogous to biodiversity.

Opposition and support:

By analogy with biodiversity, which is thought to be essential to the long-term survival of life on earth, it can be argued that cultural diversity may be vital for the long-term survival of humanity; and that the conservation of indigenous cultures may be as important to humankind as the conservation of species and ecosystems is to life in general.

The General Conference of UNESCO took this position in 2001, asserting in Article 1 of the Universal Declaration on Cultural Diversity that "...cultural diversity is as necessary for humankind as biodiversity is for nature."

This position is rejected by some people, on several grounds. Firstly, like most evolutionary accounts of human nature, the importance of cultural diversity for survival may be an un-testable hypothesis, which can neither be proved nor disproved. Secondly, it can be argued that it is unethical deliberately to conserve "less developed" societies, because this will deny people within those societies the benefits of technological and medical advances enjoyed by those in the "developed" world.

Just as promoting poverty in underdeveloped nations as "cultural diversity" is unethical, it is equally unethical to promote all religious practices simply because they are seen to contribute to cultural diversity. Certain religious practices are recognized by the WHO and the UN as unethical, including female genital mutilation (FGM), polygamy, child marriage, and human sacrifice.

With the onset of globalization, traditional nation-states have been placed under enormous pressures. Today, with the development of technology, information and capital are transcending geographical boundaries and reshaping the relationships between the marketplace, states and citizens. In particular, the growth of the mass media industry has had a large impact on individuals and societies across the globe.

Although beneficial in some ways, this increased accessibility has the capacity to negatively affect a society's individuality. With information being so easily distributed throughout the world, cultural meanings, values and tastes run the risk of becoming homogenized. As a result, the strength of identity of individuals and societies may begin to weaken.

Some individuals, particularly those with strong religious beliefs, maintain that it is in the best interests of individuals and of humanity as a whole that all people adhere to a specific model for society or specific aspects of such a model.

Communication between countries is becoming increasingly frequent, and more and more students choose to study overseas to experience cultural diversity. Their goal is to broaden their horizons and develop themselves by studying abroad.

For example, in their paper "Academic Freedom in the People's Republic of China and the United States of America," Chen Fengling, Du Yanjun, and Yu Ma point out that in Chinese education, "traditionally, teaching has consisted of spoon feeding, and learning has been largely by rote. China's traditional system of education has sought to make students accept fixed and ossified content." They add: "In the classroom, Chinese professors are the laws and authorities; Students in China show great respect to their teachers in general."

In American education, by contrast, "American students treat college professors as equals." Moreover, "American students are encouraged to debate topics. The free open discussion on various topics is due to the academic freedom which most American colleges and universities enjoy." This comparison gives an overall idea of the differences between education in China and in the United States. However, we cannot simply judge which one is better, because each culture has its own advantages and features.

Such differences are what make up cultural diversity, and they make our world more colorful. For students who go abroad for education, the ability to combine positive elements from two different cultures in their self-development can be a competitive advantage throughout their careers. This is especially true amid today's economic globalization, in which people who hold different cultural perspectives stand in a more competitive position.

Quantification:

Cultural diversity is tricky to quantify, but a good indication is thought to be a count of the number of languages spoken in a region or in the world as a whole. By this measure we may be going through a period of precipitous decline in the world's cultural diversity. Research carried out in the 1990s by David Crystal (Honorary Professor of Linguistics at the University of Wales, Bangor) suggested that at that time, on average, one language was falling into disuse every two weeks. He calculated that if that rate of language death were to continue, more than 90% of the languages spoken at that time would be extinct by the year 2100.

Overpopulation, immigration and imperialism (of both the militaristic and cultural kind) are reasons that have been suggested to explain any such decline. However, it could also be argued that with the advent of globalism, a decline in cultural diversity is inevitable because information sharing often promotes homogeneity.

Cultural heritage:

The Universal Declaration on Cultural Diversity adopted by UNESCO in 2001 is a legal instrument that recognizes cultural diversity as "common heritage of humanity" and considers its safeguarding to be a concrete and ethical imperative inseparable from respect for human dignity.

Beyond the Declaration of Principles adopted in 2003 at the Geneva Phase of the World Summit on the Information Society (WSIS), the UNESCO Convention on the Protection and Promotion of the Diversity of Cultural Expressions, adopted in October 2005, is also regarded as a legally binding instrument that recognizes:

- The distinctive nature of cultural goods, services and activities as vehicles of identity, values and meaning;

- That while cultural goods, services and activities have important economic value, they are not mere commodities or consumer goods that can only be regarded as objects of trade.

It was adopted in response to "growing pressure exerted on countries to waive their right to enforce cultural policies and to put all aspects of the cultural sector on the table when negotiating international trade agreements". To date, 116 member states and the European Union have ratified the Convention; the US, Australia and Israel have not.

The Convention is a clear recognition of the specificity of cultural goods and services, as well as of state sovereignty and public services in this area. Conceived with world trade in mind, this soft-law instrument (whose strength lies in its non-binding nature) became a crucial reference point in defining European policy choices. In 2009, the European Court of Justice favoured a broad view of culture, extending beyond cultural values, through the protection of film and the previously recognized objective of promoting linguistic diversity.

In addition, under this Convention, the EU and China have committed to fostering more balanced cultural exchanges and to strengthening international cooperation and solidarity, including business and trade opportunities in the cultural and creative industries. The main factor motivating Beijing's willingness to partner at the business level may well be access to creative talent and skills from foreign markets.

There is also the Convention for the Safeguarding of the Intangible Cultural Heritage, ratified by 78 states as of June 20, 2007, which states: "The intangible cultural heritage, transmitted from generation to generation, is constantly recreated by communities and groups in response to their environment, their interaction with nature and their history, and gives them a sense of identity and continuity, thus promoting respect for cultural diversity and human creativity."

Cultural diversity was also promoted by the Montreal Declaration of 2007 and by the European Union. The idea of a global multicultural heritage covers several ideas that are not mutually exclusive (see multiculturalism). In addition to language, diversity can also include religious or traditional practices.

On a local scale, Agenda 21 for culture, the first document of world scope that establishes the foundations for a commitment by cities and local governments to cultural development, supports local authorities committed to cultural diversity.

Ocean Model of One Human Civilization:

Philosopher Nayef Al-Rodhan argues that previous concepts of civilizations, such as Samuel P. Huntington's arguments supporting a coming "clash of civilizations," are misconstrued. Human civilization should not be thought of as consisting of numerous separate and competing civilizations, but rather it should be thought of collectively as only one human civilization.

Within this civilization are many geo-cultural domains that comprise sub-cultures. This concept presents human history as one fluid story and encourages a philosophy of history that encompasses the entire span of human time as opposed to thinking about civilization in terms of single time periods.

Al-Rodhan envisions human civilization as an ocean into which the different geo-cultural domains flow like rivers. According to him, at points where geo-cultural domains first enter the ocean of human civilization, there is likely to be a concentration or dominance of that culture.

However, over time, all the rivers of geo-cultural domains become one. Therefore, an equal mix of all cultures will exist at the middle of the ocean, although the mix might be weighted towards the dominant culture of the day. Al-Rodhan maintains that there is fluidity at the ocean's center and that cultures will have the opportunity to borrow from one another, especially when one culture's domain or "river" is in geographical proximity to another's. He warns, however, that geographical proximity can also lead to friction and conflict.

Al-Rodhan maintains that sustainable civilisational triumph will occur when all components of the geo-cultural domains can flourish, even if they flourish to different degrees. Human civilization should indeed be considered as an ocean, where the various geo-cultural domains add depth whenever the conditions for the most advanced forms of human enterprise to thrive are met. This means it is necessary to focus on boundary-marking practices and concrete situations. Moreover, civilisational triumph requires some degree of socio-economic equality as well as multilateral institutions that are premised on rules and practices perceived to be fair. Finally, Al-Rodhan notes that it demands conditions under which innovation and learning can thrive. He argues that there needs to be an emphasis on expanding the boundaries of geo-cultural identities and on encouraging greater acceptance of overlapping identities.

Cultural Vigor:

"Cultural Vigor" is a concept proposed by philosopher Nayef Al-Rodhan. He defines cultural vigor as the cultural resilience and strength that result from mixing and exchanges between various cultures and sub-cultures around the world.

In his general theory of human nature, which he calls "emotional amoral egoism", Al-Rodhan argues that all humans are motivated, among other things, by arrogance, injustice, exceptionalism, and exclusion. According to him, these particular motivating factors are unfounded and misguided, and they hinder humankind's potential for synergistic progress and prosperity. To combat these tendencies, Al-Rodhan argues that governments and civil society must actively promote cultural vigor and ethnic and cultural diversity.