Copyright © 2015 Bert N. Langford (Images may be subject to copyright. Please send feedback)

Welcome to Our Generation USA!

Computer Advancements

covers the many forms of computer technology, whether hardware, software, applications, or computing standards.

[Your Webhost is one of the earliest magazine professionals to use computers (then called "microcomputers"), starting in 1982: while consulting for a publisher, I purchased a Tandy Radio Shack TRS-80 computer. This was before PCs and Windows, and the operating system was very unstable, resulting in lost files and similar problems.

Once PCs became available, we moved up to Windows and installed our own (primitive) Local Area Network (LAN). Meanwhile, the leading "magazine about magazines", Folio, got wind of this and began a decade-long relationship in which I wrote articles for Folio and spoke at seminars.]

Computer Technology, including a Timeline of Computing Developments

YouTube video of "Information Age: The computer that changed our world"

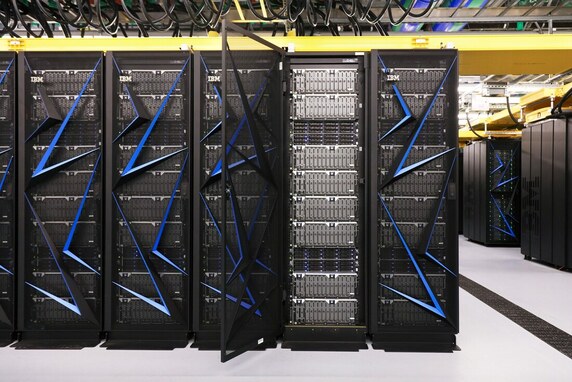

YouTube Video about the Top 10 Most Powerful Supercomputers in the world 2021

Click here for a Timeline of Computing

A computer is a device that can be instructed to carry out sequences of arithmetic or logical operations automatically via computer programming.

Modern computers have the ability to follow generalized sets of operations, called programs. These programs enable computers to perform an extremely wide range of tasks.

Computers are used as control systems for a wide variety of industrial and consumer devices. This includes simple special purpose devices like microwave ovens and remote controls, factory devices such as industrial robots and computer-aided design, and also general purpose devices like personal computers and mobile devices such as smartphones.

Early computers were only conceived as calculating devices. Since ancient times, simple manual devices like the abacus aided people in doing calculations. Early in the Industrial Revolution, some mechanical devices were built to automate long tedious tasks, such as guiding patterns for looms.

More sophisticated electrical machines did specialized analog calculations in the early 20th century. The first digital electronic calculating machines were developed during World War II. The speed, power, and versatility of computers have been increasing dramatically ever since then.

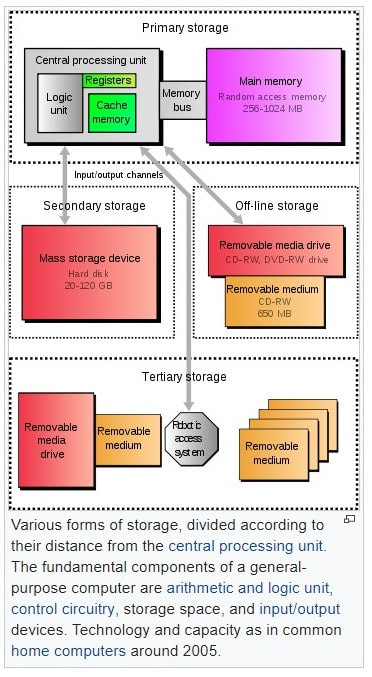

Conventionally, a modern computer consists of at least one processing element, typically a central processing unit (CPU), and some form of memory. The processing element carries out arithmetic and logical operations, and a sequencing and control unit can change the order of operations in response to stored information.
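The idea that a control unit can change the order of operations in response to stored information can be illustrated with a toy machine. The sketch below is only an illustration: the instruction names (`LOAD`, `ADD`, `SUB`, `STORE`, `JUMP_IF_POS`, `HALT`) and the three-cell memory layout are invented for this example and do not correspond to any real processor.

```python
from typing import List, Tuple

Instruction = Tuple[str, int]  # (operation name, memory address or jump target)


def run(program: List[Instruction], memory: List[int]) -> List[int]:
    """Execute a toy program and return the memory contents after HALT."""
    acc = 0  # accumulator: the single working register of the processing element
    pc = 0   # program counter: which instruction the control unit fetches next
    while True:
        op, arg = program[pc]
        pc += 1  # by default, continue with the next instruction
        if op == "LOAD":
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]
        elif op == "SUB":
            acc -= memory[arg]
        elif op == "STORE":
            memory[arg] = acc
        elif op == "JUMP_IF_POS":
            # The order of operations depends on a stored value.
            if acc > 0:
                pc = arg
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction: {op}")


# Hypothetical memory layout: [counter, total, constant 1].
# The program adds counter to total, decrements counter, and repeats while counter > 0.
SUM_DOWN: List[Instruction] = [
    ("LOAD", 1), ("ADD", 0), ("STORE", 1),   # total = total + counter
    ("LOAD", 0), ("SUB", 2), ("STORE", 0),   # counter = counter - 1
    ("JUMP_IF_POS", 0),                      # loop again if counter is still positive
    ("HALT", 0),
]

print(run(SUM_DOWN, [4, 0, 1]))  # prints [0, 10, 1], since 4 + 3 + 2 + 1 = 10
```

The same `run` function executes any list of instructions, which is the sense in which a stored-program computer is general purpose: changing the program changes the task without changing the machine.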

Peripheral devices include input devices (keyboards, mice, joysticks, etc.), output devices (monitor screens, printers, etc.), and input/output devices that perform both functions (e.g., the 2000s-era touchscreen). Peripheral devices allow information to be retrieved from an external source, and they enable the results of operations to be saved and retrieved.

Click on any of the following blue hyperlinks for more about Computers:

- Etymology

- History

- Types

- Hardware

- Software

- Firmware

- Networking and the Internet

- Unconventional computers

- Unconventional computing

- Future

- Professions and organizations

- See also:

- Information technology portal

- Glossary of computers

- Computability theory

- Computer insecurity

- Computer security

- Glossary of computer hardware terms

- History of computer science

- List of computer term etymologies

- List of fictional computers

- List of pioneers in computer science

- Pulse computation

- TOP500 (list of most powerful computers)

- Media related to Computers at Wikimedia Commons

- Wikiversity has a quiz on this article

- Warhol & The Computer

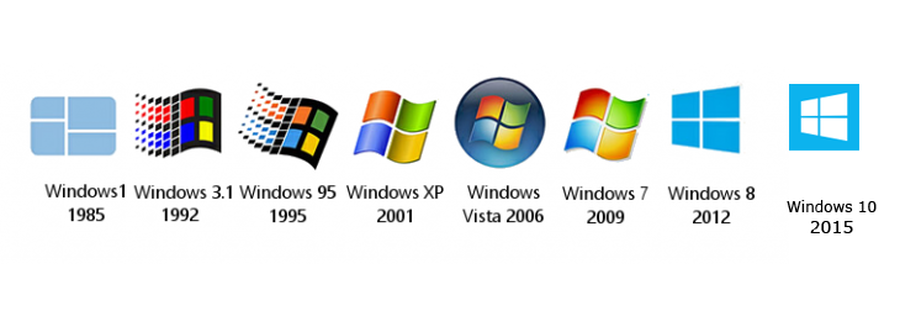

Computer Operating Systems, including a List of Operating Systems

YouTube Video about the History of Computer Operating Systems

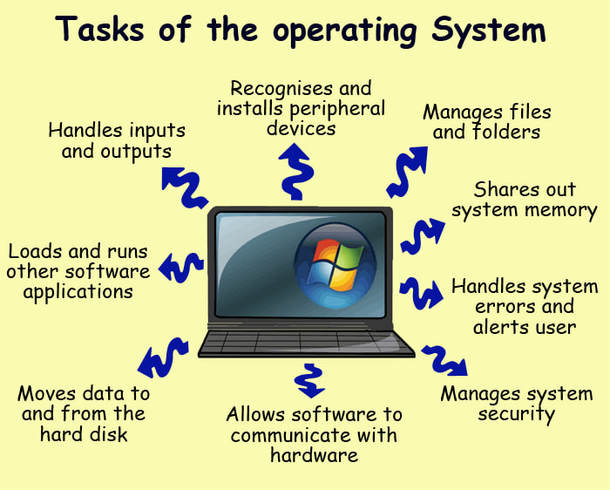

Pictured below: What is an Operating System, and what tasks does the Operating System perform?

Click here for a list of Operating Systems.

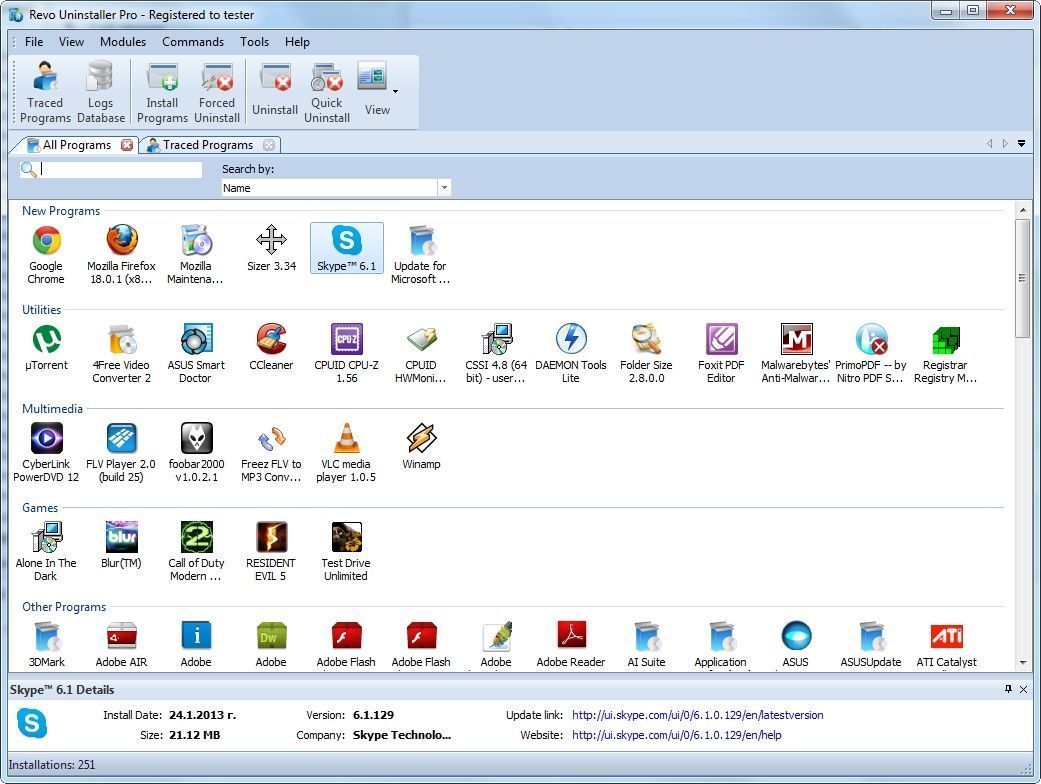

A computer operating system (OS) is system software that manages computer hardware and software resources and provides common services for computer programs.

Time-sharing operating systems schedule tasks for efficient use of the system and may also include accounting software for cost allocation of processor time, mass storage, printing, and other resources.
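As a rough illustration of time-sharing, the sketch below uses round-robin scheduling, one simple policy among many, and keeps a per-job tally of processor time of the kind an accounting component might use for cost allocation. The function name `round_robin`, the job names, and the time units are assumptions made for this example, not a description of any particular operating system.

```python
from collections import deque
from typing import Dict, List, Tuple


def round_robin(jobs: Dict[str, int], quantum: int) -> Tuple[List[str], Dict[str, int]]:
    """Share one processor among jobs in fixed time slices.

    jobs maps a job name to the processor time it needs.
    Returns the order in which slices were granted and the time charged to each job.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque(jobs.items())
    order: List[str] = []
    used = {name: 0 for name in jobs}  # accounting: time consumed per job
    while queue:
        name, remaining = queue.popleft()
        time_slice = min(quantum, remaining)
        order.append(name)
        used[name] += time_slice
        if remaining > time_slice:
            # Not finished: go to the back of the line and wait for another turn.
            queue.append((name, remaining - time_slice))
    return order, used


order, used = round_robin({"editor": 3, "compiler": 5, "printer": 2}, quantum=2)
print(order)  # ['editor', 'compiler', 'printer', 'editor', 'compiler', 'compiler']
print(used)   # {'editor': 3, 'compiler': 5, 'printer': 2}
```

Because no job holds the processor for longer than one quantum, short jobs such as the hypothetical `printer` finish early instead of waiting behind long ones.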

For hardware functions such as input and output and memory allocation, the operating system acts as an intermediary between programs and the computer hardware, although the application code is usually executed directly by the hardware and frequently makes system calls to an OS function or is interrupted by it. Operating systems are found on many devices that contain a computer – from cellular phones and video game consoles to web servers and supercomputers.
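The intermediary role described above can be seen from an ordinary program. The sketch below uses functions from Python's standard `os` module, which are thin wrappers around operating system calls for opening, writing, reading, and closing files. The helper name `write_then_read` and the file name `demo.txt` are made up for this example.

```python
import os
import tempfile


def write_then_read(message: bytes) -> bytes:
    """Store bytes in a temporary file and read them back via OS-level calls."""
    folder = tempfile.mkdtemp()
    path = os.path.join(folder, "demo.txt")

    # Ask the OS to create the file; it hands back a small integer (a file descriptor).
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        os.write(fd, message)  # the OS, not this program, drives the storage hardware
    finally:
        os.close(fd)

    fd = os.open(path, os.O_RDONLY)
    try:
        data = os.read(fd, len(message))
    finally:
        os.close(fd)

    # Clean up the temporary file and folder.
    os.unlink(path)
    os.rmdir(folder)
    return data


print(write_then_read(b"hello from user code"))
```

The program never addresses the disk directly. It only names a file and supplies bytes, and the operating system decides where and how the data are physically stored.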

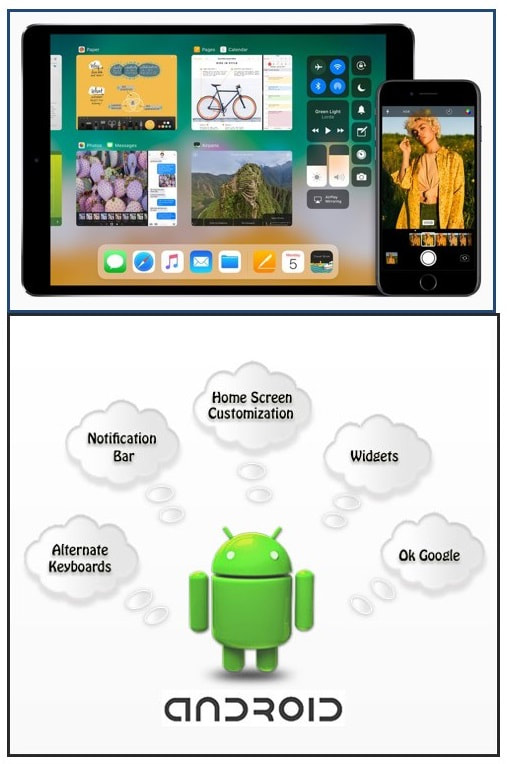

The dominant desktop operating system is Microsoft Windows with a market share of around 82.74%. macOS by Apple Inc. is in second place (13.23%), and the varieties of Linux are collectively in third place (1.57%).

In the mobile sector (smartphones and tablets combined), Google's Android accounted for up to 70% of use in 2017. According to third-quarter 2016 data, Android on smartphones is dominant with 87.5 percent and a growth rate of 10.3 percent per year, followed by Apple's iOS with 12.1 percent and a decrease in market share of 5.2 percent per year, while other operating systems amount to just 0.3 percent.

Linux distributions are dominant in the server and supercomputing sectors. Other specialized classes of operating systems, such as embedded and real-time systems, exist for many applications.

Click on any of the following blue hyperlinks for more about Operating Systems:

- Types of operating systems

- History

- Examples

- Components

- Real-time operating systems

- Operating system development as a hobby

- Diversity of operating systems and portability

- Market share

- See also:

- Antivirus software

- Comparison of operating systems

- Crash (computing)

- Hypervisor

- Interruptible operating system

- List of important publications in operating systems

- List of pioneers in computer science

- Live CD

- Glossary of operating systems terms

- Microcontroller

- Mobile device

- Mobile operating system

- Network operating system

- Object-oriented operating system

- Operating System Projects

- System Commander

- System image

- Timeline of operating systems

- Usage share of operating systems

The Digital Revolution

YouTube video: illustrating how much the Digital Revolution has Changed Our World

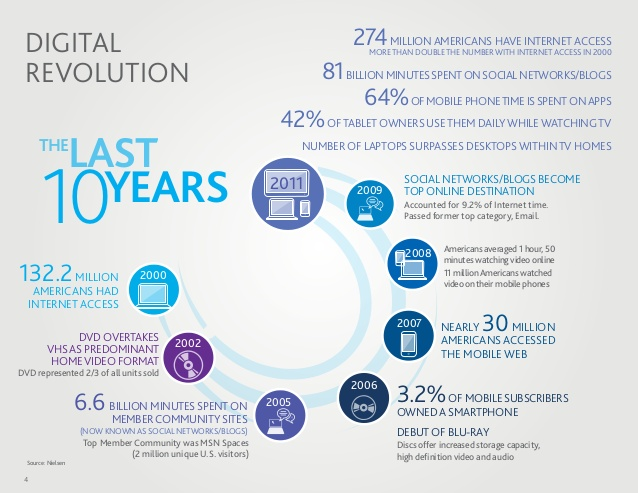

Pictured: The Digital Revolution in Just 10 Years!

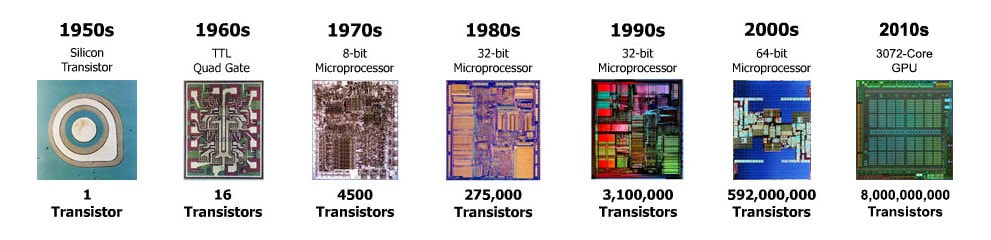

The Digital Revolution, also known as the Third Industrial Revolution, is the shift from mechanical and analogue electronic technology to digital electronics. It began sometime between the late 1950s and the late 1970s with the adoption and proliferation of digital computers and digital record keeping, and it continues to the present day.

Implicitly, the term also refers to the sweeping changes brought about by digital computing and communication technology during (and after) the latter half of the 20th century.

Analogous to the Agricultural Revolution and Industrial Revolution, the Digital Revolution marked the beginning of the Information Age.

Central to this revolution is the mass production and widespread use of digital logic circuits and their derived technologies, including the computer, the digital cellular phone, and the Internet.

Unlike older machines, digital computers can be reprogrammed to fit any purpose.

For expansion, click on any of the following hyperlinks:

- 1 Trends of technological revolutions

- 2 Rise in digital technology use, 1990–2010

- 3 Brief history

- 4 Timeline

- 5 Converted technologies

- 6 Technological basis

- 7 Socio-economic impact

- 8 Concerns

- See also:

Computer Science

YouTube Video: Map of Computer Science

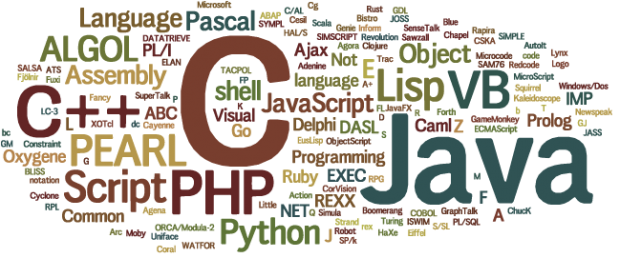

Computer science is the study of the theory, experimentation, and engineering that form the basis for the design and use of computers. It is the scientific and practical approach to computation and its applications and the systematic study of the feasibility, structure, expression, and mechanization of the methodical procedures (or algorithms) that underlie the acquisition, representation, processing, storage, communication of, and access to, information.

An alternate, more succinct definition of computer science is the study of automating algorithmic processes that scale. A computer scientist specializes in the theory of computation and the design of computational systems. See glossary of computer science.

Computer Science fields can be divided into a variety of theoretical and practical disciplines. Some fields, such as computational complexity theory (which explores the fundamental properties of computational and intractable problems), are highly abstract, while fields such as computer graphics emphasize real-world visual applications.

Other fields still focus on challenges in implementing computation. For example, programming language theory considers various approaches to the description of computation, while the study of computer programming itself investigates various aspects of the use of programming language and complex systems. Human–computer interaction considers the challenges in making computers and computations useful, usable, and universally accessible to humans.

Click on any of the following blue hyperlinks for more about Computer Science:

- History

- Etymology

- Philosophy

- Areas of computer science

- The great insights of computer science

- Academia

- Education

- See also:

- Main article: Outline of computer science

- Main article: Glossary of computer science

- Association for Computing Machinery

- Computer Science Teachers Association

- Informatics and Engineering informatics

- Information technology

- List of academic computer science departments

- List of computer scientists

- List of publications in computer science

- List of pioneers in computer science

- List of unsolved problems in computer science

- Outline of software engineering

- Technology transfer in computer science

- Turing Award

- Computer science – Wikipedia book

- Scholarly Societies in Computer Science

- What is Computer Science?

- Best Papers Awards in Computer Science since 1996

- Photographs of computer scientists by Bertrand Meyer

- EECS.berkeley.edu

- Bibliography and academic search engines

- CiteSeerx (article): search engine, digital library and repository for scientific and academic papers with a focus on computer and information science.

- DBLP Computer Science Bibliography (article): computer science bibliography website hosted at Universität Trier, in Germany.

- The Collection of Computer Science Bibliographies (article)

- Professional organizations

- Miscellaneous:

- Computer Science—Stack Exchange: a community-run question-and-answer site for computer science

- What is computer science

- Is computer science science?

- Computer Science (Software) Must be Considered as an Independent Discipline.

High Tech, including Silicon Valley

YouTube Video: Inside Samsung's new Silicon Valley headquarters by C/Net

YouTube Video: Are Smart Homes the Future of High-Tech Living? by ABC News

Pictured: What tech campuses in Silicon Valley look like from above by CNBC

High technology, or "High Tech" is technology that is at the cutting edge: the most advanced technology available.

As of the onset of the 21st century, products considered high tech are often those that incorporate advanced computer electronics. However, there is no specific class of technology that is high tech—the definition shifts and evolves over time—so products hyped as high-tech in the past may now be considered to be everyday or outdated technology.

The opposite of high tech is low technology, referring to simple, often traditional or mechanical technology; for example, a slide rule is a low-tech calculating device.

Click on any of the following blue hyperlinks for more about High Tech:

- Origin of the term

- Economy

- High-tech society

- See also:

- Low technology

- Intermediate technology - sometimes used to mean technology between low and high technology

- Industrial design

- List of emerging technologies

Silicon Valley is a nickname for the southern portion of the Bay Area, in the northern part of the U.S. state of California. The "valley" in its name refers to the Santa Clara Valley in Santa Clara County, which includes the city of San Jose and surrounding cities and towns, where the region has been traditionally centered. The region has expanded to include the southern half of the Peninsula in San Mateo County, and southern portions of the East Bay in Alameda County.

The word "silicon" originally referred to the large number of silicon chip innovators and manufacturers in the region, but the area is now the home to many of the world's largest high-tech corporations, including the headquarters of 39 businesses in the Fortune 1000, and thousands of startup companies.

Silicon Valley also accounts for one-third of all of the venture capital investment in the United States, which has helped it to become a leading hub and startup ecosystem for high-tech innovation and scientific development. It was in the Valley that the silicon-based integrated circuit, the microprocessor, and the microcomputer, among other key technologies, were developed. As of 2013, the region employed about a quarter of a million information technology workers.

As more high-tech companies were established across San Jose and the Santa Clara Valley, and then north towards the Bay Area's two other major cities, San Francisco and Oakland, the term "Silicon Valley" has come to have two definitions: a geographic one, referring to Santa Clara County, and a metonymical one, referring to all high-tech businesses in the Bay Area or even in the United States. The term is now generally used as a synecdoche for the American high-technology economic sector. The name also became a global synonym for leading high-tech research and enterprises, and thus inspired similarly named locations, as well as research parks and technology centers with a comparable structure, all around the world.

Click on any of the following blue hyperlinks for more about Silicon Valley:

- Origin of the term

- History (before 1970s)

- History (1971 and later)

- Economy

- Demographics

- Municipalities

- Universities, colleges, and trade schools

- Art galleries and museums

- Media outlets

- Cultural references

- See also:

- BioValley

- List of attractions in Silicon Valley

- List of research parks around the world

- List of technology centers around the world

- Mega-Site, a type of land development by private developers, universities, or governments to promote business clusters

- Silicon Hills

- Silicon Wadi

- STEM fields

- Tech Valley

- Santa Clara County: California's Historic Silicon Valley—A National Park Service website

- Silicon Valley—An American Experience documentary broadcast in 2013

- Silicon Valley Cultures Project at the Wayback Machine (archived December 20, 2007) from San Jose State University

- Silicon Valley Historical Association

- The Birth of Silicon Valley

Information Technology (IT), including a List of the largest IT Companies based on Revenues

YouTube Video: What is I.T.? Information Technology

Pictured: Logos of the three largest IT companies (L-R): Samsung, Apple, and Amazon

Click here for a List of the Largest IT Companies based on Revenues.

Information technology (IT) is the application of computers to store, retrieve, transmit and manipulate data, often in the context of a business or other enterprise.

IT is considered a subset of information and communications technology (ICT). In 2012, Zuppo proposed an ICT hierarchy where each hierarchy level "contain some degree of commonality in that they are related to technologies that facilitate the transfer of information and various types of electronically mediated communications." Business/IT was one level of the ICT hierarchy.

The term is commonly used as a synonym for computers and computer networks, but it also encompasses other information distribution technologies such as television and telephones.

Several industries are associated with information technology, including:

- computer hardware,

- software,

- electronics,

- semiconductors,

- internet,

- telecom equipment,

- engineering,

- healthcare,

- e-commerce,

- and computer services.

Humans have been storing, retrieving, manipulating and communicating information since the Sumerians in Mesopotamia developed writing in about 3000 BC, but the term information technology in its modern sense first appeared in a 1958 article published in the Harvard Business Review; authors Harold J. Leavitt and Thomas L. Whisler commented that "the new technology does not yet have a single established name. We shall call it information technology (IT)."

Their definition consists of three categories: techniques for processing, the application of statistical and mathematical methods to decision-making, and the simulation of higher-order thinking through computer programs.

Based on the storage and processing technologies employed, it is possible to distinguish four distinct phases of IT development: pre-mechanical (3000 BC – 1450 AD), mechanical (1450–1840), electromechanical (1840–1940), and electronic (1940–present), followed more recently by IT as a service. This article focuses on the most recent period (electronic), which began in about 1940.


Global Positioning System (GPS) and Ten Ways Your Smartphone Knows Where You Are: BUT, does GPS Work Well Enough? (New York Times 1/23/2021)

YouTube Video: The Truth About GPS: How it works (Courtesy of the U.S. Air Force Space Command)

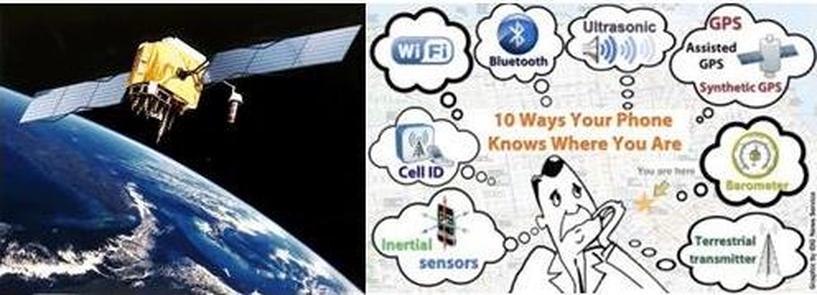

Pictured: Left: Artist's conception of GPS Block II-F satellite in Earth orbit; Right: Ten ways your smartphone knows where you are

America Has a GPS Problem (NY Times Opinion 1/23/2021)

By Kate Murphy

Kate Murphy, a frequent contributor to The New York Times, is a commercial pilot and author of “You’re Not Listening: What You’re Missing and Why It Matters.”

Time was when nobody knew, or even cared, exactly what time it was. The movement of the sun, phases of the moon and changing seasons were sufficient indicators. But since the Industrial Revolution, we’ve become increasingly dependent on knowing the time, and with increasing accuracy. Not only does the time tell us when to sleep, wake, eat, work and play; it tells automated systems when to execute financial transactions, bounce data between cellular towers and throttle power on the electrical grid.

Coordinated Universal Time, or U.T.C., the global reference for timekeeping, is beamed down to us from extremely precise atomic clocks aboard Global Positioning System (GPS) satellites. The time it takes for GPS signals to reach receivers is also used to calculate location for air, land and sea navigation.
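The link between signal travel time and distance is simple arithmetic: radio signals travel at the speed of light, so distance equals speed multiplied by time. The sketch below shows only that single step. The function names are invented for this illustration, and a real receiver combines measurements from several satellites and also corrects for its own clock error, which is not modeled here.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # defined value of the speed of light in vacuum


def range_from_travel_time(travel_time_s: float) -> float:
    """Distance in meters covered by a radio signal in the given time."""
    if travel_time_s < 0:
        raise ValueError("travel time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * travel_time_s


def range_error_from_clock_error(clock_error_s: float) -> float:
    """Distance error in meters caused by a timing error of the given size."""
    return SPEED_OF_LIGHT_M_PER_S * abs(clock_error_s)


# A timing error of one millionth of a second shifts the computed distance
# by roughly 300 meters, which is why the clocks must be so precise.
print(round(range_error_from_clock_error(1e-6), 1))  # prints 299.8
```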

Owned and operated by the U.S. government, GPS is likely the least recognized, and least appreciated, part of our critical infrastructure. Indeed, most of our critical infrastructure would cease to function without it.

The problem is that GPS signals are incredibly weak, due to the distance they have to travel from space, making them subject to interference and vulnerable to jamming and what is known as spoofing, in which another signal is passed off as the original. And the satellites themselves could easily be taken out by hurtling space junk or the sun coughing up a fireball.

As intentional and unintentional GPS disruptions are on the rise, experts warn that our overreliance on the technology is courting disaster, but they are divided on what to do about it.

“If we don’t get good backups on line, then GPS is just a soft rib of ours, and we could be punched here very quickly,” said Todd Humphreys, an associate professor of aerospace engineering at the University of Texas in Austin. If GPS was knocked out, he said, you’d notice. Think widespread power outages, financial markets seizing up and the transportation system grinding to a halt. Grocers would be unable to stock their shelves, and Amazon would go dark. Emergency responders wouldn’t be able to find you, and forget about using your cellphone.

Mr. Humphreys got the attention of the U.S. Department of Defense and the Federal Aviation Administration about this issue back in 2008 when he published a paper showing he could spoof GPS receivers. At the time, he said he thought the threat came mainly from hackers with something to prove: “I didn’t even imagine that the level of interference that we’ve been seeing recently would be attributable to state actors.”

More than 10,000 incidents of GPS interference have been linked to China and Russia in the past five years. Ship captains have reported GPS errors showing them 20-120 miles inland when they were actually sailing off the coast of Russia in the Black Sea.

Also well documented are ships suddenly disappearing from navigation screens while maneuvering in the Port of Shanghai. After GPS disruptions at Tel Aviv’s Ben Gurion Airport in 2019, Israeli officials pointed to Syria, where Russia has been involved in the nation’s long-running civil war. And last summer, the United States Space Command accused Russia of testing antisatellite weaponry.

But it’s not just nation-states messing with GPS. Spoofing and jamming devices have gotten so inexpensive and easy to use that delivery drivers use them so their dispatchers won’t know they’re taking long lunch breaks or having trysts at Motel 6. Teenagers use them to foil their parents’ tracking apps and to cheat at Pokémon Go. More nefariously, drug cartels and human traffickers have spoofed border control drones. Dodgy freight forwarders may use GPS jammers or spoofers to cloak or change the time stamps on arriving cargo.

These disruptions not only affect their targets; they can also affect anyone using GPS in the vicinity.

“You might not think you’re a target, but you don’t have to be,” said Guy Buesnel, a position, navigation and timing specialist with the British network and cybersecurity firm Spirent. “We’re seeing widespread collateral or incidental effects.” In 2013 a New Jersey truck driver interfered with Newark Liberty International Airport’s satellite-based tracking system when he plugged a GPS jamming device into his vehicle’s cigarette lighter to hide his location from his employer.

The risk posed by our overdependency on GPS has been raised repeatedly at least since 2000, when its signals were fully opened to civilian use. Launched in 1978, GPS was initially reserved for military purposes, but after the signals became freely available, the commercial sector quickly realized their utility, leading to widespread adoption and innovation.

Nowadays, most people carry a GPS receiver everywhere they go — embedded in a mobile phone, tablet, watch or fitness tracker.

An emergency backup for GPS was mandated by the National Timing Resilience and Security Act of 2018. The legislation said a reliable alternate system needed to be operational within two years, but that hasn’t happened yet.

Part of the reason for the holdup, aside from a pandemic, is disagreement between government agencies and industry groups on what is the best technology to use, who should be responsible for it, which GPS capabilities must be backed up and with what degree of precision.

Of course, business interests that rely on GPS want a backup that’s just as good as the original, just as accessible and also free. Meanwhile, many government officials tend to think it shouldn’t be all their responsibility, particularly when the budget to manage and maintain GPS hit $1.7 billion in 2020.

“We’re becoming more nuanced in our approach,” said James Platt, the chief of strategic defense initiatives for the Cybersecurity and Infrastructure Security Agency, a division of the Department of Homeland Security. “We recognize some things are going to need to be backed up, but we’re also realizing that maybe some systems don’t need GPS to operate” and are designed around GPS only because it’s “easy and cheap.”

The 2018 National Defense Authorization Act included funding for the Departments of Defense, Homeland Security and Transportation to jointly conduct demonstrations of various alternatives to GPS, which were concluded last March.

Eleven potential systems were tested, including eLoran, a low-frequency, high-power timing and navigation system transmitted from terrestrial towers at Coast Guard facilities throughout the United States.

“China, Russia, Iran, South Korea and Saudi Arabia all have eLoran systems because they don’t want to be as vulnerable as we are to disruptions of signals from space,” said Dana Goward, the president of the Resilient Navigation and Timing Foundation, a nonprofit that advocates for the implementation of an eLoran backup for GPS.

Also under consideration by federal authorities are timing systems delivered via fiber optic network and satellite systems in a lower orbit than GPS, which therefore have a stronger signal, making them harder to hack. A report on the technologies was submitted to Congress last week.

Prior to the report’s submission, Karen Van Dyke, the director of the Office of Positioning, Navigation and Timing and Spectrum Management at the Department of Transportation, predicted that the recommendation would probably not be a one-size-fits-all approach but would embrace “multiple and diverse technologies” to spread out the risk.

Indicators are that the government is likely to develop standards for GPS backup systems and require their use in critical sectors, but not feel obliged to wholly fund or build such systems for public use.

Last February, Donald Trump signed an executive order titled Strengthening National Resilience Through Responsible Use of Positioning, Navigation and Timing Services that essentially put GPS users on notice that vital systems needed to be designed to cope with the increasing likelihood of outages or corrupted data and that they must have their own contingency plans should they occur.

“They think the critical infrastructure folks should figure out commercial services to support themselves in terms of timing and navigation,” said Mr. Goward. “I don’t know what they think first responders, ordinary citizens and small businesses are supposed to do.”

The fear is that debate and deliberation will continue, when time is running out.

[End of OpEd Piece]

___________________________________________________________________________

Ten Ways Your Smartphone Knows Where You Are, by PCWorld, April 6, 2012:

Also refer to above right Illustration:

One of the most important capabilities that smartphones now have is knowing where they are. More than desktops, laptops, personal navigation devices or even tablets, which are harder to take with you, a smartphone can combine its location with many other pieces of data to make new services available.

"There's a gamification aspect, there's a social aspect, and there's a utilitarian aspect," said analyst Avi Greengart of Current Analysis. Greengart believes cellphone location is in its second stage, moving beyond basic mapping and directions to social and other applications.

The third stage may bring uses we haven't even foreseen.

Like other digital technologies, these new capabilities come with worries as well as benefits. Consumers are particularly concerned about privacy when it comes to location because knowing where you are has implications for physical safety from stalking or arrest, said Seth Schoen, senior staff technologist at the Electronic Frontier Foundation. Yet most people have embraced location-based services without thinking about dangers such as service providers handing over location data in lawsuits or hackers stealing it from app vendors.

"This transition has been so quick that people haven't exactly thought through the implications on a large scale," Schoen said. "Most people aren't even very clear on which location technologies are active and which are passive." Many app-provider practices are buried in long terms of service. Risk increases with the number of apps that you authorize to collect location data, according to Schoen, so consumers have at least one element of control.

There are at least 10 different systems in use or being developed that a phone could use to identify its location. In most cases, several are used in combination, with one stepping in where another becomes less effective:

#1 GPS: Global Positioning System:

GPS was developed by the U.S. Department of Defense and was first included in cellphones in the late 1990s. It's still the best-known way to find your location outdoors. GPS uses a constellation of satellites that send location and timing data from space directly to your phone.

If the phone can pick up signals from three satellites, it can show where you are on a flat map, and with four, it can also show your elevation. Other governments have developed their own systems similar to GPS, but rather than conflicting with it, they can actually make outdoor location easier. Russia's GLONASS is already live and China's Compass is in trials.

Europe's Galileo and Japan's Quasi-Zenith Satellite System are also on the way. Phone chip makers are developing processors that can use multiple satellite constellations to get a location fix faster.
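The idea of fixing a position on a flat map from three transmitters can be sketched in a few lines of Python. This is a simplified illustration, not how a GPS chipset works: it assumes the distances to three known points are already measured exactly, and it ignores the receiver clock error and the third dimension that real receivers must handle. The function name and coordinates are made up for the example.

```python
def trilaterate_2d(anchors, distances):
    """Return (x, y) given three known (x, y) anchors and the distance to each.

    Illustrative only: flat plane, exact distances, no clock error.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = distances

    # Each anchor defines a circle. Subtracting circle 1 from circles 2 and 3
    # cancels the squared unknowns and leaves two linear equations in x and y.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2

    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")

    # Solve the 2x2 system with Cramer's rule.
    x = (b1 * a22 - b2 * a12) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


if __name__ == "__main__":
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    distances = [5.0, 65 ** 0.5, 45 ** 0.5]  # measured from the point (3, 4)
    print(trilaterate_2d(anchors, distances))
```

With fewer than three anchors, or with three anchors on one line, the circles intersect in more than one place, which is why the sketch rejects collinear anchors.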

#2 Assisted GPS:

GPS works well once your phone finds three or four satellites, but that may take a long time, or not happen at all if you're indoors or in an "urban canyon" of buildings that reflect satellite signals. Assisted GPS describes a collection of tools that help to solve that problem.

One reason for the wait is that when it first finds the satellites, the phone needs to download information about where they will be for the next four hours. The phone needs that information to keep tracking the satellites. As soon as the information reaches the phone, full GPS service starts.

Carriers can now send that data over a cellular or Wi-Fi network, which is a lot faster than a satellite link. This may cut GPS startup time from 45 seconds to 15 seconds or less, though it's still unpredictable, said Guylain Roy-MacHabee, CEO of location technology company RX Networks.

#3 Synthetic GPS:

The form of assisted GPS described above still requires an available data network and the time to transmit the satellite information. Synthetic GPS uses computing power to forecast satellites' locations days or weeks in advance.

This function began in data centers but increasingly can be carried out on phones themselves, according to Roy-MacHabee of RX, which specializes in this type of technology. With such a cache of satellite data on board, a phone often can identify its location in two seconds or less, he said.

#4 Cell ID:

However, all the technologies that speed up GPS still require the phone to find three satellites. Carriers already know how to locate phones without GPS, and they knew it before phones got the feature. Carriers figure out which cell a customer is using, and how far they are from the neighboring cells, with a technology called Cell ID.

By knowing which sector of which base station a given phone is using, and using a database of base-station identification numbers and locations, the carriers can associate the phone's location with that of the cell tower. This system tends to be more precise in urban areas with many small cells than in rural areas, where cells may cover an area several kilometers in diameter.
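At its simplest, the lookup described above is a table keyed by base station and sector. The Python sketch below is only an illustration: the station IDs, coordinates and coverage radii are invented, and a carrier's real database and interfaces are not described in the article.

```python
from typing import NamedTuple, Optional


class CellSite(NamedTuple):
    lat: float
    lon: float
    radius_km: float  # rough coverage radius, i.e. how uncertain the fix is


# Hypothetical database keyed by (base-station ID, sector number).
CELL_DB = {
    ("BS-1001", 1): CellSite(30.2849, -97.7341, 0.3),  # small urban cell
    ("BS-2040", 3): CellSite(30.9000, -98.5000, 4.0),  # large rural cell
}


def locate_by_cell_id(base_station_id: str, sector: int) -> Optional[CellSite]:
    """Return the tower location for the serving cell, or None if unknown."""
    return CELL_DB.get((base_station_id, sector))


if __name__ == "__main__":
    site = locate_by_cell_id("BS-1001", 1)
    if site is not None:
        print(f"Near {site.lat}, {site.lon} (within about {site.radius_km} km)")
```

The radius field mirrors the point made above: the smaller the cell, the tighter the location estimate.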

#5 Wi-Fi:

Wi-Fi can do much the same thing as Cell ID, but with greater precision because Wi-Fi access points cover a smaller area. There are actually two ways Wi-Fi can be used to determine location.

The most common, called RSSI (received signal strength indication), takes the signals your phone detects from nearby access points and refers to a database of Wi-Fi networks. The database says where each uniquely identified access point is located. Using signal strength to determine distance, RSSI determines where you are (down to tens of meters) in relation to those known access points.
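One way to picture the RSSI approach is the Python sketch below. The article does not say which formula location providers use; this example assumes a common textbook model (log-distance path loss) to turn signal strength into a rough distance, then averages the known access point positions, giving more weight to the closer ones. The reference signal level, the path-loss exponent and the access point names are all assumptions chosen for illustration.

```python
def rssi_to_distance_m(rssi_dbm, rssi_at_1m_dbm=-40.0, path_loss_exponent=3.0):
    """Estimate distance in meters from signal strength.

    Assumes the log-distance path-loss model; both default parameters are
    illustrative guesses and vary widely between buildings and devices.
    """
    return 10 ** ((rssi_at_1m_dbm - rssi_dbm) / (10 * path_loss_exponent))


def weighted_centroid(observations, ap_db):
    """Estimate (x, y) from {ap_id: rssi_dbm} and a database {ap_id: (x, y)}.

    Closer access points (stronger signals) pull the estimate harder.
    Returns None if none of the observed access points is in the database.
    """
    total_weight = 0.0
    sum_x = sum_y = 0.0
    for ap_id, rssi in observations.items():
        if ap_id not in ap_db:
            continue  # unknown access point: no position to contribute
        distance = rssi_to_distance_m(rssi)
        weight = 1.0 / max(distance, 0.1)  # avoid dividing by ~0 when very close
        x, y = ap_db[ap_id]
        sum_x += weight * x
        sum_y += weight * y
        total_weight += weight
    if total_weight == 0.0:
        return None
    return sum_x / total_weight, sum_y / total_weight


if __name__ == "__main__":
    ap_db = {"ap-lobby": (0.0, 0.0), "ap-cafe": (10.0, 0.0)}  # hypothetical
    print(weighted_centroid({"ap-lobby": -55.0, "ap-cafe": -70.0}, ap_db))
```

Because walls, people and furniture all change signal strength, the distances are only approximate, which fits the "tens of meters" precision quoted above.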

The other form of Wi-Fi location, wireless fingerprinting, uses profiles of given places that are based on the pattern of Wi-Fi signals found there. This technique is best for places that you or other cellphone users visit frequently. The fingerprint may be created and stored the first time you go there, or a service provider may send someone out to stand in certain spots in a building and record a fingerprint for each one.

Fingerprinting can identify your location to within just a few meters, said Charlie Abraham, vice president of engineering at Broadcom's GPS division, which makes chipsets that can use a variety of location mechanisms.
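Fingerprint matching can be sketched as a nearest-match search: compare the signal pattern the phone sees now against the stored pattern for each known spot and pick the closest one. The Python below is a minimal illustration under that assumption; the place names, access point names, signal values and the choice of a simple Euclidean comparison are all hypothetical, and commercial systems are likely more elaborate.

```python
import math


def fingerprint_distance(observed, stored, missing_dbm=-100.0):
    """Euclidean distance between two {ap_id: rssi_dbm} signal patterns.

    An access point seen in only one pattern is treated as a very weak
    signal (missing_dbm) in the other, which is an assumption of this sketch.
    """
    ap_ids = set(observed) | set(stored)
    return math.sqrt(sum(
        (observed.get(ap, missing_dbm) - stored.get(ap, missing_dbm)) ** 2
        for ap in ap_ids
    ))


def match_fingerprint(observed, fingerprints):
    """Return the name of the stored place whose pattern is closest."""
    return min(
        fingerprints,
        key=lambda place: fingerprint_distance(observed, fingerprints[place]),
    )


if __name__ == "__main__":
    # Hypothetical fingerprints recorded earlier at two spots in a building.
    fingerprints = {
        "lobby": {"ap1": -45.0, "ap2": -70.0},
        "cafe": {"ap1": -75.0, "ap2": -50.0},
    }
    print(match_fingerprint({"ap1": -48.0, "ap2": -68.0}, fingerprints))
```

The denser the set of recorded spots, the finer the location the match can report.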

#6 Inertial Sensors:

If you go into a place where no wireless system works, inertial sensors can keep track of your location based on other inputs. Most smartphones now come with three inertial sensors: a compass (or magnetometer) to determine direction, an accelerometer to report how fast your phone is moving in that direction, and a gyroscope to sense turning motions.

Together, these sensors can determine your location with no outside inputs, but only for a limited time. They'll work for minutes, but not tens of minutes, Broadcom's Abraham said. The classic use case is driving into a tunnel: If the phone knows your location from the usual sources before you enter, it can then determine where you've gone from the speed and direction you're moving.

More commonly, these tools are used in conjunction with other location systems, sometimes compensating for them in areas where they are weak, Abraham said.
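The tunnel example is a case of what navigators call dead reckoning: start from the last known position and keep adding up movement from heading and speed. A minimal Python sketch follows. It assumes heading and speed are already available as clean numbers, whereas a real phone has to derive them from noisy sensor readings; the function and variable names are made up for the example.

```python
import math


def dead_reckon(start_xy, steps):
    """Advance a position using (heading_deg, speed_m_per_s, seconds) steps.

    Heading is measured clockwise from north (0 = north, 90 = east).
    Positions are in meters on a flat local grid: x is east, y is north.
    """
    x, y = start_xy
    for heading_deg, speed, seconds in steps:
        heading = math.radians(heading_deg)
        x += speed * seconds * math.sin(heading)  # eastward component
        y += speed * seconds * math.cos(heading)  # northward component
    return x, y


if __name__ == "__main__":
    # Last fix before the tunnel is the origin; drive north, then east.
    print(dead_reckon((0.0, 0.0), [(0.0, 10.0, 5.0), (90.0, 10.0, 5.0)]))
```

Every step adds a little measurement error to the running total, and nothing corrects it until an outside fix arrives. That is consistent with the point above that inertial sensors work for minutes but not tens of minutes.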

#7 Barometer:

Outdoor navigation on a sidewalk or street typically happens on one level, either going straight or making right or left turns. But indoors, it makes a difference what floor of the building you're on. GPS could read this, except that it's usually hard to get good GPS coverage indoors or even in urban areas, where the satellite signals bounce off tall buildings.

One way to determine elevation is a barometer, which uses the principle that air gets thinner the farther up you go. Some smartphones already have chips that can detect barometric pressure, but this technique isn't usually suited for use by itself, RX's Roy-MacHabee said.

To use it, the phone needs to pull down local weather data for a baseline figure on barometric pressure, and conditions inside a building such as heating or air-conditioning flows can affect the sensor's accuracy, he said.

A barometer works best with mobile devices that have been carefully calibrated for a specific building, so it might work in your own office but not in a public library, Roy-MacHabee said. Barometers are best used in combination with other tools, including GPS, Wi-Fi and short-range systems that register that you've gone past a particular spot.
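The relationship between pressure and height can be sketched with the standard-atmosphere approximation often used with pressure sensors. The Python below is illustrative only: the article does not say which formula phones use, and the 3-meter floor height is an assumption. It also shows why a baseline matters, since the estimate is only as good as the ground-level pressure it is compared against.

```python
def pressure_to_altitude_m(pressure_hpa, baseline_hpa=1013.25):
    """Height in meters above the level where pressure equals baseline_hpa.

    Uses the common standard-atmosphere approximation; real air is affected
    by weather and by heating or air-conditioning inside buildings.
    """
    return 44330.0 * (1.0 - (pressure_hpa / baseline_hpa) ** (1.0 / 5.255))


def estimate_floor(pressure_hpa, ground_pressure_hpa, floor_height_m=3.0):
    """Estimate floors above ground level (0 = ground floor).

    floor_height_m is an assumed typical value, not a property of any
    particular building.
    """
    height = pressure_to_altitude_m(pressure_hpa, ground_pressure_hpa)
    return round(height / floor_height_m)


if __name__ == "__main__":
    # Hypothetical readings: ground-level baseline and the phone's sensor.
    print(estimate_floor(pressure_hpa=1012.17, ground_pressure_hpa=1013.25))
```

One floor corresponds to a pressure change of only a fraction of a hectopascal, so a stale baseline or a draft from a vent can shift the answer by a floor or more.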

#8 Ultrasonic:

Sometimes just detecting whether someone has entered a certain area says something about what they're doing. This can be done with short-range wireless systems, such as RFID (radio-frequency identification) with a badge. NFC (near-field communication) is starting to appear in phones and could be used for checkpoints, but manufacturers' main intention for NFC is payments.

However, shopper loyalty company Shopkick is already using a short-range system to verify that consumers have walked into a store. Instead of using a radio, Shopkick broadcasts ultrasonic tones just inside the doors of a shop.

If the customer has the Shopkick app running when they walk through the door, the phone will pick up the tone through its microphone and the app will tell Shopkick that they've entered.

The shopper can earn points, redeemable for gift cards and other rewards, just for walking into the store, and those show up immediately. Shopkick developed the ultrasonic system partly because the tones can't penetrate walls or windows, which would let people collect points just for walking by, CTO Aaron Emigh said. They travel about 150 feet (46 meters) inside the store.

Every location of every store has a unique set of tones, which are at too high a frequency for humans to hear. Dogs can hear them, but tests showed they don't mind, Emigh said.

#9 Bluetooth Beacons:

Very precise location can be achieved in a specific area, such as inside a retail store, using beacons that send out signals via Bluetooth. The beacons, smaller than a cellphone, are placed every few meters and can communicate with any mobile device equipped with Bluetooth 4.0, the newest version of the standard.

Using a technique similar to Wi-Fi fingerprinting, the venue owner can use signals from this dense network of transmitters to identify locations within the space, Broadcom's Abraham said. Nokia, which is participating in a live in-store trial of Bluetooth beacons, says the system can determine location to within 10 centimeters. With location sensing that specific, a store could tell when you were close to a specific product on a shelf and offer a promotion, according to Nokia.

#10 Terrestrial Transmitters:

Australian startup Locata is trying to overcome GPS' limitations by bringing it down to Earth. The company makes location transmitters that use the same principle as GPS but are mounted on buildings and cell towers.

Because they are stationary and provide a much stronger signal to receivers than satellites do from space, Locata's radios can pinpoint a user's location almost instantly to as close as 2 inches, according to Locata CEO Nunzio Gambale.

Locata networks are also more reliable than GPS, he said. The company's receivers currently cost about $2,500 and are drawing interest from transportation, defense and public safety customers, but within a few years the technology could be an inexpensive add-on to phones, according to Gambale.

Then, service providers will be its biggest customers, he said. Another company in this field, NextNav, is building a network using licensed spectrum that it says can cover 93 percent of the U.S. population. NextNav's transmitters will be deployed in a ring around each city and take advantage of the long range of its 900MHz spectrum, said Chris Gates, vice president of strategy and development.

[End of Article]

___________________________________________________________________________

The Global Positioning System (GPS) is a space-based navigation system that provides location and time information in all weather conditions, anywhere on or near the Earth where there is an unobstructed line of sight to four or more GPS satellites.

The system provides critical capabilities to military, civil, and commercial users around the world. The United States government created the system, maintains it, and makes it freely accessible to anyone with a GPS receiver.

The U.S. began the GPS project in 1973 to overcome the limitations of previous navigation systems, integrating ideas from several predecessors, including a number of classified engineering design studies from the 1960s.

The U.S. Department of Defense (DoD) developed the system, which originally used 24 satellites. It became fully operational in 1995. Roger L. Easton, Ivan A. Getting and Bradford Parkinson are credited with inventing it.

Advances in technology and new demands on the existing system have now led to efforts to modernize the GPS and implement the next generation of GPS Block IIIA satellites and Next Generation Operational Control System (OCX). Announcements from Vice President Al Gore and the White House in 1998 initiated these changes. In 2000, the U.S. Congress authorized the modernization effort, GPS III.

In addition to GPS, other systems are in use or under development. The Russian Global Navigation Satellite System (GLONASS) was developed contemporaneously with GPS, but suffered from incomplete coverage of the globe until the mid-2000s.

There are also the planned European Union Galileo positioning system, China's BeiDou Navigation Satellite System, the Japanese Quasi-Zenith Satellite System, and India's Indian Regional Navigation Satellite System.


The problem is that GPS signals are incredibly weak, due to the distance they have to travel from space, making them subject to interference and vulnerable to jamming and what is known as spoofing, in which another signal is passed off as the original. And the satellites themselves could easily be taken out by hurtling space junk or the sun coughing up a fireball.

As intentional and unintentional GPS disruptions are on the rise, experts warn that our overreliance on the technology is courting disaster, but they are divided on what to do about it.

“If we don’t get good backups on line, then GPS is just a soft rib of ours, and we could be punched here very quickly,” said Todd Humphreys, an associate professor of aerospace engineering at the University of Texas in Austin. If GPS was knocked out, he said, you’d notice. Think widespread power outages, financial markets seizing up and the transportation system grinding to a halt. Grocers would be unable to stock their shelves, and Amazon would go dark. Emergency responders wouldn’t be able to find you, and forget about using your cellphone.

Mr. Humphreys got the attention of the U.S. Department of Defense and the Federal Aviation Administration about this issue back in 2008 when he published a paper showing he could spoof GPS receivers. At the time, he said he thought the threat came mainly from hackers with something to prove: “I didn’t even imagine that the level of interference that we’ve been seeing recently would be attributable to state actors.”

More than 10,000 incidents of GPS interference have been linked to China and Russia in the past five years. Ship captains have reported GPS errors showing them 20-120 miles inland when they were actually sailing off the coast of Russia in the Black Sea.

Also well documented are ships suddenly disappearing from navigation screens while maneuvering in the Port of Shanghai. After GPS disruptions at Tel Aviv’s Ben Gurion Airport in 2019, Israeli officials pointed to Syria, where Russia has been involved in the nation’s long-running civil war. And last summer, the United States Space Command accused Russia of testing antisatellite weaponry.

But it’s not just nation-states messing with GPS. Spoofing and jamming devices have gotten so inexpensive and easy to use that delivery drivers use them so their dispatchers won’t know they’re taking long lunch breaks or having trysts at Motel 6. Teenagers use them to foil their parents’ tracking apps and to cheat at Pokémon Go. More nefariously, drug cartels and human traffickers have spoofed border control drones. Dodgy freight forwarders may use GPS jammers or spoofers to cloak or change the time stamps on arriving cargo.

These disruptions not only affect their targets; they can also affect anyone using GPS in the vicinity.

“You might not think you’re a target, but you don’t have to be,” said Guy Buesnel, a position, navigation and timing specialist with the British network and cybersecurity firm Spirent. “We’re seeing widespread collateral or incidental effects.” In 2013 a New Jersey truck driver interfered with Newark Liberty International Airport’s satellite-based tracking system when he plugged a GPS jamming device into his vehicle’s cigarette lighter to hide his location from his employer.

The risk posed by our overdependency on GPS has been raised repeatedly at least since 2000, when its signals were fully opened to civilian use. Launched in 1978, GPS was initially reserved for military purposes, but after the signals became freely available, the commercial sector quickly realized their utility, leading to widespread adoption and innovation.

Nowadays, most people carry a GPS receiver everywhere they go — embedded in a mobile phone, tablet, watch or fitness tracker.

An emergency backup for GPS was mandated by the 2018 National Timing and Resilience Security Act. The legislation said a reliable alternate system needed to be operational within two years, but that hasn’t happened yet.

Part of the reason for the holdup, aside from a pandemic, is disagreement between government agencies and industry groups on what is the best technology to use, who should be responsible for it, which GPS capabilities must be backed up and with what degree of precision.

Of course, business interests that rely on GPS want a backup that’s just as good as the original, just as accessible and also free. Meanwhile, many government officials tend to think it shouldn’t be all their responsibility, particularly when the budget to manage and maintain GPS hit $1.7 billion in 2020.

“We’re becoming more nuanced in our approach,” said James Platt, the chief of strategic defense initiatives for the Cybersecurity and Infrastructure Security Agency, a division of the Department of Homeland Security. “We recognize some things are going to need to be backed up, but we’re also realizing that maybe some systems don’t need GPS to operate” and are designed around GPS only because it’s “easy and cheap.”

The 2018 National Defense Authorization Act included funding for the Departments of Defense, Homeland Security and Transportation to jointly conduct demonstrations of various alternatives to GPS, which were concluded last March.

Eleven potential systems were tested, including eLoran, a low-frequency, high-power timing and navigation system transmitted from terrestrial towers at Coast Guard facilities throughout the United States.

“China, Russia, Iran, South Korea and Saudi Arabia all have eLoran systems because they don’t want to be as vulnerable as we are to disruptions of signals from space,” said Dana Goward, the president of the Resilient Navigation and Timing Foundation, a nonprofit that advocates for the implementation of an eLoran backup for GPS.

Also under consideration by federal authorities are timing systems delivered via fiber optic network and satellite systems in a lower orbit than GPS, which therefore have a stronger signal, making them harder to hack. A report on the technologies was submitted to Congress last week.

Prior to the report’s submission, Karen Van Dyke, the director of the Office of Positioning, Navigation and Timing and Spectrum Management at the Department of Transportation, predicted that the recommendation would probably not be a one-size-fits-all approach but would embrace “multiple and diverse technologies” to spread out the risk.

Indicators are that the government is likely to develop standards for GPS backup systems and require their use in critical sectors, but not feel obliged to wholly fund or build such systems for public use.

Last February, Donald Trump signed an executive order titled Strengthening National Resilience Through Responsible Use of Positioning, Navigation and Timing Services that essentially put GPS users on notice that vital systems needed to be designed to cope with the increasing likelihood of outages or corrupted data and that they must have their own contingency plans should they occur.

“They think the critical infrastructure folks should figure out commercial services to support themselves in terms of timing and navigation,” said Mr. Goward. “I don’t know what they think first responders, ordinary citizens and small businesses are supposed to do.”

The fear is that debate and deliberation will continue, when time is running out.

[End of OpEd Piece]

___________________________________________________________________________

Ten Ways your Smartphone knows where you are by PC world April 6, 2012:

Also refer to above right Illustration:

"One of the most important capabilities that smartphones now have is knowing where they are. More than desktops, laptops, personal navigation devices or even tablets, which are harder to take with you, a smartphone can combine its location with many other pieces of data to make new services available.

"There's a gamification aspect, there's a social aspect, and there's a utilitarian aspect," said analyst Avi Greengart of Current Analysis. Greengart believes cellphone location is in its second stage, moving beyond basic mapping and directions to social and other applications.

The third stage may bring uses we haven't even foreseen.

Like other digital technologies, these new capabilities come with worries as well as benefits. Consumers are particularly concerned about privacy when it comes to location because knowing where you are has implications for physical safety from stalking or arrest, said Seth Schoen, senior staff technologist at the Electronic Frontier Foundation. Yet most people have embraced location-based services without thinking about dangers such as service providers handing over location data in lawsuits or hackers stealing it from app vendors.

"This transition has been so quick that people haven't exactly thought through the implications on a large scale," Schoen said. "Most people aren't even very clear on which location technologies are active and which are passive." Many app-provider practices are buried in long terms of service. Risk increases with the number of apps that you authorize to collect location data, according to Schoen, so consumers have at least one element of control.

There are at least 10 different systems in use or being developed that a phone could use to identify its location. In most cases, several are used in combination, with one stepping in where another becomes less effective:

#1 GPS: Global Positioning System:

GPS was developed by the U.S. Department of Defense and was first included in cellphones in the late 1990s. It's still the best-known way to find your location outdoors. GPS uses a constellation of satellites that send location and timing data from space directly to your phone.

If the phone can pick up signals from three satellites, it can show where you are on a flat map, and with four, it can also show your elevation. Other governments have developed their own systems similar to GPS, but rather than conflicting with it, they can actually make outdoor location easier. Russia's GLONASS is already live and China's Compass is in trials.

Europe's Galileo and Japan's Quasi-Zenith Satellite System are also on the way. Phone chip makers are developing processors that can use multiple satellite constellations to get a location fix faster.

#2 Assisted GPS:

This works well once your phone finds three or four satellites, but that may take a long time, or not happen at all if you're indoors or in an "urban canyon" of buildings that reflect satellite signals. Assisted GPS describes a collection of tools that help to solve that problem.

One reason for the wait is that when it first finds the satellites, the phone needs to download information about where they will be for the next four hours. The phone needs that information to keep tracking the satellites. As soon as the information reaches the phone, full GPS service starts.

Carriers can now send that data over a cellular or Wi-Fi network, which is a lot faster than a satellite link. This may cut GPS startup time from 45 seconds to 15 seconds or less, though it's still unpredictable, said Guylain Roy-MacHabee, CEO of location technology company RX Networks.

#3 Synthetic GPS:

The form of assisted GPS described above still requires an available data network and the time to transmit the satellite information. Synthetic GPS uses computing power to forecast satellites' locations days or weeks in advance.

This function began in data centers but increasingly can be carried out on phones themselves, according to Roy-MacHabee of RX, which specializes in this type of technology. With such a cache of satellite data on board, a phone often can identify its location in two seconds or less, he said.

#4 Cell ID:

However, all the technologies that speed up GPS still require the phone to find three satellites. Carriers already know how to locate phones without GPS, and they knew it before phones got the feature. Carriers figure out which cell a customer is using, and how far they are from the neighboring cells, with a technology called Cell ID.

By knowing which sector of which base station a given phone is using, and using a database of base-station identification numbers and locations, the carriers can associate the phone's location with that of the cell tower. This system tends to be more precise in urban areas with many small cells than in rural areas, where cells may cover an area several kilometers in diameter.
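The database lookup described above can be pictured as a simple table keyed by base station and sector. This is only a sketch: the station IDs, coordinates, and cell radii below are invented, and a real carrier database would be far larger and structured differently.

```python
# Hypothetical database: (base-station ID, sector) -> (lat, lon, cell radius in km)
CELL_DATABASE = {
    ("BS-1001", 1): (40.7580, -73.9855, 0.3),  # small urban cell
    ("BS-2002", 3): (44.2601, -72.5754, 8.0),  # large rural cell
}


def locate_by_cell_id(station_id, sector):
    """Return the serving cell's position and an uncertainty radius, or None."""
    entry = CELL_DATABASE.get((station_id, sector))
    if entry is None:
        return None  # cell not in the database
    lat, lon, radius_km = entry
    # The phone is only known to be somewhere inside the cell, so the
    # cell radius doubles as the uncertainty of the estimate.
    return {"lat": lat, "lon": lon, "uncertainty_km": radius_km}


urban = locate_by_cell_id("BS-1001", 1)
rural = locate_by_cell_id("BS-2002", 3)
```

The uncertainty field reflects the point made above: the same lookup is far more precise for a small urban cell than for a rural one.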

#5 Wi-Fi:

Wi-Fi can do much the same thing as Cell ID, but with greater precision because Wi-Fi access points cover a smaller area. There are actually two ways Wi-Fi can be used to determine location.

The most common, called RSSI (received signal strength indication), takes the signals your phone detects from nearby access points and refers to a database of Wi-Fi networks. The database says where each uniquely identified access point is located. Using signal strength to determine distance, RSSI determines where you are (down to tens of meters) in relation to those known access points.
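As a rough sketch of the RSSI approach, the code below converts signal strength to distance and then averages the known access-point positions, weighting closer ones more heavily. The article does not say which formulas real services use; the log-distance path-loss model, the weighted-centroid step, the default calibration values, and the access-point identifiers are all assumptions chosen for illustration.

```python
def rssi_to_distance_m(rssi_dbm, rssi_at_1m_dbm=-40.0, path_loss_exponent=3.0):
    """Estimate distance from signal strength (log-distance path-loss model).

    The two default parameters are assumed calibration values, not measured ones.
    """
    return 10 ** ((rssi_at_1m_dbm - rssi_dbm) / (10 * path_loss_exponent))


def estimate_position(readings, ap_database):
    """Weighted centroid of known access points.

    readings:    {access_point_id: rssi_dbm} as seen by the phone
    ap_database: {access_point_id: (x_m, y_m)} known access-point positions
    """
    total_w = x_sum = y_sum = 0.0
    for ap_id, rssi in readings.items():
        if ap_id not in ap_database:
            continue  # unknown access point, cannot help
        weight = 1.0 / rssi_to_distance_m(rssi)  # nearer access points count more
        x, y = ap_database[ap_id]
        x_sum += weight * x
        y_sum += weight * y
        total_w += weight
    if total_w == 0.0:
        return None
    return x_sum / total_w, y_sum / total_w


# Hypothetical access points 10 meters apart, heard equally strongly.
aps = {"ap-a": (0.0, 0.0), "ap-b": (10.0, 0.0)}
midpoint = estimate_position({"ap-a": -60.0, "ap-b": -60.0}, aps)
```

Indoor signal strength varies with walls and crowds, which is one reason this kind of estimate is only good to tens of meters.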

The other form of Wi-Fi location, wireless fingerprinting, uses profiles of given places that are based on the pattern of Wi-Fi signals found there. This technique is best for places that you or other cellphone users visit frequently. The fingerprint may be created and stored the first time you go there, or a service provider may send someone out to stand in certain spots in a building and record a fingerprint for each one.

Fingerprinting can identify your location to within just a few meters, said Charlie Abraham, vice president of engineering at Broadcom's GPS division, which makes chipsets that can use a variety of location mechanisms.
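Fingerprint matching can be sketched as a nearest-neighbor search: compare the signal pattern the phone sees now against each stored pattern and pick the closest. The place names, readings, the Euclidean distance measure, and the -100 dBm stand-in for an access point that is not heard are all assumptions for illustration, not a description of any vendor's method.

```python
import math

MISSING_DBM = -100.0  # assumed value for an access point that is not heard


def fingerprint_distance(a, b):
    """Euclidean distance between two {access_point_id: rssi_dbm} patterns."""
    keys = set(a) | set(b)
    return math.sqrt(
        sum((a.get(k, MISSING_DBM) - b.get(k, MISSING_DBM)) ** 2 for k in keys)
    )


def match_fingerprint(observed, stored):
    """Return the name of the stored place whose pattern is closest."""
    return min(stored, key=lambda place: fingerprint_distance(observed, stored[place]))


# Hypothetical fingerprints recorded earlier at two spots in a building.
stored = {
    "lobby": {"ap1": -45.0, "ap2": -70.0},
    "cafeteria": {"ap2": -50.0, "ap3": -60.0},
}
best = match_fingerprint({"ap1": -48.0, "ap2": -72.0}, stored)
print(best)  # lobby
```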

#6 Inertial Sensors:

If you go into a place where no wireless system works, inertial sensors can keep track of your location based on other inputs. Most smartphones now come with three inertial sensors: a compass (or magnetometer) to determine direction; an accelerometer to measure acceleration, from which the phone can estimate how fast it is moving in that direction; and a gyroscope to sense turning motions.

Together, these sensors can determine your location with no outside inputs, but only for a limited time. They'll work for minutes, but not tens of minutes, Broadcom's Abraham said. The classic use case is driving into a tunnel: If the phone knows your location from the usual sources before you enter, it can then determine where you've gone from the speed and direction you're moving.

More commonly, these tools are used in conjunction with other location systems, sometimes compensating for them in areas where they are weak, Abraham said.
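The tunnel example amounts to dead reckoning: start from the last known position and add up each short stretch of travel. The sketch below assumes speed and heading have already been derived from the sensors, and the function name and sample figures are hypothetical. Small sensor errors accumulate with every step, which is why the result is only trustworthy for a few minutes.

```python
import math


def dead_reckon(start_xy, steps):
    """Advance a position using (speed_m_per_s, heading_deg, duration_s) steps.

    Heading is measured clockwise from north: 0 = north, 90 = east.
    Returns (east_m, north_m) relative to the same origin as start_xy.
    """
    x, y = start_xy
    for speed, heading_deg, duration in steps:
        heading = math.radians(heading_deg)
        x += speed * duration * math.sin(heading)  # eastward movement
        y += speed * duration * math.cos(heading)  # northward movement
    return x, y


# Hypothetical drive: 5 seconds due east at 10 m/s, then 2 seconds due north.
position = dead_reckon((0.0, 0.0), [(10.0, 90.0, 5.0), (10.0, 0.0, 2.0)])
print(position)  # approximately (50.0, 20.0)
```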

#7 Barometer:

Outdoor navigation on a sidewalk or street typically happens on one level, either going straight or making right or left turns. But indoors, it makes a difference what floor of the building you're on. GPS could read this, except that it's usually hard to get good GPS coverage indoors or even in urban areas, where the satellite signals bounce off tall buildings.

One way to determine elevation is a barometer, which uses the principle that air gets thinner the farther up you go. Some smartphones already have chips that can detect barometric pressure, but this technique isn't usually suited for use by itself, RX's Roy-MacHabee said.

To use it, the phone needs to pull down local weather data for a baseline figure on barometric pressure, and conditions inside a building such as heating or air-conditioning flows can affect the sensor's accuracy, he said.

A barometer works best with mobile devices that have been carefully calibrated for a specific building, so it might work in your own office but not in a public library, Roy-MacHabee said. Barometers are best used in combination with other tools, including GPS, Wi-Fi and short-range systems that register that you've gone past a particular spot.
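To show why a baseline pressure figure matters, here is a minimal sketch that converts a pressure reading to height using the standard international barometric formula, then guesses a floor number. The 3-meter floor height and the sample readings are assumptions, and the sketch ignores the heating and air-conditioning effects mentioned above.

```python
def pressure_to_altitude_m(pressure_hpa, reference_hpa=1013.25):
    """Height above the level where pressure equals reference_hpa.

    Uses the international barometric formula; the default reference is
    standard sea-level pressure.
    """
    return 44330.0 * (1.0 - (pressure_hpa / reference_hpa) ** (1.0 / 5.255))


def estimate_floor(pressure_hpa, ground_pressure_hpa, floor_height_m=3.0):
    """Guess how many floors above ground level the phone is.

    floor_height_m is an assumed typical value; real buildings vary.
    """
    rise_m = pressure_to_altitude_m(pressure_hpa, ground_pressure_hpa)
    return round(rise_m / floor_height_m)


# Hypothetical readings: pressure at street level, and about 1 hPa lower upstairs.
ground_hpa = 1013.25
rise = pressure_to_altitude_m(1012.17, ground_hpa)  # roughly 9 meters
floor = estimate_floor(1012.17, ground_hpa)
```

A change of about 1 hPa corresponds to only a few floors, so a baseline that is off by even a small amount, for instance because the weather changed, shifts the answer by whole floors.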

#8 Ultrasonic:

Sometimes just detecting whether someone has entered a certain area says something about what they're doing. This can be done with short-range wireless systems, such as RFID (radio-frequency identification) with a badge. NFC (near-field communication) is starting to appear in phones and could be used for checkpoints, but manufacturers' main intention for NFC is payments.

However, shopper loyalty company Shopkick is already using a short-range system to verify that consumers have walked into a store. Instead of using a radio, Shopkick broadcasts ultrasonic tones just inside the doors of a shop.

If the customer has the Shopkick app running when they walk through the door, the phone will pick up the tone through its microphone and the app will tell Shopkick that they've entered.

The shopper can earn points, redeemable for gift cards and other rewards, just for walking into the store, and those show up immediately. Shopkick developed the ultrasonic system partly because the tones can't penetrate walls or windows, which would let people collect points just for walking by, CTO Aaron Emigh said. They travel about 150 feet (46 meters) inside the store.

Every location of every store has a unique set of tones, which are at too high a frequency for humans to hear. Dogs can hear them, but tests showed they don't mind, Emigh said.
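The article does not describe how the Shopkick app recognizes its tones. As a generic illustration only, the sketch below uses the Goertzel algorithm, a common way to measure how much of one specific frequency is present in a block of audio samples. The 20 kHz tone, 48 kHz sample rate, and detection threshold are assumptions.

```python
import math


def goertzel_power(samples, sample_rate_hz, target_hz):
    """Signal power at the frequency bin nearest target_hz (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate_hz)  # nearest frequency bin
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for sample in samples:
        s = sample + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev**2 + s_prev2**2 - coeff * s_prev * s_prev2


def tone_present(samples, sample_rate_hz, target_hz, threshold=0.5):
    """True if most of the signal's energy sits at the target frequency."""
    energy = sum(x * x for x in samples)
    if energy == 0.0:
        return False  # silence
    n = len(samples)
    # For a pure tone exactly on the bin, this ratio is about 1.0.
    ratio = goertzel_power(samples, sample_rate_hz, target_hz) / (energy * n / 2.0)
    return ratio > threshold


def make_tone(freq_hz, sample_rate_hz=48000, count=480):
    """Generate a test sine wave (stands in for microphone input)."""
    return [math.sin(2.0 * math.pi * freq_hz * i / sample_rate_hz) for i in range(count)]


heard_beacon = tone_present(make_tone(20000), 48000, 20000)
heard_other = tone_present(make_tone(5000), 48000, 20000)
```

A real app would also have to cope with background noise and tell one store's set of tones from another's, which this sketch does not attempt.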

#9 Bluetooth Beacons:

Very precise location can be achieved in a specific area, such as inside a retail store, using beacons that send out signals via Bluetooth. The beacons, smaller than a cellphone, are placed every few meters and can communicate with any mobile device equipped with Bluetooth 4.0, the newest version of the standard.

Using a technique similar to Wi-Fi fingerprinting, the venue owner can use signals from this dense network of transmitters to identify locations within the space, Broadcom's Abraham said. Nokia, which is participating in a live in-store trial of Bluetooth beacons, says the system can determine location to within 10 centimeters. With location sensing that specific, a store could tell when you were close to a specific product on a shelf and offer a promotion, according to Nokia.

#10 Terrestrial Transmitters:

Australian startup Locata is trying to overcome GPS' limitations by bringing it down to Earth. The company makes location transmitters that use the same principle as GPS but are mounted on buildings and cell towers.

Because they are stationary and provide a much stronger signal to receivers than satellites do from space, Locata's radios can pinpoint a user's location almost instantly to as close as 2 inches, according to Locata CEO Nunzio Gambale.

Locata networks are also more reliable than GPS, he said. The company's receivers currently cost about $2500 and are drawing interest from transportation, defense and public safety customers, but within a few years the technology could be an inexpensive add-on to phones, according to Gambale.

Then, service providers will be its biggest customers, he said. Another company in this field, NextNav, is building a network using licensed spectrum that it says can cover 93 percent of the U.S. population. NextNav's transmitters will be deployed in a ring around each city and take advantage of the long range of its 900MHz spectrum, said Chris Gates, vice president of strategy and development.

[End of Article]

___________________________________________________________________________

The Global Positioning System (GPS) is a space-based navigation system that provides location and time information in all weather conditions, anywhere on or near the Earth where there is an unobstructed line of sight to four or more GPS satellites.

The system provides critical capabilities to military, civil, and commercial users around the world. The United States government created the system, maintains it, and makes it freely accessible to anyone with a GPS receiver.

The U.S. began the GPS project in 1973 to overcome the limitations of previous navigation systems, integrating ideas from several predecessors, including a number of classified engineering design studies from the 1960s.

The U.S. Department of Defense (DoD) developed the system, which originally used 24 satellites. It became fully operational in 1995. Roger L. Easton, Ivan A. Getting and Bradford Parkinson are credited with inventing it.

Advances in technology and new demands on the existing system have now led to efforts to modernize the GPS and implement the next generation of GPS Block IIIA satellites and Next Generation Operational Control System (OCX). Announcements from Vice President Al Gore and the White House in 1998 initiated these changes. In 2000, the U.S. Congress authorized the modernization effort, GPS III.

In addition to GPS, other systems are in use or under development. The Russian Global Navigation Satellite System (GLONASS) was developed contemporaneously with GPS, but suffered from incomplete coverage of the globe until the mid-2000s.

There are also the planned European Union Galileo positioning system, China's BeiDou Navigation Satellite System, the Japanese Quasi-Zenith Satellite System, and India's Indian Regional Navigation Satellite System.

Click on any of the following blue hyperlinks for further amplification:

- History

- Basic concept of GPS

- Structure

- Applications

- Civilian including Restrictions on civilian use

- Military

- Communication

- Navigation equations

- Problem description

- Geometric interpretation

- Solution methods

- Error sources and analysis

- Accuracy enhancement and surveying

- Regulatory spectrum issues concerning GPS receivers

- Other systems

- See also

Intel Corporation (Intel)

YouTube Video: Inside the Intel Factory Making the Chips That 'Run the Internet' (reported by Bloomberg)

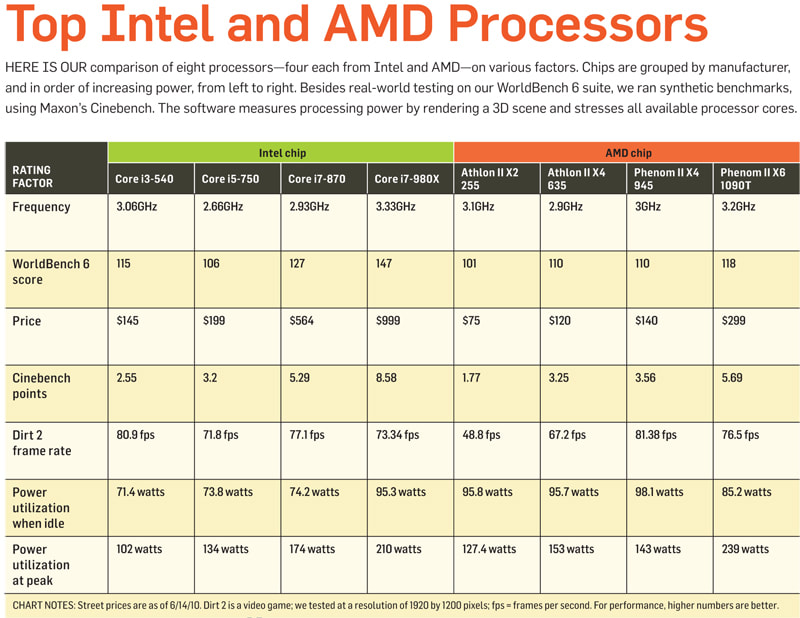

Pictured: Chip Showdown -- A Guide to the Best CPUs by PC World (June 21, 2010)

Intel Corporation (also known as Intel, stylized as intel) is an American multinational corporation and technology company headquartered in Santa Clara, California, in Silicon Valley. It is the world's second-largest and second-highest-valued semiconductor chip maker based on revenue, having been overtaken by Samsung, and is the inventor of the x86 series of microprocessors, the processors found in most personal computers (PCs).

Intel supplies processors for computer system manufacturers such as Apple, Lenovo, HP, and Dell. Intel also manufactures motherboard chipsets, network interface controllers and integrated circuits, flash memory, graphics chips, embedded processors and other devices related to communications and computing.

Intel Corporation was founded on July 18, 1968, by semiconductor pioneers Robert Noyce and Gordon Moore (of Moore's law fame), and is widely associated with the executive leadership and vision of Andrew Grove.

The company's name was conceived as a portmanteau of the words integrated and electronics, with co-founder Noyce having been a key inventor of the integrated circuit (microchip). The fact that "intel" is the term for intelligence information also made the name appropriate.

Intel was an early developer of SRAM and DRAM memory chips, which represented the majority of its business until 1981. Although Intel created the world's first commercial microprocessor chip in 1971, it was not until the success of the personal computer (PC) that this became its primary business.

During the 1990s, Intel invested heavily in new microprocessor designs, fostering the rapid growth of the computer industry. During this period Intel became the dominant supplier of microprocessors for PCs and was known for aggressive and anti-competitive tactics in defense of its market position, particularly against Advanced Micro Devices (AMD), as well as for a struggle with Microsoft for control over the direction of the PC industry.

The Open Source Technology Center at Intel hosts PowerTOP and LatencyTOP, and supports other open-source projects such as Wayland, Intel Array Building Blocks, Threading Building Blocks (TBB), and Xen.

Click on any of the following blue hyperlinks for more about Intel:
