Copyright © 2015 Bert N. Langford (Images may be subject to copyright. Please send feedback)

Welcome to Our Generation USA!

This Page Covers

All Uses of Nuclear Energy

Whether for Peaceful Uses (energy sources) or

Military Uses (WMD as Weapons of Mass Destruction)

Nuclear Technology

- YouTube Video: Nuclear Reactors vs. Nuclear Weapons

- YouTube Video: Nuclear Reactor - Understanding how it works | Physics elearning

- YouTube Video: The risk of nuclear war in Ukraine | Russia sends nukes to Belarus | This World

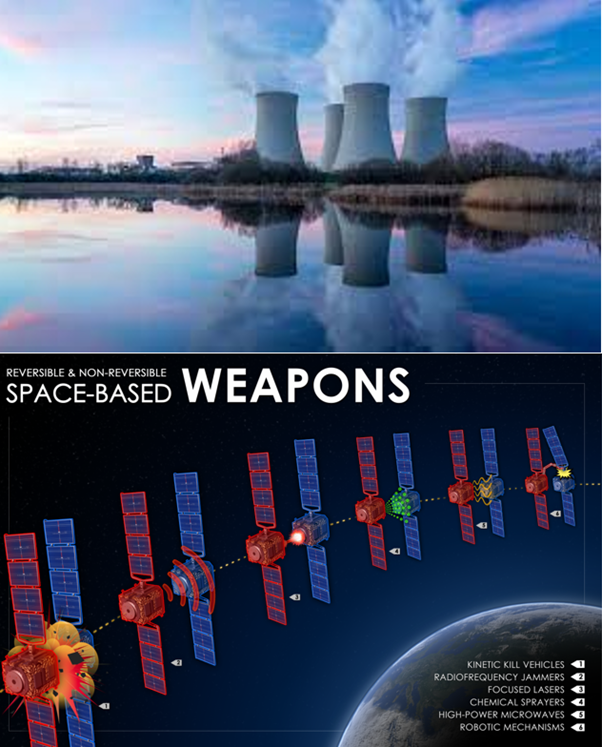

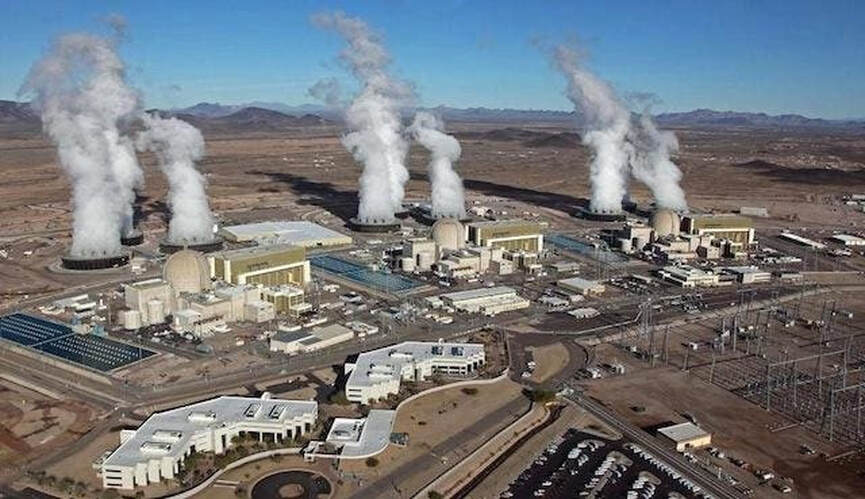

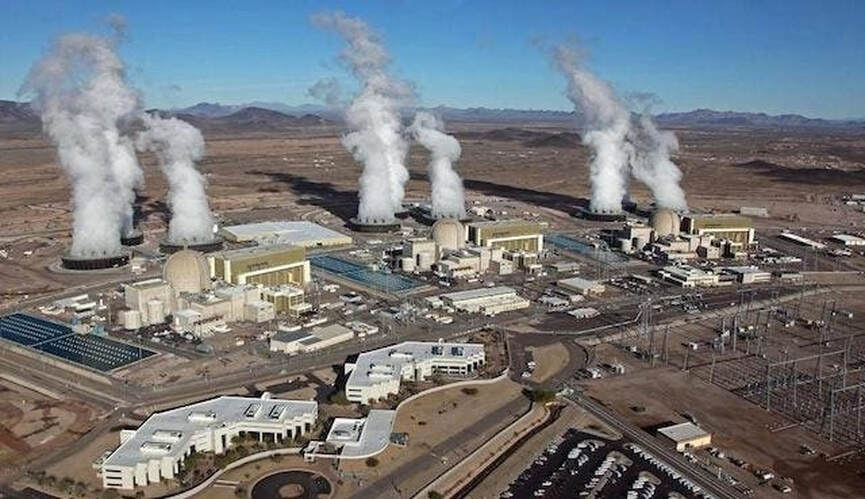

- Top: A power plant as a peaceful example of nuclear technology.

- Bottom: Future examples of space-based nuclear weapons.

Nuclear technology is technology that involves the nuclear reactions of atomic nuclei. Among the notable nuclear technologies are nuclear reactors, nuclear medicine and nuclear weapons. It is also used, among other things, in smoke detectors and gun sights.

History and scientific background:

Discovery:

Main article: Nuclear physics

The vast majority of common, natural phenomena on Earth involve only gravity and electromagnetism, and not nuclear reactions. This is because atomic nuclei carry positive electrical charges and therefore repel each other, which generally keeps them apart.

In 1896, Henri Becquerel was investigating phosphorescence in uranium salts when he discovered a new phenomenon which came to be called radioactivity. He, Pierre Curie and Marie Curie began investigating the phenomenon. In the process, they isolated the element radium, which is highly radioactive.

They discovered that radioactive materials produce intense, penetrating rays of three distinct sorts, which they labeled alpha, beta, and gamma after the first three Greek letters. Some of these kinds of radiation could pass through ordinary matter, and all of them could be harmful in large amounts. All of the early researchers received various radiation burns, much like sunburn, and thought little of it.

The new phenomenon of radioactivity was seized upon by the manufacturers of quack medicine (as had the discoveries of electricity and magnetism, earlier), and a number of patent medicines and treatments involving radioactivity were put forward.

Gradually it was realized that the radiation produced by radioactive decay was ionizing radiation, and that even quantities too small to burn could pose a severe long-term hazard.

Many of the scientists working on radioactivity died of cancer as a result of their exposure. Radioactive patent medicines mostly disappeared, but other applications of radioactive materials persisted, such as the use of radium salts to produce glowing dials on meters.

As the atom came to be better understood, the nature of radioactivity became clearer. Some larger atomic nuclei are unstable, and so decay (release matter or energy) after a random interval.

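The three forms of radiation that Becquerel and the Curies discovered are also more fully understood:

- Alpha decay is when a nucleus releases an alpha particle, which is two protons and two neutrons, equivalent to a helium nucleus.

- Beta decay is the release of a beta particle, a high-energy electron.

- Gamma decay releases gamma rays, which unlike alpha and beta radiation are not matter but electromagnetic radiation of very high frequency, and therefore energy. This type of radiation is the most dangerous and most difficult to block.

All three types of radiation occur naturally in certain elements.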

It has also become clear that the ultimate source of most terrestrial energy is nuclear, either through radiation from the Sun caused by stellar thermonuclear reactions or by radioactive decay of uranium within the Earth, the principal source of geothermal energy.

Nuclear fission:

Main article: Nuclear fission

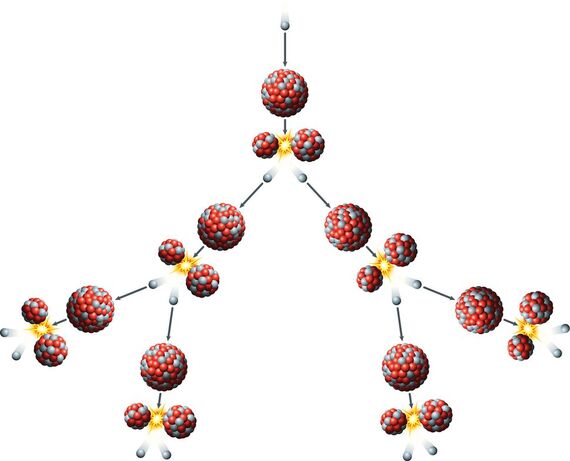

In natural nuclear radiation, the byproducts are very small compared to the nuclei from which they originate. Nuclear fission is the process of splitting a nucleus into roughly equal parts, releasing energy and neutrons in the process. If one of these neutrons is captured by another unstable nucleus, that nucleus can fission as well, leading to a chain reaction.

The average number of neutrons released per nucleus that go on to fission another nucleus is referred to as k. Values of k larger than 1 mean that the fission reaction is releasing more neutrons than it absorbs, and therefore is referred to as a self-sustaining chain reaction.
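As a rough illustration of what k means, the sketch below tracks the expected number of fissions from one generation to the next, assuming each generation simply multiplies the previous one by a constant k. The function name and the sample values of k are illustrative assumptions, not figures from any real reactor or weapon.

```python
def fissions_per_generation(k, generations, start=1.0):
    """Expected fissions in each generation, assuming a constant multiplication factor k."""
    counts = [start]
    for _ in range(generations):
        # Each fission leads, on average, to k further fissions.
        counts.append(counts[-1] * k)
    return counts


for k in (0.9, 1.0, 1.1):
    final = fissions_per_generation(k, generations=50)[-1]
    print(f"k = {k}: about {final:.3g} fissions in generation 50")
```

With k below 1 the reaction dies out, with k exactly 1 it holds steady, and with k above 1 it grows exponentially.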

A mass of fissile material large enough (and in a suitable configuration) to induce a self-sustaining chain reaction is called a critical mass.

When a neutron is captured by a suitable nucleus, fission may occur immediately, or the nucleus may persist in an unstable state for a short time. If there are enough immediate decays to carry on the chain reaction, the mass is said to be prompt critical, and the energy release will grow rapidly and uncontrollably, usually leading to an explosion.

When discovered on the eve of World War II, this insight led multiple countries to begin programs investigating the possibility of constructing an atomic bomb — a weapon which utilized fission reactions to generate far more energy than could be created with chemical explosives.

The Manhattan Project, run by the United States with the help of the United Kingdom and Canada, developed multiple fission weapons which were used against Japan in 1945 at Hiroshima and Nagasaki. During the project, the first fission reactors were developed as well, though they were primarily for weapons manufacture and did not generate electricity.

In 1951, the Experimental Breeder Reactor No. 1 (EBR-1), in Arco, Idaho, became the first nuclear fission power plant to produce electricity, ushering in the "Atomic Age" of more intensive human energy use.

In contrast to the prompt-critical case, if the mass is critical only when the delayed neutrons are included, then the reaction can be controlled, for example by the introduction or removal of neutron absorbers.

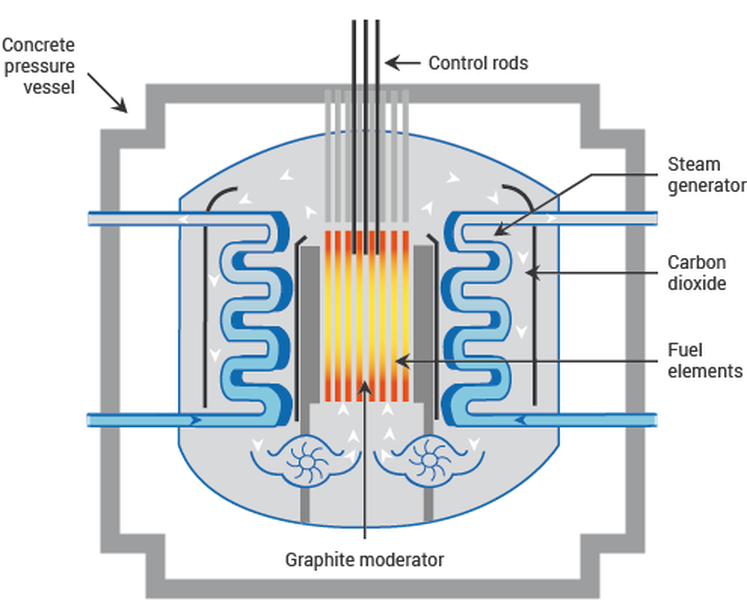

This is what allows nuclear reactors to be built. Fast neutrons are not easily captured by nuclei; they must be slowed (slow neutrons), generally by collision with the nuclei of a neutron moderator, before they can be easily captured. Today, this type of fission is commonly used to generate electricity.

Nuclear fusion:

Main article: Nuclear fusion

See also: Timeline of nuclear fusion

If nuclei are forced to collide, they can undergo nuclear fusion. This process may release or absorb energy. When the resulting nucleus is lighter than that of iron, energy is normally released; when the nucleus is heavier than that of iron, energy is generally absorbed. This process of fusion occurs in stars, which derive their energy from hydrogen and helium.

They form, through stellar nucleosynthesis, the light elements (lithium to calcium) as well as some of the heavy elements (beyond iron and nickel, via the S-process). The remaining abundance of heavy elements, from nickel to uranium and beyond, is due to supernova nucleosynthesis, the R-process.

Of course, these natural processes of astrophysics are not examples of nuclear "technology". Because of the very strong repulsion of nuclei, fusion is difficult to achieve in a controlled fashion. Hydrogen bombs obtain their enormous destructive power from fusion, but their energy cannot be controlled.

Controlled fusion is achieved in particle accelerators; this is how many synthetic elements are produced. A fusor can also produce controlled fusion and is a useful neutron source. However, both of these devices operate at a net energy loss. Controlled, viable fusion power has proven elusive, despite the occasional hoax.

Technical and theoretical difficulties have hindered the development of working civilian fusion technology, though research continues to this day around the world.

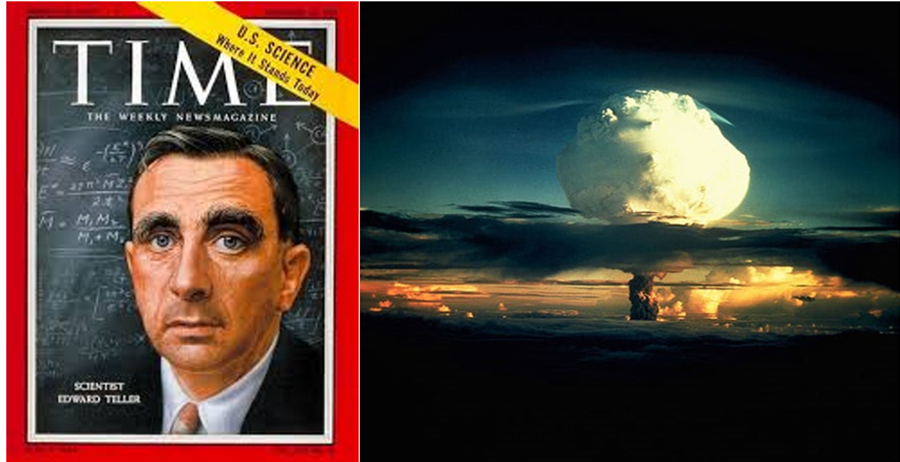

Nuclear fusion was initially pursued only in theoretical stages during World War II, when a group of Manhattan Project scientists led by Edward Teller investigated it as a method to build a bomb. The project abandoned fusion after concluding that it would require a fission reaction to detonate.

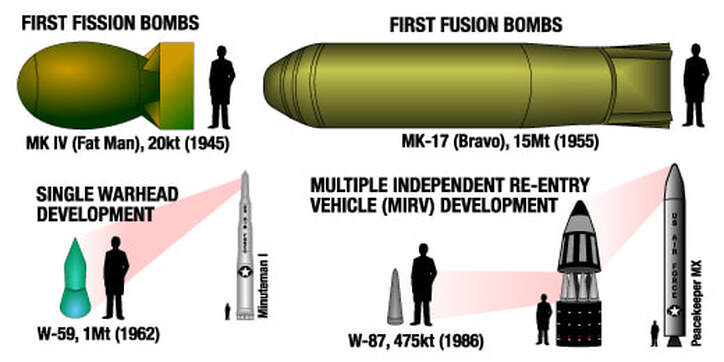

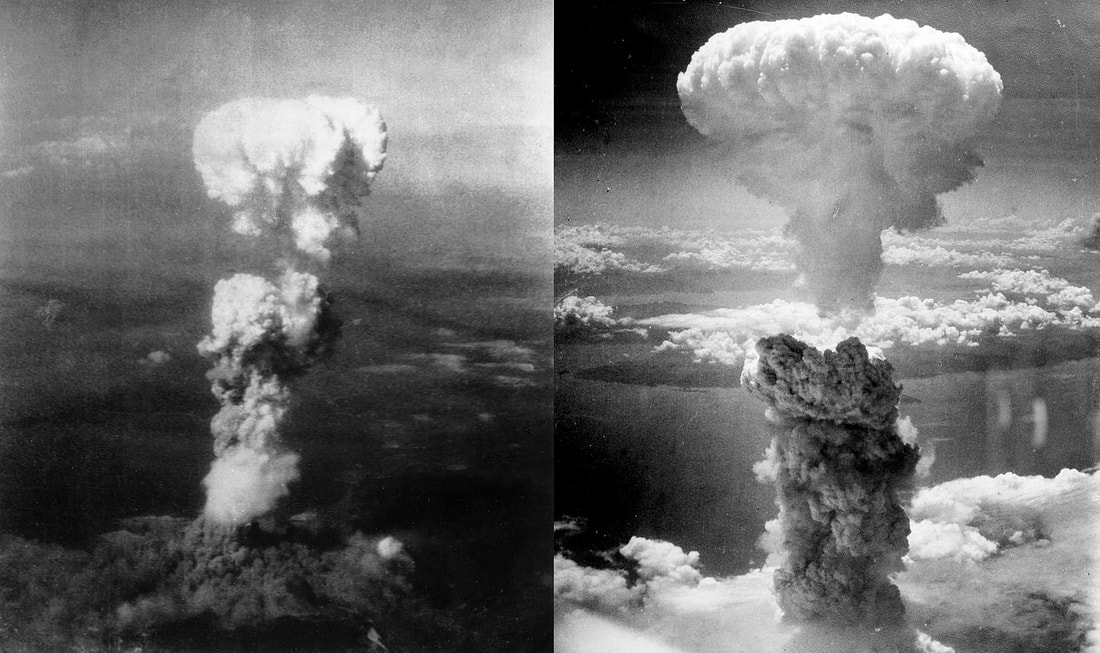

It took until 1952 for the first full hydrogen bomb to be detonated, so-called because it used reactions between deuterium and tritium. Fusion reactions are much more energetic per unit mass of fuel than fission reactions, but starting the fusion chain reaction is much more difficult.
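The per-unit-mass comparison can be checked with back-of-the-envelope arithmetic. The sketch below assumes commonly quoted round figures that are not stated in this article: about 17.6 MeV released per deuterium-tritium fusion (5 nucleons of fuel) and about 200 MeV per uranium-235 fission (236 nucleons, counting the absorbed neutron). Treat the result as a rough estimate only.

```python
# Assumed textbook values (not taken from the article above).
DT_FUSION_MEV = 17.6      # energy per D + T reaction
DT_NUCLEONS = 5           # 2 (deuterium) + 3 (tritium)
U235_FISSION_MEV = 200.0  # approximate energy per U-235 fission
U235_NUCLEONS = 236       # U-235 plus the absorbed neutron


def mev_per_nucleon(energy_mev, nucleons):
    """Energy released per nucleon of fuel, a proxy for energy per unit mass."""
    return energy_mev / nucleons


fusion = mev_per_nucleon(DT_FUSION_MEV, DT_NUCLEONS)
fission = mev_per_nucleon(U235_FISSION_MEV, U235_NUCLEONS)
print(f"fusion:  {fusion:.2f} MeV per nucleon")
print(f"fission: {fission:.2f} MeV per nucleon")
print(f"ratio:   {fusion / fission:.1f}")
```

Under these assumptions, deuterium-tritium fusion releases roughly four times as much energy per unit mass of fuel as uranium fission.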

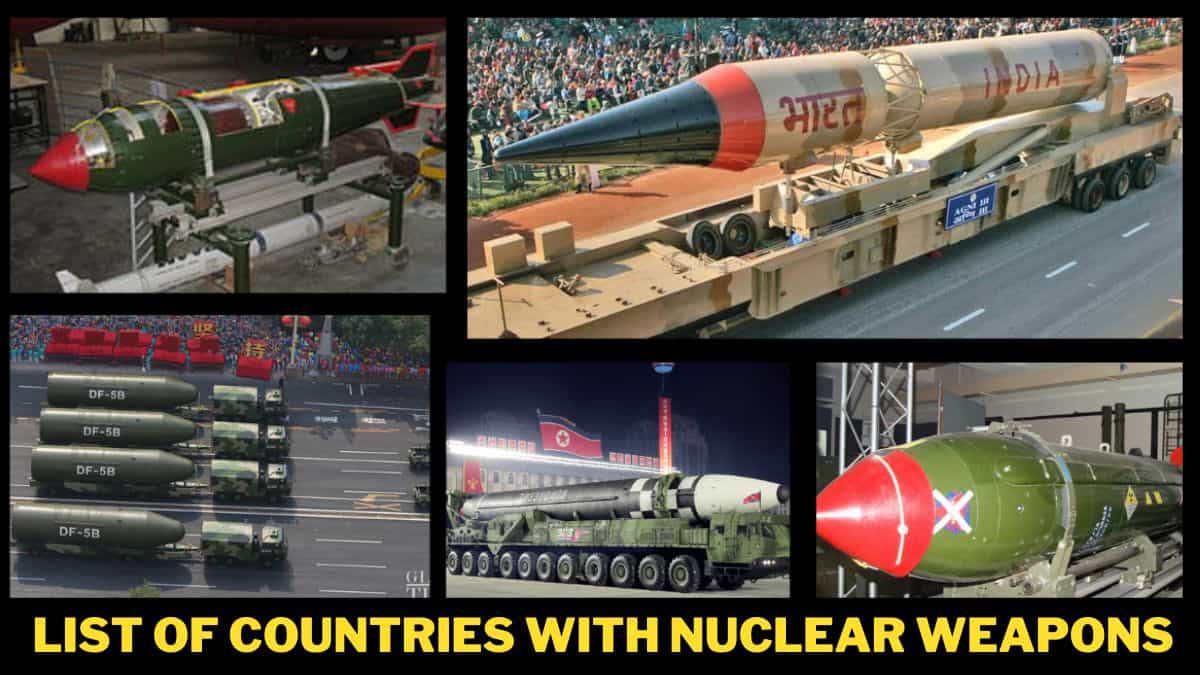

Nuclear weapons:

Main article: Nuclear weapon

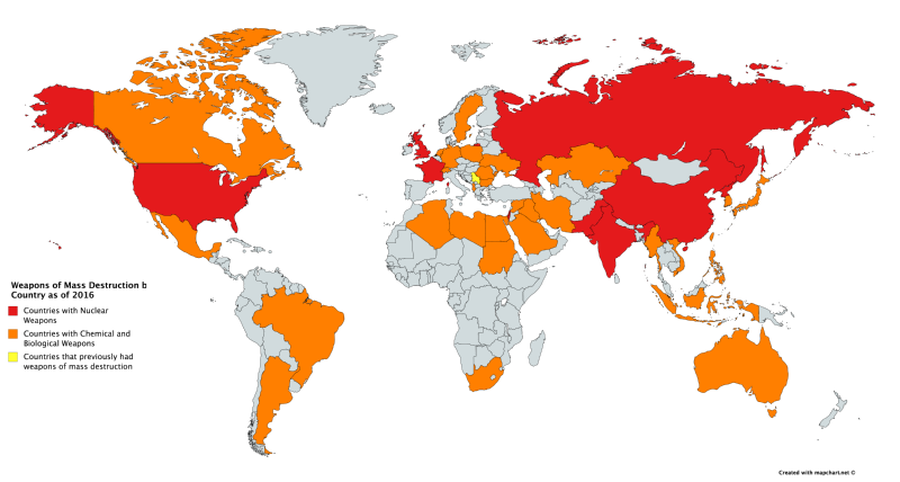

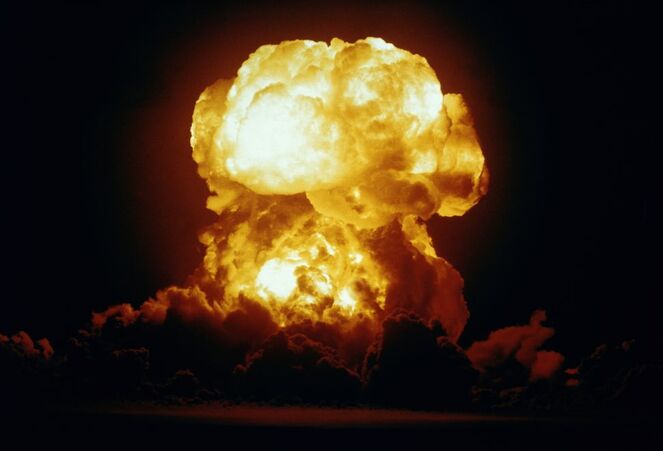

A nuclear weapon is an explosive device that derives its destructive force from nuclear reactions, either fission or a combination of fission and fusion. Both reactions release vast quantities of energy from relatively small amounts of matter. Even small nuclear devices can devastate a city by blast, fire and radiation.

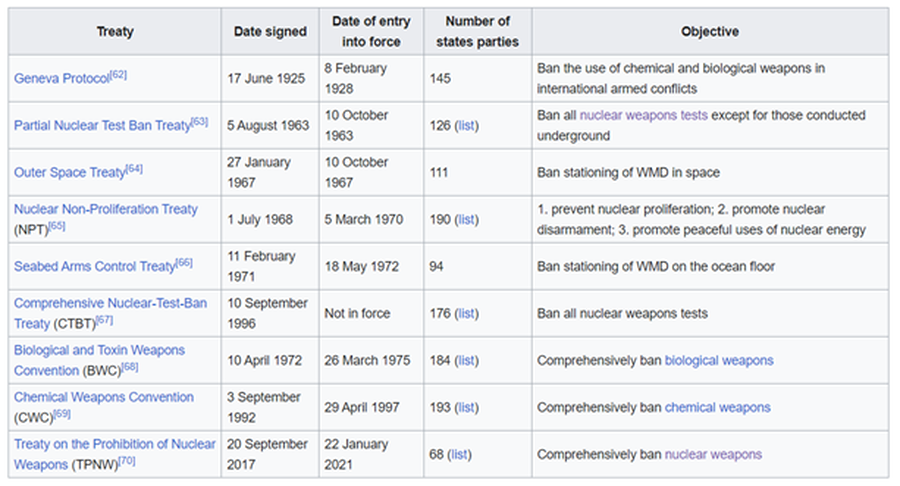

Nuclear weapons are considered weapons of mass destruction, and their use and control have been a major aspect of international policy since their debut.

The design of a nuclear weapon is more complicated than it might seem. Such a weapon must hold one or more subcritical fissile masses stable for deployment, then induce criticality (create a critical mass) for detonation. It also is quite difficult to ensure that such a chain reaction consumes a significant fraction of the fuel before the device flies apart.

The procurement of a nuclear fuel is also more difficult than it might seem, since sufficiently unstable substances for this process do not currently occur naturally on Earth in suitable amounts.

One isotope of uranium, namely uranium-235, is naturally occurring and sufficiently unstable, but it is always found mixed with the more stable isotope uranium-238. The latter accounts for more than 99% of the weight of natural uranium. Therefore, some method of isotope separation based on the weight of three neutrons must be performed to enrich (isolate) uranium-235.
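To see why separation "based on the weight of three neutrons" is demanding, the following sketch compares the two isotopes by mass number alone. It assumes mass numbers approximate the atomic masses closely enough for illustration; the function name is hypothetical.

```python
def relative_mass_difference(light, heavy):
    """Fractional mass difference between two isotopes, using mass numbers as a proxy."""
    return (heavy - light) / heavy


diff = relative_mass_difference(235, 238)
print(f"U-235 is lighter than U-238 by about {diff:.2%}")
```

A difference of roughly one percent is all that any mass-based separation method has to work with.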

Alternatively, the element plutonium possesses an isotope that is sufficiently unstable for this process to be usable. Terrestrial plutonium does not currently occur naturally in sufficient quantities for such use, so it must be manufactured in a nuclear reactor.

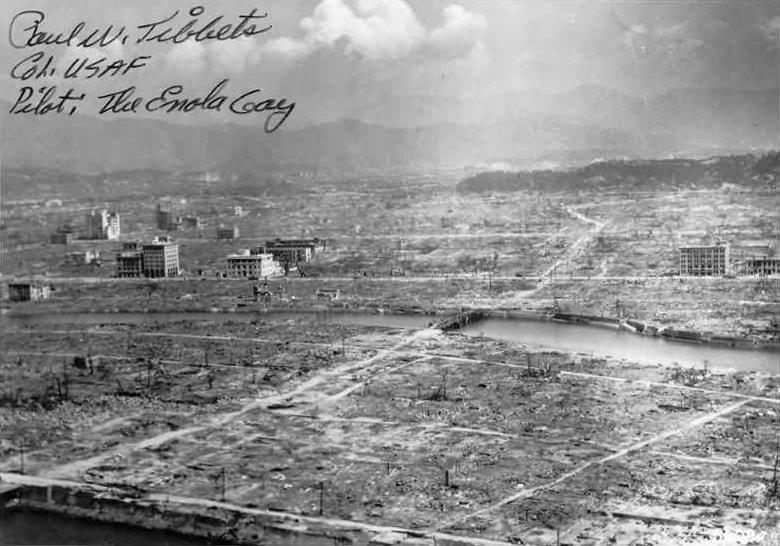

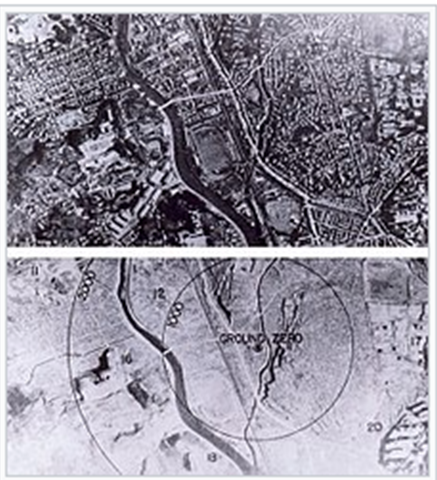

Ultimately, the Manhattan Project manufactured nuclear weapons based on each of these elements. They detonated the first nuclear weapon in a test code-named "Trinity", near Alamogordo, New Mexico, on July 16, 1945. The test was conducted to ensure that the implosion method of detonation would work, which it did.

A uranium bomb, Little Boy, was dropped on the Japanese city Hiroshima on August 6, 1945, followed three days later by the plutonium-based Fat Man on Nagasaki. In the wake of unprecedented devastation and casualties from a single weapon, the Japanese government soon surrendered, ending World War II.

Since these bombings, no nuclear weapons have been deployed offensively. Nevertheless, they prompted an arms race to develop increasingly destructive bombs to provide a nuclear deterrent.

Just over four years later, on August 29, 1949, the Soviet Union detonated its first fission weapon. The United Kingdom followed on October 2, 1952; France, on February 13, 1960; and China, on October 16, 1964.

Approximately half of the deaths from Hiroshima and Nagasaki occurred two to five years afterward, from radiation exposure.

A radiological weapon is a type of nuclear weapon designed to distribute hazardous nuclear material in enemy areas. Such a weapon would not have the explosive capability of a fission or fusion bomb, but would kill many people and contaminate a large area.

A radiological weapon has never been deployed. While considered useless by a conventional military, such a weapon raises concerns over nuclear terrorism.

There have been over 2,000 nuclear tests conducted since 1945. In 1963, all nuclear and many non-nuclear states signed the Limited Test Ban Treaty, pledging to refrain from testing nuclear weapons in the atmosphere, underwater, or in outer space. The treaty permitted underground nuclear testing.

France continued atmospheric testing until 1974, while China continued up until 1980. The last underground test by the United States was in 1992, the Soviet Union in 1990, the United Kingdom in 1991, and both France and China continued testing until 1996.

After signing the Comprehensive Test Ban Treaty in 1996 (which had as of 2011 not entered into force), all of these states have pledged to discontinue all nuclear testing. Non-signatories India and Pakistan last tested nuclear weapons in 1998.

Nuclear weapons are the most destructive weapons known - the archetypal weapons of mass destruction. Throughout the Cold War, the opposing powers had huge nuclear arsenals, sufficient to kill hundreds of millions of people. Generations of people grew up under the shadow of nuclear devastation, portrayed in films such as Dr. Strangelove and The Atomic Cafe.

However, the tremendous energy release in the detonation of a nuclear weapon also suggested the possibility of a new energy source.

Civilian uses:

Nuclear power:

Further information: Nuclear power and Nuclear reactor technology

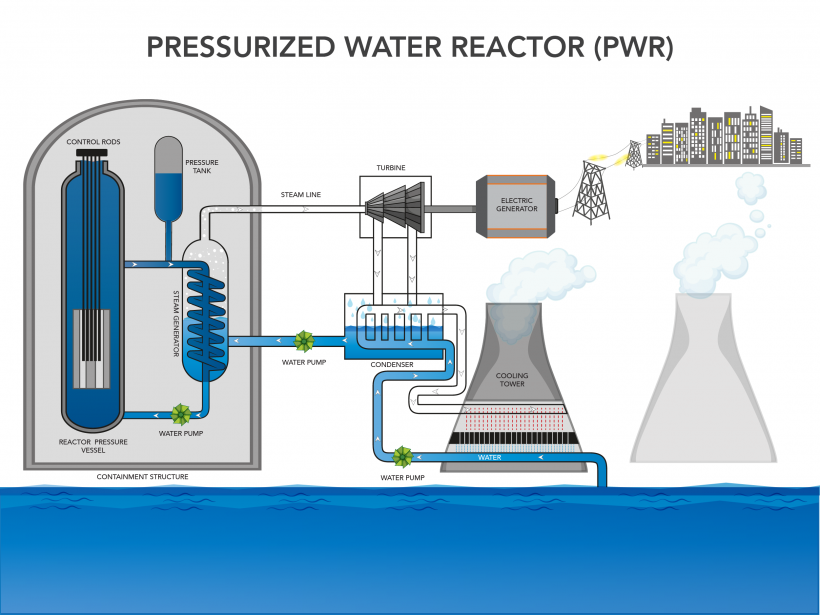

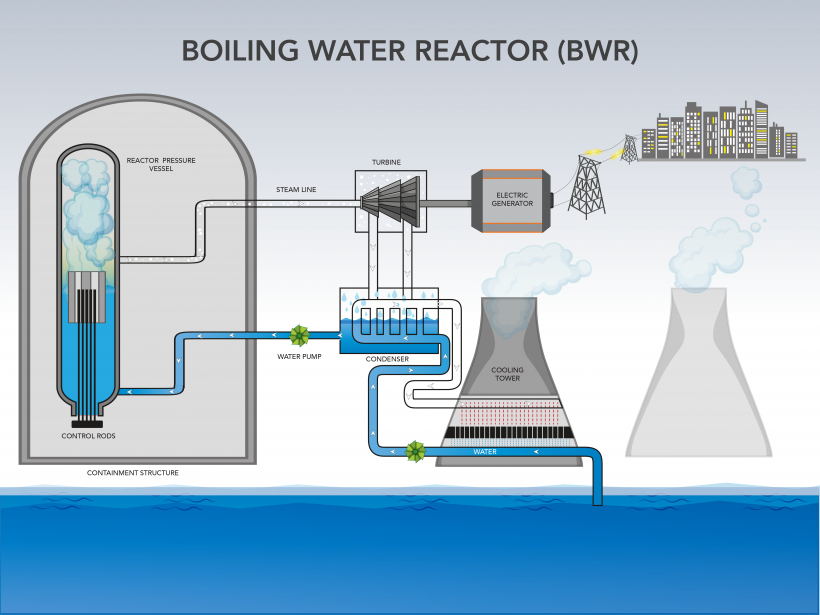

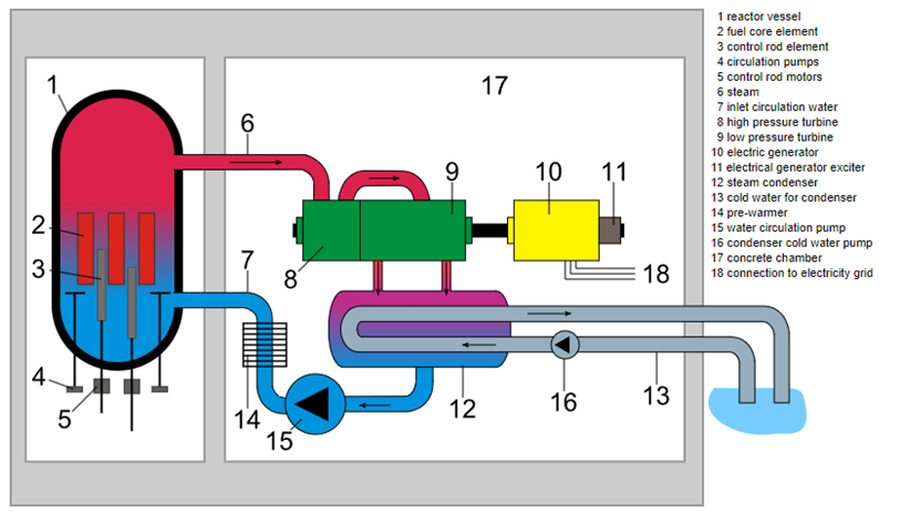

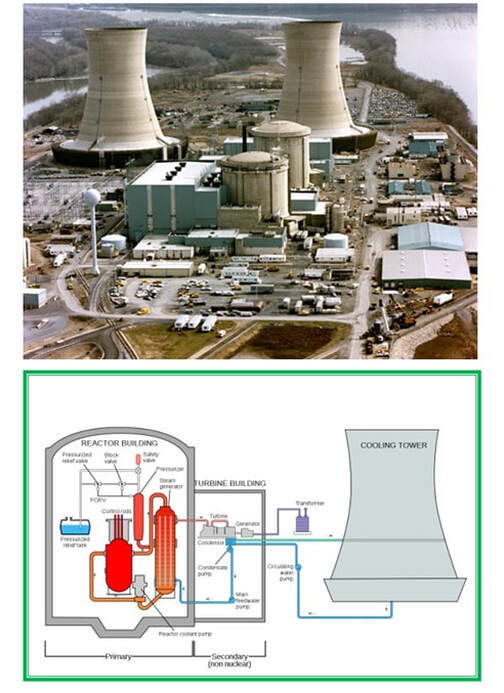

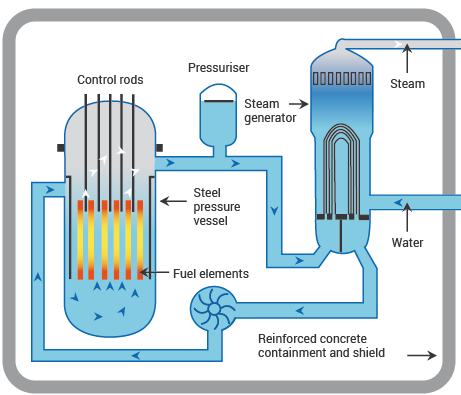

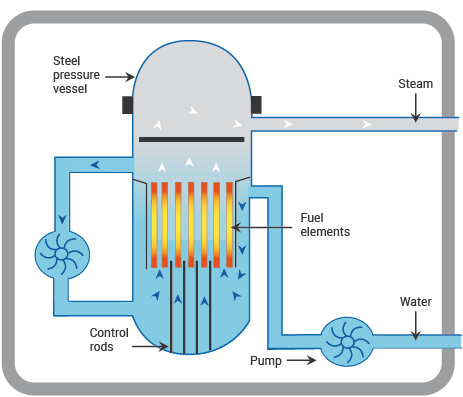

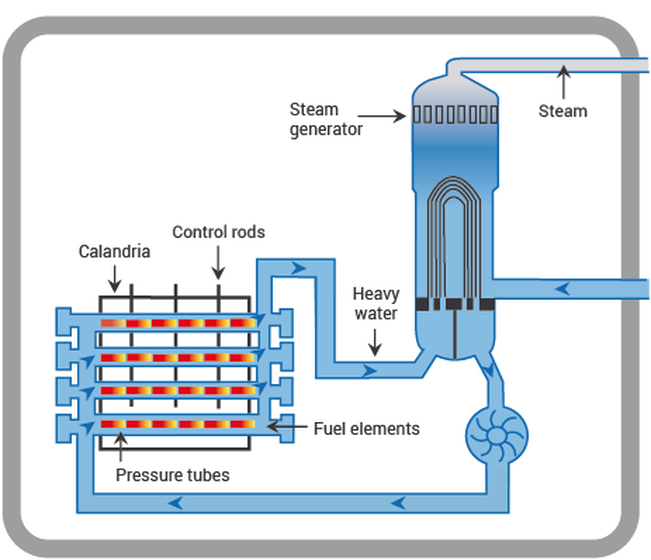

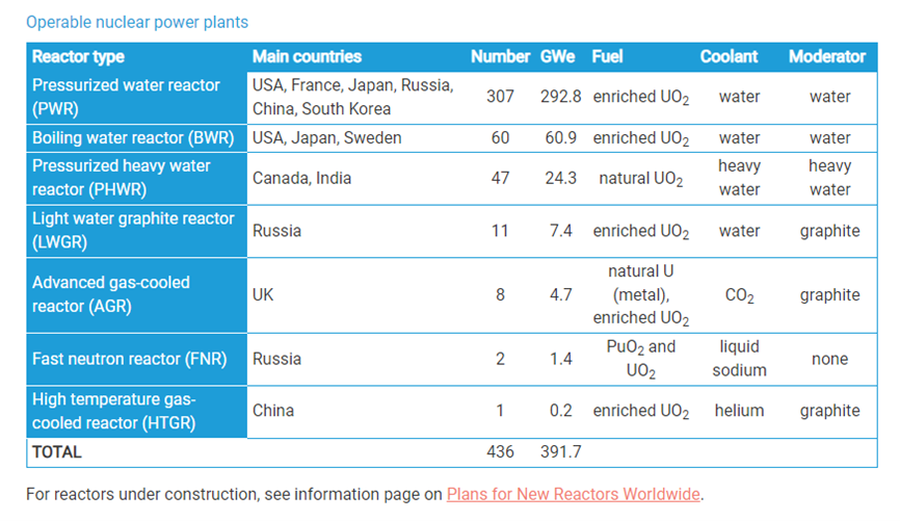

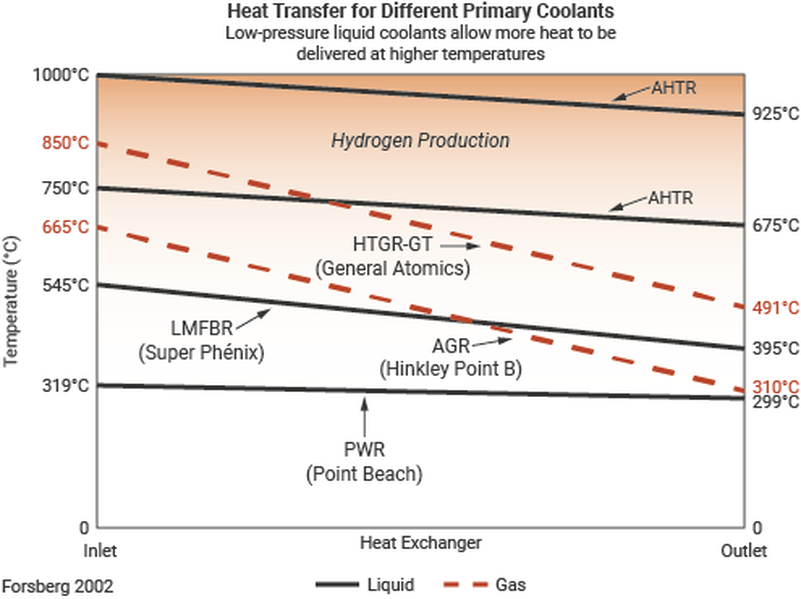

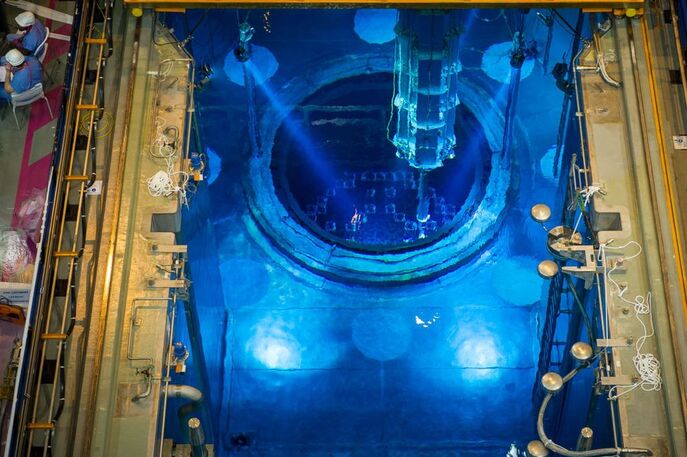

Nuclear power is a type of nuclear technology involving the controlled use of nuclear fission to release energy for work including propulsion, heat, and the generation of electricity.

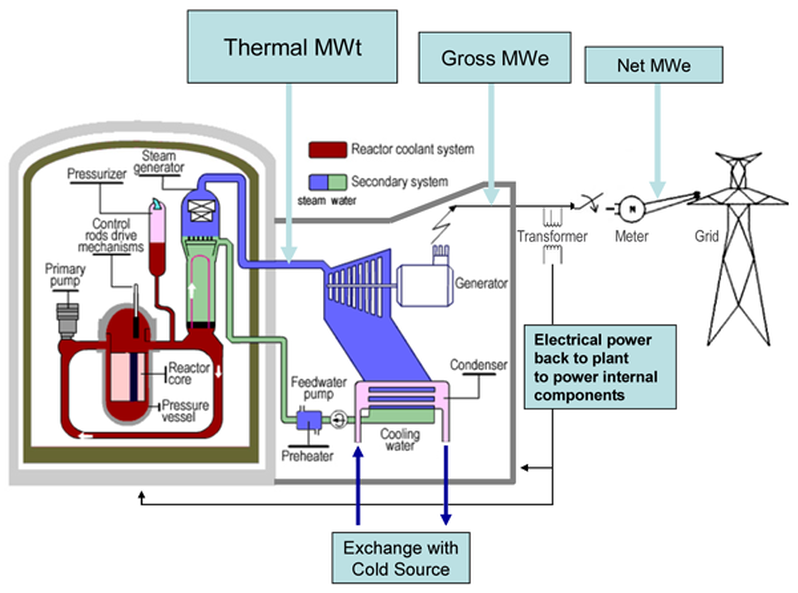

Nuclear energy is produced by a controlled nuclear chain reaction that creates heat, which is used to boil water, produce steam, and drive a steam turbine. The turbine is used to generate electricity and/or to do mechanical work.

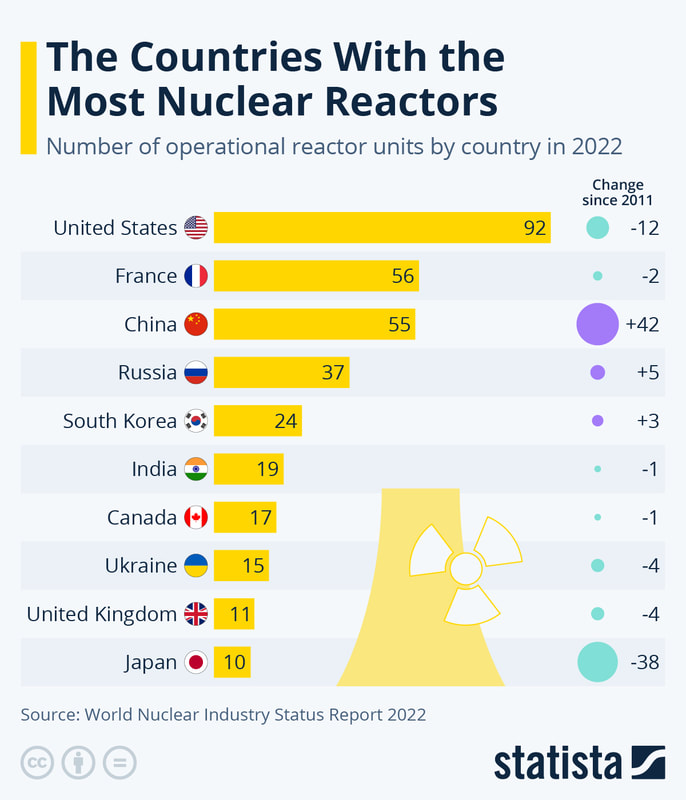

As of 2004, nuclear power provided approximately 15.7% of the world's electricity. It is also used to propel aircraft carriers, icebreakers and submarines; so far, economics and fears in some ports have prevented the use of nuclear power in transport ships.

All nuclear power plants use fission. No man-made fusion reaction has resulted in a viable source of electricity.

Medical applications:

Further information: Nuclear medicine

The medical applications of nuclear technology are divided into diagnostics and radiation treatment.

Imaging - The largest use of ionizing radiation in medicine is in medical radiography, which uses x-rays to make images of the inside of the human body. This is the largest artificial source of radiation exposure for humans. Medical and dental x-ray imagers use cobalt-60 or other x-ray sources.

A number of radiopharmaceuticals are used, sometimes attached to organic molecules, to act as radioactive tracers or contrast agents in the human body. Positron-emitting nuclides are used for high-resolution, short-time-span imaging in applications known as positron emission tomography.

Radiation is also used to treat diseases in radiation therapy.

Industrial applications:

Since some ionizing radiation can penetrate matter, it is used for a variety of measuring methods. X-rays and gamma rays are used in industrial radiography to make images of the inside of solid products, as a means of nondestructive testing and inspection. The piece to be radiographed is placed between the source and a photographic film in a cassette. After a certain exposure time, the film is developed and shows any internal defects of the material.

Gauges - Gauges use the exponential absorption law of gamma rays.
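The exponential absorption law can be written as I = I0 · exp(−μx), where I0 is the unattenuated intensity, μ is the linear attenuation coefficient of the material, and x is its thickness. The sketch below shows how a gauge could, in principle, invert that law to infer thickness from a detector reading. The coefficient and count rates are made-up illustrative values, not data for any real material or instrument.

```python
import math


def transmitted_intensity(i0, mu, thickness):
    """Exponential absorption law: I = I0 * exp(-mu * x)."""
    return i0 * math.exp(-mu * thickness)


def thickness_from_reading(i0, i, mu):
    """Invert the law to estimate thickness x from a measured intensity I."""
    return math.log(i0 / i) / mu


# Hypothetical numbers: mu in 1/cm, thickness in cm, intensity in counts/s.
I0, MU = 10_000.0, 0.5
reading = transmitted_intensity(I0, MU, thickness=2.0)
print(f"reading: {reading:.0f} counts/s")
print(f"inferred thickness: {thickness_from_reading(I0, reading, MU):.2f} cm")
```

Because the law is exponential, equal increments of thickness reduce the reading by equal factors rather than equal amounts.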

Electrostatic control - To avoid the build-up of static electricity in production of paper, plastics, synthetic textiles, etc., a ribbon-shaped source of the alpha emitter 241Am can be placed close to the material at the end of the production line. The source ionizes the air to remove electric charges on the material.

Radioactive tracers - Since radioactive isotopes behave, chemically, mostly like the inactive element, the behavior of a certain chemical substance can be followed by tracing the radioactivity.

Oil and Gas Exploration - Nuclear well logging is used to help predict the commercial viability of new or existing wells. The technology involves the use of a neutron or gamma-ray source and a radiation detector, which are lowered into boreholes to determine properties of the surrounding rock such as porosity and lithology.

Road Construction - Nuclear moisture/density gauges are used to determine the density of soils, asphalt, and concrete. Typically a cesium-137 source is used.

Commercial applications:

Food processing and agriculture:

In biology and agriculture, radiation is used to induce mutations to produce new or improved species, such as in atomic gardening. Another use in insect control is the sterile insect technique, where male insects are sterilized by radiation and released, so they have no offspring, to reduce the population.

In industrial and food applications, radiation is used for sterilization of tools and equipment.

An advantage is that the object may be sealed in plastic before sterilization. An emerging use in food production is the sterilization of food using food irradiation.

Food irradiation is the process of exposing food to ionizing radiation in order to destroy microorganisms, bacteria, viruses, or insects that might be present in the food. The radiation sources used include radioisotope gamma ray sources, X-ray generators and electron accelerators.

Further applications include sprout inhibition, delay of ripening, increase of juice yield, and improvement of re-hydration. Irradiation is a more general term for the deliberate exposure of materials to radiation to achieve a technical goal (in this context 'ionizing radiation' is implied). As such it is also used on non-food items, such as medical hardware, plastics, tubes for gas pipelines, hoses for floor heating, shrink foils for food packaging, automobile parts, wires and cables (insulation), tires, and even gemstones.

Compared to the amount of food irradiated, the volume of those every-day applications is huge but not noticed by the consumer.

The genuine effect of processing food by ionizing radiation relates to damage to DNA, the basic genetic information for life. Microorganisms can no longer proliferate and continue their malignant or pathogenic activities.

Spoilage-causing microorganisms cannot continue their activities. Insects do not survive or become incapable of procreation. Plants cannot continue the natural ripening or aging process. All these effects are beneficial to the consumer and the food industry alike.

The amount of energy imparted for effective food irradiation is low compared to cooking the same; even at a typical dose of 10 kGy most food, which is (with regard to warming) physically equivalent to water, would warm by only about 2.5 °C (4.5 °F).
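The temperature figure follows from the definition of the gray (1 Gy = 1 J/kg) and the specific heat of water. The sketch below assumes a specific heat of about 4.18 kJ/(kg·K), a standard value not given in the text, and assumes that all absorbed energy ends up as heat.

```python
WATER_SPECIFIC_HEAT = 4.18  # kJ/(kg*K), assumed standard value for liquid water


def temperature_rise_celsius(dose_kgy, specific_heat=WATER_SPECIFIC_HEAT):
    """Warming from an absorbed dose; 1 kGy deposits 1 kJ per kg."""
    return dose_kgy / specific_heat


rise = temperature_rise_celsius(10.0)
print(f"10 kGy warms water by about {rise:.1f} °C ({rise * 9 / 5:.1f} °F)")
```

The result, roughly 2.4 °C, is consistent with the figure of about 2.5 °C quoted above.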

What sets processing food by ionizing radiation apart is that the energy delivered per atomic transition is very high: it can cleave molecules and induce ionization (hence the name), which cannot be achieved by mere heating. This is the reason for new beneficial effects but, at the same time, for new concerns.

The treatment of solid food by ionizing radiation can provide an effect similar to heat pasteurization of liquids, such as milk. However, the use of the term "cold pasteurization" to describe irradiated foods is controversial, because pasteurization and irradiation are fundamentally different processes, although the intended end results can in some cases be similar.

Detractors of food irradiation have concerns about the health hazards of induced radioactivity.

A report for the industry advocacy group American Council on Science and Health entitled "Irradiated Foods" states: "The types of radiation sources approved for the treatment of foods have specific energy levels well below that which would cause any element in food to become radioactive. Food undergoing irradiation does not become any more radioactive than luggage passing through an airport X-ray scanner or teeth that have been X-rayed."

Food irradiation is currently permitted by over 40 countries and volumes are estimated to exceed 500,000 metric tons (490,000 long tons; 550,000 short tons) annually worldwide.

Food irradiation is essentially a non-nuclear technology; it relies on ionizing radiation, which may be generated by electron accelerators and converted into bremsstrahlung, but which may also come from gamma rays produced by nuclear decay.

There is a worldwide industry for processing by ionizing radiation, the majority by number and by processing power using accelerators. Food irradiation is only a niche application compared to medical supplies, plastic materials, raw materials, gemstones, cables and wires, etc.

Accidents:

Main articles: Nuclear and radiation accidents and Nuclear safety

Nuclear accidents, because of the powerful forces involved, are often very dangerous.

Historically, the first incidents involved fatal radiation exposure. Marie Curie died from aplastic anemia which resulted from her high levels of exposure.

Two scientists, the American Harry Daghlian and the Canadian Louis Slotin, died after mishandling the same plutonium mass. With nuclear weapons, unlike conventional ones, the intense light, heat, and explosive force are not the only deadly components.

Approximately half of the deaths from Hiroshima and Nagasaki occurred two to five years afterward, from radiation exposure.

Civilian nuclear and radiological accidents primarily involve nuclear power plants. Most common are nuclear leaks that expose workers to hazardous material. A nuclear meltdown refers to the more serious hazard of releasing nuclear material into the surrounding environment.

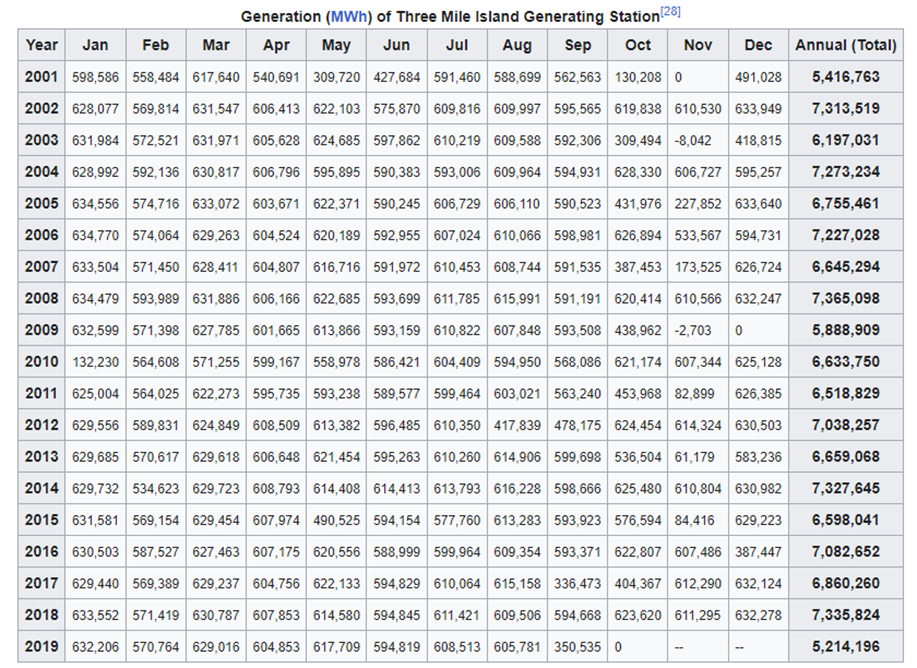

The most significant meltdowns occurred at Three Mile Island in Pennsylvania and Chernobyl in the Soviet Ukraine. In addition, the earthquake and tsunami on March 11, 2011 caused serious damage to three nuclear reactors and a spent fuel storage pond at the Fukushima Daiichi nuclear power plant in Japan.

Military reactors that experienced similar accidents were Windscale in the United Kingdom and SL-1 in the United States.

Military accidents usually involve the loss or unexpected detonation of nuclear weapons.

The Castle Bravo test in 1954 produced a larger yield than expected, which contaminated nearby islands and a Japanese fishing boat (with one fatality) and raised concerns about contaminated fish in Japan. From the 1950s through the 1970s, several nuclear bombs were lost from submarines and aircraft, some of which have never been recovered.

The last twenty years have seen a marked decline in such accidents.

Examples of environmental benefits:

Proponents of nuclear energy note that, annually, nuclear-generated electricity avoids 470 million metric tons of carbon dioxide emissions that would otherwise come from fossil fuels.

Additionally, they argue that the comparatively small amount of waste that nuclear energy does create is safely disposed of by large-scale nuclear energy production facilities or is repurposed or recycled for other energy uses. Proponents also draw attention to the opportunity cost of using other forms of electricity generation.

For example, the Environmental Protection Agency estimates that coal kills 30,000 people a year, as a result of its environmental impact, while 60 people died in the Chernobyl disaster.

A real-world example of impact cited by proponents of nuclear energy is the 650,000-ton increase in carbon emissions in the two months following the closure of the Vermont Yankee nuclear plant.

See also

History and scientific background:

Discovery:

Main article: Nuclear physics

The vast majority of common, natural phenomena on Earth only involve gravity and electromagnetism, and not nuclear reactions. This is because atomic nuclei are generally kept apart because they contain positive electrical charges and therefore repel each other.

In 1896, Henri Becquerel was investigating phosphorescence in uranium salts when he discovered a new phenomenon which came to be called radioactivity. He, Pierre Curie and Marie Curie began investigating the phenomenon. In the process, they isolated the element radium, which is highly radioactive.

They discovered that radioactive materials produce intense, penetrating rays of three distinct sorts, which they labeled alpha, beta, and gamma after the first three Greek letters. Some of these kinds of radiation could pass through ordinary matter, and all of them could be harmful in large amounts. All of the early researchers received various radiation burns, much like sunburn, and thought little of it.

The new phenomenon of radioactivity was seized upon by the manufacturers of quack medicine (as had the discoveries of electricity and magnetism, earlier), and a number of patent medicines and treatments involving radioactivity were put forward.

Gradually it was realized that the radiation produced by radioactive decay was ionizing radiation, and that even quantities too small to burn could pose a severe long-term hazard.

Many of the scientists working on radioactivity died of cancer as a result of their exposure. Radioactive patent medicines mostly disappeared, but other applications of radioactive materials persisted, such as the use of radium salts to produce glowing dials on meters.

As the atom came to be better understood, the nature of radioactivity became clearer. Some larger atomic nuclei are unstable, and so decay (release matter or energy) after a random interval.

The three forms of radiation that Becquerel and the Curies discovered are also more fully understood:

- Alpha decay is when a nucleus releases an alpha particle, which is two protons and two neutrons, equivalent to a helium nucleus.

- Beta decay is the release of a beta particle, a high-energy electron.

- Gamma decay releases gamma rays, which unlike alpha and beta radiation are not matter but electromagnetic radiation of very high frequency, and therefore energy. This type of radiation is the most dangerous and most difficult to block.

All three types of radiation occur naturally in certain elements.

It has also become clear that the ultimate source of most terrestrial energy is nuclear, either through radiation from the Sun caused by stellar thermonuclear reactions or by radioactive decay of uranium within the Earth, the principal source of geothermal energy.

Nuclear fission:

Main article: Nuclear fission

In natural nuclear radiation, the byproducts are very small compared to the nuclei from which they originate. Nuclear fission is the process of splitting a nucleus into roughly equal parts, and releasing energy and neutrons in the process. If these neutrons are captured by another unstable nucleus, they can fission as well, leading to a chain reaction.

The average number of neutrons released per nucleus that go on to fission another nucleus is referred to as k. Values of k larger than 1 mean that the fission reaction is releasing more neutrons than it absorbs, and therefore is referred to as a self-sustaining chain reaction.

A mass of fissile material large enough (and in a suitable configuration) to induce a self-sustaining chain reaction is called a critical mass.

When a neutron is captured by a suitable nucleus, fission may occur immediately, or the nucleus may persist in an unstable state for a short time. If there are enough immediate decays to carry on the chain reaction, the mass is said to be prompt critical, and the energy release will grow rapidly and uncontrollably, usually leading to an explosion.

When discovered on the eve of World War II, this insight led multiple countries to begin programs investigating the possibility of constructing an atomic bomb — a weapon which utilized fission reactions to generate far more energy than could be created with chemical explosives.

The Manhattan Project, run by the United States with the help of the United Kingdom and Canada, developed multiple fission weapons which were used against Japan in 1945 at Hiroshima and Nagasaki. During the project, the first fission reactors were developed as well, though they were primarily for weapons manufacture and did not generate electricity.

In 1951, the first nuclear fission power plant was the first to produce electricity at the Experimental Breeder Reactor No. 1 (EBR-1), in Arco, Idaho, ushering in the "Atomic Age" of more intensive human energy use.

However, if the mass is critical only when the delayed neutrons are included, then the reaction can be controlled, for example by the introduction or removal of neutron absorbers.

This is what allows nuclear reactors to be built. Fast neutrons are not easily captured by nuclei; they must be slowed (slow neutrons), generally by collision with the nuclei of a neutron moderator, before they can be easily captured. Today, this type of fission is commonly used to generate electricity.

Nuclear fusion:

Main article: Nuclear fusion

See also: Timeline of nuclear fusion

If nuclei are forced to collide, they can undergo nuclear fusion. This process may release or absorb energy. When the resulting nucleus is lighter than that of iron, energy is normally released; when the nucleus is heavier than that of iron, energy is generally absorbed. This process of fusion occurs in stars, which derive their energy from hydrogen and helium.

They form, through stellar nucleosynthesis, the light elements (lithium to calcium) as well as some of the heavy elements (beyond iron and nickel, via the S-process). The remaining abundance of heavy elements, from nickel to uranium and beyond, is due to supernova nucleosynthesis, the R-process.

Of course, these natural processes of astrophysics are not examples of nuclear "technology". Because of the very strong repulsion of nuclei, fusion is difficult to achieve in a controlled fashion. Hydrogen bombs obtain their enormous destructive power from fusion, but their energy cannot be controlled.

Controlled fusion is achieved in particle accelerators; this is how many synthetic elements are produced. A fusor can also produce controlled fusion and is a useful neutron source. However, both of these devices operate at a net energy loss. Controlled, viable fusion power has proven elusive, despite the occasional hoax.

Technical and theoretical difficulties have hindered the development of working civilian fusion technology, though research continues to this day around the world.

Nuclear fusion was initially pursued only in theoretical stages during World War II, when scientists on the Manhattan Project (led by Edward Teller) investigated it as a method to build a bomb. The project abandoned fusion after concluding that it would require a fission reaction to detonate.

It took until 1952 for the first full hydrogen bomb to be detonated, so-called because it used reactions between deuterium and tritium. Fusion reactions are much more energetic per unit mass of fuel than fission reactions, but starting the fusion chain reaction is much more difficult.

Nuclear weapons:

Main article: Nuclear weapon

A nuclear weapon is an explosive device that derives its destructive force from nuclear reactions, either fission or a combination of fission and fusion. Both reactions release vast quantities of energy from relatively small amounts of matter. Even small nuclear devices can devastate a city by blast, fire and radiation.

Nuclear weapons are considered weapons of mass destruction, and their use and control has been a major aspect of international policy since their debut.

The design of a nuclear weapon is more complicated than it might seem. Such a weapon must hold one or more subcritical fissile masses stable for deployment, then induce criticality (create a critical mass) for detonation. It also is quite difficult to ensure that such a chain reaction consumes a significant fraction of the fuel before the device flies apart.

The procurement of a nuclear fuel is also more difficult than it might seem, since sufficiently unstable substances for this process do not currently occur naturally on Earth in suitable amounts.

One isotope of uranium, namely uranium-235, is naturally occurring and sufficiently unstable, but it is always found mixed with the more stable isotope uranium-238. The latter accounts for more than 99% of the weight of natural uranium. Therefore, some method of isotope separation that exploits the small mass difference between the two isotopes (three neutrons) must be performed to enrich (isolate) uranium-235.

Alternatively, the element plutonium possesses an isotope that is sufficiently unstable for this process to be usable. Terrestrial plutonium does not currently occur naturally in sufficient quantities for such use, so it must be manufactured in a nuclear reactor.

Ultimately, the Manhattan Project manufactured nuclear weapons based on each of these elements. They detonated the first nuclear weapon in a test code-named "Trinity", near Alamogordo, New Mexico, on July 16, 1945. The test was conducted to ensure that the implosion method of detonation would work, which it did.

A uranium bomb, Little Boy, was dropped on the Japanese city Hiroshima on August 6, 1945, followed three days later by the plutonium-based Fat Man on Nagasaki. In the wake of unprecedented devastation and casualties from a single weapon, the Japanese government soon surrendered, ending World War II.

Since these bombings, no nuclear weapons have been deployed offensively. Nevertheless, they prompted an arms race to develop increasingly destructive bombs to provide a nuclear deterrent.

Just over four years later, on August 29, 1949, the Soviet Union detonated its first fission weapon. The United Kingdom followed on October 2, 1952; France, on February 13, 1960; and China, on October 16, 1964.

Approximately half of the deaths from Hiroshima and Nagasaki occurred two to five years afterward, from radiation exposure. A radiological weapon is a type of nuclear weapon designed to distribute hazardous nuclear material in enemy areas. Such a weapon would not have the explosive capability of a fission or fusion bomb, but would kill many people and contaminate a large area.

A radiological weapon has never been deployed. While considered useless by a conventional military, such a weapon raises concerns over nuclear terrorism.

There have been over 2,000 nuclear tests conducted since 1945. In 1963, all nuclear and many non-nuclear states signed the Limited Test Ban Treaty, pledging to refrain from testing nuclear weapons in the atmosphere, underwater, or in outer space. The treaty permitted underground nuclear testing.

France continued atmospheric testing until 1974, while China continued up until 1980. The last underground test by the United States was in 1992, the Soviet Union in 1990, the United Kingdom in 1991, and both France and China continued testing until 1996.

After signing the Comprehensive Test Ban Treaty in 1996 (which had as of 2011 not entered into force), all of these states have pledged to discontinue all nuclear testing. Non-signatories India and Pakistan last tested nuclear weapons in 1998.

Nuclear weapons are the most destructive weapons known - the archetypal weapons of mass destruction. Throughout the Cold War, the opposing powers had huge nuclear arsenals, sufficient to kill hundreds of millions of people. Generations of people grew up under the shadow of nuclear devastation, portrayed in films such as Dr. Strangelove and The Atomic Cafe.

However, the tremendous energy release in the detonation of a nuclear weapon also suggested the possibility of a new energy source.

Civilian uses:

Nuclear power:

Further information: Nuclear power and Nuclear reactor technology

Nuclear power is a type of nuclear technology involving the controlled use of nuclear fission to release energy for work including propulsion, heat, and the generation of electricity.

Nuclear energy is produced by a controlled nuclear chain reaction, which creates heat that is used to boil water, produce steam, and drive a steam turbine. The turbine is used to generate electricity and/or to do mechanical work.

As of 2004, nuclear power provided approximately 15.7% of the world's electricity. It is also used to propel aircraft carriers, icebreakers and submarines; so far, economics and fears in some ports have prevented the use of nuclear power in transport ships.

All nuclear power plants use fission. No man-made fusion reaction has resulted in a viable source of electricity.

Medical applications:

Further information: Nuclear medicine

The medical applications of nuclear technology are divided into diagnostics and radiation treatment.

Imaging - The largest use of ionizing radiation in medicine is in medical radiography, which makes images of the inside of the human body using x-rays. This is the largest artificial source of radiation exposure for humans. Medical and dental x-ray imagers use cobalt-60 or other x-ray sources.

A number of radiopharmaceuticals are used, sometimes attached to organic molecules, to act as radioactive tracers or contrast agents in the human body. Positron-emitting nuclides are used for high-resolution, short-time-span imaging in applications known as positron emission tomography.

Radiation is also used to treat diseases in radiation therapy.

Industrial applications:

Since some ionizing radiation can penetrate matter, it is used in a variety of measuring methods. X-rays and gamma rays are used in industrial radiography to make images of the inside of solid products, as a means of nondestructive testing and inspection. The piece to be radiographed is placed between the source and a photographic film in a cassette. After a certain exposure time, the film is developed and shows any internal defects of the material.

Gauges - Gauges use the exponential absorption law of gamma rays

- Level indicators: Source and detector are placed at opposite sides of a container, indicating the presence or absence of material in the horizontal radiation path. Beta or gamma sources are used, depending on the thickness and the density of the material to be measured. The method is used for containers of liquids or of grainy substances

- Thickness gauges: If the material is of constant density, the signal measured by the radiation detector depends on the thickness of the material. This is useful in continuous production of materials such as paper and rubber.
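The exponential absorption law behind these gauges can be sketched as follows. This is a minimal illustration, assuming a narrow beam and a single linear attenuation coefficient; the function names and the numeric values (`I0`, `MU`, the 2 cm thickness) are hypothetical and not taken from any real gauge.

```python
import math

def transmitted_intensity(i0, mu, thickness):
    """Exponential absorption law: I = I0 * exp(-mu * x)."""
    return i0 * math.exp(-mu * thickness)

def thickness_from_reading(i0, i, mu):
    """Invert the law to recover thickness: x = ln(I0 / I) / mu."""
    return math.log(i0 / i) / mu

# Hypothetical values, chosen only for illustration.
I0 = 1000.0  # detector count rate with no material in the beam
MU = 0.5     # assumed linear attenuation coefficient, per cm

reading = transmitted_intensity(I0, MU, 2.0)         # signal behind 2 cm of material
estimated = thickness_from_reading(I0, reading, MU)  # recovers the 2 cm thickness
print(f"reading = {reading:.1f}, estimated thickness = {estimated:.2f} cm")
```

A thickness gauge works in the second direction: the detector reading is known, and the thickness is inferred from it, which is why constant density (a constant attenuation coefficient) matters.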

Electrostatic control - To avoid the build-up of static electricity in production of paper, plastics, synthetic textiles, etc., a ribbon-shaped source of the alpha emitter 241Am can be placed close to the material at the end of the production line. The source ionizes the air to remove electric charges on the material.

Radioactive tracers - Since radioactive isotopes behave, chemically, mostly like the inactive element, the behavior of a certain chemical substance can be followed by tracing the radioactivity.

- Examples:

- Adding a gamma tracer to a gas or liquid in a closed system makes it possible to find a hole in a tube.

- Adding a tracer to the surface of the component of a motor makes it possible to measure wear by measuring the activity of the lubricating oil.

Oil and Gas Exploration - Nuclear well logging is used to help predict the commercial viability of new or existing wells. The technology involves the use of a neutron or gamma-ray source and a radiation detector which are lowered into boreholes to determine the properties of the surrounding rock such as porosity and lithology.

Road Construction - Nuclear moisture/density gauges are used to determine the density of soils, asphalt, and concrete. Typically a cesium-137 source is used.

Commercial applications:

- radioluminescence

- tritium illumination: Tritium is used with phosphor in rifle sights to increase nighttime firing accuracy. Some runway markers and building exit signs use the same technology, to remain illuminated during blackouts.

- Betavoltaics.

- Smoke detector: An ionization smoke detector includes a tiny mass of radioactive americium-241, which is a source of alpha radiation. Two ionisation chambers are placed next to each other. Both contain a small source of 241Am that gives rise to a small constant current. One is closed and serves for comparison, the other is open to ambient air; it has a gridded electrode. When smoke enters the open chamber, the current is disrupted as the smoke particles attach to the charged ions and restore them to a neutral electrical state. This reduces the current in the open chamber. When the current drops below a certain threshold, the alarm is triggered.

Food processing and agriculture:

In biology and agriculture, radiation is used to induce mutations to produce new or improved species, such as in atomic gardening. Another use in insect control is the sterile insect technique, where male insects are sterilized by radiation and released, so they have no offspring, to reduce the population.

In industrial and food applications, radiation is used for sterilization of tools and equipment.

An advantage is that the object may be sealed in plastic before sterilization. An emerging use in food production is the sterilization of food using food irradiation.

Food irradiation is the process of exposing food to ionizing radiation in order to destroy microorganisms, bacteria, viruses, or insects that might be present in the food. The radiation sources used include radioisotope gamma ray sources, X-ray generators and electron accelerators.

Further applications include sprout inhibition, delay of ripening, increase of juice yield, and improvement of re-hydration. Irradiation is a more general term for the deliberate exposure of materials to radiation to achieve a technical goal (in this context 'ionizing radiation' is implied). As such it is also used on non-food items, such as medical hardware, plastics, tubes for gas-pipelines, hoses for floor-heating, shrink-foils for food packaging, automobile parts, wires and cables (insulation), tires, and even gemstones.

Compared to the amount of food irradiated, the volume of those every-day applications is huge but not noticed by the consumer.

The main effect of processing food by ionizing radiation is damage to DNA, the basic genetic information for life. Microorganisms can no longer proliferate and continue their malignant or pathogenic activities.

Spoilage-causing micro-organisms cannot continue their activities. Insects do not survive or become incapable of procreation. Plants cannot continue the natural ripening or aging process. All these effects are beneficial to the consumer and the food industry alike.

The amount of energy imparted for effective food irradiation is low compared to cooking the same food; even at a typical dose of 10 kGy most food, which is (with regard to warming) physically equivalent to water, would warm by only about 2.5 °C (4.5 °F).
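The warming figure can be checked with a short calculation. This is a rough sketch, assuming the food behaves like water with a specific heat of about 4186 J/(kg·K) (an assumed textbook value, not stated in the text) and that all absorbed energy ends up as heat. Since one gray is one joule absorbed per kilogram, the temperature rise is simply the dose divided by the specific heat.

```python
SPECIFIC_HEAT_WATER = 4186.0  # J/(kg*K), approximate value assumed for illustration

def temperature_rise(dose_gray, specific_heat=SPECIFIC_HEAT_WATER):
    """Temperature rise in kelvin (or deg C) for an absorbed dose.

    1 Gy = 1 J/kg, so delta_T = dose / specific_heat.
    """
    return dose_gray / specific_heat

rise_c = temperature_rise(10_000.0)  # 10 kGy
rise_f = rise_c * 9.0 / 5.0          # the same temperature difference in deg F
print(f"10 kGy warms water-like food by about {rise_c:.1f} C ({rise_f:.1f} F)")
```

Under these assumptions the result comes out at roughly 2.4 °C, in line with the "about 2.5 °C" quoted above.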

What distinguishes processing food by ionizing radiation is that the energy delivered per atomic transition is very high: it can cleave molecules and induce ionization (hence the name), which cannot be achieved by mere heating. This is the reason for new beneficial effects but also, at the same time, for new concerns.

The treatment of solid food by ionizing radiation can provide an effect similar to heat pasteurization of liquids, such as milk. However, the use of the term "cold pasteurization" to describe irradiated foods is controversial, because pasteurization and irradiation are fundamentally different processes, although the intended end results can in some cases be similar.

Detractors of food irradiation have concerns about the health hazards of induced radioactivity.

A report for the industry advocacy group American Council on Science and Health entitled "Irradiated Foods" states: "The types of radiation sources approved for the treatment of foods have specific energy levels well below that which would cause any element in food to become radioactive. Food undergoing irradiation does not become any more radioactive than luggage passing through an airport X-ray scanner or teeth that have been X-rayed."

Food irradiation is currently permitted by over 40 countries and volumes are estimated to exceed 500,000 metric tons (490,000 long tons; 550,000 short tons) annually worldwide.

Food irradiation is essentially a non-nuclear technology; it relies on ionizing radiation, which may be generated by electron accelerators and converted into bremsstrahlung, but which may also come from gamma rays emitted in nuclear decay.

There is a worldwide industry for processing by ionizing radiation, most of which, both by number of facilities and by processing power, uses accelerators. Food irradiation is only a niche application compared to medical supplies, plastic materials, raw materials, gemstones, cables and wires, etc.

Accidents:

Main articles: Nuclear and radiation accidents and Nuclear safety

Nuclear accidents, because of the powerful forces involved, are often very dangerous.

Historically, the first incidents involved fatal radiation exposure. Marie Curie died from aplastic anemia which resulted from her high levels of exposure.

Two scientists, the American Harry Daghlian and the Canadian Louis Slotin, died after mishandling the same plutonium mass. Unlike with conventional weapons, the intense light, heat, and explosive force are not the only deadly components of a nuclear weapon.

Approximately half of the deaths from Hiroshima and Nagasaki occurred two to five years afterward, from radiation exposure.

Civilian nuclear and radiological accidents primarily involve nuclear power plants. Most common are nuclear leaks that expose workers to hazardous material. A nuclear meltdown refers to the more serious hazard of releasing nuclear material into the surrounding environment.

The most significant meltdowns occurred at Three Mile Island in Pennsylvania and Chernobyl in the Soviet Ukraine. In addition, the earthquake and tsunami of March 11, 2011 caused serious damage to three nuclear reactors and a spent fuel storage pond at the Fukushima Daiichi nuclear power plant in Japan.

Military reactors that experienced similar accidents were Windscale in the United Kingdom and SL-1 in the United States.

Military accidents usually involve the loss or unexpected detonation of nuclear weapons.

The Castle Bravo test in 1954 produced a larger yield than expected, which contaminated nearby islands and a Japanese fishing boat (with one fatality) and raised concerns about contaminated fish in Japan. In the 1950s through 1970s, several nuclear bombs were lost from submarines and aircraft, some of which have never been recovered.

The last twenty years have seen a marked decline in such accidents.

Examples of environmental benefits:

Proponents of nuclear energy note that, each year, nuclear-generated electricity avoids 470 million metric tons of carbon dioxide emissions that would otherwise come from fossil fuels.

Additionally, the comparatively small amount of waste that nuclear energy does create is safely disposed of by large-scale nuclear energy production facilities, or it is repurposed or recycled for other energy uses. Proponents of nuclear energy also draw attention to the opportunity cost of using other forms of electricity generation.

For example, the Environmental Protection Agency estimates that coal kills 30,000 people a year, as a result of its environmental impact, while 60 people died in the Chernobyl disaster.

A real world example of impact provided by proponents of nuclear energy is the 650,000 ton increase in carbon emissions in the two months following the closure of the Vermont Yankee nuclear plant.

See also

- Nuclear power debate

- Outline of nuclear technology

- Radiology

- Nuclear Energy Institute – Beneficial Uses of Radiation

- Nuclear Technology

- National Isotope Development Center – U.S. Government source of isotopes for basic and applied nuclear science and nuclear technology – production, research, development, distribution, and information

What you need to know about the U.S. fusion energy breakthrough* including Nuclear Fusion and Nuclear Power

* -- Published in Washington Post 12/13/2022

- YouTube Video: Nuclear Fusion: Inside the breakthrough that could change our world | 60 Minutes

- YouTube Video: How Developments In Nuclear Fusion Change Everything | Neil deGrasse Tyson Explains...

- YouTube Video: How This Fusion Reactor Will Make Electricity by 2024

The Washington Post 12/13/2022: On Tuesday, the Energy Department announced a long-awaited milestone in the development of nuclear fusion energy: net energy gain. The news could galvanize the fusion community, which has long hyped the technology as a possible clean energy tool to combat climate change.

But how big of a deal is the “net energy gain” anyway — and what does it mean for the fusion power plants of the future? Here’s what you need to know.

What is fusion energy?

Existing nuclear power plants work through fission — splitting apart heavy atoms to create energy. In fission, a neutron collides with a heavy uranium atom, splitting it into lighter atoms and releasing a lot of heat and energy at the same time.

Fusion, on the other hand, works in the opposite way: it involves smushing two atoms (often two hydrogen atoms) together to create a new element (often helium), in the same way that stars create energy. In that process, the two hydrogen atoms lose a small amount of mass, which is converted to energy according to Einstein’s famous equation, E=mc². Because the speed of light is very, very fast (300,000,000 meters per second), even a tiny amount of mass lost can result in a ton of energy.
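To get a feel for the scale, here is a small sketch of E=mc² applied to a single gram of lost mass. The one-gram figure is a hypothetical chosen for illustration and is not a number from the experiment.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second (exact by definition)

def mass_to_energy(mass_kg):
    """Energy in joules released when mass_kg of mass is converted: E = m * c^2."""
    return mass_kg * SPEED_OF_LIGHT ** 2

energy_j = mass_to_energy(1e-3)  # one gram, an illustrative value
print(f"1 gram of mass corresponds to about {energy_j:.2e} joules")
```

That works out to roughly 9 × 10¹³ joules, because the speed of light is squared in the equation.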

What is ‘net energy gain,’ and how did the researchers achieve it?

Up to this point, researchers have been able to fuse two hydrogen atoms together successfully, but it has always taken more energy to do the reaction than they get back. Net energy gain — where they get more energy back than they put in to create the reaction — has been the elusive holy grail of fusion research.

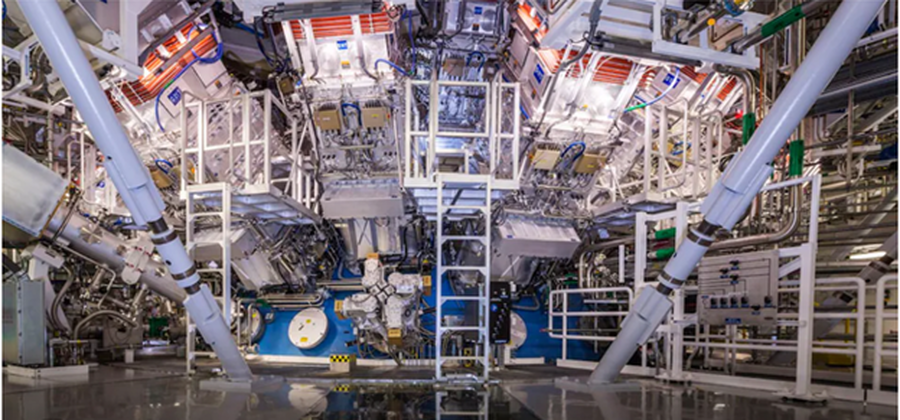

On Tuesday, researchers at the National Ignition Facility at the Lawrence Livermore National Laboratory in California announced that they attained net energy gain by shooting lasers at hydrogen atoms.

The lasers delivered 2.05 megajoules of energy and created 3.15 megajoules of fusion energy, a gain of about 1.5 times. The 192 laser beams compressed the hydrogen atoms down to about 100 times the density of lead and heated them to approximately 100 million degrees Celsius. The high density and temperature caused the atoms to merge into helium.

Other methods being researched involve using magnets to confine superhot plasma.

“It’s like the Kitty Hawk moment for the Wright brothers,” said Melanie Windridge, a plasma physicist and the CEO of Fusion Energy Insights. “It’s like the plane taking off.”

Does this mean fusion energy is ready for prime time?

No. Scientists refer to the current breakthrough as “scientific net energy gain” — meaning that more energy has come out of the reaction than was inputted by the laser. That’s a huge milestone that has never before been achieved.

But it’s only a net energy gain at the micro level. The lasers used at the Livermore lab are only about 1 percent efficient, according to Troy Carter, a plasma physicist at the University of California at Los Angeles. That means that it takes about 100 times more energy to run the lasers than they are ultimately able to deliver to the hydrogen atoms.

So researchers will still have to reach “engineering net energy gain,” or the point at which the entire process takes less energy than is outputted by the reaction. They will also have to figure out how to turn the outputted energy — currently in the form of kinetic energy from the helium nucleus and the neutron — into a form that is usable for electricity. They could do that by converting it to heat, then heating steam to turn a turbine and run a generator. That process also has efficiency limitations.
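The difference between the two kinds of gain can be worked through with the article's own numbers. This is a back-of-the-envelope sketch that treats "about 1 percent efficient" as exactly 1 percent; the variable names are illustrative only.

```python
LASER_ENERGY_MJ = 2.05    # energy the lasers delivered to the target
FUSION_ENERGY_MJ = 3.15   # fusion energy released
LASER_EFFICIENCY = 0.01   # "about 1 percent", treated as exact here (assumption)

# Scientific gain: fusion output compared with laser energy on target.
scientific_gain = FUSION_ENERGY_MJ / LASER_ENERGY_MJ

# Energy needed to run the lasers at the assumed efficiency.
wall_plug_input_mj = LASER_ENERGY_MJ / LASER_EFFICIENCY

# Gain measured against the energy drawn to run the lasers.
overall_gain = FUSION_ENERGY_MJ / wall_plug_input_mj

print(f"scientific gain: {scientific_gain:.2f}")
print(f"energy to run lasers: {wall_plug_input_mj:.0f} MJ")
print(f"gain against total input: {overall_gain:.3f}")
```

On these assumptions the shot returned about 1.5 times the laser energy on target but well under 2 percent of the roughly 205 megajoules needed to run the lasers.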

All that means that the energy gain will probably need to be pushed much, much higher for fusion to actually be commercially viable.

At the moment, researchers can also only do the fusion reaction about once a day. In between, they have to allow the lasers to cool and replace the fusion fuel target. A commercially viable plant would need to be able to do it several times per second, said Dennis Whyte, director of the Plasma Science and Fusion Center at MIT. “Once you’ve got scientific viability,” he said, “you’ve got to figure out engineering viability.”

What are the benefits of fusion?

Fusion’s possibilities are huge. The technology is much, much safer than nuclear fission, since fusion can’t create runaway reactions. It also doesn’t produce radioactive byproducts that need to be stored, or harmful carbon emissions; it simply produces inert helium and a neutron. And we’re not likely to run out of fuel: The fuel for fusion is just heavy hydrogen atoms, which can be found in seawater.

When could fusion actually power our homes?

That’s the trillion-dollar question. For decades, scientists have joked that fusion is always 30 or 40 years away. Over the years, researchers have variously predicted that fusion plants will be operational in the 1990s, the 2000s, the 2010s and the 2020s.

Current fusion experts argue that it’s not a matter of time, but a matter of will — if governments and private donors finance fusion aggressively, they say, a prototype fusion power plant could be available in the 2030s.

“The timeline is not really a question of time,” Carter said. “It’s a question of innovating and putting the effort in.”

___________________________________________________________________________

Fusion power (Wikipedia):

Fusion power is a proposed form of power generation that would generate electricity by using heat from nuclear fusion reactions. In a fusion process, two lighter atomic nuclei combine to form a heavier nucleus, while releasing energy. Devices designed to harness this energy are known as fusion reactors.

Research into fusion reactors began in the 1940s, but as of 2022, only one design, an inertial confinement laser-driven fusion machine at the US National Ignition Facility, has conclusively produced a positive fusion energy gain factor, i.e. more power output than input.

Fusion processes require fuel and a confined environment with sufficient temperature, pressure, and confinement time to create a plasma in which fusion can occur. The combination of these figures that results in a power-producing system is known as the Lawson criterion. In stars, the most common fuel is hydrogen, and gravity provides extremely long confinement times that reach the conditions needed for fusion energy production.

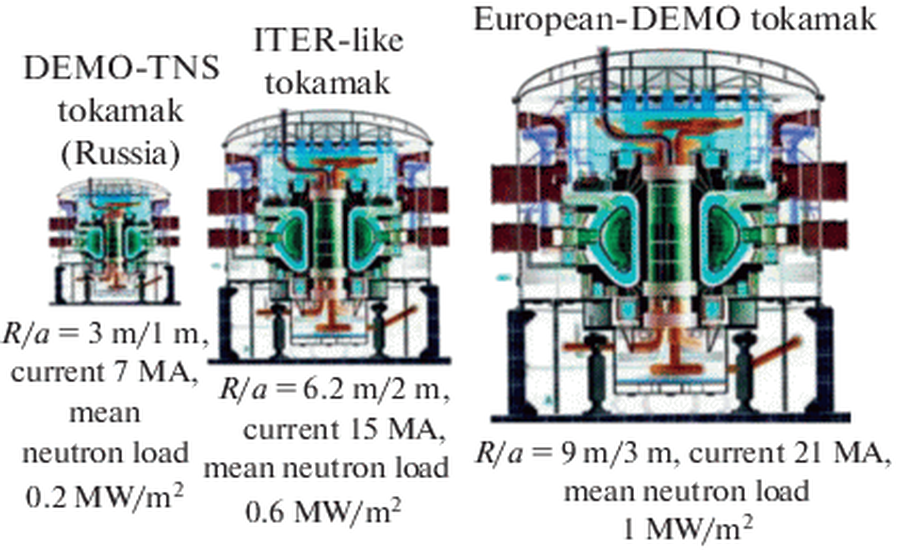

Proposed fusion reactors generally use heavy hydrogen isotopes such as deuterium and tritium (and especially a mixture of the two), which react more easily than protium (the most common hydrogen isotope), to allow them to reach the Lawson criterion requirements with less extreme conditions. Most designs aim to heat their fuel to around 100 million degrees, which presents a major challenge in producing a successful design.
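The Lawson criterion is often summarized as a "triple product" of plasma density, temperature, and energy confinement time. The sketch below assumes that form and a commonly quoted approximate threshold for deuterium-tritium fuel of about 3 × 10²¹ keV·s/m³; neither the threshold nor the sample plasma parameters come from the text above, and both should be read as illustrative assumptions.

```python
# Commonly quoted approximate D-T threshold (assumption, not from the text).
DT_TRIPLE_PRODUCT_THRESHOLD = 3.0e21  # keV * s / m^3

def triple_product(density_m3, temperature_kev, confinement_s):
    """Fusion triple product n * T * tau in keV * s / m^3."""
    return density_m3 * temperature_kev * confinement_s

def meets_threshold(density_m3, temperature_kev, confinement_s,
                    threshold=DT_TRIPLE_PRODUCT_THRESHOLD):
    """True if the plasma parameters reach the assumed threshold."""
    return triple_product(density_m3, temperature_kev, confinement_s) >= threshold

# Hypothetical plasma: 1e20 particles per m^3 at 15 keV.
print(meets_threshold(1.0e20, 15.0, 3.0))  # longer confinement time
print(meets_threshold(1.0e20, 15.0, 1.0))  # shorter confinement time
```

The point of the product form is that a shortfall in one quantity can, within limits, be made up by another, which is why designs as different as magnetic and inertial confinement can aim at the same criterion.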

As a source of power, nuclear fusion is expected to have many advantages over fission. These include reduced radioactivity in operation and little high-level nuclear waste, ample fuel supplies, and increased safety.

However, the necessary combination of temperature, pressure, and duration has proven to be difficult to produce in a practical and economical manner. A second issue that affects common reactions is managing neutrons that are released during the reaction, which over time degrade many common materials used within the reaction chamber.

Fusion researchers have investigated various confinement concepts. The early emphasis was on three main systems: z-pinch, stellarator, and magnetic mirror. The current leading designs are the tokamak and inertial confinement (ICF) by laser. Both designs are under research at very large scales, most notably the ITER tokamak in France, and the National Ignition Facility (NIF) laser in the United States.

Researchers are also studying other designs that may offer cheaper approaches. Among these alternatives, there is increasing interest in magnetized target fusion and inertial electrostatic confinement, and new variations of the stellarator.

Nuclear fusion:

Nuclear fusion is a reaction in which two or more atomic nuclei are combined to form one or more different atomic nuclei and subatomic particles (neutrons or protons).

The difference in mass between the reactants and products is manifested as either the release or absorption of energy. This difference in mass arises due to the difference in nuclear binding energy between the atomic nuclei before and after the reaction. Nuclear fusion is the process that powers active or main-sequence stars and other high-magnitude stars, where large amounts of energy are released.
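As an illustration of how a mass difference turns into released energy, the sketch below works through the deuterium-tritium reaction (D + T → helium-4 + neutron). The reaction is chosen here as an example, and the atomic masses and the conversion factor of about 931.494 MeV per atomic mass unit are standard reference values rather than figures from the text.

```python
MEV_PER_U = 931.494  # energy equivalent of one atomic mass unit, in MeV

# Atomic masses in unified atomic mass units (standard reference values).
MASS_U = {
    "deuterium": 2.014101778,
    "tritium": 3.016049281,
    "helium4": 4.002603254,
    "neutron": 1.008664916,
}

def q_value_mev(reactants, products):
    """Energy released (MeV) = (mass of reactants - mass of products) * c^2."""
    mass_in = sum(MASS_U[name] for name in reactants)
    mass_out = sum(MASS_U[name] for name in products)
    return (mass_in - mass_out) * MEV_PER_U

q_mev = q_value_mev(["deuterium", "tritium"], ["helium4", "neutron"])
print(f"D + T -> He-4 + n releases about {q_mev:.1f} MeV")
```

A positive result means the products are lighter than the reactants, so the reaction releases energy; a negative result would indicate a reaction that absorbs energy.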

A nuclear fusion process that produces atomic nuclei lighter than iron-56 or nickel-62 will generally release energy. These elements have a relatively small mass and a relatively large binding energy per nucleon.

Fusion of nuclei lighter than these releases energy (an exothermic process), while the fusion of heavier nuclei results in energy retained by the product nucleons, and the resulting reaction is endothermic. The opposite is true for the reverse process, called nuclear fission. Nuclear fusion uses lighter elements, such as hydrogen and helium, which are in general more fusible; while the heavier elements, such as uranium, thorium and plutonium, are more fissionable.

The extreme astrophysical event of a supernova can produce enough energy to fuse nuclei into elements heavier than iron.

10 steps you can take to lower your carbon footprint:

But how big of a deal is the “net energy gain” anyway — and what does it mean for the fusion power plants of the future? Here’s what you need to know.

What is fusion energy?

Existing nuclear power plants work through fission — splitting apart heavy atoms to create energy. In fission, a neutron collides with a heavy uranium atom, splitting it into lighter atoms and releasing a lot of heat and energy at the same time.

Fusion, on the other hand, works in the opposite way — it involves smushing two atoms (often two hydrogen atoms) together to create a new element (often helium), in the same way that stars creates energy. In that process, the two hydrogen atoms lose a small amount of mass, which is converted to energy according to Einstein’s famous equation, E=mc². Because the speed of light is very, very fast — 300,000,000 meters per second — even a tiny amount of mass lost can result in a ton of energy.

What is ‘net energy gain,’ and how did the researchers achieve it?

Up to this point, researchers have been able to fuse two hydrogen atoms together successfully, but it has always taken more energy to do the reaction than they get back. Net energy gain — where they get more energy back than they put in to create the reaction — has been the elusive holy grail of fusion research.

On Tuesday, researchers at the National Ignition Facility at the Lawrence Livermore National Laboratory in California announced that they attained net energy gain by shooting lasers at hydrogen atoms.

The lasers delivered 2.05 megajoules of energy and created 3.15 megajoules of fusion energy, a gain of about 1.5 times. The 192 laser beams compressed the hydrogen atoms down to about 100 times the density of lead and heated them to approximately 100 million degrees Celsius. The high density and temperature caused the atoms to merge into helium.

Other methods being researched involve using magnets to confine superhot plasma.

“It’s like the Kitty Hawk moment for the Wright brothers,” said Melanie Windridge, a plasma physicist and the CEO of Fusion Energy Insights. “It’s like the plane taking off.”

Does this mean fusion energy is ready for prime time?

No. Scientists refer to the current breakthrough as “scientific net energy gain” — meaning that more energy has come out of the reaction than was inputted by the laser. That’s a huge milestone that has never before been achieved.

But it’s only a net energy gain at the micro level. The lasers used at the Livermore lab are only about 1 percent efficient, according to Troy Carter, a plasma physicist at the University of California at Los Angeles. That means that it takes about 100 times more energy to run the lasers than they are ultimately able to deliver to the hydrogen atoms.

So researchers will still have to reach “engineering net energy gain,” or the point at which the entire process takes less energy than is outputted by the reaction. They will also have to figure out how to turn the outputted energy — currently in the form of kinetic energy from the helium nucleus and the neutron — into a form that is usable for electricity. They could do that by converting it to heat, then heating steam to turn a turbine and run a generator. That process also has efficiency limitations.

All that means that the energy gain will probably need to be pushed much, much higher for fusion to actually be commercially viable.

At the moment, researchers can also only do the fusion reaction about once a day. In between, they have to allow the lasers to cool and replace the fusion fuel target. A commercially viable plant would need to be able to do it several times per second, said Dennis Whyte, director of the Plasma Science and Fusion Center at MIT. “Once you’ve got scientific viability,” he said, “you’ve got to figure out engineering viability.”

What are the benefits of fusion?

Fusion’s possibilities are huge. The technology is much, much safer than nuclear fission, since fusion can’t create runaway reactions. It also doesn’t produce radioactive byproducts that need to be stored, or harmful carbon emissions; it simply produces inert helium and a neutron. And we’re not likely to run out of fuel: The fuel for fusion is just heavy hydrogen atoms, which can be found in seawater.

When could fusion actually power our homes?

That’s the trillion-dollar question. For decades, scientists have joked that fusion is always 30 or 40 years away. Over the years, researchers have variously predicted that fusion plants will be operational in the 1990s, the 2000s, the 2010s and the 2020s.

Current fusion experts argue that it’s not a matter of time, but a matter of will — if governments and private donors finance fusion aggressively, they say, a prototype fusion power plant could be available in the 2030s.

“The timeline is not really a question of time,” Carter said. “It’s a question of innovating and putting the effort in.”

___________________________________________________________________________

Fusion power (Wikipedia):

Fusion power is a proposed form of power generation that would generate electricity by using heat from nuclear fusion reactions. In a fusion process, two lighter atomic nuclei combine to form a heavier nucleus, while releasing energy. Devices designed to harness this energy are known as fusion reactors.

Research into fusion reactors began in the 1940s, but as of 2022, only one design, an inertial confinement laser-driven fusion machine at the US National Ignition Facility, has conclusively produced a positive fusion energy gain factor, i.e. more power output than input.

Fusion processes require fuel and a confined environment with sufficient temperature, pressure, and confinement time to create a plasma in which fusion can occur. The combination of these figures that results in a power-producing system is known as the Lawson criterion. In stars, the most common fuel is hydrogen, and gravity provides extremely long confinement times that reach the conditions needed for fusion energy production.

Proposed fusion reactors generally use heavy hydrogen isotopes such as deuterium and tritium (and especially a mixture of the two), which react more easily than protium (the most common hydrogen isotope), to allow them to reach the Lawson criterion requirements with less extreme conditions. Most designs aim to heat their fuel to around 100 million degrees, which presents a major challenge in producing a successful design.
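Plasma temperatures are usually quoted in energy units rather than degrees. The short sketch below converts a temperature to kiloelectronvolts using the Boltzmann constant. It assumes the "100 million degrees" above is in kelvin (at this scale the difference from Celsius is negligible), and the function name is hypothetical.

```python
# Boltzmann constant in electronvolts per kelvin.
BOLTZMANN_EV_PER_K = 8.617333262e-5


def kelvin_to_kev(temperature_k: float) -> float:
    """Convert a temperature in kelvin to the equivalent energy k_B * T in keV."""
    return temperature_k * BOLTZMANN_EV_PER_K / 1000.0


if __name__ == "__main__":
    # 100 million kelvin corresponds to roughly 8.6 keV.
    print(f"{kelvin_to_kev(1.0e8):.2f} keV")
```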

As a source of power, nuclear fusion is expected to have many advantages over fission. These include reduced radioactivity in operation and little high-level nuclear waste, ample fuel supplies, and increased safety.

However, the necessary combination of temperature, pressure, and duration has proven to be difficult to produce in a practical and economical manner. A second issue that affects common reactions is managing neutrons that are released during the reaction, which over time degrade many common materials used within the reaction chamber.

Fusion researchers have investigated various confinement concepts. The early emphasis was on three main systems: z-pinch, stellarator, and magnetic mirror. The current leading designs are the tokamak and inertial confinement (ICF) by laser. Both designs are under research at very large scales, most notably the ITER tokamak in France, and the National Ignition Facility (NIF) laser in the United States.

Researchers are also studying other designs that may offer cheaper approaches. Among these alternatives, there is increasing interest in magnetized target fusion and inertial electrostatic confinement, and new variations of the stellarator.

Click on any of the blue hyperlinks for more about Fusion Power:

- Background

- Methods

- Common tools

- Fuels

- Material selection

- Safety and the environment

- Economics

- Regulation

- Geopolitics

- Advantages

- History

- Records

- See also:

- COLEX process, for production of Li-6

- Fusion ignition

- High beta fusion reactor

- Inertial electrostatic confinement

- Levitated dipole

- List of fusion experiments

- Magnetic mirror

- Fusion Device Information System

- Fusion Energy Base

- Fusion Industry Association

- Princeton Satellite Systems News

- U.S. Fusion Energy Science Program

- Ball, Philip. "The chase for fusion energy". Nature. Retrieved 2021-11-22.

Nuclear fusion:

Nuclear fusion is a reaction in which two or more atomic nuclei are combined to form one or more different atomic nuclei and subatomic particles (neutrons or protons).

The difference in mass between the reactants and products is manifested as either the release or absorption of energy. This difference in mass arises due to the difference in nuclear binding energy between the atomic nuclei before and after the reaction. Nuclear fusion is the process that powers active or main-sequence stars and other high-magnitude stars, where large amounts of energy are released.

A nuclear fusion process that produces atomic nuclei lighter than iron-56 or nickel-62 will generally release energy. These elements have a relatively small mass and a relatively large binding energy per nucleon.

Fusion of nuclei lighter than these releases energy (an exothermic process), while the fusion of heavier nuclei results in energy retained by the product nucleons, and the resulting reaction is endothermic. The opposite is true for the reverse process, called nuclear fission. Nuclear fusion uses lighter elements, such as hydrogen and helium, which are in general more fusible; while the heavier elements, such as uranium, thorium and plutonium, are more fissionable.
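The mass difference described above can be turned into an energy figure with a few lines of arithmetic. The sketch below does so for the deuterium-tritium reaction (D + T → He-4 + n), using standard atomic masses in unified atomic mass units and the conversion of roughly 931.494 MeV per mass unit. The dictionary and function names are illustrative assumptions, not part of any library.

```python
# Energy equivalent of one unified atomic mass unit, in MeV.
U_TO_MEV = 931.494

# Atomic masses in unified atomic mass units (u), rounded to nine decimals.
ATOMIC_MASS_U = {
    "D": 2.014101778,    # deuterium
    "T": 3.016049281,    # tritium
    "He4": 4.002603254,  # helium-4
    "n": 1.008664916,    # free neutron
}


def q_value_mev(reactants, products):
    """Energy released (positive) or absorbed (negative) by a reaction, in MeV."""
    mass_in = sum(ATOMIC_MASS_U[s] for s in reactants)
    mass_out = sum(ATOMIC_MASS_U[s] for s in products)
    return (mass_in - mass_out) * U_TO_MEV


if __name__ == "__main__":
    q = q_value_mev(["D", "T"], ["He4", "n"])
    print(f"D + T -> He-4 + n releases about {q:.1f} MeV")
```

A positive result means the products are lighter than the reactants and the reaction is exothermic. Swapping the two lists gives the same magnitude with a negative sign, the endothermic reverse direction.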

The extreme astrophysical event of a supernova can produce enough energy to fuse nuclei into elements heavier than iron.

Click on any of the following blue hyperlinks for more about Nuclear Fusion:

- History

- Process

- Nuclear fusion in stars

- Requirements

- Artificial fusion

- Important reactions

- Mathematical description of cross section

- See also:

- China Fusion Engineering Test Reactor

- Cold fusion

- Focus fusion

- Fusenet

- Fusion rocket

- Impulse generator

- Joint European Torus

- List of fusion experiments

- List of Fusor examples

- List of plasma (physics) articles

- Neutron source

- Nuclear energy

- Nuclear fusion–fission hybrid

- Nuclear physics

- Nuclear reactor

- Nucleosynthesis

- Periodic table

- Pulsed power

- Pure fusion weapon

- Teller–Ulam design

- Thermonuclear fusion

- Timeline of nuclear fusion

- Triple-alpha process

- NuclearFiles.org – A repository of documents related to nuclear power.

- Annotated bibliography for nuclear fusion from the Alsos Digital Library for Nuclear Issues

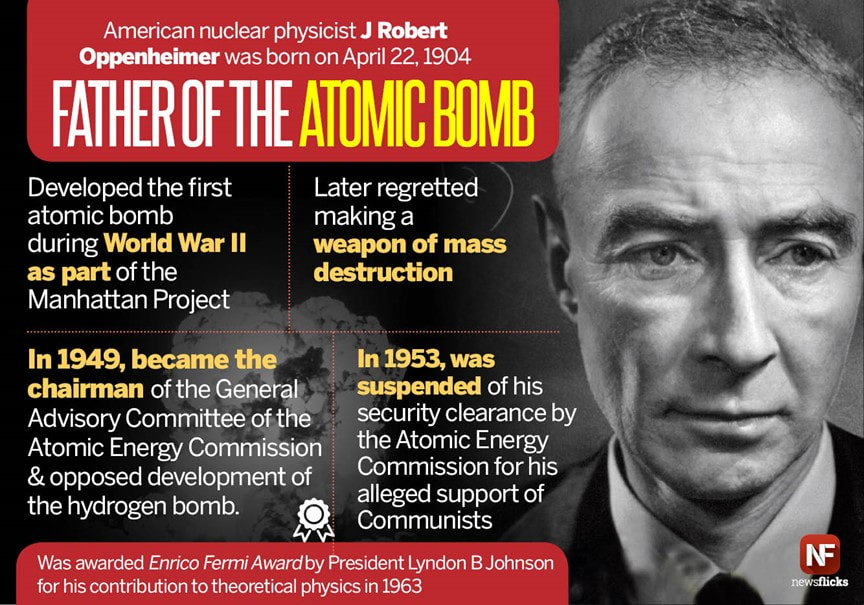

J. Robert Oppenheimer and the Manhattan Project

Courtesy of the New York Times: OPINION--GUEST ESSAY

The Tragedy of J. Robert Oppenheimer

July 17, 2023

By Kai Bird

Mr. Bird is the director of the Leon Levy Center for Biography and co-author with the late Martin J. Sherwin of “American Prometheus: The Triumph and Tragedy of J. Robert Oppenheimer.”

One day in the spring of 1954, J. Robert Oppenheimer ran into Albert Einstein outside their offices at the Institute for Advanced Study in Princeton, N.J. Oppenheimer had been the director of the institute since 1947 and Einstein a faculty member since he fled Germany in 1933. The two men might argue about quantum physics — Einstein grumbled that he just didn’t think that God played dice with the universe — but they were good friends.

Oppenheimer took the occasion to explain to Einstein that he was going to be absent from the institute for some weeks. He was being forced to defend himself in Washington, D.C., during a secret hearing against charges that he was a security risk, and perhaps even disloyal.

Einstein argued that Oppenheimer “had no obligation to subject himself to the witch hunt, that he had served his country well, and that if this was the reward she [America] offered he should turn his back on her.” Oppenheimer demurred, saying he could not turn his back on America. “He loved America,” said Verna Hobson, his secretary who was a witness to the conversation, “and this love was as deep as his love of science.”

“Einstein doesn’t understand,” Oppenheimer told Ms. Hobson. But as Einstein walked back into his office he told his own assistant, nodding in the direction of Oppenheimer, “There goes a narr,” or fool.

Einstein was right. Oppenheimer was foolishly subjecting himself to a kangaroo court in which he was soon stripped of his security clearance and publicly humiliated. The charges were flimsy, but by a vote of 2 to 1 the security panel of the Atomic Energy Commission deemed Oppenheimer a loyal citizen who was nevertheless a security risk: “We find that Dr. Oppenheimer’s continuing conduct and association have reflected a serious disregard for the requirements of the security system.”

The scientist would no longer be trusted with the nation’s secrets. Celebrated in 1945 as the “father of the atomic bomb,” nine years later he would become the chief celebrity victim of the McCarthyite maelstrom.

Oppenheimer may have been naïve, but he was right to fight the charges — and right to use his influence as one of the country’s pre-eminent scientists to speak out against a nuclear arms race. In the months and years leading up to the security hearing, Oppenheimer had criticized the decision to build a “super” hydrogen bomb. Astonishingly, he had gone so far as to say that the Hiroshima bomb was used “against an essentially defeated enemy.”

The atomic bomb, he warned, “is a weapon for aggressors, and the elements of surprise and terror are as intrinsic to it as are the fissionable nuclei.” These forthright dissents against the prevailing view of Washington’s national security establishment earned him powerful political enemies. That was precisely why he was being charged with disloyalty.

It is my hope that Christopher Nolan’s stunning new film on Oppenheimer’s complicated legacy will initiate a national conversation not only about our existential relationship to weapons of mass destruction, but also the need in our society for scientists as public intellectuals. Mr. Nolan’s three-hour film is a riveting thriller and mystery story that delves deeply into what this country did to its most famous scientist.

Sadly, Oppenheimer’s life story is relevant to our current political predicaments.

Oppenheimer was destroyed by a political movement characterized by rank know-nothing, anti-intellectual, xenophobic demagogues. The witch-hunters of that season are the direct ancestors of our current political actors of a certain paranoid style.

I’m thinking of Roy Cohn, Senator Joseph McCarthy’s chief counsel, who tried to subpoena Oppenheimer in 1954, only to be warned that this could interfere with the impending security hearing against Oppenheimer.

Yes, that Roy Cohn, who taught former President Donald Trump his brash, wholly deranged style of politics. Just recall the former president’s fact-challenged comments on the pandemic or climate change. This is a worldview proudly scornful of science.

After America’s most celebrated scientist was falsely accused and publicly humiliated, the Oppenheimer case sent a warning to all scientists not to stand up in the political arena as public intellectuals. This was the real tragedy of Oppenheimer. What happened to him also damaged our ability as a society to debate honestly about scientific theory — the very foundation of our modern world.

Quantum physics has utterly transformed our understanding of the universe. And this science has also given us a revolution in computing power and incredible biomedical innovations to prolong human life. Yet, too many of our citizens still distrust scientists and fail to understand the scientific quest, the trial and error inherent in testing any theory against facts by experimenting.

Just look at what happened to our public health civil servants during the recent pandemic.

We stand on the cusp of another technological revolution in which artificial intelligence will transform how we live and work, and yet we are not yet having the kind of informed civil discourse with its innovators that could help us to make wise policy decisions on its regulation.

Our politicians need to listen more to technology innovators like Sam Altman and quantum physicists like Kip Thorne and Michio Kaku.

Oppenheimer was trying desperately to have that kind of conversation about nuclear weapons. He was trying to warn our generals that these are not battlefield weapons, but weapons of pure terror. But our politicians chose to silence him; the result was that we spent the Cold War engaged in a costly and dangerous arms race.

Today, Vladimir Putin’s not-so-veiled threats to deploy tactical nuclear weapons in the war in Ukraine are a stark reminder that we can never be complacent about living with nuclear weapons. Oppenheimer did not regret what he did at Los Alamos; he understood that you cannot stop curious human beings from discovering the physical world around them.

One cannot halt the scientific quest, nor can one un-invent the atomic bomb.

But Oppenheimer always believed that human beings could learn to regulate these technologies and integrate them into a sustainable and humane civilization. We can only hope he was right.

[End of Opinion Piece]

___________________________________________________________________________

Robert Oppenheimer -- Wikipedia:

Julius Robert Oppenheimer (April 22, 1904 – February 18, 1967) was an American theoretical physicist and director of the Los Alamos Laboratory during World War II. He is often credited as the "father of the atomic bomb" for his role in organizing the Manhattan Project, the research and development undertaking that created the first nuclear weapons (see further below).

Born to German Jewish immigrants in New York City, Oppenheimer earned a bachelor's degree in chemistry from Harvard University in 1925 and a PhD in physics from the University of Göttingen in Germany in 1927.

After research at other institutions, he joined the physics department at the University of California, Berkeley, where he became a full professor in 1936. He made significant contributions to theoretical physics.

With his students, he also made contributions to the theory of neutron stars and black holes, quantum field theory, and the interactions of cosmic rays.

In 1942, Oppenheimer was recruited to work on the Manhattan Project, and in 1943 was appointed director of the project's Los Alamos Laboratory in New Mexico, tasked with developing the first nuclear weapons; his leadership and scientific expertise were instrumental in the success of the project.

On July 16, 1945, he was present at the Trinity test of the first atomic bomb. In August 1945, the weapons were used in the bombings of Hiroshima and Nagasaki in Japan, which remain the only use of nuclear weapons in an armed conflict.

In 1947, Oppenheimer became the director of the Institute for Advanced Study in Princeton, New Jersey, and chaired the influential General Advisory Committee of the newly created United States Atomic Energy Commission. He lobbied for international control of nuclear power, to avert nuclear proliferation and a nuclear arms race with the Soviet Union.

He opposed the development of the hydrogen bomb during a 1949–1950 governmental debate on the question. During the Second Red Scare, Oppenheimer's stances, together with past associations he had with people and organizations affiliated with the Communist Party USA, led to the revocation of his security clearance following a 1954 security hearing.

In 1963, President John F. Kennedy awarded him (and Lyndon B. Johnson presented him with) the Enrico Fermi Award as a gesture of political rehabilitation. In 2022, the U.S. government vacated its 1954 decision, saying that the process had been flawed.

[Your WebHost: the article on the Manhattan Project below is lengthy and highly technical. To be taken instead to the Topic Outline for selective reading, Click Here.]

The Manhattan Project:

The Manhattan Project was a research and development undertaking during World War II that produced the first nuclear weapons. It was led by the United States with the support of the United Kingdom and Canada.